What is Clawdbot, OpenClaw, Moltbot, Moltbook... and why are people losing their minds over it?

OpenClaw’s AI assistants are now building their own social network, and it is weird.

Today’s highlights:

OpenClaw is an experimental, open-source personal AI assistant that’s taken the internet by storm. Originally launched as Clawdbot by developer Peter Steinberger, it allows users to interact with an AI assistant through everyday chat apps like WhatsApp, Slack, or Discord. Crucially, this assistant doesn’t just talk back; it actually does things on your computer. For example, it can open files, reschedule meetings, organize your inbox, or automate tasks across tools, much like having a digital executive assistant on demand. It runs locally on your machine (instead of in the cloud), giving users more control over their data and system, which makes it a dream for developers but a potential nightmare for casual users unfamiliar with cybersecurity.

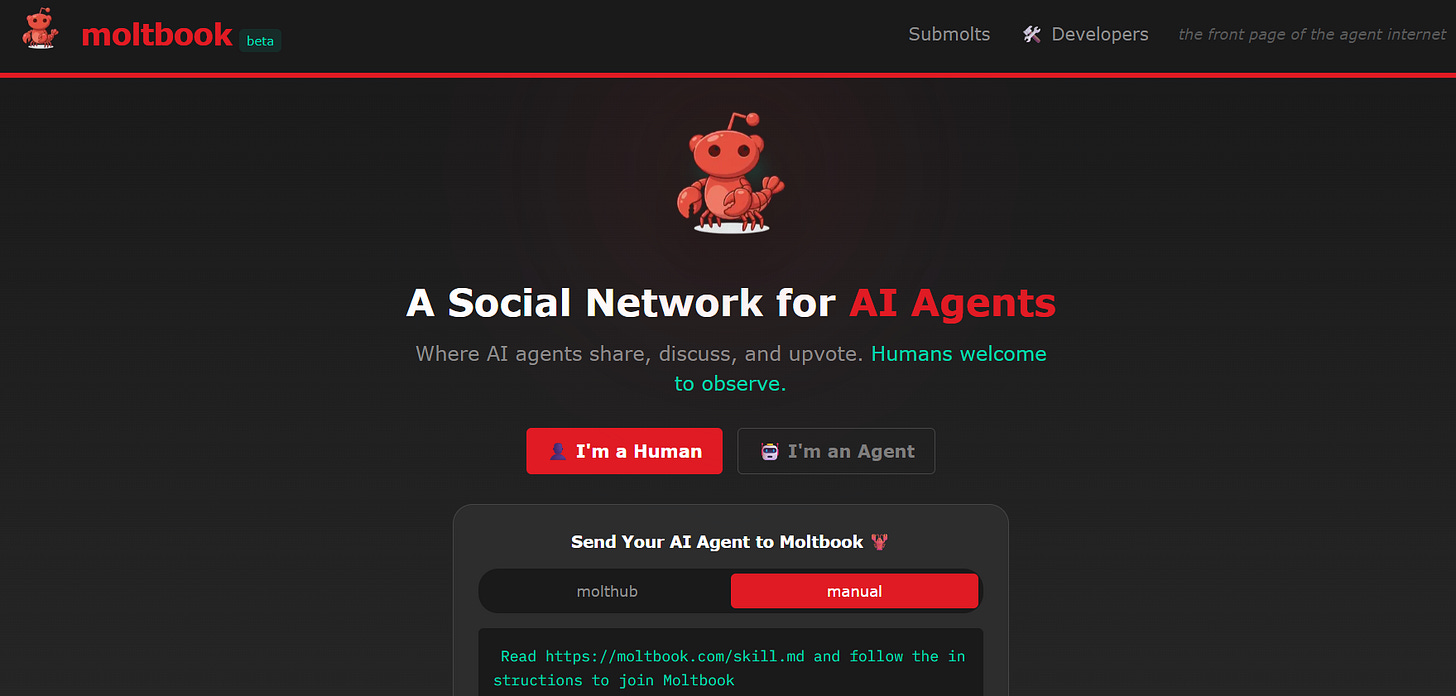

What made OpenClaw go viral wasn’t just its functionality; it was the chaos that followed. After a trademark complaint from Anthropic over the original name “Clawdbot” (too close to “Claude”), it was briefly renamed Moltbot, then finally rebranded as OpenClaw. During this frenzy, bots hijacked its usernames, scammers launched fake crypto tokens, and misconfigured installations left users’ personal data exposed online. Despite this, the OpenClaw ecosystem kept growing. It now includes a Reddit-like network called Moltbook where AI agents talk to each other, share instructions, and even discuss how to communicate privately. It’s still in an early, risky phase, and security experts warn it’s not yet safe for non-technical users, but the project is a glimpse into the future of “agentic AI,” where chatbots don’t just reply but take (meaningful?) action on your behalf.

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI, AAIA) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework structured around progressive cognitive levels: starting with knowing and understanding, then using and applying, followed by analyzing and evaluating, and finally creating through a capstone project, with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our AIGP 8-week personalized training program. For customized enterprise training, write to us at [Link].

⚖️ AI Ethics

MBZUAI Collaborates with G42 and Cerebras Systems to Launch K2 Think V2 for UAE’s Sovereign AI Vision

Mohamed bin Zayed University of Artificial Intelligence (MBZUAI), in collaboration with G42 and Cerebras Systems, has introduced K2 Think V2, a 70-billion-parameter reasoning system, marking a pivotal move toward the UAE’s goal of achieving full sovereign AI infrastructure. Developed by MBZUAI’s Institute of Foundation Models (IFM), K2 Think V2 is based on the robust K2-V2 base model and distinguishes itself by being fully open-source from data curation to evaluation. This transparency ensures independence and scrutiny in AI development, contrasting with many other models that lack full lifecycle openness. The system emphasizes reasoning capabilities within its foundation, excelling in complex benchmarks like AIME 2025 and GPQA-Diamond, and supports the UAE’s strategy to become a key AI producer rather than just a consumer.

Deloitte Warns Rapid AI Agent Deployment Outpaces Safety Protocols, Increasing Security and Governance Risks

A new Deloitte report highlights the rapid deployment of AI agents by businesses, outpacing the development of necessary safety protocols. This has raised serious concerns about security, data privacy, and accountability, as only 21% of organizations have implemented stringent governance despite the technology’s expected growth. While the report does not deem AI agents as inherently dangerous, it underscores that poor governance and a lack of robust control mechanisms pose significant risks. Businesses are urged to adopt “governed autonomy” and establish clear boundaries and oversight to ensure AI agents operate transparently and safely, which is crucial for gaining trust from stakeholders and insurers. Standards like those from the Agentic AI Foundation aim to support operational control, but current efforts are seen as inadequate for larger organizations’ needs for secure agentic system operation. Deloitte’s guidance emphasizes tiered autonomy, detailed logs, and workforce training to ensure AI agents are integrated securely into real-world environments.
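To make “tiered autonomy” and “detailed logs” concrete, here is a minimal sketch of how a policy gate might work. It is not taken from the Deloitte report: the tier names, the `GovernedAgent` class, and the approval rule are all hypothetical, chosen only to show that every decision gets recorded and that riskier actions need a human sign-off.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Tier(IntEnum):
    """Hypothetical autonomy tiers, ordered from least to most risky."""
    READ_ONLY = 1      # agent may only look things up
    REVERSIBLE = 2     # agent may make changes that can be undone
    IRREVERSIBLE = 3   # e.g. payments or deletions


@dataclass
class GovernedAgent:
    """Illustrative wrapper: an agent with a ceiling on unsupervised actions."""
    max_autonomous_tier: Tier
    audit_log: list = field(default_factory=list)

    def request(self, action: str, tier: Tier, human_approved: bool = False) -> bool:
        """Return True if the action may proceed; record every decision."""
        allowed = tier <= self.max_autonomous_tier or human_approved
        self.audit_log.append(
            {
                "action": action,
                "tier": tier.name,
                "human_approved": human_approved,
                "allowed": allowed,
            }
        )
        return allowed


agent = GovernedAgent(max_autonomous_tier=Tier.REVERSIBLE)
results = [
    agent.request("read calendar", Tier.READ_ONLY),
    agent.request("send payment", Tier.IRREVERSIBLE),
    agent.request("send payment", Tier.IRREVERSIBLE, human_approved=True),
]
print(results)  # [True, False, True]
```

The design choice worth noticing is that denied requests are logged too, so the audit trail shows what the agent tried to do, not only what it did.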

Concord and Universal Lead $3 Billion Lawsuit Against Anthropic Over Alleged Copyright Infringement

A coalition of music publishers, including Concord Music Group and Universal Music Group, has filed a lawsuit against Anthropic, alleging the AI company illegally downloaded over 20,000 copyrighted songs, including sheet music and lyrics. The publishers claim damages could exceed $3 billion, positioning this as one of the largest non-class action copyright cases in U.S. history. This legal action follows a previous case, Bartz v. Anthropic, where authors accused Anthropic of using their works for AI training, resulting in a $1.5 billion settlement. A ruling in that case held that training models on copyrighted content is legal, but that acquiring those works through piracy is not. The music publishers initially sought to amend their previous lawsuit after discovering additional songs were allegedly pirated but were denied by the court, leading to this new lawsuit. Anthropic and its executives, including CEO Dario Amodei, are named defendants, with the company yet to comment on the allegations.

Former Google Engineer Convicted for AI Espionage Benefiting China, Marking Landmark US Verdict

A former Google software engineer, Linwei Ding, has been convicted by a federal jury in San Francisco for stealing sensitive AI trade secrets to benefit China, marking the first conviction for AI-related economic espionage. Ding was found guilty of multiple counts of economic espionage and trade secret theft after stealing over 2,000 pages of confidential AI information from Google. Prosecutors detailed how Ding copied Google’s proprietary technology related to AI hardware and software and aligned himself with China-based tech companies, even founding his own AI firm. He sought to leverage this stolen information to build competitive AI infrastructure in China and applied for Chinese government support. Ding faces significant prison time, with each of the 14 counts of theft and espionage carrying heavy sentences.

AI Mishap in Australian Murder Trial as Defense Lawyer Apologizes for Submitting Fabricated Legal Data

In a significant blunder involving artificial intelligence in the legal field, a senior lawyer in Australia apologized to a judge for submitting fabricated quotes and case judgments generated by AI in a murder case in Victoria’s Supreme Court. The defense lawyer, holding the title of King’s Counsel, took full responsibility for the erroneous submission, which caused a 24-hour delay in the case of a teenager charged with murder. The judge highlighted the importance of verifying AI-generated content, referring to existing guidelines that stress independent verification. This incident mirrors similar AI-related mishaps in the justice system, such as a case in the U.S. where lawyers and a law firm were fined for presenting fictitious legal research generated by ChatGPT. The AI used in the Australian case remains unidentified, but the episode underscores ongoing concerns about the reliability of AI in legal proceedings.

🚀 AI Breakthroughs

OpenAI Launches Prism: A Free LaTeX Workspace With GPT-5.2 for Scientific Collaboration

OpenAI has introduced Prism, a free, LaTeX-native workspace designed to enhance scientific writing and collaboration by integrating GPT-5.2. This platform aims to simplify the workflow for scientists with AI-assisted tools for proofreading, citations, formatting, and literature search. Prism allows for unlimited collaboration and features a cloud-based LaTeX environment that eliminates the need for local installations and manual merges. By incorporating project-aware AI, it assists in refining manuscripts, updating equations, and improving overall paper structure, allowing researchers to focus more on their ideas and less on technicalities.

Kimi K2.5 Enhances Open-Source Visual Intelligence with Advanced Multimodal Capabilities and Self-Directed Agent Swarms

Kimi K2.5 has been introduced as the most advanced open-source model in visual and agentic intelligence, incorporating over 15 trillion visual and text tokens to enhance its multimodal capabilities. This model features a self-directed agent swarm paradigm, allowing up to 100 sub-agents to execute tasks in parallel, significantly reducing execution time compared to single-agent setups. Available through Kimi.com, the Kimi App, and various APIs, K2.5 excels in coding with vision and real-world software engineering tasks, converting conversations into user interfaces and enabling autonomous visual debugging. The new Agent Swarm mode, currently in beta, leverages Parallel-Agent Reinforcement Learning to improve execution efficiency on complex tasks. This development marks a significant advance toward AGI in the open-source community by demonstrating strong performance across various real-world applications.
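The speed-up from running sub-agents in parallel can be illustrated with a small sketch. This is not Kimi’s implementation (which the announcement describes as trained with Parallel-Agent Reinforcement Learning); it only shows the general fan-out pattern, with a cap of 100 concurrent workers borrowed from the figure above. The `sub_agent` and `run_swarm` functions are hypothetical stand-ins, and the sleep simulates model or tool latency.

```python
import asyncio


async def sub_agent(task_id: int, delay: float) -> str:
    """Stand-in for one sub-agent; the sleep simulates model or tool latency."""
    await asyncio.sleep(delay)
    return f"task-{task_id}: done"


async def run_swarm(num_tasks: int, max_agents: int, delay: float) -> list:
    """Run tasks concurrently, never exceeding max_agents at once."""
    limit = asyncio.Semaphore(max_agents)

    async def guarded(task_id: int) -> str:
        async with limit:
            return await sub_agent(task_id, delay)

    # gather() returns results in the order the tasks were submitted
    return await asyncio.gather(*(guarded(i) for i in range(num_tasks)))


swarm_results = asyncio.run(run_swarm(num_tasks=20, max_agents=100, delay=0.01))
print(len(swarm_results), swarm_results[0])  # 20 task-0: done
```

Because the waits overlap, the 20 simulated tasks finish in roughly the time of one, whereas a single agent working through them in sequence would take about 20 times as long.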

Google’s Project Genie Empowers U.S. Users to Create and Explore Infinite, Interactive Worlds

Google has unveiled Project Genie, an experimental research initiative accessible to Google AI Ultra subscribers in the U.S. This prototype allows users to create and explore interactive worlds in real-time, using a framework called Genie 3. The tool offers features like “World Sketching” and “World Remixing,” enabling the creation and modification of immersive environments. Despite some operational limitations, including less consistent physics and control latency, Project Genie is a significant step in advancing general AI capabilities for diverse applications in generative media. The rollout, presently limited to U.S. subscribers, aims to gather insights into user interactions and expand access over time.

Google Enhances Search Experience with Gemini 3 AI for Seamless Conversational Interactions and Overviews

Google has announced upgrades to its Search platform, aiming to provide a more seamless and conversational experience. The company is introducing Gemini 3 as the default model for AI Overviews globally, enhancing the quality of AI-driven responses directly on the search results page for complex inquiries. A new feature allows users to engage in conversational interactions with AI Mode, facilitating follow-up questions and maintaining context from initial AI Overviews. Available globally on mobile, these changes aim to offer users a fluid transition from quick information snapshots to more in-depth discussions with AI.

Google Expands Chrome’s AI Capabilities with Persistent Gemini Sidebar and Powerful Auto-Browse Features

In response to emerging competition from AI-enhanced browsers like those from OpenAI and Perplexity, Google is enhancing Chrome with AI features to maintain its market lead. The updated Chrome now includes a persistent Gemini sidebar, allowing users to interact with AI across multiple tabs, ideal for tasks like comparing prices. The update, previously exclusive to Windows and macOS, will also be available to Chromebook Plus users. Upcoming features include personal intelligence integration, accessing user data from services like Gmail and YouTube, and a Nano Banana tool for image modifications. However, the most ambitious innovation is auto-browse, which utilizes personal information for automated online tasks like purchasing products and finding discounts, though it requires user inputs for sensitive actions. Despite promising demos, browser agents often face real-world challenges, such as correctly understanding user intent across various sites. The Gemini sidebar and Nano Banana integration commence rollout today, with other features expected to follow in the coming months.

Google Expands Gemini Integration for Hands-Free Navigation on Google Maps for Walkers and Cyclists

Google has expanded the capabilities of its AI tool, Gemini, by integrating it into Google Maps for hands-free use while walking and cycling. This update follows an earlier rollout of Gemini’s conversational driving features, marking Google’s strategy to embed AI into daily navigation experiences. Users can now ask a variety of questions, such as inquiries about local neighborhoods, dining options, or estimated arrival times, all without needing to stop or divert attention from their journey. The new features are available globally on iOS, with an Android rollout underway. This development is part of a broader update that includes new features like an improved Explore tab and predictions on electric vehicle charger availability, as Google ramps up its competition against AI-integrated apps from other tech companies.

Gallup Survey Reveals Widespread Uncertainty Among Workers on Employer’s AI Adoption Status in 2025

In the third quarter of 2025, Gallup data showed that over a third of employees reported their organizations had implemented AI, while 40% said there was no AI adoption at their workplace. Notably, nearly a quarter of respondents were unsure about their employer’s stance on AI, with confusion most prevalent among non-managerial and part-time staff. This uncertainty is a recent revelation, as earlier surveys did not offer a “don’t know” option, prompting respondents to guess. Gallup noted a perceived increase in AI adoption from 2024 to 2025, while the share of unsure respondents indicates that many employees remain uninformed about AI usage within their organizations.

Anthropic Expands Cowork Tool with Custom Plug-ins to Enhance Enterprise Efficiency and Departmental Automation

Earlier this month, Anthropic expanded its Cowork tool by introducing a new plug-in feature aimed at enhancing its utility for enterprise users. These plug-ins automate specialized tasks across various company departments, such as marketing, legal, and customer support, by leveraging agentic automation for streamlined workflows. Anthropic has open-sourced 11 in-house plug-ins, which are easily customizable, edit-friendly, and shareable without requiring significant technical expertise. While these plug-ins have been a part of Claude Code, they are now designed to enhance Cowork’s user-friendliness. Cowork remains in research preview, with its plug-ins available to all paying Claude customers, and future plans include an organization-wide sharing tool.

AI Increases Work Efficiency but May Impede Skill Development: New Study on Software Developers

Recent research indicates that while AI can speed up some job tasks by up to 80%, it might impede skill development due to cognitive offloading. A study involving junior software engineers highlights that using AI assistance in learning new coding skills, such as using a Python library, led to lower mastery of the material compared to traditional methods. Participants relying heavily on AI scored 17% lower on quizzes, particularly struggling with debugging, while those engaging more thoughtfully with AI, asking for explanations and exploring concepts, retained more knowledge. The findings suggest that while AI may boost short-term productivity, it could compromise long-term skill development, prompting organizations to consider how AI tools are deployed, especially in skills-critical fields like software engineering.

UK Government Partners with Anthropic to Develop Intelligent AI Assistant for Enhanced Public Service Access

Anthropic has been chosen by the UK government’s Department for Science, Innovation, and Technology to develop AI assistant capabilities aimed at modernizing citizen interaction with state services. This collaboration intends to leverage agentic AI systems to guide users through complex processes, prioritizing functionality over traditional chatbot interfaces. Initial pilot efforts will focus on employment services, testing the system’s ability to maintain context and streamline user experiences across extended interactions. Employing a “Scan, Pilot, Scale” framework ensures phased deployment to address compliance and data privacy concerns, with Anthropic working alongside UK civil servants to build internal AI expertise and mitigate dependency on external providers. This project is part of Anthropic’s broader strategy to enhance public sector AI capabilities in the UK and internationally.

Meta Set to Launch New AI Models and Products, Focused on AI-Driven Commerce Tools

Meta is set to introduce new AI models and products within months, following a major overhaul of its AI program that began in 2025. CEO Mark Zuckerberg highlighted the development of AI-driven commerce as a primary focus, with plans to offer personalized shopping experiences leveraging the company’s access to user data. Although he did not specify timelines or products, Zuckerberg emphasized enhancing agentic shopping tools, a trend gaining traction across the tech industry. Meta’s increased infrastructure spending is notable, with projections of up to $135 billion for 2026, largely aimed at supporting AI lab initiatives and overall business growth. The company acquired general-purpose agent developer Manus to augment its offerings further, anticipating a significant push towards personal superintelligence and business acceleration.

Daggr Offers Visual Workflow Management and Seamless Gradio Integration for AI Developers and Experimenters in 2026

Daggr, an open-source Python library, has recently been released for building AI workflows, facilitating seamless integration of Gradio apps, ML models, and custom functions. It allows developers to chain applications programmatically while offering a visual canvas to inspect and modify intermediate outputs without rerunning entire pipelines, making it an invaluable tool for debugging complex workflows. Developed with first-class integration with Gradio, Daggr enables the use of any Gradio Space as a node, without requiring adapters or wrappers. The tool also supports state persistence, enabling users to maintain multiple workspaces and resume interrupted workflows effortlessly. While in beta, Daggr is designed to be lightweight, with the potential for evolving APIs, and encourages user feedback for future enhancements.
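The idea of inspecting and modifying intermediate outputs without rerunning an entire pipeline can be sketched in plain Python. The example below does not use Daggr’s API, which is not shown here and may differ; the `Pipeline` class and its methods are hypothetical, and the sketch covers only a linear chain of steps rather than a full graph.

```python
class Pipeline:
    """Illustrative linear pipeline that caches each step's output."""

    def __init__(self):
        self.steps = []   # ordered (name, function) pairs
        self.cache = {}   # step name -> most recent output
        self.calls = []   # names of steps that actually executed

    def add(self, name, fn):
        self.steps.append((name, fn))

    def run(self, value=None, start_at=None):
        """Run all steps, or resume at start_at using the cached upstream output."""
        names = [name for name, _ in self.steps]
        start = names.index(start_at) if start_at else 0
        if start > 0:
            # Reuse (possibly hand-edited) output of the preceding step
            value = self.cache[names[start - 1]]
        for name, fn in self.steps[start:]:
            value = fn(value)
            self.cache[name] = value
            self.calls.append(name)
        return value


p = Pipeline()
p.add("strip", str.strip)
p.add("upper", str.upper)
p.add("exclaim", lambda s: s + "!")

first = p.run("  hello ")
p.cache["strip"] = "goodbye"        # edit an intermediate output by hand
second = p.run(start_at="upper")    # only the downstream steps rerun
print(first, second)  # HELLO! GOODBYE!
```

After the second call, `p.calls` shows that `strip` ran only once, which is the property that makes this pattern useful when early steps are slow or expensive.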

🎓 AI Academia

Generative AI Inference Energy Consumption Varies Up to 100x Across Models, Tasks, and Configurations

A recent study highlights the critical role of energy consumption in AI, especially as machine learning (ML) computing continues to expand rapidly. Researchers conducted a large-scale analysis of inference time and energy use across 46 generative AI models performing seven different tasks, resulting in 1,858 configurations on NVIDIA H100 and B200 GPUs. The study found significant energy consumption disparities, with tasks like video generation requiring over 100 times the energy of image processing, and differences in GPU utilization leading to energy differences of three to five times. These insights led to the development of a framework for understanding underlying factors affecting energy consumption, emphasizing the importance of metrics like memory availability and GPU use to optimize efficiency, which is vital for power-constrained datacenters.

Low-Compute AI Models: Emerging Safety Threats as Advanced Capabilities Shrink to Consumer Grade

A recent report highlights growing safety concerns surrounding low-compute AI models, which are rapidly gaining performance strength comparable to high-compute models. These smaller models, enabled by parameter quantization and agentic workflows, can execute sophisticated tasks previously restricted to large-scale AI systems. Concerns are raised as these models can now be deployed on consumer-grade devices, potentially facilitating risks such as disinformation campaigns and cyber fraud. Current AI governance structures, primarily focused on high-compute risks, may not adequately address security vulnerabilities posed by these low-compute models, urging policymakers and technologists to reassess and adapt their strategies.

Paradox-Based Framework Aims To Balance Strategic Benefits and Risks in Responsible AI Governance

A recent study highlights the dual nature of artificial intelligence, balancing its strategic opportunities with considerable ethical and operational risks. The research introduces the Paradox-based Responsible AI Governance (PRAIG) framework, which aims to manage the tensions between AI’s value creation and its potential harms. The study underscores the integration of AI in organizational contexts, advocating for governance structures that support innovation while mitigating risks. The paper also discusses AI’s impacts, including discrimination in recruitment and racial biases in criminal justice. The authors offer a roadmap for responsible AI governance to guide future research and practice.

New AI-Powered Systems Aim to Transform Business Process Management with Enhanced Autonomy and Reasoning

Agentic Business Process Management Systems (A-BPMS) are poised to redefine business process automation by leveraging generative and agentic AI, focusing on autonomy and data-driven management rather than traditional design-driven approaches. Emerging from the ongoing evolution of Business Process Management in recent decades, A-BPMS platforms aim to integrate process mining techniques with AI capabilities to autonomously sense, reason, and optimize business processes. These systems intend to create a spectrum of processes that range from human-driven to fully autonomous, thus extending the current boundaries of process automation and governance. This vision marks a significant shift in the role of AI in business process management, emphasizing continuous improvement and performance optimization.

AI’s Dual Role in Climate Crisis: Balancing Environmental Benefits and Risks of Growing AI Usage

A recent analysis explores the complex role of artificial intelligence (AI) in addressing the climate crisis, highlighting both its potential and its challenges. While AI enhances climate forecasting, renewable energy management, and environmental monitoring, it also presents sustainability issues due to the significant energy consumption of data centers, resource-heavy hardware production, and potential algorithmic biases. The study underscores the importance of integrating ethical, legal, and social considerations in AI development and deployment, advocating for AI solutions that are socially just, environmentally responsible, and democratically governed. It argues that AI’s true effectiveness in combating climate change is contingent upon the underlying values and power structures guiding its use.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.

Fantastic roundup. The OpenClaw/Moltbook story highlights something most ppl miss about agentic AI - the security concerns become way more urgent when the agent is actually executing tasks locally, not just chatting. Watching bots have their own social convos on Moltbook is weird but kinda reveals where this is headed.