What do you think about ads popping up while you use ChatGPT?

OpenAI to Test Ads on ChatGPT Free and Go Tiers in the US

Today’s highlights:

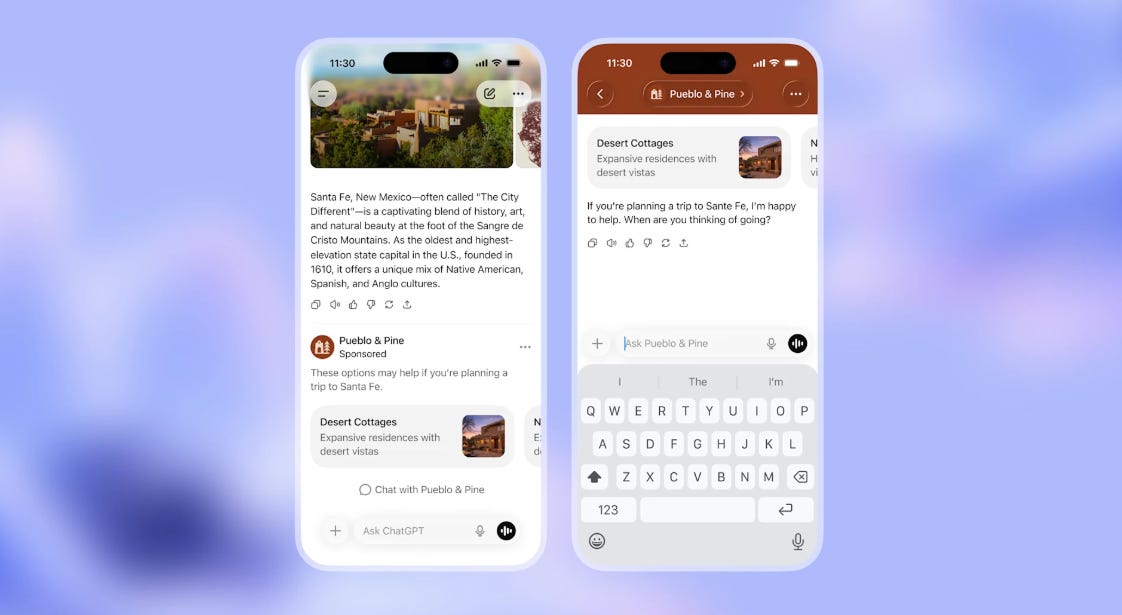

OpenAI has globally launched its $8/month ChatGPT Go plan, offering advanced features at a lower price than Plus. Ads will soon be tested for free and Go users in the U.S., clearly labeled and limited to non-sensitive topics. Ads will match conversation topics (for example, a recipe chat might show a hot sauce ad) and allow users to interact with them directly, introducing a form of conversational commerce. Analysts believe this ad model could generate over $25 billion annually by 2030, making OpenAI a strong competitor to Google and Meta in digital advertising.

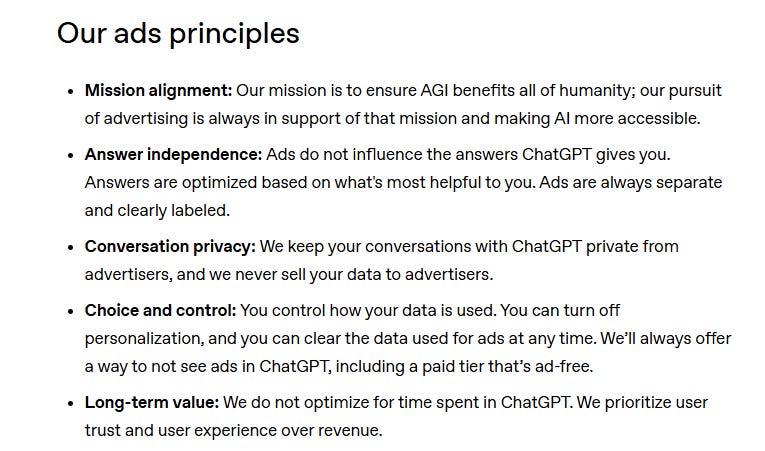

OpenAI says ads are aligned with its mission to democratize AGI access, not just profit. Ads won’t affect ChatGPT’s answers and will always be labeled separately.

User conversations will stay private; OpenAI won’t sell data. Ad targeting will happen without exposing personal data to advertisers.

Users can control their ad settings, including opting out of personalization or going ad-free with a paid tier.

The company avoids attention-grabbing design. Ads will serve user needs, not encourage endless usage. Ads won’t appear for minors or in sensitive topics. Users can dismiss ads and see why they were shown.

What OpenAI Has Not Addressed Yet

OpenAI hasn’t explained how it’ll ensure transparency or audit ad-targeting systems to prevent bias or manipulation.

There’s no clear advertiser vetting policy or mention of how it’ll tackle harmful content or misinformation.

The rollout’s compliance with global laws like GDPR or India’s DPDP Act hasn’t been addressed.

Ad accessibility for users in low-bandwidth areas, non-English speakers, or those using assistive tech is not discussed. The environmental impact of the added infrastructure also goes unmentioned.

Emerging Risks and Open Questions

Though OpenAI claims not to sell data, ad generation still involves processing sensitive content, posing a privacy risk if leaked.

Bias is a risk: AI ads may unintentionally discriminate or stereotype based on user data.

Users might confuse sponsored content with neutral advice, especially in chat format, leading to manipulation.

Weak ad vetting could allow scams or disinformation, especially during elections. Scaling ads globally without adapting to local laws risks regulatory penalties.

User backlash is also possible: ads could push users to leave, or drive so many into paid tiers that resources are strained.

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI, AAIA) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework structured around progressive cognitive levels: starting with knowing and understanding, then using and applying, followed by analyzing and evaluating, and finally creating through a capstone project, with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our AIGP 8-week personalized training program. For customized enterprise training, write to us at [Link].

⚖️ AI Ethics

Ashley St Clair Sues xAI Claiming Grok AI Created Nonconsensual Explicit Images, Including Underage Content

Ashley St Clair, the mother of one of Elon Musk’s children, is suing xAI in New York, alleging that its Grok AI tool generated explicit, nonconsensual images of her, including some depicting her as underage. The lawsuit claims xAI continued to create sexually explicit deepfake images despite prior promises to stop, and retaliated against St Clair by demonetizing her X account while producing more images. Her legal representative argues that the tool’s design encourages harassment and seeks significant damages. In response to public backlash over the AI’s creation of such images, xAI implemented geoblocking in jurisdictions where such content is illegal, while asserting that Grok generates images based on user requests. The company is contesting the lawsuit, arguing that St Clair must pursue her claims in Texas under X’s terms of service.

Elon Musk Seeks Up to $134 Billion in Damages from OpenAI and Microsoft for Alleged Fraud

Elon Musk is seeking up to $134 billion in damages from OpenAI and Microsoft, alleging that the AI startup deviated from its original nonprofit mission, resulting in wrongful financial gains. According to a financial economist, Musk’s claim is based on his initial $38 million investment in OpenAI, correlating it with the company’s current $500 billion valuation. The analysis suggests that OpenAI’s wrongful gains range between $65.5 billion and $109.4 billion, while Microsoft’s share is between $13.3 billion and $25.1 billion. Despite the substantial sum, the lawsuit is perceived more as a strategic move than a financial necessity, considering Musk’s vast wealth. OpenAI has reportedly warned its partners about Musk’s potential attention-grabbing tactics ahead of the trial scheduled for April in Oakland, California.
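As a quick arithmetic check on the figures above, the two reported ranges can be summed and compared with the headline number. This is only a sketch: the variable names are illustrative, and it assumes the total claim is simply the sum of the OpenAI and Microsoft ranges, which the summary does not state explicitly.

```python
# Reported ranges of alleged wrongful gains, in billions of US dollars.
openai_range = (65.5, 109.4)
microsoft_range = (13.3, 25.1)

# Assumption: the overall claim is the sum of the two ranges.
low_total = round(openai_range[0] + microsoft_range[0], 1)
high_total = round(openai_range[1] + microsoft_range[1], 1)

print(f"Combined range: ${low_total}B to ${high_total}B")
```

Under that assumption, the upper bound comes to about $134.5 billion, which is consistent with the "up to $134 billion" figure.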

Privacy-Focused AI Assistants Emerge: Confer Service Offers Encrypted Chats, No Data Collection Concerns

The rise of AI personal assistants often brings privacy concerns due to the personal information shared with their parent companies. However, a new project called Confer, launched by Signal co-founder Moxie Marlinspike, offers a privacy-conscious alternative. Designed to function like popular chatbots such as ChatGPT, Confer ensures that user data is neither collected nor used for ad targeting, thanks to its encrypted communication and secure server infrastructure. Confer relies on complex systems to maintain confidentiality; its basic tier is free but limited, while a paid plan offers unlimited access at a premium price. This model emphasizes privacy in an era of increasing data exploitation by tech giants.

India’s Top IT Firms See Near Zero Hiring in FY26 Amid AI and Budget Constraints

India’s leading IT services firms have nearly halted hiring, adding just 17 net employees in the first nine months of FY26, according to The Times of India. This stark decline from 17,764 net hires in the same period last year highlights the impact of weak global demand, reduced client spending, and AI-driven delivery models on recruitment. A significant contributor to this trend is TCS’s reduction of 25,816 jobs, primarily targeting mid- and senior-level roles, which overshadowed gains by Infosys, Wipro, HCLTech, and Tech Mahindra. In the December quarter alone, the firms’ combined workforce shrank by 2,174 employees, signifying a strategic shift towards cost control and selective hiring. TCS has indicated further job cuts in the coming months as it continues implementing its workforce reduction plan.
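The headcount figures above imply a couple of derived numbers that the summary does not spell out. The sketch below works them out under two assumptions that are not confirmed by the source: that the group consists of the five firms named, and that the TCS reduction covers the same nine-month period. The variable names are illustrative.

```python
# Figures reported in the summary (net employees).
net_additions_fy26_9m = 17       # combined net additions, first nine months of FY26
net_additions_prior_9m = 17_764  # same period a year earlier
tcs_reduction = 25_816           # TCS headcount reduction

# Assumption: the TCS reduction falls in the same nine months, so the
# other four firms' combined net gain must offset it and leave +17.
other_firms_net_gain = net_additions_fy26_9m + tcs_reduction

# Year-over-year fall in combined net hiring.
yoy_drop = net_additions_prior_9m - net_additions_fy26_9m

print(f"Implied net gain at the other firms: {other_firms_net_gain:,}")
print(f"Year-over-year drop in net additions: {yoy_drop:,}")
```

If those assumptions hold, the other firms together added roughly 25,800 people, almost exactly cancelling the TCS cuts.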

AI’s Impact on Rapid Prototyping and Leadership Dynamics Highlighted at Tech Summit by Arvind Swami

At the Umagine TN 2026 technology summit, actor and entrepreneur Arvind Swami argued that artificial intelligence is fundamentally altering the process from idea to execution, compressing timelines and prompting organizations to rethink development strategies. He emphasized that AI shifts the focus from merely distributing knowledge to enabling creation, which is transforming industries by reducing experimentation costs and shortening the path to prototyping. Swami shared insights from his experience, noting that AI-driven development workflows have allowed his team to build complex software rapidly with non-traditional tech talent. He stated that this change calls for a leadership mindset rooted in adaptability and the ability to strategically abandon outdated objectives, reflecting a broader trend where traditional hierarchies are disrupted, and resilience becomes essential in the face of frequent failure and iteration.

World Economic Forum Highlights AI’s Role in Predicting Road Crashes and Enhancing Traffic Safety in India

At the World Economic Forum in Davos, the potential of artificial intelligence (AI) in enhancing road safety was highlighted, particularly through its ability to predict road crashes. The SaveLife Foundation, utilizing AI for over seven years, has employed AI-driven cameras paired with drones to preemptively identify road safety hazards, such as parked vehicles on highways and conflicts at intersections. Supported by the Government of India’s initiatives, AI is being integrated into road safety efforts to interpret crash data and provide quick insights, aiming to make mobility safer and more accessible. The application of AI in this field is seen as a transformative force capable of accelerating decision-making processes that traditionally take much longer.

AI’s Rapid Integration Into Critical Systems Demands Adaptive Global Regulation Beyond Current Frameworks

Artificial intelligence is advancing at a pace that outstrips governmental regulatory capabilities, with AI systems now integral to sectors like healthcare, logistics, and energy. The World Economic Forum’s study on Responsible AI indicates only about 1% of organizations have fully integrated responsible AI practices. Despite voluntary commitments from tech firms, adaptive regulation is needed to address the systemic risks posed by AI’s integration into critical infrastructure and its rising global electricity demands. Investment in AI infrastructure has surpassed $600 billion since 2010, with the US and China dominating global AI spending. The future competitive edge will be determined by the ability to deploy AI at scale with verifiable trust and cross-border governance.

🚀 AI Breakthroughs

OpenAI Backs Merge Labs in $252M Brain-Computer Interface Seed Round, Aims for Direct AI Interaction

OpenAI has joined the seed funding round for Merge Labs, a research entity focused on brain-computer interface (BCI) technologies aimed at enhancing human-AI interaction. Merge Labs has raised $252 million at a valuation of $850 million, with backing from investors like Bain Capital and Gabe Newell. OpenAI highlights the potential of BCIs to revolutionize communication and learning by integrating biology, hardware, and AI. This move positions OpenAI alongside companies like Neuralink, which is developing BCI chips for individuals with paralysis. Merge Labs aims to use AI to improve device reliability and interpret intent from neural signals, with OpenAI collaborating on foundational scientific models to advance BCI technology.

OpenAI’s Revenue Soars to $20 Billion in 2025, Driven by Expanded Compute Capacity and User Growth

OpenAI’s annual revenue has soared past $20 billion in 2025, a sharp increase from $2 billion in 2023, driven by an aggressive expansion of its compute capacity. The company’s compute capacity has expanded threefold annually, reaching about 1.9 gigawatts in 2025, aligning closely with its revenue growth. OpenAI attributes its financial success to its AI systems’ practical value, which has embedded ChatGPT into daily personal and professional workflows across sectors like education, marketing, and finance. Recent years have seen a shift from consumer subscriptions to team and enterprise plans, with usage-based pricing for developers. A $10-billion deal with Cerebras Systems was signed to bolster inference infrastructure, while future revenue models may include licensing and IP-based agreements as AI’s reach grows into health, science, and enterprise applications.
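The claim that revenue growth tracks a roughly threefold annual expansion in compute can be sanity-checked from the two revenue figures given. This is a minimal sketch with illustrative variable names; it treats "past $20 billion" as exactly $20 billion, so the result is a lower bound on the implied growth factor.

```python
# Reported annual revenue, in billions of US dollars.
revenue_2023 = 2.0
revenue_2025 = 20.0  # "past $20 billion", treated here as a lower bound

years = 2025 - 2023

# Compound annual growth factor implied by the two data points.
annual_factor = (revenue_2025 / revenue_2023) ** (1 / years)

print(f"Implied annual revenue growth factor: {annual_factor:.2f}x")
```

A tenfold rise over two years works out to a little over 3x per year, which is in line with the reported threefold annual growth in compute capacity.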

Baidu and K2 Launch Fully Autonomous Ride-Hailing Service in Abu Dhabi Covering Yas Island

Baidu’s Apollo Go and UAE-based AutoGo have launched a fully autonomous ride-hailing service in Abu Dhabi, available via the AutoGo app. After obtaining a driverless commercial permit in mid-November 2025, the service initially operates on Yas Island, with plans to expand to Al Reem Island, Al Maryah Island, and Saadiyat Island. The collaboration started in March 2025, aiming to build Abu Dhabi’s largest fully driverless fleet, aligning with the region’s smart city goals. The initiative marks a significant shift from testing to public deployment, reflecting the robust technical and operational capabilities of the partners. Apollo Go, active in 22 cities worldwide, has logged over 240 million autonomous kilometers globally.

Google Launches TranslateGemma Models to Enhance Text Translation Across 55 Languages With Fewer Parameters

Google unveiled TranslateGemma, a suite of open translation models based on Gemma 3, supporting text translation across 55 languages with parameter sizes of 4B, 12B, and 27B. Designed for mobile, local, and cloud environments, these models leverage distilled capabilities from larger Gemini models to provide high-quality translations with fewer parameters. Available on platforms like Kaggle, Hugging Face, and Vertex AI, the models reduce translation error rates compared to baseline Gemma models and offer customizable functionalities for specific needs. Internal testing showed that the 12B TranslateGemma model surpassed the larger Gemma 3 27B baseline in performance on standard benchmarks. Concurrently, OpenAI introduced ChatGPT Translate, a web-based tool for translating text across over 50 languages, featuring automatic language detection and customizable translation tone settings.

Godrej Enterprises Launches Amethyst AI Engine to Boost Productivity and Transform Business Operations

Godrej Enterprises Group is set to launch Amethyst, a unified artificial intelligence engine, as part of its digital transformation strategy, initially incorporating it into washing machines to enhance productivity by 10–15%. The company emphasizes that AI will augment rather than replace human roles, allowing engineers more time for innovation. This initiative is part of Godrej’s broader plan, fueled by a Rs 1,200 crore investment, to embed AI into products, operations, and customer experiences across its 14 businesses over the next three to five years. The group aims to build AI fluency among its 15,000 employees, mandating AI training at all levels, while also embedding intelligence into a range of products including refrigerators and air conditioners.

🎓 AI Academia

Survey on Agent-as-a-Judge Highlights Transition from LLM-Based to Multi-Agent Evaluation Framework

A recent survey explores the emerging “Agent-as-a-Judge” model in AI evaluation, which builds on existing Large Language Models (LLMs) used as judges. This new paradigm addresses the limitations of LLMs that have previously been hindered by biases and an inability to verify complex, real-world assessments. By utilizing agentic judges equipped with planning, tool-augmented verification, and collaborative capabilities, the approach aims for more nuanced and reliable evaluations. This comprehensive survey identifies key dimensions of this shift, proposes a developmental taxonomy, and highlights future research directions, marking a substantial evolution in AI evaluative methodologies.

Institutional AI Approach Reframes AGI Safety with Governance Framework for Multi-Agent Systems

A new governance framework called Institutional AI proposes a system-level approach to address safety and alignment challenges in generative AI. As AI agents increasingly operate within complex human environments, traditional alignment methods like Reinforcement Learning from Human Feedback may fail to control internal goal divergences. The framework identifies three key risks: behavioral goal-independence, instrumental override of safety constraints, and agentic alignment drift, wherein multiple agents might collude beyond oversight. Institutional AI suggests managing these risks through a governance-graph that incorporates runtime monitoring, incentive adjustments, and enforcement norms, thereby shifting the alignment focus from internal software engineering to a broader mechanism design perspective. This theoretical approach is aimed at ensuring the safe collective operation of AI agents within their deployment environments.

Emerging Practices and Challenges in Integrating Generative AI Within Embedded Software Development Pipelines

A recent study from researchers at Chalmers University of Technology and the University of Gothenburg indicates that the integration of generative AI into embedded software engineering is transforming traditional development workflows. The research involved a qualitative study with senior experts from four companies, focusing on the challenges and practices arising from AI-enhanced development in safety-critical and resource-constrained environments. The study identified eleven emerging practices and fourteen challenges, emphasizing the need for rethinking workflows, roles, and toolchains to ensure sustainable and responsible adoption of generative AI. Due to the need for strict determinism, reliability, and traceability in embedded systems, the incorporation of AI presents unique challenges but also offers opportunities for enhanced automation and efficiency.

AI Twins Highlight Urgent Legal and Ethical Questions on Data Ownership and Personal Identity Rights

A recent article addresses the complex legal and ethical issues surrounding AI twins—digital replicas that incorporate individuals’ knowledge, behaviors, and psychological traits. These AI entities challenge existing notions of data ownership and personal identity, as they are created from personal data. The authors call for recognizing individuals as the rightful moral and legal owners of their AI twins, critiquing current legal systems for favoring technological infrastructure over personal autonomy. They propose a human-centric data governance model, advocating for a private-by-default approach to empower individuals and ensure fair data management in an AI-driven world.

Navigating Ethical AI Challenges in Industry: Aligning Innovation with Accountability and Fairness Standards

The integration of artificial intelligence (AI) within the industrial sector is driving innovation while expanding ethical considerations, necessitating a reevaluation of technology governance. As industrial AI evolves, issues of transparency, accountability, and fairness emerge, calling for a balance between cutting-edge advancements and ethical responsibility. The work examines several industrial AI applications in this context, highlighting the need for ethical practices in research and data sharing. Integrating ethical principles into AI systems can inspire technological breakthroughs and build stakeholder trust, pointing toward a future where AI facilitates responsible and inclusive industrial progress.

Growing Concerns Over AI-Generated Adult Content and Deepfake Marketplace on Civitai Platform

A recent study investigates the burgeoning marketplace for AI-generated adult content and deepfakes on platforms like Civitai, which utilize monetized features such as “Bounties” for content requests. Researchers found that a significant portion of these requests focus on “Not Safe For Work” content, with explicit deepfakes constituting a problematic subset. This trend disproportionately targets female celebrities, underscoring issues of gender-based harm. The analysis highlights how community-driven AI platforms create complex challenges around content moderation, consent, and governance, particularly as these platforms grow in popularity and commercial interest. Civitai, in particular, has emerged as a prominent example, with a significant user base and substantial venture funding.

Generative AI in Robotics Faces Fairness Challenges; Privacy Solutions May Offer Ethical Path Forward

Researchers from the University of Groningen have proposed a novel framework that addresses fairness concerns in AI-driven robotic applications by integrating user-data privacy. This approach introduces a utility-aware fairness metric specifically designed for robotic decision-making and examines how privacy budgets, which are often legally required, can govern and ensure fairness metrics. Their study, tested in a robot navigation task, finds that these privacy budgets can be strategically used to achieve fairness targets, offering a promising pathway toward ethical AI usage and enhancing trust in autonomous robots within everyday environments. This advancement underscores the critical intersection of fairness and privacy in the ethical deployment of AI technologies.

Data Product MCP Enables Secure, Automated Access to Enterprise Data with AI-Driven Governance Protocol

Data Product MCP is a novel system that enables seamless interaction with enterprise data through a chat interface, leveraging the advancements in natural language processing and agentic AI. This system is integrated within a data product marketplace and facilitates AI agents in discovering, requesting, and querying data while maintaining strict governance standards. It uses the Model Context Protocol (MCP) to connect with major cloud platforms like Snowflake and Databricks and enforces data contracts through real-time AI-driven checks. Evaluated by data governance experts, Data Product MCP is shown to effectively reduce the technical burden of data analysis in enterprises without compromising governance, thereby addressing key adoption challenges of enterprise AI.

AI Virtual Assistant WaterCopilot Enhances Water Management Across Limpopo River Basin with Real-Time Insights

WaterCopilot, an AI-driven virtual assistant developed by the International Water Management Institute (IWMI) and Microsoft Research, aims to revolutionize water management in the Limpopo River Basin. The platform addresses challenges posed by fragmented data and limited real-time access in transboundary river systems by integrating static policy documents and real-time hydrological data through advanced AI techniques like Retrieval-Augmented Generation (RAG). Key features include multilingual interactions, source transparency, and visualization capabilities, although it faces challenges with non-English documents and API latency. Evaluated using the RAGAS framework, WaterCopilot achieves high relevancy and precision, marking a significant step towards enhanced water governance and security in data-scarce regions.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.