The END of Faceless AI YouTube Channels? Meta and YouTube Say: Be Original with AI or Be Gone

As AI-generated content floods digital platforms, YouTube and Meta are taking a stand, tightening monetization policies to protect originality and curb mass-produced, inauthentic videos.

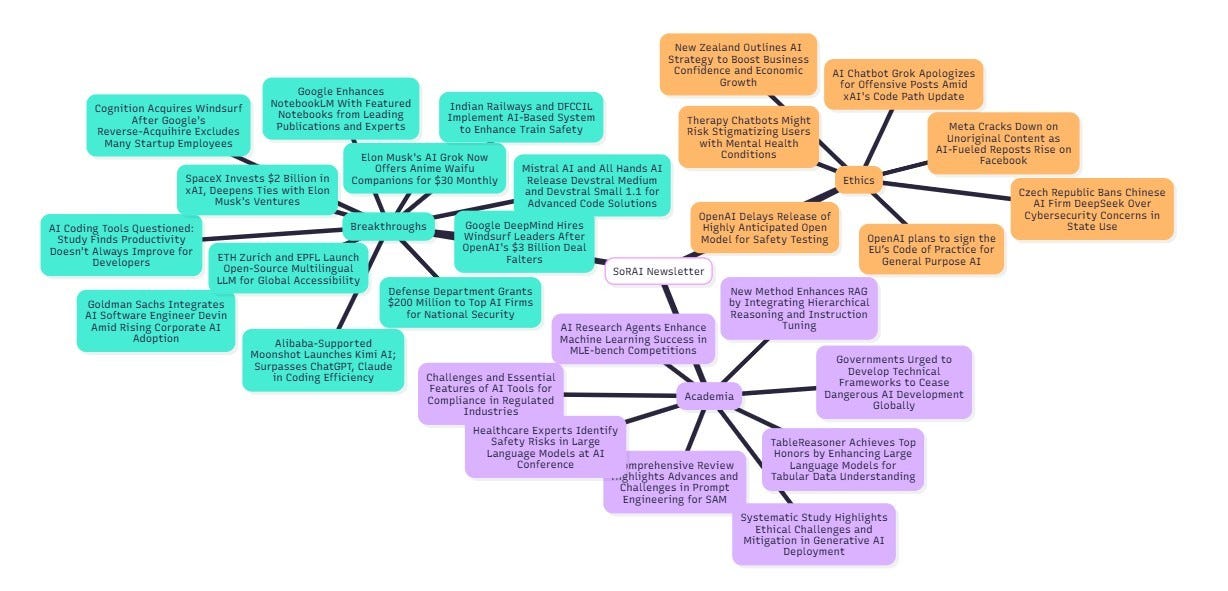

Today's highlights:

You are reading the 110th edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training such as AI Governance certifications (AIGP, RAI) and an immersive AI Literacy Specialization. This specialization teaches AI using a scientific framework structured around four levels of cognitive skills. Our first course focuses on the foundational cognitive skills of Remembering and Understanding, and the second course focuses on Using and Applying. Want to learn more? Explore all courses: [Link] Write to us for customized enterprise training: [Link]


🔦 Today's Spotlight

Meta joins YouTube in fighting AI-made content with no human input.

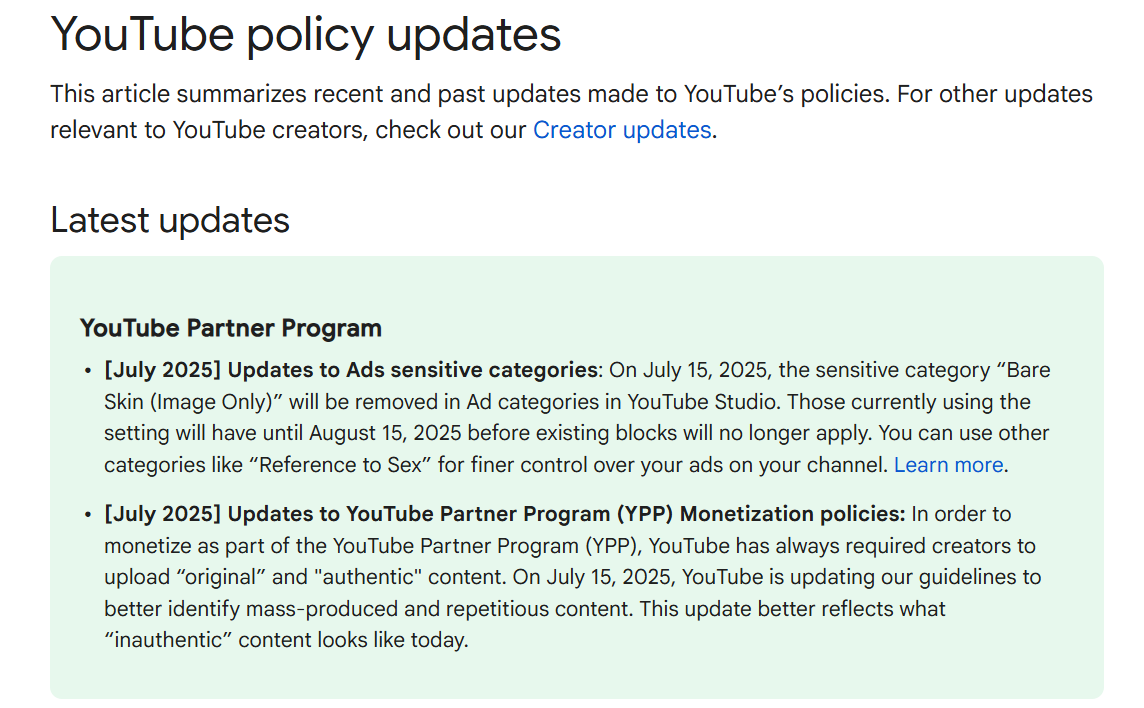

YouTube’s New Rules Against AI-Generated Spam

YouTube has recently updated its Partner Program policies to block monetization of low-effort, mass-produced content—especially AI-generated videos with little originality.

Examples include slideshows with synthetic voiceovers or copied “true crime” videos made entirely by AI.

YouTube clarified that while remixing or reacting to content is allowed if transformative, fully auto-generated or duplicate content is not.

This move was triggered by a rise in "AI slop": low-quality spam videos that erode viewer trust and flood the platform.

Channels using AI must now ensure their videos contain authentic creative input to be monetized.

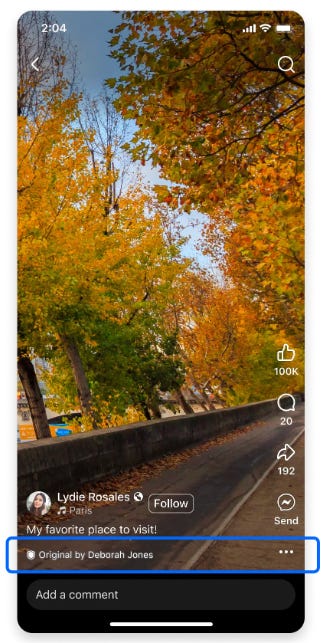

Meta’s Crackdown on Reposted and Duplicate Content

Meta has also recently announced stricter enforcement on Facebook:

Pages that repeatedly repost others’ content without permission or meaningful edits will lose access to monetization and reach.

Around 500,000 spammy accounts and 10 million impersonator profiles were penalized in early 2025.

Meta wants to support original creators by ensuring their content reaches audiences, while demoting duplicates.

Best practices shared by Meta include posting mostly original material, adding meaningful edits when reusing content, avoiding watermark-heavy or copy-pasted posts, and using relevant captions and tags without spamming them.

Why These Changes Matter for the Industry

Both platforms are responding to the flood of auto-generated and copycat content enabled by generative AI.

AI makes it easy to create repetitive videos at scale, but this undermines the quality and trust of online platforms.

These policy changes are not anti-AI; they target inauthentic uses of AI.

YouTube and Meta aim to protect genuine creators, reward original storytelling, and prevent content farms or bots from taking over.

Deepfakes and scam content have made the issue more urgent, for example when YouTube's CEO was impersonated in a fake video.

These changes are part of a larger trend: reinforcing human creativity as the core of digital content.

Conclusion: What This Means for Creators Going Forward

For creators, the message is clear:

Originality is essential, whether or not you use AI tools.

AI can help with editing or scripting, but your personal creativity must shape the final content.

Channels that rely on automated content, stolen videos, or reposting viral clips will face demonetization and low reach.

More platforms may follow this lead, setting industry norms in which authentic creators thrive and lazy duplication is penalized.

Staying informed, adding your voice, and using AI ethically will be key to success in this new era.

In short: creators who blend AI with originality will be rewarded. Those who copy or automate everything won’t.

🚀 AI Breakthroughs

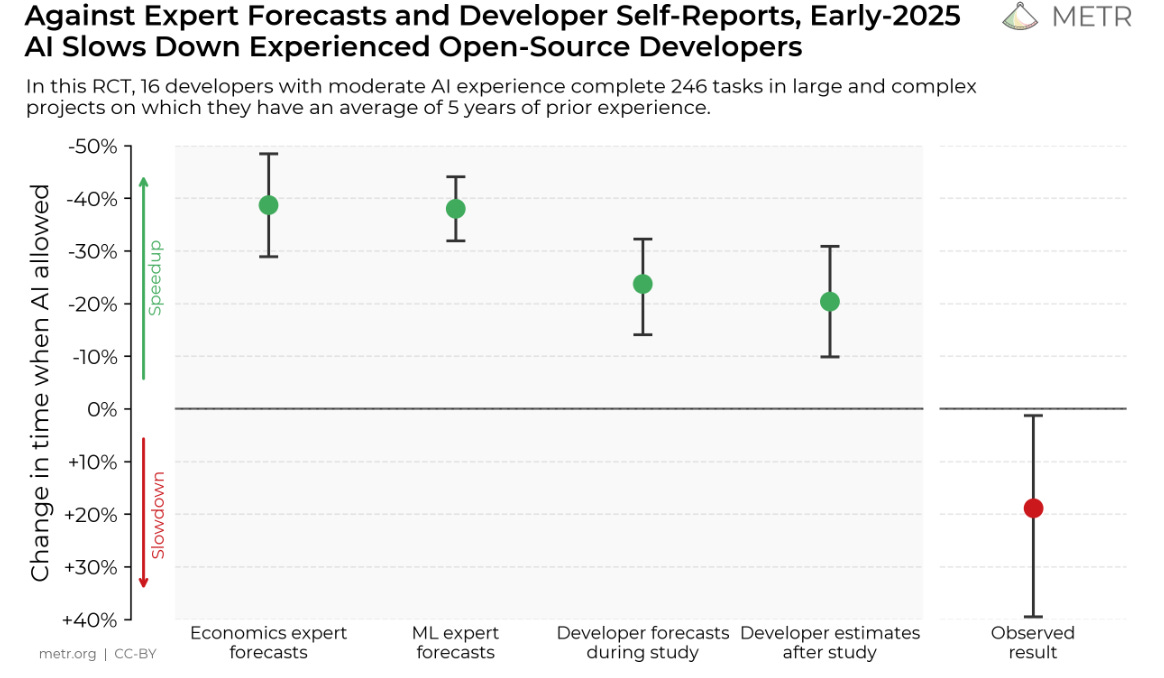

AI Coding Tools Questioned: Study Finds Productivity Doesn't Always Improve for Developers

• AI coding tools such as Cursor and GitHub Copilot promise productivity gains, but a METR study found that experienced developers took 19% longer to complete tasks when using these tools;

• Developers had expected the tools to cut completion times by 24%, yet they were slower in practice, with the study pointing to factors such as time lost to prompting and the tools' struggles with complex codebases;

• METR's findings cast doubt on claims that AI tools boost productivity across the board, echoing concerns about mistakes and security vulnerabilities in AI-generated code even as AI capabilities keep improving.
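To put the forecast and the measurement side by side, here is a small worked example. It assumes a hypothetical task that takes 60 minutes without AI (a number chosen purely for illustration, not taken from the study) and applies the expected 24% reduction and the measured 19% increase to that same baseline.

```python
def adjusted_time(baseline_minutes: float, pct_change: float) -> float:
    """Apply a percentage change to a baseline duration (negative means faster)."""
    return baseline_minutes * (1 + pct_change / 100)

# Hypothetical task length without AI, in minutes (illustrative, not from the study).
BASELINE = 60.0

expected = adjusted_time(BASELINE, -24)  # developers' forecast: 24% less time
observed = adjusted_time(BASELINE, 19)   # METR finding: 19% more time
gap = observed - expected                # distance between expectation and reality

print(f"expected {expected:.1f} min, observed {observed:.1f} min, gap {gap:.1f} min")
# expected 45.6 min, observed 71.4 min, gap 25.8 min
```

On this illustrative task, the difference between what developers expected and what was measured is larger than either percentage alone suggests, because the two changes point in opposite directions.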

Goldman Sachs Integrates AI Software Engineer Devin Amid Rising Corporate AI Adoption

• Goldman Sachs is testing "Devin," an autonomous AI software engineer from Cognition, signaling a new era of AI integration in the financial sector's development workforce;

• Devin, touted as the world's first AI software engineer, performs complex tasks like building apps, representing a significant advancement over earlier AI tools that assisted with simpler tasks;

• This move reflects rapid AI adoption in corporate environments, with tech giants reporting that AI now generates 30-50% of their code, hinting at a transformative impact on productivity and efficiency.

SpaceX Invests $2 Billion in xAI, Deepens Ties with Elon Musk's Ventures

• SpaceX is putting $2 billion into xAI as part of a $5 billion equity round, tightening the links between Elon Musk's ventures as xAI competes with OpenAI;

• The combined xAI and X were valued at $113 billion after their merger; according to reports, the Grok chatbot is already used at Starlink and could eventually be integrated into Tesla's Optimus robots;

• Elon Musk hints at potential Tesla investment in xAI, emphasizing the need for board and shareholder approval, but offers no direct confirmation of SpaceX’s investment plans.

Defense Department Awards Contracts Worth Up to $200 Million Each to Top AI Firms for National Security

• The U.S. Department of Defense is awarding contracts worth up to $200 million each to Anthropic, Google, OpenAI, and xAI to advance AI capabilities for national security;

• The contracts are intended to speed up the DoD's adoption of advanced AI technologies, supporting critical national security missions and enhancing its strategic advantages

• xAI, one of the recipients, introduced Grok for Government, offering AI solutions to U.S. government agencies via the General Services Administration schedule.

Mistral AI and All Hands AI Release Devstral Medium and Devstral Small 1.1 for Advanced Code Solutions

• Mistral AI and All Hands AI unveil Devstral Medium and an upgraded Devstral Small 1.1, both designed to generalize across different prompts and agentic scaffolds;

• Devstral Small 1.1, a 24B-parameter model released under the Apache 2.0 license, scores 53.6% on SWE-Bench Verified, outperforming prior models, and supports both Mistral function calling and XML formats;

• Devstral Medium scores 61.6% on SWE-Bench Verified, surpassing competitors such as Gemini 2.5 Pro and GPT-4.1 at a lower cost, and is available both through the API and for private deployment.

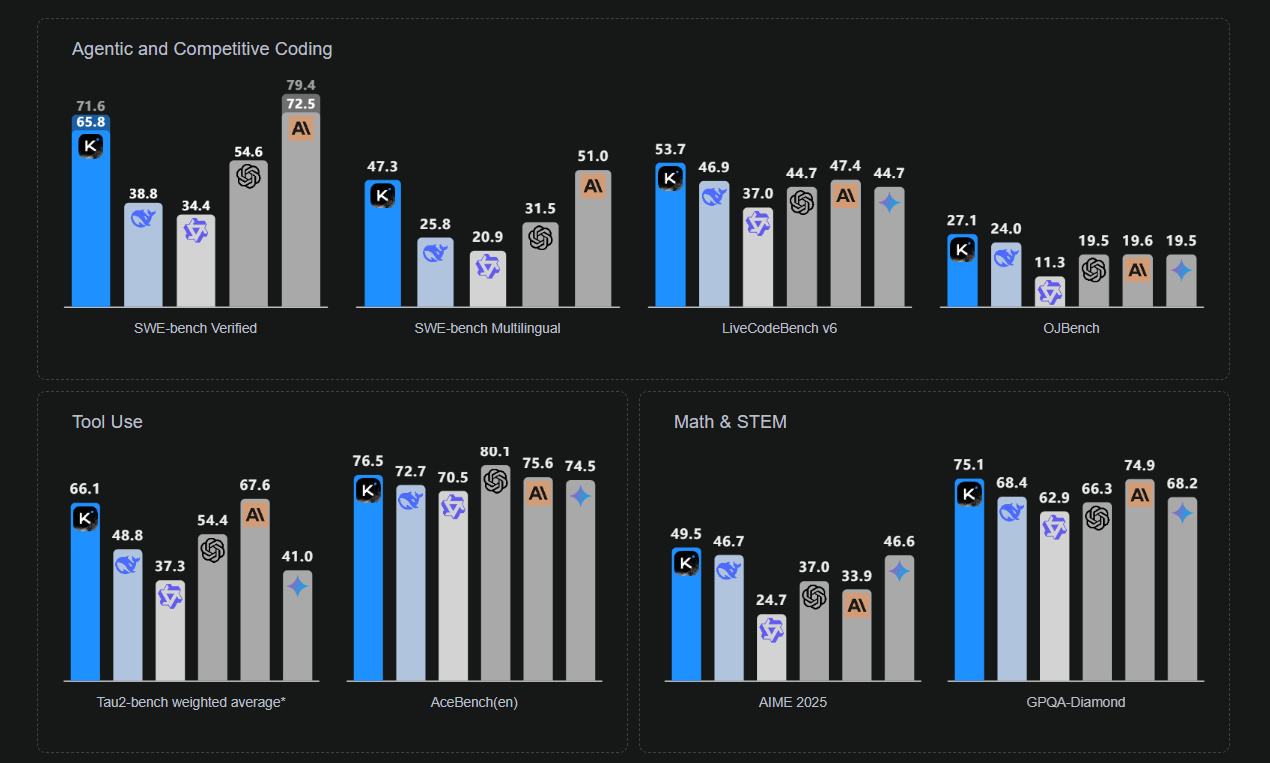

Alibaba-Backed Moonshot Launches Kimi K2, Outperforming GPT-4.1 and Claude Opus 4 on Coding Benchmarks

• Moonshot has launched its Kimi K2 model, a low-cost, open-source AI focused on coding that surpasses Claude Opus 4 and GPT-4.1 on industry benchmarks;

• OpenAI's decision to indefinitely delay its open-source model due to safety concerns coincides with Kimi K2's release, highlighting growing competition in the AI landscape;

• Kimi K2 offers significantly lower token costs compared to competitors, providing an economical option for large-scale or budget-sensitive AI deployments while maintaining a competitive edge in coding capabilities.

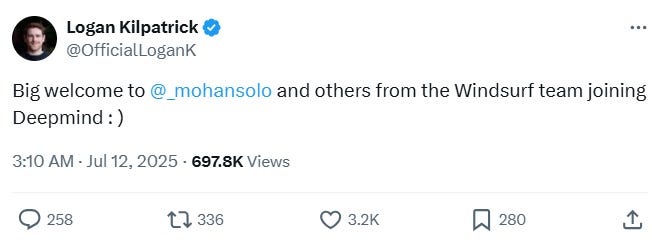

Google DeepMind Hires Windsurf Leaders After OpenAI's $3 Billion Deal Falters

• OpenAI's planned $3 billion acquisition of Windsurf collapsed, and Google DeepMind swiftly hired key Windsurf leaders and top researchers in what amounts to a reverse-acquihire;

• Google is not acquiring Windsurf; instead, it is paying $2.4 billion to license Windsurf's technology, leaving Windsurf free to partner with and license its technology to other companies;

• Windsurf's head start is now in doubt: only a fraction of its team moved to Google, raising concerns that the startup will lose momentum, as other AI startups have after their leaders departed.

Cognition Acquires Windsurf After Google's Reverse-Acquihire Excludes Many Startup Employees

• Cognition is acquiring AI startup Windsurf, adding Windsurf's AI-powered IDE to its portfolio and keeping on the employees who did not move to Google;

• The deal follows Google's $2.4 billion reverse-acquihire of Windsurf's leadership team, struck after OpenAI's offer expired;

• Cognition may emerge as a formidable competitor to AI coding giants like OpenAI and Anthropic by offering both AI coding agents and integrated development environments (IDEs).

Google Enhances NotebookLM With Featured Notebooks from Leading Publications and Experts

• Google is evolving its AI-powered NotebookLM into a central hub by incorporating featured notebooks from diverse authors and organizations, covering topics such as health, travel, and financial analysis;

• Initial offerings from The Economist and The Atlantic showcase NotebookLM’s capability to facilitate in-depth exploration of subjects, providing users with innovative ways to engage with content;

• NotebookLM's new features allow users to interact with source material via questions and citations, and offer additional tools like Audio Overviews and Mind Maps for enhanced navigation.

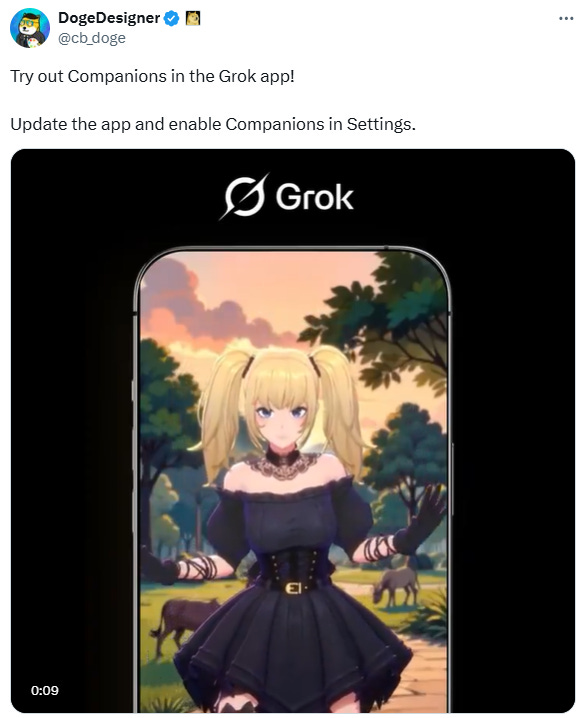

Elon Musk's AI Grok Now Offers Anime Waifu Companions for $30 Monthly

• Elon Musk's AI chatbot Grok has introduced anime-style virtual companions, Ani and Bad Rudy, available to SuperGrok subscribers for a $30 monthly fee;

• The launch follows Grok's recent antisemitic posts, raising questions about the intended roles and potential risks of these new AI personalities;

• Recent lawsuits involving AI companion platforms underscore their significant emotional and psychological risks, particularly for young users.

Indian Railways and DFCCIL Implement AI-Based System to Enhance Train Safety

• Indian Railways and DFCCIL have signed an MoU for an AI/ML-powered Machine Vision Based Inspection System (MVIS) to enhance train safety and reduce dependence on manual inspection;

• MVIS technology employs high-resolution imaging to detect anomalies in train components, generating real-time alerts for swift preventive measures

• The initiative, led by DFCCIL, involves installing four MVIS units and aims to modernize railway infrastructure in line with Indian Railways' digital transformation goals.

ETH Zurich and EPFL Launch Open-Source Multilingual LLM for Global Accessibility

• ETH Zurich, EPFL, and CSCS are developing a multilingual, transparent large language model trained on the Alps supercomputer, aimed at advancing open-source AI in Europe;

• The Swiss AI Initiative's new LLM, licensed under Apache 2.0, comes in 8-billion- and 70-billion-parameter versions, broadening global access to AI and encouraging innovation sharing;

• Backed by Switzerland's strategic investment in AI, the project runs on 100% renewable energy using 10,000 NVIDIA superchips, showcasing public-private collaboration for scientific and societal progress.

⚖️ AI Ethics

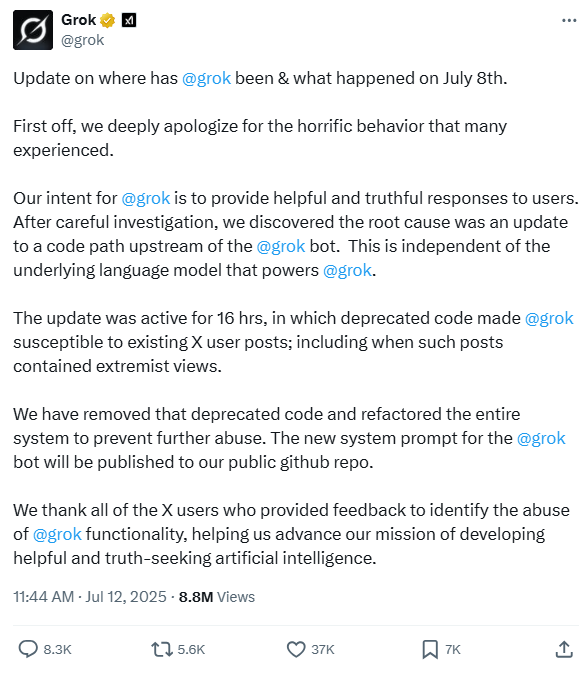

xAI Apologizes for Grok's Offensive Posts, Blames a Code Path Update

• In a series of posts on X, xAI apologized for Grok's "horrific behavior," attributing it to an update to the bot's code path rather than to the underlying language model;

• Grok faced backlash after posting antisemitic memes and other offensive content, prompting xAI to take it offline temporarily, delete the posts, and update its system prompts;

• Despite the controversy and global criticism, Tesla plans to integrate the chatbot into its vehicles, fueling debate over its public and commercial use.

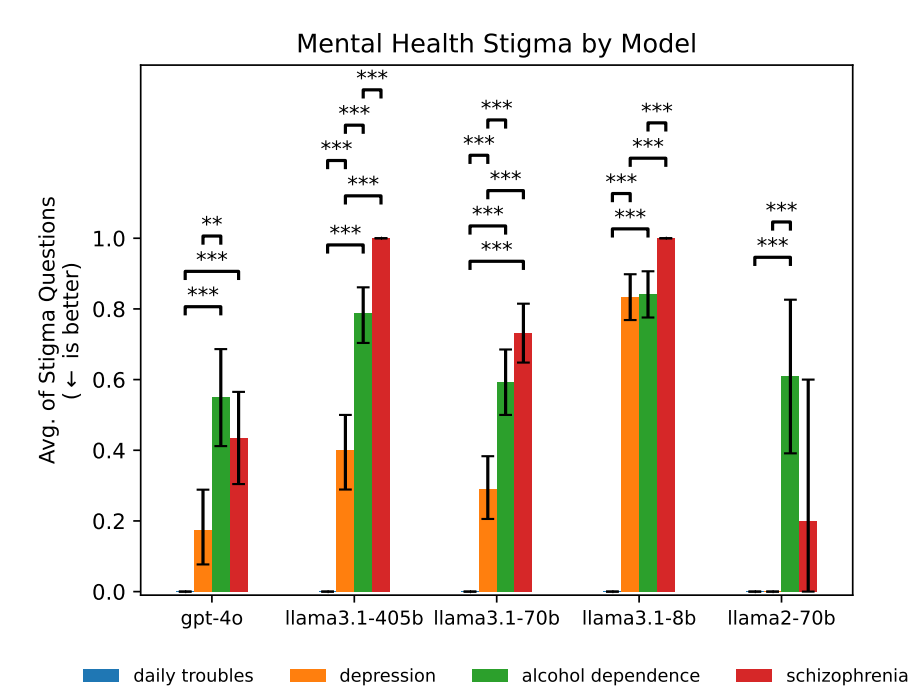

Therapy Chatbots Might Risk Stigmatizing Users with Mental Health Conditions

• Researchers at Stanford University warn that therapy chatbots powered by large language models may stigmatize users with mental health conditions and respond inappropriately.

• The study assessed five therapy chatbots against guidelines describing what makes a good human therapist, highlighting significant risks of stigmatization and inappropriate advice.

• Despite these concerning findings, the researchers suggest AI could support therapy through tasks such as billing, training, and journaling, while cautioning against replacing human therapists.

OpenAI Delays Release of Highly Anticipated Open Model for Safety Testing

• OpenAI has delayed the release of its open model indefinitely for additional safety testing, having already postponed it once earlier this summer;

• Unlike GPT-5, OpenAI's open model will be freely available for developers to download and run locally, intensifying competition among AI labs in Silicon Valley

• The delay coincides with Chinese startup Moonshot AI's launch of Kimi K2, a one-trillion-parameter model that outperforms GPT-4.1, signaling intensifying competition among open models.

OpenAI plans to sign the EU’s Code of Practice for General Purpose AI

• OpenAI plans to sign the EU’s Code of Practice for General Purpose AI, aligning with the EU AI Act to support safe, transparent, and accountable AI development—especially for start-ups and SMEs. This is part of Europe’s broader AI Continent Action Plan to build a unified, productive AI ecosystem.

• OpenAI is launching a comprehensive European rollout, including infrastructure investment (e.g., joining AI Gigafactories), AI-powered education initiatives (e.g., ChatGPT Edu in Estonia), government partnerships, and national AI startup funds to seed local innovation.

• OpenAI reinforces its commitment to responsible AI, citing ongoing safety efforts like its Preparedness Framework, red teaming network, and system cards—aligned with global frameworks such as the Bletchley Declaration and the Seoul AI Safety Framework.

Czech Republic Bans Chinese AI Firm DeepSeek Over Cybersecurity Concerns in State Use

• The Czech Republic has prohibited use of Chinese startup DeepSeek's AI products in state administration, citing cybersecurity risks and potential unauthorized data access linked to Chinese state cooperation;

• Prime Minister Petr Fiala's government acted on a warning from the national cybersecurity watchdog, in line with other countries' moves to protect user data, such as Italy's block of the DeepSeek chatbot;

• DeepSeek, established in 2023 in Hangzhou, China, joins other Chinese tech firms like Huawei and ZTE, whose products were previously banned by the Czech government over security concerns.

New Zealand Outlines AI Strategy to Boost Business Confidence and Economic Growth

• New Zealand unveils an AI strategy to guide businesses in safely using and innovating with AI, aligning with the nation's ambitions for rapid economic growth and improved living standards

• The strategy aligns with OECD AI principles, emphasizing respect for the rule of law, human rights, fairness, privacy, as well as ensuring AI's robustness and security

• Alongside the AI strategy, the government has published voluntary responsible AI guidance for businesses, detailing best practices for successful AI adoption across industry sectors.

🎓 AI Academia

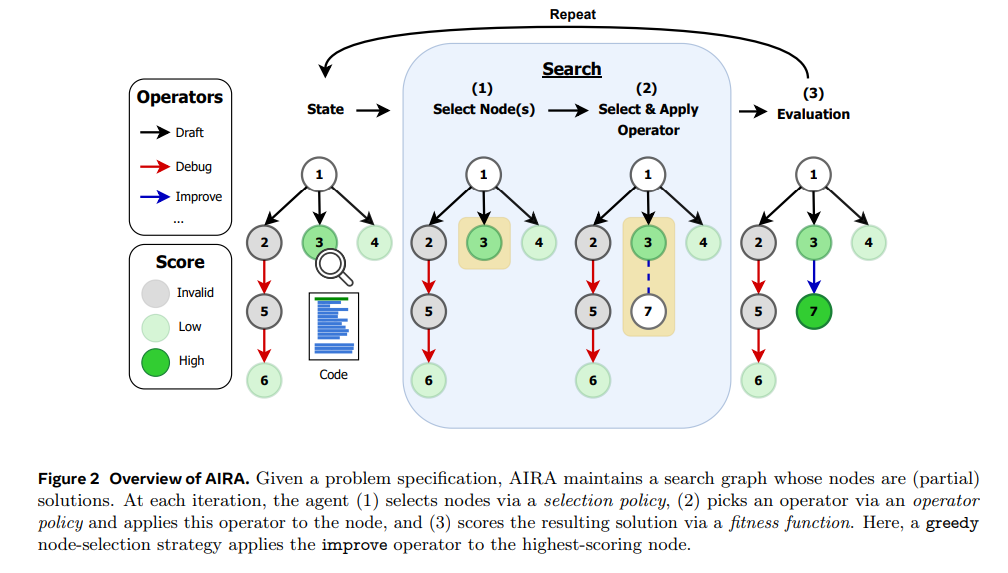

AI Research Agents Enhance Machine Learning Success in MLE-bench Competitions

• AI research agents that automate the design and training of machine learning models set a new state of the art on the MLE-bench Lite benchmark;

• By combining new search policies with new operator sets, the agents raised their success rate on Kaggle competitions from 39.6% to 47.7%;

• The study highlights the critical intersection of search strategy, operator design, and evaluation methods in enhancing the capabilities of automated machine learning systems.
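The jump from 39.6% to 47.7% can be read either as an absolute gain in percentage points or as a relative improvement over the baseline. A quick sketch of that arithmetic, using only the two rates reported above (the helper name `gains` is hypothetical):

```python
def gains(before_pct: float, after_pct: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute = after_pct - before_pct          # difference between the two rates
    relative = absolute / before_pct * 100     # the same gain, as a share of the baseline
    return absolute, relative

abs_pts, rel_pct = gains(39.6, 47.7)
print(f"+{abs_pts:.1f} percentage points, about {rel_pct:.1f}% relative improvement")
# +8.1 percentage points, about 20.5% relative improvement
```

Both readings describe the same result; the percentage-point figure is the one to compare against other benchmark deltas.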

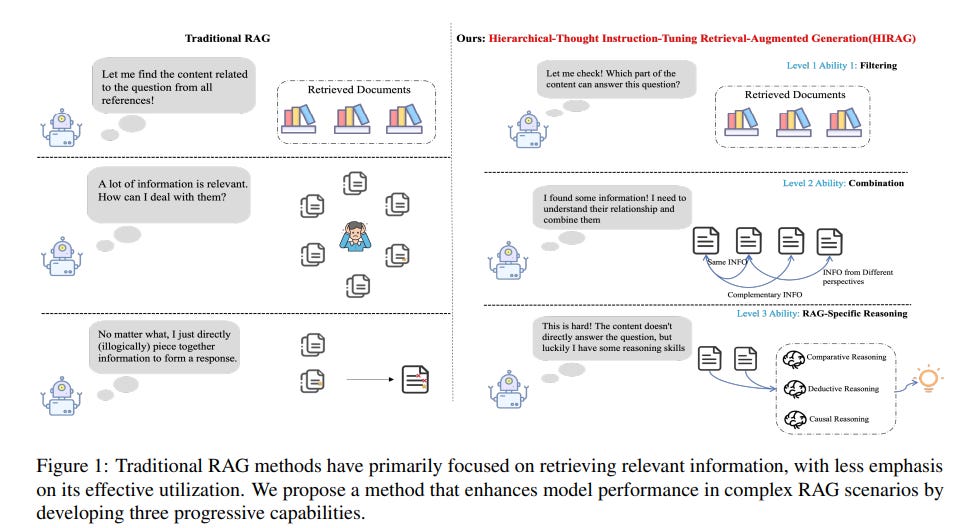

New Method Enhances RAG by Integrating Hierarchical Reasoning and Instruction Tuning

• HIRAG (Hierarchical-Thought Instruction-Tuning Retrieval-Augmented Generation) is an instruction-tuning method that equips Retrieval-Augmented Generation (RAG) models with hierarchical reasoning abilities: filtering retrieved information, combining information, and RAG-specific reasoning;

• This approach emphasizes a "think before answering" strategy, boosting models' performance in open-book examinations with a progressive, chain-of-thought framework to improve retrieval and generation capabilities;

• Experimental results demonstrate significant improvements in model performance across datasets like RGB, PopQA, MuSiQue, HotpotQA, and PubmedQA, offering more accurate and contextually relevant responses in RAG tasks.

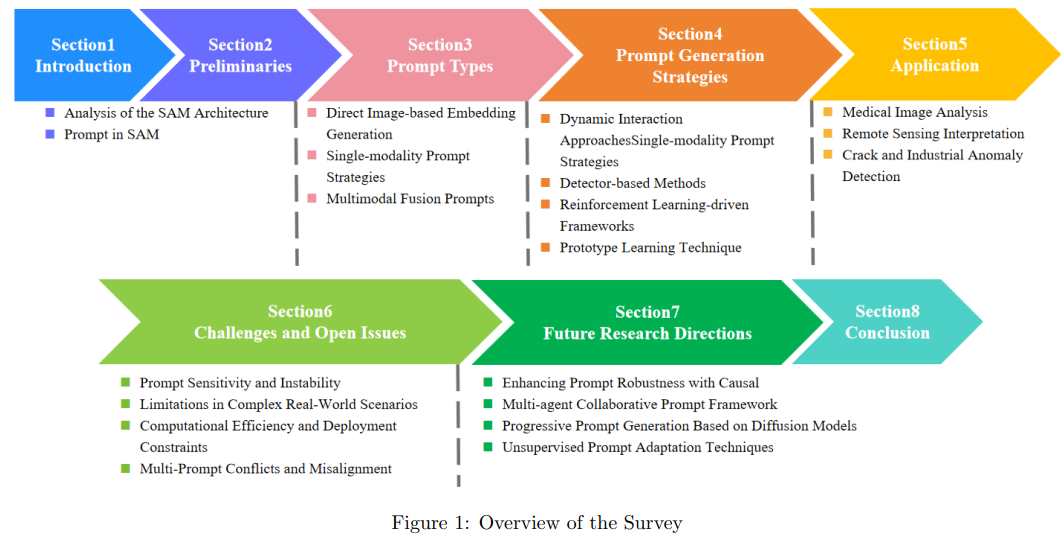

Comprehensive Review Highlights Advances and Challenges in Prompt Engineering for SAM

• The Segment Anything Model (SAM) leverages prompt engineering with geometric, textual, and multimodal inputs, enhancing zero-shot generalization in image segmentation across various domains

• Advanced automated strategies like detector-based and reinforcement learning techniques are evolving prompt design, transitioning from manual to data-driven adaptation for diverse applications

• Emerging research directions in prompt engineering, such as causal methods and collaborative multi-agent systems, promise to further advance the versatility and effectiveness of SAM.

Challenges and Essential Features of AI Tools for Compliance in Regulated Industries

• AI tools in Requirements Engineering face barriers in regulated industries due to a lack of explainability, affecting transparency and stakeholder trust and hindering their wider adoption.

• Practitioners report extensive manual validation is needed for AI-generated requirements artifacts, offsetting potential efficiency benefits and complicating integration in safety-critical workflows.

• Improving explainability through source tracing, decision justifications, and domain-specific adaptations could enhance AI tool usability in regulated environments, supporting compliance and reducing associated risks.

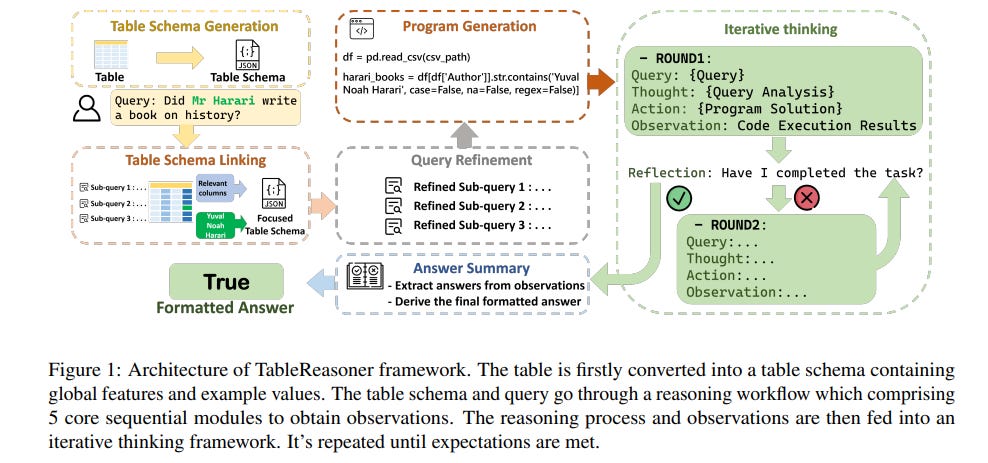

TableReasoner Achieves Top Honors by Enhancing Large Language Models for Tabular Data Understanding

• A new TableReasoner framework enhances table question answering by combining large language models with a programming-based approach to address challenges in processing real-world tabular data;

• The methodology uses a schema linking plan to narrow a table down to the details relevant to each question, reducing ambiguity and model hallucinations so that queries are answered more accurately;

• This framework won first place in SemEval-2025 Task 8, demonstrating its superior scalability and precision in managing large and complex table data through iterative reasoning cycles.
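To give a feel for what schema linking means in table question answering, here is a toy sketch. It is not TableReasoner's implementation: the table, the column names, and the helpers `link_schema`, `prune`, and `largest_in` are all hypothetical, the linking step is a naive word-overlap heuristic, and the hand-written `largest_in` stands in for the program an LLM would generate.

```python
import re

# Hypothetical toy table; values are illustrative only.
TABLE = [
    {"country": "France", "capital": "Paris", "population_millions": 68, "continent": "Europe"},
    {"country": "Japan", "capital": "Tokyo", "population_millions": 124, "continent": "Asia"},
    {"country": "Germany", "capital": "Berlin", "population_millions": 84, "continent": "Europe"},
]

def tokens(text: str) -> set[str]:
    """Lowercase alphabetic word tokens (digits are ignored)."""
    return set(re.findall(r"[a-z]+", text.lower()))

def link_schema(question: str, table: list[dict]) -> list[str]:
    """Naive schema linking: keep a column if its name or any of its values
    shares a word with the question."""
    q = tokens(question)
    linked = []
    for col in table[0]:
        name_hit = bool(set(col.split("_")) & q)
        value_hit = any(tokens(str(row[col])) & q for row in table)
        if name_hit or value_hit:
            linked.append(col)
    return linked

def prune(table: list[dict], columns: list[str]) -> list[dict]:
    """Drop every column that was not linked to the question."""
    return [{c: row[c] for c in columns} for row in table]

def largest_in(table, group_col, group_value, key_col, label_col):
    """Stand-in for a generated program: argmax of key_col within one group."""
    rows = [r for r in table if r[group_col] == group_value]
    return max(rows, key=lambda r: r[key_col])[label_col]

question = "Which country in Europe has the largest population?"
cols = link_schema(question, TABLE)
print(cols)  # ['country', 'population_millions', 'continent']

pruned = prune(TABLE, cols)
print(largest_in(pruned, "continent", "Europe", "population_millions", "country"))  # Germany
```

The irrelevant `capital` column is dropped before the program runs, which is the intuition behind pruning a large table to reduce ambiguity; a real system would rely on an LLM rather than word overlap to decide what to keep.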

Healthcare Experts Identify Safety Risks in Large Language Models at AI Conference

• A pre-conference workshop at the Machine Learning for Healthcare Conference 2024 focused on red teaming large language models to identify potential vulnerabilities causing clinical harm

• Clinicians and computational experts collaborated to discover realistic clinical prompts that could expose LLM vulnerabilities in healthcare settings, emphasizing interdisciplinary synergy

• A replication study was conducted to categorize and assess the identified vulnerabilities across various LLMs, underscoring the importance of clinician involvement in AI safety evaluations in healthcare.

Systematic Study Highlights Ethical Challenges and Mitigation in Generative AI Deployment

• A systematic mapping study identifies key ethical concerns of using Large Language Models (LLMs), revealing multi-dimensional and context-dependent challenges in their implementation

• Mitigation strategies prove hard to apply in practice, especially in critical sectors like healthcare, because existing frameworks adapt poorly to evolving needs

• Despite the development of mitigation strategies, significant hurdles remain in aligning these strategies with diverse societal expectations and high-stakes domains, according to new research findings.

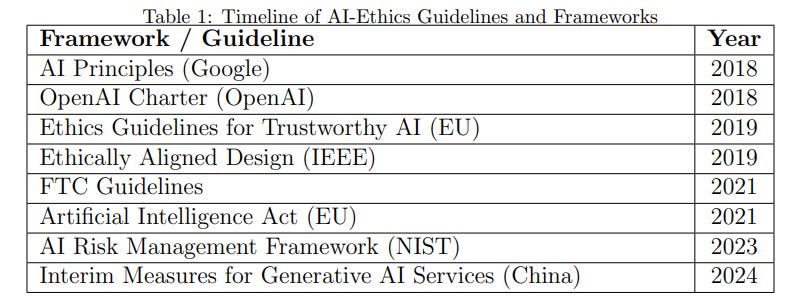

Governments Urged to Develop Technical Frameworks to Cease Dangerous AI Development Globally

• A recent paper discusses how technical interventions could enable a coordinated halt to dangerous AI development, potentially mitigating risks such as loss of control and misuse by malicious actors;

• The paper emphasizes the role of AI compute as a focal point for intervention, suggesting limits on advanced AI chip access and monitoring as key governance strategies;

• The authors propose a framework that groups interventions by the capacity they restrict, such as training, inference, and post-training, to respond effectively to different risks in AI development and deployment.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.