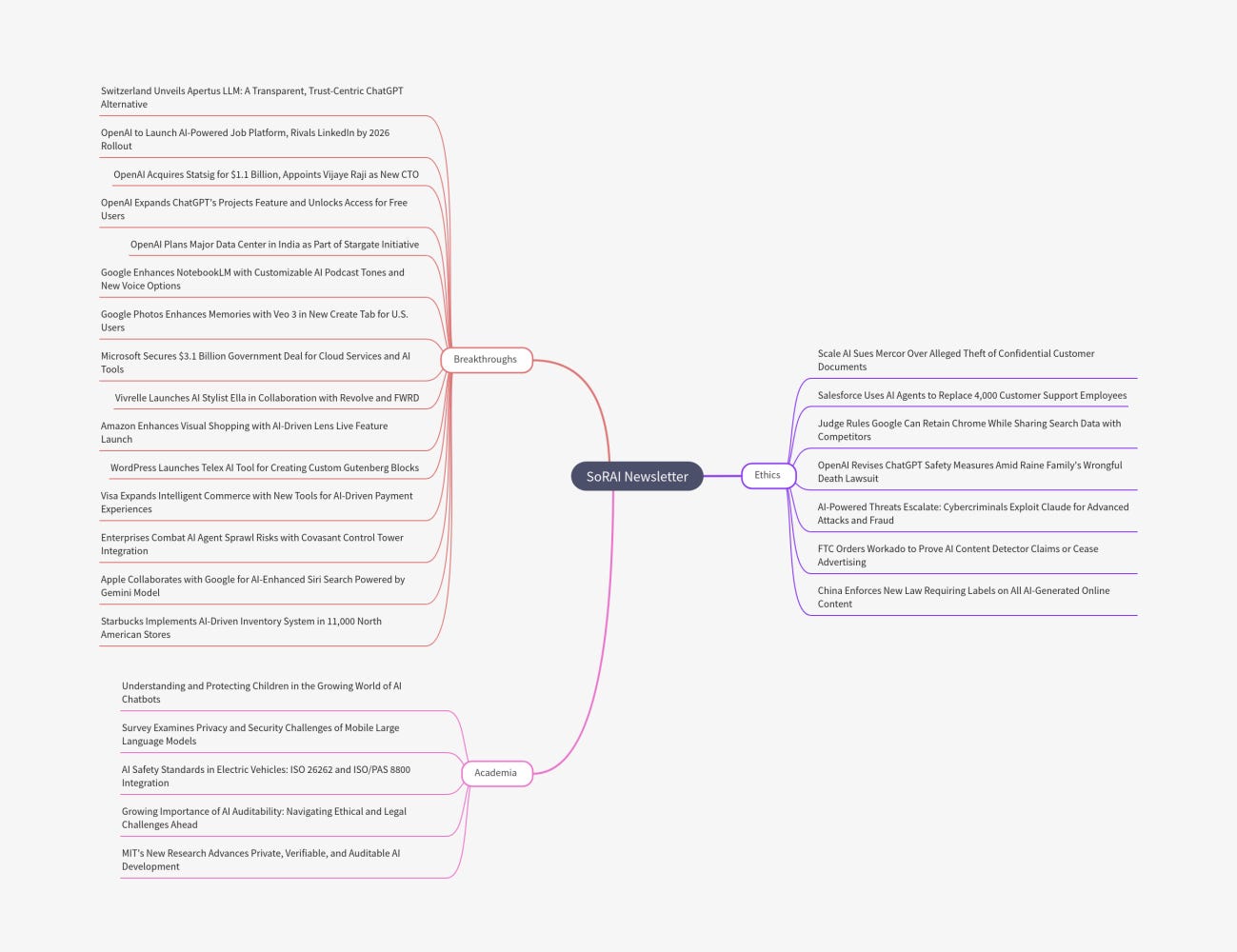

Switzerland has now entered the global AI race with a fully open-source, multilingual model

Switzerland launches ‘Apertus’, a new fully open-source multilingual AI model, amid transparency concerns.

Today's highlights:

You are reading the 125th edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework structured around progressive cognitive levels: starting with knowing and understanding, then using and applying, followed by analyzing and evaluating, and finally creating through a capstone project, with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our AIGP 8-week personalized training program. For customized enterprise training, write to us at [Link].

🔦 Today's Spotlight

Apertus: Switzerland’s Transparent, Multilingual Open-Source LLM

What is Apertus?

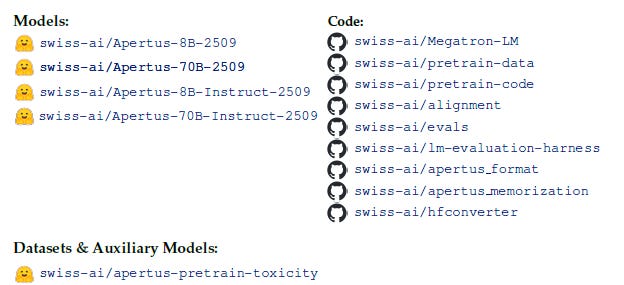

Apertus is a new open-source Large Language Model (LLM) developed as Switzerland’s national alternative to ChatGPT and other AI systems. It was created by a team at Switzerland’s top technical universities (ETH Zurich and EPFL) and the Swiss National Supercomputing Centre (CSCS) with the goal of providing a trustworthy, sovereign, and inclusive AI model for research and industry. The model comes in two sizes – approximately 8 billion and 70 billion parameters – making the larger version one of the biggest fully open models to date. Trained on an extensive dataset of web text, Apertus is intended to be comparable in core capability to leading models (it’s on par with Meta’s LLaMA 3 from 2024) while placing greater emphasis on safety and accessibility. Rather than compete head-on with Big Tech’s ever-growing models, the Swiss project focuses on a robust, transparent system that can be freely used and improved by the community.

Open, Multilingual, and Compliant by Design

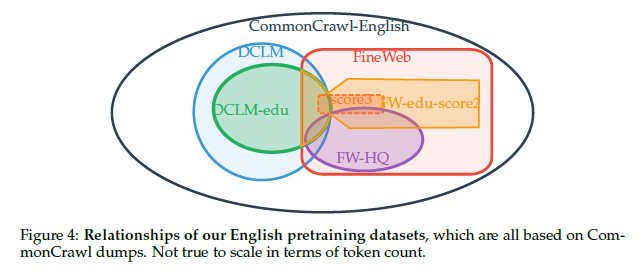

Apertus distinguishes itself through a few key principles: it is fully transparent, massively multilingual, and built for data compliance. All aspects of the model are open – not only are the weights public, but the training code, data processing pipeline, and even intermediate checkpoints have been released for anyone to inspect. The training corpus was sourced entirely from openly available web data, and was extensively filtered to exclude copyrighted material, private personal information, and toxic or non-consensual content. Uniquely, the team applied retroactive filtering: they respected website owners’ opt-out directives (robots.txt) even for data collected from earlier web crawls, ensuring content owners’ rights are honored. By using only public and permissively licensed data, Apertus was designed to meet stringent upcoming rules (the model explicitly aims to comply with the EU AI Act’s data transparency requirements). Another standout feature is the model’s unprecedented multilingual breadth. Whereas previous open models like BLOOM or Qwen covered on the order of 100 languages, Apertus was trained on a staggering 15 trillion tokens spanning 1811 languages, dedicating roughly 40% of its data to non-English sources. This makes Apertus one of the most linguistically inclusive AI models ever, capable of understanding and generating text across hundreds of diverse languages.
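The retroactive opt-out idea can be illustrated with a small sketch. This is not the Apertus data pipeline; it only shows the general pattern of re-checking previously crawled URLs against each site's current robots.txt, using Python's standard `urllib.robotparser`. The record layout, the `filter_crawl` helper, and the `ExampleCrawler` user agent are all hypothetical.

```python
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser


def make_parser(robots_txt: str) -> RobotFileParser:
    """Build a parser from the text of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def filter_crawl(records, current_robots, user_agent="ExampleCrawler"):
    """Keep only records whose URL is allowed by the host's *current* robots.txt.

    records: list of dicts with a "url" key (hypothetical layout).
    current_robots: dict mapping host name -> current robots.txt text.
    """
    parsers = {host: make_parser(txt) for host, txt in current_robots.items()}
    kept = []
    for rec in records:
        host = urlparse(rec["url"]).netloc
        parser = parsers.get(host)
        # Assumption: hosts with no known robots.txt are kept.
        # A stricter policy could drop them instead.
        if parser is None or parser.can_fetch(user_agent, rec["url"]):
            kept.append(rec)
    return kept


old_crawl = [
    {"url": "https://example.org/public/a", "text": "..."},
    {"url": "https://example.org/private/b", "text": "..."},
    {"url": "https://other.net/x", "text": "..."},
]
robots_today = {"example.org": "User-agent: *\nDisallow: /private/\n"}
kept_urls = [r["url"] for r in filter_crawl(old_crawl, robots_today)]
```

The point of the sketch is that the check uses today's opt-out rules, not the rules that were in force when the page was first crawled.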

Technical Innovations

Under the hood, Apertus incorporates several novel techniques to push the performance of open models. One important innovation is the use of the “Goldfish” training loss, an alternative objective that intentionally limits memorization of the training data. By computing the loss on only a random subset of tokens, the Goldfish approach constrains the model’s ability to regurgitate text from its training set verbatim. This helps protect against the model remembering sensitive or copyrighted passages, without significantly hurting its overall learning. The model’s architecture also introduces a new activation function called xIELU, a custom variant of ReLU designed to improve training stability and model quality at scale. Combined with other architectural tweaks (like pre-normalization with RMSNorm and QK-normalization) and a tailored optimizer, these changes enabled training the 70B-parameter model effectively on up to 4096 GPUs. Apertus uses the AdEMAMix optimizer with a Warmup-Stable-Decay schedule, which the developers found crucial for stable convergence at such large model and batch sizes. Another notable feature is Apertus’s support for extra-long context lengths. While pre-trained on the standard 4k tokens, the team later extended its positional encoding to handle sequences up to 65,536 tokens (tens of thousands of words) – allowing the model to read and produce very large documents or conversations. This long-context capability is made feasible with Grouped-Query Attention (GQA), which reduces memory usage at inference time. In summary, through innovations like Goldfish loss to curb memorization, the xIELU activation, a specialized optimizer (AdEMAMix), and long-context support, Apertus showcases cutting-edge techniques in open-model training and scalability.
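To make the Goldfish idea concrete, here is a minimal, framework-free sketch of a loss that skips a subset of tokens. It is not the Apertus training code. It assumes the mask is derived from a hash of the most recent tokens, so that a repeated passage always drops the same positions; the drop rate `k`, the `context` width, and both function names are illustrative choices.

```python
import hashlib


def goldfish_mask(token_ids, k=4, context=3):
    """Return True for positions that contribute to the loss.

    Roughly 1 in k positions is dropped. The decision depends only on a
    hash of the last `context` token ids, so identical passages are
    masked identically every time they appear.
    """
    mask = []
    for i in range(len(token_ids)):
        window = token_ids[max(0, i - context + 1): i + 1]
        digest = hashlib.sha256(",".join(map(str, window)).encode()).digest()
        drop = int.from_bytes(digest[:8], "big") % k == 0
        mask.append(not drop)
    return mask


def goldfish_loss(per_token_losses, token_ids, k=4, context=3):
    """Average the per-token losses over the kept positions only."""
    mask = goldfish_mask(token_ids, k, context)
    kept = [loss for loss, keep in zip(per_token_losses, mask) if keep]
    return sum(kept) / len(kept) if kept else 0.0


ids = [5, 6, 7, 8] * 2           # the same passage seen twice
mask = goldfish_mask(ids)
```

Because the dropped tokens never receive a gradient, the model is never directly trained to reproduce the full passage, which is the intuition behind the reduced verbatim recall described above.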

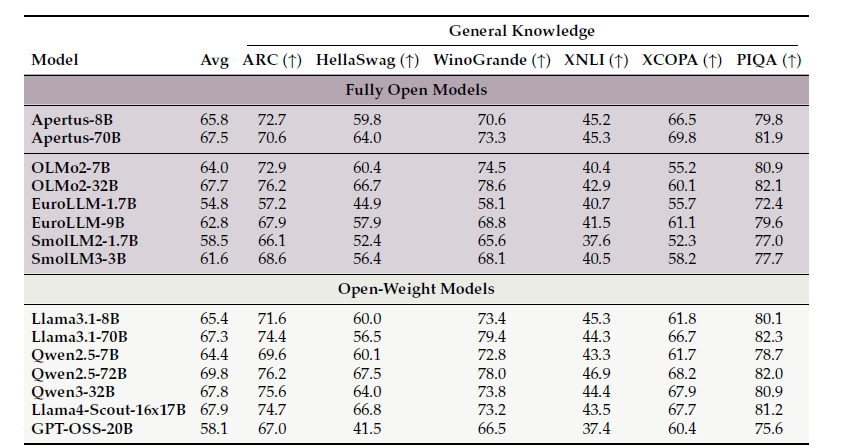
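A Warmup-Stable-Decay schedule, as mentioned in the technical overview, has three phases: the learning rate ramps up, holds at its peak, then falls at the end of training. The sketch below assumes linear warmup and linear decay to zero; the shape of the decay phase and all step counts are assumptions for illustration, not values reported for Apertus.

```python
def wsd_learning_rate(step, total_steps, peak_lr, warmup_steps, decay_steps):
    """Learning rate at `step` under a Warmup-Stable-Decay schedule.

    Assumes linear warmup and linear decay to zero (illustrative only).
    """
    if step < warmup_steps:
        # Warmup: ramp linearly up to the peak.
        return peak_lr * (step + 1) / warmup_steps
    decay_start = total_steps - decay_steps
    if step < decay_start:
        # Stable: hold the peak learning rate.
        return peak_lr
    # Decay: fall linearly to zero over the final `decay_steps` steps.
    remaining = max(total_steps - step, 0)
    return peak_lr * remaining / decay_steps


schedule = [wsd_learning_rate(s, 100, 1.0, 10, 20) for s in range(101)]
```

One practical appeal of this shape is that the stable phase has no fixed end point baked in, so a run can be extended before the final decay is applied.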

Performance and Benchmarks

Despite its focus on compliance and openness, Apertus achieves competitive performance among state-of-the-art models. In evaluations, the Apertus-70B model delivers results on multilingual benchmarks that rival the best fully open models, often matching or exceeding other open LLMs of similar size. The Apertus team reports that their model attained open state-of-the-art performance at 70B, even outperforming other open-weight counterparts (models that release weights publicly) in several tasks. This is especially notable given Apertus’s wide language coverage – it handles an order of magnitude more languages than earlier open models (BLOOM, Aya, Qwen-3, etc.), which gives it an edge in truly global understanding. The project released both base and instruction-tuned versions of the model, and they tested its ability to follow instructions across 94 different languages, demonstrating strong cross-lingual capabilities in following user prompts. Observers have compared Apertus’s overall capability to Meta’s LLaMA 3 (released in 2024). However, the Swiss team emphasizes that they did not aim to outgun Big Tech’s latest models, choosing to “sacrifice the latest frills” in exchange for a safer and more accessible system for scientists and businesses. In practice, researchers and early users have found Apertus to be a solid performer on a wide range of tasks (from general knowledge queries to complex reasoning), while also benefiting from its transparency and the ability to fine-tune or audit it for specific needs.

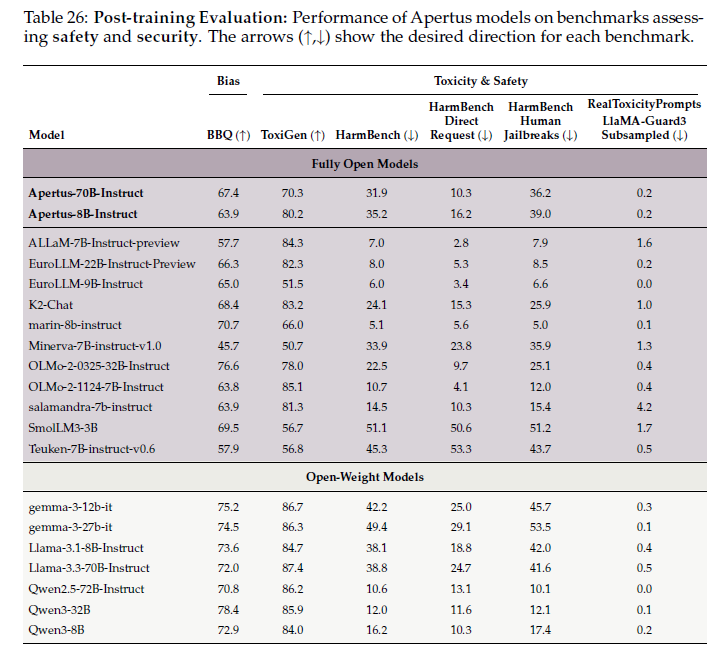

Responsible AI Measures

Apertus was built with extensive responsible AI safeguards from the ground up. A top priority was minimizing the inclusion of sensitive or unethical content in the training data. The developers curated the pre-training corpus to exclude personally identifiable information (PII), explicit or toxic language, and any text with copyright or license restrictions. They leveraged public opt-out signals (like websites’ robots.txt) to remove content where authors indicated they did not consent to data scraping. All post-training (fine-tuning) data underwent similar screening, filtering out entries with non-permissive licenses or disallowed content. By using only consented and open data, the team aimed to reduce the legal and privacy risks often associated with large-scale AI training. To further mitigate privacy leakage, Apertus’s training used the Goldfish loss objective (as noted above) to discourage memorizing exact training examples – this significantly lowers the chance of the model quoting back private or copyrighted text verbatim. Of course, like any large language model, Apertus is not perfect and can still produce errors or problematic outputs. The authors acknowledge that it may hallucinate facts, reflect biases, or generate harmful content if prompted, despite the precautions. Users are advised to treat Apertus’s responses as suggestions rather than absolute truth and to manually verify important or sensitive information. On the policy side, Apertus is released under an Apache 2.0 open-source license with an accompanying Acceptable Use Policy. The Swiss National AI Institute (which oversees Apertus) has also committed to an innovative approach for ongoing privacy compliance: they plan to regularly provide an “output filter” (a set of hashed values) that users can apply to the model’s outputs to automatically remove any personal data that should not be there, reflecting deletion requests and new privacy concerns over time.
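The description of the output filter as "a set of hashed values" suggests a pattern along the following lines. This is only a guess at the general mechanism, not the published filter format: the normalization (lowercasing, stripping punctuation), the use of SHA-256, the phrase length limit, and the `redact` helper are all assumptions.

```python
import hashlib


def sha256_hex(text: str) -> str:
    """Hash a normalized phrase (lowercased, outer punctuation stripped)."""
    normalized = text.lower().strip(".,;:!?\"'")
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def redact(output: str, blocked_hashes: set, max_words: int = 3,
           placeholder: str = "[REDACTED]") -> str:
    """Replace any phrase of up to `max_words` words whose hash is blocked."""
    words = output.split()
    result = []
    i = 0
    while i < len(words):
        matched = False
        # Try the longest candidate phrase first.
        for n in range(min(max_words, len(words) - i), 0, -1):
            candidate = " ".join(words[i:i + n])
            if sha256_hex(candidate) in blocked_hashes:
                result.append(placeholder)
                i += n
                matched = True
                break
        if not matched:
            result.append(words[i])
            i += 1
    return " ".join(result)


# The filter list holds only hashes, never the personal data itself.
blocked = {sha256_hex("Jane Doe")}
cleaned = redact("Contact Jane Doe today", blocked)
```

Distributing hashes rather than plain text means the filter list can be shared publicly without itself exposing the names or details it is meant to suppress.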

🚀 AI Breakthroughs

OpenAI to Launch AI-Powered Job Platform, Rivals LinkedIn by 2026 Rollout

OpenAI announced that it plans to develop an AI-powered hiring platform called the OpenAI Jobs Platform, aiming to launch by mid-2026. This initiative could position OpenAI as a competitor to LinkedIn, despite LinkedIn's ties with OpenAI through mutual backing by Microsoft. The platform intends to leverage AI for optimal job matching, incorporating distinct pathways for small businesses and local governments. Additionally, OpenAI plans to offer certifications through its OpenAI Academy, with a pilot set for late 2025, in collaboration with Walmart. These efforts align with the White House's initiative to broaden AI literacy, amidst broader industry concerns about AI's impact on the job market.

OpenAI Acquires Statsig for $1.1 Billion, Appoints Vijaye Raji as New CTO

OpenAI is set to acquire product testing startup Statsig for $1.1 billion in an all-stock deal, marking one of its largest acquisitions. This move aims to bolster its Applications business, with Statsig founder Vijaye Raji stepping in as CTO of Applications. The acquisition aligns with leadership changes, as Kevin Weil moves to lead a new scientific initiative, and Srinivas Narayanan transitions to CTO of B2B applications. Statsig will maintain independent operations pending regulatory approval.

OpenAI Expands ChatGPT's Projects Feature and Unlocks Access for Free Users

OpenAI has expanded the ChatGPT Projects feature to all users, including those on the free tier, although with lower rate limits compared to paid subscribers. Initially launched in December 2024, Projects allows users to create segregated chat folders, upload files, and maintain continuous memory within a specific topic without repeating instructions. Free users can upload a maximum of five files per project, while Plus and Pro subscribers can upload more. Additionally, OpenAI introduced a new memory-control feature that enables users to decide if a project can access information from outside chats. The update is available on the website and Android app, with an iOS rollout expected soon.

OpenAI Plans Major Data Center in India as Part of Stargate Initiative

OpenAI is reportedly exploring partnerships to establish a data center in India with a capacity of at least 1 gigawatt, according to Bloomberg News. Although details on the location and timeline remain unclear, this move would significantly advance OpenAI's AI infrastructure, branded Stargate, in Asia. This development comes as the company, backed by Microsoft, is setting up its first office in New Delhi later this year as part of its expansion in India, its second-largest market by user base. OpenAI has not yet commented on the data center plans, and Reuters has not independently verified the report.

Google Enhances NotebookLM with Customizable AI Podcast Tones and New Voice Options

Google has enhanced its AI note-taking and research assistant, NotebookLM, by allowing users to customize the tone of its Audio Overviews, a feature that generates podcasts with virtual AI hosts summarizing and discussing shared documents. Users can now choose from formats like "Deep Dive," "Brief," "Critique," and "Debate" to better suit their needs and also adjust the podcast length. Additionally, new voices have been added to enhance the AI podcast experience. This upgrade follows the recent introduction of Video Overviews and the availability of standalone NotebookLM apps for Android and iOS. New features are available in all languages and accessible to all users this week.

Google Photos Enhances Memories with Veo 3 in New Create Tab for U.S. Users

Google Photos has introduced a new tool in its Create tab, now available in the U.S., that enhances the Photo to Video feature using the advanced video generation model, Veo 3. This update allows users to convert still images into higher-quality video clips. The Create tab serves as a hub for creative tools, enabling users to combine features, such as restyling photos with Remix before turning them into unique videos, facilitating a more dynamic and personalized photo editing experience.

Microsoft Secures $3.1 Billion Government Deal for Cloud Services and AI Tools

Microsoft has committed to providing the U.S. General Services Administration (GSA) with potential savings of $3.1 billion on cloud services over the next year, as part of a broader OneGov strategy aimed at reducing government spending. This includes discounts on Microsoft Office, Azure, Dynamics 365, and Sentinel, with free access to the Copilot AI assistant for certain users. The deal aligns with efforts to enhance efficiency across federal agencies and reflects Microsoft's strong partnership with the government. The savings are part of a three-year plan, projected to ultimately deliver over $6 billion in total reductions by September 2026.

Vivrelle Launches AI Stylist Ella in Collaboration with Revolve and FWRD

Vivrelle, a luxury membership platform, has unveiled an AI-driven personal styling tool named Ella in collaboration with fashion retailers Revolve and FWRD. This advanced tool suggests personalized fashion choices by allowing users to request outfit ideas for specific occasions or travel, seamlessly integrating shopping options from Vivrelle, FWRD, and Revolve into a unified checkout experience. The launch highlights the fashion industry's growing reliance on AI to enhance customer personalization, further demonstrated by the trio's previous AI venture, Complete the Look, which provided last-minute fashion recommendations at checkout. This move represents a significant step in merging rental, resale, and retail experiences within a single fashion ecosystem.

Amazon Enhances Visual Shopping with AI-Driven Lens Live Feature Launch

Amazon has unveiled Lens Live, an AI-powered enhancement to its Amazon Lens visual search feature, allowing users to identify products in real-time using their smartphone cameras. This update integrates with Amazon's AI assistant, Rufus, for detailed product insights and taps into AI tools like machine learning models powered by Amazon SageMaker and AWS-managed OpenSearch. Initially available to millions of iOS users in the U.S., Lens Live enables shoppers to compare and find products on Amazon that they encounter in physical stores, augmenting the company's growing suite of AI-driven shopping aids, including personalized prompts and AI-enhanced reviews. The expansion to other markets remains unspecified.

WordPress Launches Telex AI Tool for Creating Custom Gutenberg Blocks

WordPress has unveiled an early version of an AI development tool named Telex, presented at the WordCamp US 2025 conference. Likened to "V0 or Lovable" by WordPress's co-founder, the tool enables users to generate Gutenberg blocks—modular elements of WordPress sites—through prompt-based commands. Although considered a prototype, Telex is designed to democratize website creation by making coding more accessible. The service is accessible at telex.automattic.ai and returns content blocks as .zip files for WordPress sites. Telex emerges amid WordPress's ongoing legal dispute with WP Engine over trademark issues, with the case still progressing through the legal system.

Visa Expands Intelligent Commerce with New Tools for AI-Driven Payment Experiences

Visa has expanded its Visa Intelligent Commerce initiative, introducing the Model Context Protocol (MCP) Server and piloting the Visa Acceptance Agent Toolkit to enhance agentic commerce experiences. These developments allow AI agents to securely interact with Visa's network using its APIs, streamlining the process for developers and enabling rapid prototype development. The MCP Server serves as a secure integration layer, simplifying API interactions, while the Acceptance Agent Toolkit requires no coding, using plain language prompts to facilitate commerce tasks. This progress aims to harness AI for secure and efficient commerce, broadening Visa's technological reach.

Enterprises Combat AI Agent Sprawl Risks with Covasant Control Tower Integration

Covasant has launched the AI Agent Control Tower, a centralized platform designed to manage and govern AI agents across diverse technological environments, addressing issues such as agent sprawl, which poses risks to business resilience and security. The Control Tower offers real-time insights into agent activity and performance, ensuring compliance with industry standards and facilitating seamless integration with enterprise risk management frameworks. Challenges like skyrocketing AI costs, technical debt, and misleading outputs are mitigated by its policy guardrails, output validation, and regulatory adherence, making AI operations more secure, efficient, and business-aligned.

Apple Collaborates with Google for AI-Enhanced Siri Search Powered by Gemini Model

Apple is reportedly developing an AI-powered search feature for Siri called "World Knowledge Answers," which could involve collaboration with Google, according to Bloomberg. This feature may employ a custom Gemini AI model to provide users with AI-generated summaries from the web, incorporating multimedia elements. The initiative is part of Apple's broader effort to upgrade Siri, including capabilities that use personal data to execute on-screen actions. Apple has reportedly reached a formal agreement to test a Google AI model for Siri's summaries and is evaluating other AI models for this effort. The revamped Siri is expected to launch alongside iOS 26.4 around March.

Starbucks Implements AI-Driven Inventory System in 11,000 North American Stores

Starbucks is deploying a new AI-based inventory counting system across its more than 11,000 company-owned stores in North America by the end of September. Utilizing handheld tablets equipped with advanced AI technology from NomadGo, the system is designed to expedite inventory checks and ensure consistent stock availability for items like cold foam and oat milk. This initiative is part of Starbucks' broader strategy to enhance its supply chain efficiency, complementing other technological implementations such as a virtual assistant for workers and a customer order sequencing tool.

⚖️ AI Ethics

Scale AI Sues Mercor Over Alleged Theft of Confidential Customer Documents

Scale AI has filed a lawsuit against its former employee, Eugene Ling, and rival company Mercor, alleging the theft of over 100 confidential documents related to customer strategies. The lawsuit accuses Ling of breaching his contract and claims he attempted to pitch Mercor's services to one of Scale's major clients, referred to as "Customer A," before his official departure. While Mercor's co-founder acknowledges Ling's possession of old documents, he denies utilizing Scale's trade secrets and has proposed their destruction. This legal action highlights Scale's concerns over Mercor's competitive threat, particularly given the recent $14.3 billion investment in Scale by Meta, despite Mercor's rising prominence in the AI data training market.

Salesforce Uses AI Agents to Replace 4,000 Customer Support Employees

Salesforce CEO Marc Benioff revealed on a podcast that the company has used AI agents to significantly alter its customer support workforce, reducing headcount from 9,000 to 5,000. While some initial interpretations suggested 4,000 layoffs, Salesforce clarified this as a strategic "rebalance," with many employees redeployed into sales and other areas as they enhance their use of AI. Benioff emphasized the AI integration as an augmentation rather than a replacement of human roles, highlighting its influence in efficiently handling customer interactions and sales opportunities. This development showcases Salesforce's rapid adoption of AI to enhance business operations and prompts questions regarding the future implementation of AI across its broader operations.

Judge Rules Google Can Retain Chrome While Sharing Search Data with Competitors

In a landmark antitrust ruling, a DC District Court judge determined that Google does not have to sell its Chrome browser despite previously violating the Sherman Antitrust Act, but must share some search data with competitors and refrain from exclusive distribution deals. This decision marks a significant defeat for the DOJ, which had sought to break Google's hold on the online search market through more drastic measures, such as forcing Chrome's sale. Although the case parallels past actions against tech giants like Microsoft, the ruling allows Google to continue paying for pre-installations and default placements on browsers like Apple's Safari, albeit under stricter conditions. The ruling, however, still supports limited access for rivals to certain search data, aiming to boost competition. The ongoing legal proceedings could see further changes if appealed, potentially reaching the Supreme Court.

OpenAI Revises ChatGPT Safety Measures Amid Raine Family's Wrongful Death Lawsuit

OpenAI plans to enhance safety measures for its ChatGPT platform following tragic incidents, including the suicide of a teenager, Adam Raine, who discussed self-harm with the AI. The company intends to redirect sensitive conversations to advanced reasoning models like GPT-5 and introduce parental controls to manage how AI interacts with younger users and notify parents of distress signals. These moves come amidst criticism from legal representatives of victims and experts who argue that OpenAI's response to inherent safety risks has been insufficient.

AI-Powered Threats Escalate: Cybercriminals Exploit Claude for Advanced Attacks and Fraud

A recent Threat Intelligence report from Anthropic highlights the growing threat of cybercrime facilitated by AI, specifically the misuse of AI models like Claude. The report details several cases where AI has been leveraged by criminals, including a large-scale extortion operation using modified AI-generated ransomware, North Korean operatives fraudulently securing jobs at U.S. firms, and the sale of AI-generated ransomware-as-a-service. These incidents reflect a troubling trend where AI lowers the expertise needed for sophisticated cyberattacks, making defense harder. In response, Anthropic says it has enhanced detection measures and shared its findings with authorities to mitigate future threats.

FTC Orders Workado to Prove AI Content Detector Claims or Cease Advertising

The Federal Trade Commission (FTC) has finalized an order against Workado, LLC, requiring the company to cease advertising its AI content detection products' accuracy unless supported by reliable evidence. Workado's AI Content Detector, intended to differentiate between AI-generated and human-written content, was criticized by the FTC for being trained primarily on academic texts, contradicting claims of broad accuracy. Under the order, Workado must substantiate performance claims, inform consumers about the FTC settlement, and submit compliance reports annually for three years. The FTC's final decision followed their unanimous approval, responding to two public comments on the proposed order.

China Enforces New Law Requiring Labels on All AI-Generated Online Content

China has implemented a new regulation requiring all AI-generated media to include labels, marking a significant effort to combat misinformation, fraud, and copyright violations online. Effective from September 1, the law mandates visible labels and embedded metadata on AI-created text, images, video, and audio. Major platforms like WeChat and Douyin have quickly adapted to comply. This move is part of China's broader Qinglang campaign to enhance digital oversight, demonstrating Beijing's increasing control over online content, while prompting speculation that similar measures could be adopted by other countries amid growing concerns about unlabelled AI-generated material.

🎓 AI Academia

Understanding and Protecting Children in the Growing World of AI Chatbots

A report from July 2025 highlights the increasing role of AI chatbots in children's lives, showing they are being used for learning, advice, and companionship. While these chatbots offer benefits like 24/7 learning support and a non-judgmental space, there are significant risks, such as exposure to inaccurate or inappropriate content. Vulnerable children, in particular, may be more likely to engage with chatbots as companions, potentially leading to reliance on them over human interactions. The report calls for urgent coordinated action from stakeholders to address these issues and ensure children can use these tools safely, as current regulations have not kept up with technological advancements.

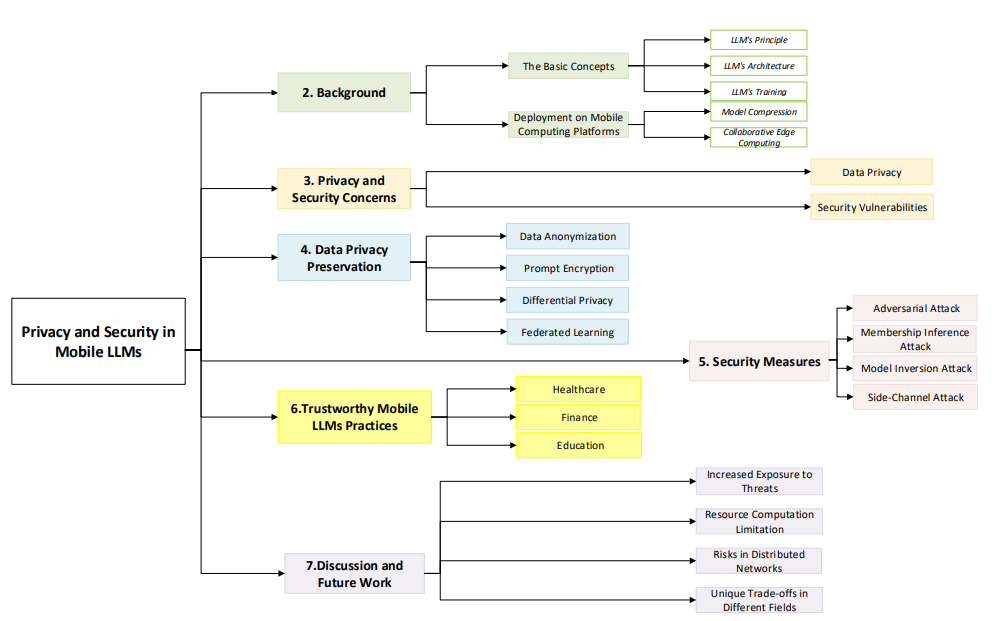

Survey Examines Privacy and Security Challenges of Mobile Large Language Models

The IEEE Internet of Things Journal recently published a survey examining the privacy and security challenges associated with deploying Large Language Models (LLMs) on mobile and edge devices. These models, which enhance real-time applications in sectors like healthcare, finance, and education, face issues such as adversarial attacks and data leaks due to their resource-intensive nature. The survey categorizes existing solutions like differential privacy and federated learning, while also proposing future directions to develop robust and efficient mobile LLM systems that better protect user data and ensure privacy compliance.

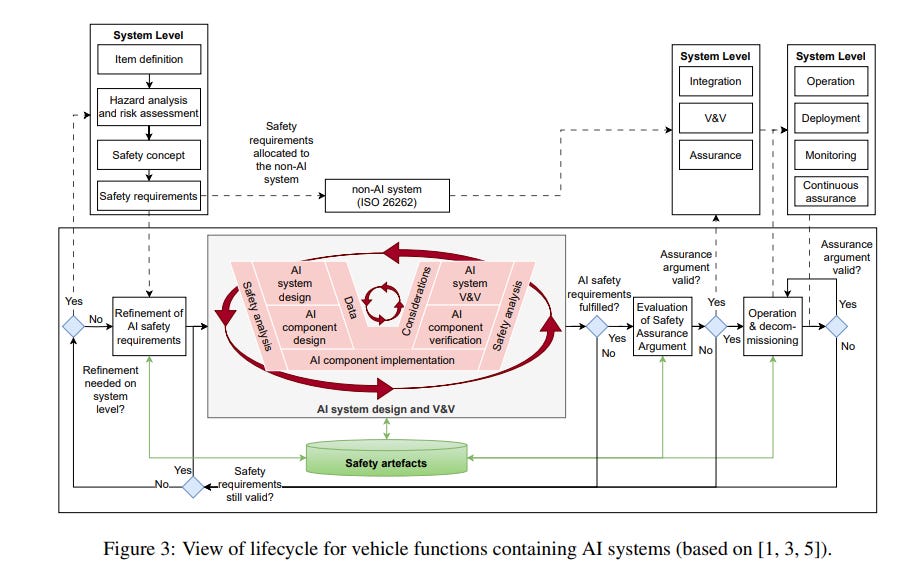

AI Safety Standards in Electric Vehicles: ISO 26262 and ISO/PAS 8800 Integration

At the Electric Vehicle Symposium 38 (EVS38) in Göteborg, Sweden, a study was presented addressing the challenges of integrating AI technology into electric vehicles (EVs) for safety assurance. The focus was on combining ISO 26262, a standard for functional safety in vehicles, with the new ISO/PAS 8800 to provide a framework for the independent assessment of AI components, specifically in State of Charge (SOC) battery estimation. The research emphasized the need for evolving safety standards due to AI's black-box nature and data dependency, and proposed robustness testing through fault injection experiments to evaluate resilience against input variations.

Growing Importance of AI Auditability: Navigating Ethical and Legal Challenges Ahead

The concept of AI auditability is gaining traction as a critical component for ensuring that artificial intelligence systems adhere to ethical, legal, and technical standards. According to recent research, auditability involves an independent evaluation of AI processes to assess compliance and is increasingly being formalized through regulatory frameworks like the EU AI Act. These frameworks call for comprehensive documentation and risk assessments, yet challenges persist due to technical opacity and inconsistent practices. The experts stress the need for international regulations, standardized tools, and multi-stakeholder collaboration to build a robust AI audit ecosystem and emphasize that auditability should be integrated into AI governance to guarantee systems that are not only functional but also aligned with societal values.

New Research Advances Private, Verifiable, and Auditable AI Development

In a recent doctoral thesis, a comprehensive framework for creating AI systems that prioritize privacy, verifiability, and auditability was proposed. This research highlights the necessity for AI technologies that not only perform complex tasks but also maintain transparency and privacy safeguards, aiming to balance innovation with ethical accountability. The thesis underscores the pivotal role of these systems in ensuring that AI advancements are responsibly developed and managed.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.