So it begins: ChatGPT will soon allow erotica for verified adults, says OpenAI CEO

In a bold yet controversial move, OpenAI will soon permit erotic chats on ChatGPT for verified adults, with CEO Sam Altman defending the change as a matter of treating adults like adults despite the pushback.

Today’s highlights:

OpenAI will allow erotic conversations on ChatGPT starting December 2025, but only for users who verify they are 18 or older. CEO Sam Altman defended this as part of a “treat adults like adults” approach, following earlier restrictions imposed after mental health incidents, including a teen suicide linked to ChatGPT. The policy includes age-gating and retains strict safeguards for minors and at-risk users. Altman noted that the company has since mitigated serious safety risks through tools like parental controls and specialized reasoning models. The adult-oriented mode will be walled off, and OpenAI maintains that it won’t relax safety measures around mental health crises or illegal/harmful behavior.

The shift has sparked strong reactions. Critics, including safety advocates and lawmakers, fear exploitation, synthetic intimacy, and failure of age verification. Experts warn of possible addiction, emotional dependency, and distorted expectations of relationships. Public figures like Mark Cuban and groups like NCOSE have condemned the decision, citing risks to teens and urging regulatory oversight. However, some in the tech community support the move as recognition of adult users’ needs and a response to growing market demand. Altman argued OpenAI should not act as “moral police,” emphasizing freedom within safe boundaries. Financial pressures and competition from unfiltered AI platforms likely influenced the decision, with Altman defending it as both pragmatic and mission-aligned.

You are reading the 137th edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework structured around progressive cognitive levels: starting with knowing and understanding, then using and applying, followed by analyzing and evaluating, and finally creating through a capstone project, with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our AIGP 8-week personalized training program. For customized enterprise training, write to us at [Link].

🚀 AI Breakthroughs

Windows 11 Updates Transform Every PC Into an AI-Powered Experience With Copilot

Microsoft has unveiled new AI-centric updates to Windows 11 that position Copilot as an integral part of how users interact with their PCs. The updates include Copilot Voice and Copilot Vision, which aim to simplify tasks through natural-language input. With the “Hey Copilot” wake phrase, users can talk to their PCs by voice, while Copilot Vision offers guided support by analyzing on-screen content. The updates extend these AI capabilities to every Windows 11 device, with features like desktop and app sharing, enhanced taskbar interaction, and tools for easier multitasking. Microsoft frames the release as part of its commitment to secure, user-friendly AI experiences on its latest operating system.

Microsoft’s MAI-Image-1 Model to Feature Soon on Copilot and Bing

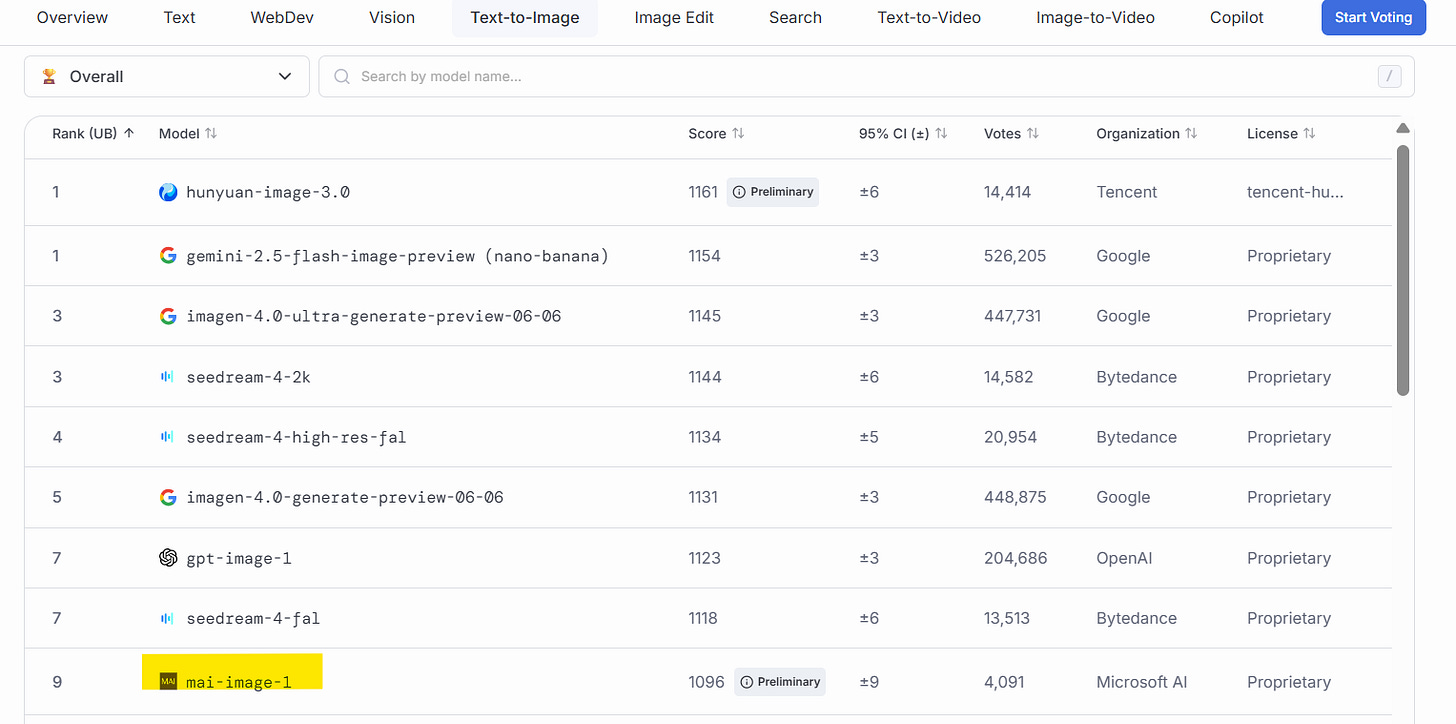

Microsoft unveiled its first in-house image generation model, MAI-Image-1, which is set to come to Copilot and Bing Image Creator. The model, ranked #9 on LMArena, aims to stand out by avoiding repetitive, generically stylized outputs, and it excels at rendering photorealistic landscapes. Despite its smaller size, it competes closely with OpenAI’s and Google’s models on tasks involving complex lighting and realistic shadows. MAI-Image-1 is part of Microsoft’s broader AI strategy, which includes models like MAI-Voice-1 and the Phi language models, even as the company continues to support OpenAI’s work.

Google Unveils Veo 3.1 with Enhanced Audio, Editing Features, and Video Integration

Google has unveiled Veo 3.1, an enhanced version of its video model featuring improved audio output, granular editing controls, and more realistic image-to-video rendering. Building on the features of May’s Veo 3 release, Veo 3.1 lets users seamlessly add objects to video clips and will soon support object removal in the Flow editor. The model is being integrated into Google’s video editor Flow, the Gemini app, and the Vertex AI and Gemini APIs; Flow alone has been used to create more than 275 million videos since its launch.

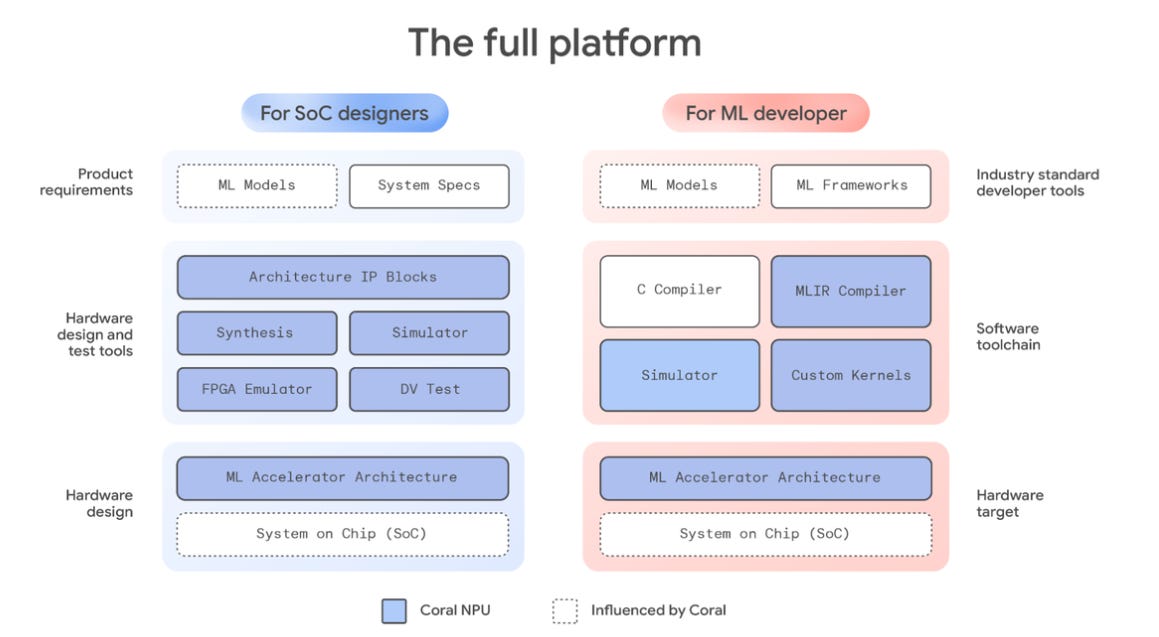

Coral NPU Advances Personal Edge AI, Overcoming Key Challenges of Privacy and Power

Generative AI is evolving beyond cloud-based models to become an integral part of our personal devices, tackling the challenge of operating sophisticated AI on battery-limited edge devices to provide seamless, private assistance. Addressing issues like computing power limits, processor fragmentation, and user privacy concerns, the newly launched Coral NPU platform aims to facilitate this transition. Co-designed with Google Research and DeepMind, Coral NPU offers an AI-first hardware solution for developing energy-efficient, always-on applications, making it feasible for developers to create personal AI experiences directly on wearable tech.

DeepSomatic AI Model Improves Genetic Variant Detection in Cancer Research

DeepSomatic, a machine learning model developed by Google Research in collaboration with UC Santa Cruz and Children’s Mercy, accurately identifies genetic variants in cancer cells. Using convolutional neural networks, the tool distinguishes inherited variants from somatic mutations acquired by the tumor, and it performs well on difficult cases such as pediatric leukemia and glioblastoma. DeepSomatic outperformed existing methods, especially at identifying insertions and deletions, and both the model and its comprehensive training dataset have been publicly released to support cancer research worldwide.

AI Model Identifies Novel Method to Enhance Immune Response in Specific Tumor Environments

A new generative AI model, C2S-Scale 27B, has identified a potential drug combination that boosts the immune response specifically in a context with low interferon levels, demonstrating an emergent capacity for conditional reasoning. After simulating the effects of more than 4,000 drugs in distinct immune contexts, the model predicted that the CK2 inhibitor silmitasertib would significantly increase antigen presentation when combined with low-dose interferon, an effect not previously reported in the literature. Laboratory experiments confirmed the prediction, showing a 50% increase in antigen presentation and pointing to a promising route toward combination therapies that make tumors more visible to the immune system. The result underscores the potential of large-scale AI models to run high-throughput virtual screens and generate novel, biologically grounded hypotheses, offering a new blueprint for biological discovery and therapeutic development.
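The core idea of the screen, looking for drugs whose predicted effect depends on the immune context, can be sketched as below. This is a toy illustration, not the C2S-Scale pipeline: the `predict` callable, the context labels, and all scores are hypothetical stand-ins for the model’s actual predictions.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical predictor: (drug, context) -> predicted change in antigen presentation.
Predictor = Callable[[str, str], float]


def context_split_screen(
    drugs: List[str],
    predict: Predictor,
    primed_context: str,
    neutral_context: str,
    top_k: int = 3,
) -> List[Tuple[str, float]]:
    """Rank drugs by how much more they help in the primed context than in the neutral one."""
    scored = [
        (drug, predict(drug, primed_context) - predict(drug, neutral_context))
        for drug in drugs
    ]
    # A large positive gap means the effect is context-specific.
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Made-up scores standing in for model predictions, for illustration only.
TOY_SCORES: Dict[Tuple[str, str], float] = {
    ("drug_a", "low_interferon"): 0.50, ("drug_a", "no_interferon"): 0.05,
    ("drug_b", "low_interferon"): 0.30, ("drug_b", "no_interferon"): 0.28,
    ("drug_c", "low_interferon"): 0.10, ("drug_c", "no_interferon"): 0.00,
}

hits = context_split_screen(
    ["drug_a", "drug_b", "drug_c"],
    lambda drug, ctx: TOY_SCORES[(drug, ctx)],
    primed_context="low_interferon",
    neutral_context="no_interferon",
    top_k=2,
)
print([drug for drug, _ in hits])  # ['drug_a', 'drug_c']
```

Note that the hypothetical `drug_b` scores well in both contexts and is therefore ranked low: the screen rewards context dependence, not raw effect size.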

Google’s Gemini Enhances Gmail with AI-Assisted Scheduling for Seamless Meetings

Google is enhancing Gmail’s scheduling capabilities with a new feature powered by its Gemini AI, which aims to simplify meeting coordination by suggesting suitable time slots based on users’ Google Calendar and email context. This “Help me schedule” tool, appearing when time coordination is detected in emails, allows users to customize and insert suggested meeting times directly into messages, seamlessly updating both parties’ calendars upon selection. Initially, this feature will be available for scheduling between two individuals and will progressively roll out to eligible Google Workspace users starting mid-October 2025.
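At its simplest, suggesting meeting times means finding open gaps in a calendar. The sketch below is an illustration under stated assumptions, not Gemini’s method: the `free_slots` helper, the working hours, and the busy intervals are hypothetical, and the real feature also draws on email context.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Interval = Tuple[datetime, datetime]


def free_slots(busy: List[Interval], day_start: datetime, day_end: datetime,
               duration: timedelta) -> List[Interval]:
    """Return gaps of at least `duration` between busy intervals, within working hours."""
    slots: List[Interval] = []
    cursor = day_start  # earliest moment not yet known to be busy
    for start, end in sorted(busy):
        gap_end = min(start, day_end)
        if gap_end - cursor >= duration:
            slots.append((cursor, gap_end))
        cursor = max(cursor, end)  # also handles overlapping meetings
        if cursor >= day_end:
            return slots
    if day_end - cursor >= duration:
        slots.append((cursor, day_end))
    return slots


day = datetime(2025, 10, 20)
busy = [
    (day.replace(hour=10), day.replace(hour=11)),
    (day.replace(hour=13), day.replace(hour=15)),
]
for start, end in free_slots(busy, day.replace(hour=9), day.replace(hour=17), timedelta(hours=1)):
    print(start.strftime("%H:%M"), "-", end.strftime("%H:%M"))
# 09:00 - 10:00
# 11:00 - 13:00
# 15:00 - 17:00
```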

Google Meet Introduces AI Makeup Feature to Compete with Teams and Zoom

Google Meet has introduced an AI-powered makeup filter to enhance its video-conferencing features, allowing users to choose from 12 different virtual makeup options within its “Portrait touch-up” section. This addition, aimed at competing with similar capabilities in Microsoft Teams and Zoom, ensures that the virtual makeup remains stable even if the user moves on-screen. The feature is disabled by default and can be activated by users during or before a call, with their preferences saved for future meetings. The new filter began its rollout on October 8 for both mobile and web platforms.

NVIDIA Delivers DGX Spark AI Supercomputer to Elon Musk at SpaceX in Texas

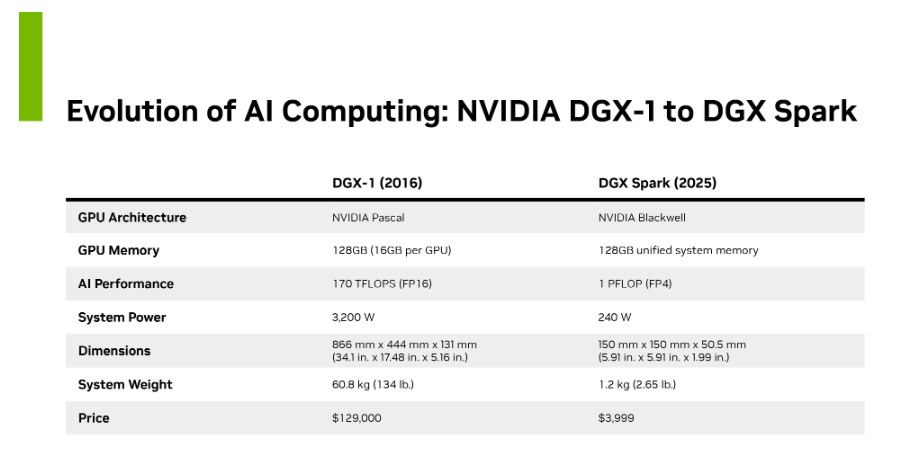

NVIDIA has begun shipping DGX Spark, which it bills as the world’s smallest AI supercomputer, packing NVIDIA’s AI stack into a compact desktop form factor. Built on the NVIDIA Grace Blackwell architecture, DGX Spark offers developers a petaflop of AI performance and 128GB of unified memory, enabling local AI model inference and fine-tuning. To mark the launch, NVIDIA CEO Jensen Huang delivered a unit to Elon Musk at SpaceX, a nod to his earlier delivery of a DGX-1 to OpenAI in 2016. Major tech companies and research organizations are among the early recipients, using DGX Spark to evaluate and optimize AI tools and models.

Claude Enhances Performance with Skills for Task-Specific Expertise and Efficiency

Anthropic’s AI assistant Claude now has enhanced capabilities through the use of “Skills,” which are specialized folders containing instructions, scripts, and resources tailored for specific tasks. These Skills are accessible across all Claude products, such as Claude apps, Claude Code, and the Claude Developer Platform API. By scanning available Skills, Claude can efficiently and selectively load the necessary information to perform tasks such as creating Excel spreadsheets, PowerPoint presentations, and Word documents. Skills are designed to be composable, portable, and efficient, allowing users to customize and extend Claude’s functionalities. Developers can create, manage, and upgrade Skills through the Claude Console, promoting seamless integration and optimized workflows across various platforms and organizational needs.
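The “scan first, load only what’s needed” pattern can be sketched as follows. The sketch assumes each Skill is a folder containing a `SKILL.md` file that opens with simple `name`/`description` frontmatter; the keyword matcher in `select_skills` is a hypothetical stand-in, since in practice Claude itself decides which Skills are relevant.

```python
import tempfile
from pathlib import Path
from typing import Dict, List


def read_frontmatter(skill_md: Path) -> Dict[str, str]:
    """Parse simple `key: value` lines between the leading '---' fences of SKILL.md."""
    lines = skill_md.read_text(encoding="utf-8").splitlines()
    meta: Dict[str, str] = {}
    if not lines or lines[0].strip() != "---":
        return meta
    for line in lines[1:]:
        if line.strip() == "---":
            break
        key, sep, value = line.partition(":")
        if sep:
            meta[key.strip()] = value.strip()
    return meta


def index_skills(root: Path) -> List[Dict[str, str]]:
    """Scan every skill folder but keep only lightweight metadata in memory."""
    index = []
    for skill_md in sorted(root.glob("*/SKILL.md")):
        meta = read_frontmatter(skill_md)
        meta["path"] = str(skill_md)
        index.append(meta)
    return index


def select_skills(index: List[Dict[str, str]], task: str) -> List[str]:
    """Hypothetical matcher: pick skills whose description shares a word with the task."""
    task_words = set(task.lower().split())
    return [m["path"] for m in index
            if task_words & set(m.get("description", "").lower().split())]


def demo() -> List[str]:
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        for name, desc in [("xlsx", "create excel spreadsheets"),
                           ("pptx", "build powerpoint decks")]:
            (root / name).mkdir()
            (root / name / "SKILL.md").write_text(
                f"---\nname: {name}\ndescription: {desc}\n---\nDetailed steps...\n",
                encoding="utf-8")
        chosen = select_skills(index_skills(root), "make an excel spreadsheet")
        # Only the selected skills' full instructions are read in.
        loaded = {Path(p).parent.name: Path(p).read_text(encoding="utf-8") for p in chosen}
        return sorted(loaded)


print(demo())  # ['xlsx']
```

Keeping only names and descriptions in the index is what keeps the approach cheap: many Skills can be installed while only the relevant one’s full contents are loaded.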

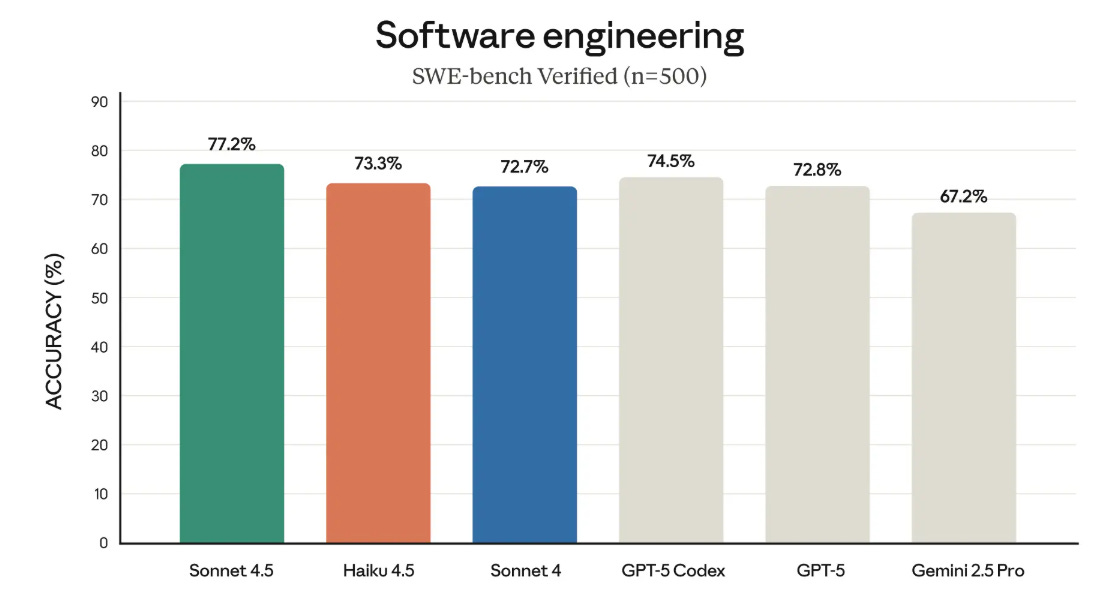

Anthropic Releases Claude Haiku 4.5, Offering Advanced AI at Lower Cost and Higher Speed

Anthropic has unveiled Claude Haiku 4.5, the latest iteration of its compact AI model, which promises similar performance to Sonnet 4 at a fraction of the cost, according to a company blog post. The model achieved 73% on SWE-Bench and 41% on Terminal-Bench in tests, aligning with Sonnet 4, GPT-5, and Gemini 2.5 in various benchmarks. Available immediately on all free Anthropic plans, Haiku 4.5 is aimed at AI products needing efficiency without heavy server demands and is expected to enhance software development tools through improved latency. This follows Anthropic’s recent launches of Sonnet 4.5 and Opus 4.1, continuing its momentum in AI advancements.

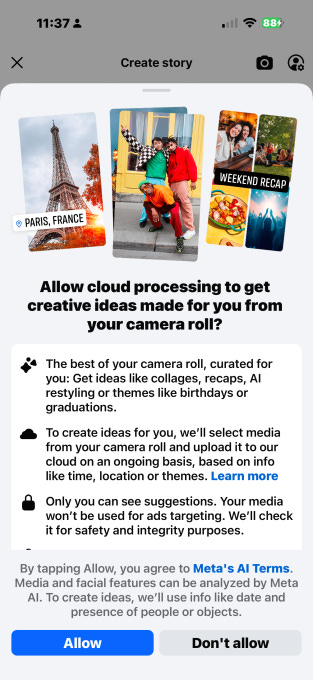

Meta’s AI Photo Edit Suggestions Roll Out to U.S. and Canadian Users

Meta has rolled out a new Facebook feature in the U.S. and Canada that lets its AI suggest edits for photos in users’ camera rolls that haven’t been shared yet. The optional feature, first tested over the summer, requires users to consent to “cloud processing,” which enables AI-generated collages, recaps, and themed edits. Meta says the images won’t be used for ad targeting, but users grant it analysis rights under Meta’s AI Terms of Service. The feature can be disabled at any time, but while it is on, Meta gains access to more user data, strengthening its AI capabilities and its understanding of user habits.

Spotify Enhances AI DJ with Text Requests, Extends Features to Spanish Users

Spotify has enhanced its AI DJ feature for Premium subscribers by allowing users to send music requests via text input, alongside the existing voice command option. This update extends to both English and Spanish, with DJ Livi catering to Spanish-language requests. The upgrade aligns with growing trends in multimodal AI interactions, reflecting users’ preference for flexibility in communication methods. Additionally, Spotify’s AI DJ can now suggest personalized prompts and handle complex requests involving genre, mood, artist, or activity, and is operational in over 60 markets globally.

Walmart Partners with OpenAI to Enhance AI-Driven Shopping Experience for Customers

Walmart has announced a partnership with OpenAI that lets consumers shop for its products directly in the ChatGPT app, including groceries and household essentials as well as items from third-party sellers. This agentic shopping feature also extends to Sam’s Club members, supporting meal planning and product discovery. Users must link their Walmart accounts to ChatGPT to use the “buy” button. The move aligns with OpenAI’s broader e-commerce strategy, which includes collaborations with Etsy and Shopify. Walmart is also developing its own AI shopping assistant, Sparky, to make online shopping more personalized and multimedia-rich, signaling a shift in e-commerce from traditional search to interactive, AI-driven experiences.

Cognizant Launches Enterprise Vibe Coding Blueprint to Enhance AI-Assisted Development

Cognizant has introduced the Enterprise Vibe Coding Blueprint to assist Global 2000 companies in integrating AI-assisted coding across diverse teams. Inspired by its record-setting Vibe Coding Week, the blueprint offers advisory services, tools, and insights to foster AI innovation, allowing businesses to turn AI concepts into tangible results. It includes infrastructure for managing participants and tracking prototypes, leveraging systems like the AI-powered Cognizant Neuro for scalable evaluations. The initiative promotes AI use among both developers and non-technical teams, enabling collaboration and rapid prototyping to enhance operational efficiency and innovation.

Apple’s M5 Chip Promises Major AI Leap and Enhanced Graphics for Devices

On October 15, Apple unveiled its next-generation M5 chip, which powers the new 14-inch MacBook Pro, iPad Pro, and Apple Vision Pro. Built on third-generation 3-nanometer technology, the M5 chip offers significant upgrades to the CPU, GPU, and Neural Engine, providing a 4x boost in AI workload processing compared to its predecessor, the M4, and enhanced graphics performance with its new 10-core GPU architecture. The chip includes a 16-core Neural Engine for faster on-device AI tasks and increased memory bandwidth, allowing creative professionals to run larger AI models smoothly. The M5’s energy-efficient design aligns with Apple’s 2030 carbon neutrality goals.

Salesforce and OpenAI Deepen Partnership: Integrating AI Models Across Platforms

Salesforce and OpenAI have strengthened their partnership to integrate Salesforce’s Agentforce 360 platform with OpenAI’s models, including GPT-5, across ChatGPT, Slack, and enterprise workflows. The collaboration lets users query sales data and customer conversations, and create Tableau visualizations, directly within ChatGPT using natural language. ChatGPT and Codex integrations in Slack, available now, will automate tasks such as summarizing conversations and drafting content. The partnership also extends to commerce, letting merchants use Agentforce Commerce for instant checkout and order management in ChatGPT while retaining control over their data and its privacy. Further details on the availability of these apps are expected in the coming months.

Manus 1.5 Release Boosts Speed, Reliability, and Quality for Diverse Tasks

The latest release, Manus 1.5, represents a significant advancement in AI-powered agent systems, with notable improvements in speed, reliability, and quality across diverse tasks like research, data analysis, and web development. The system includes two versions: the full-powered Manus-1.5 and the cost-efficient Manus-1.5-Lite. Manus 1.5’s re-engineered architecture enables tasks to complete nearly four times faster and handles complex problems with enhanced intelligence and expanded context. A major feature is its ability to support comprehensive full-stack web application development within the platform. It integrates functionalities such as persistent databases, user authentication, embedded AI, and in-built analytics, enhancing both the development experience and application capabilities. Additionally, Manus 1.5 introduces collaboration features and a centralized library for efficient teamwork and file management.

AI Transforms Cooking: A Decade of Computational Gastronomy at IIIT-Delhi

At IIIT-Delhi, the intersection of artificial intelligence and cooking is being explored through the pioneering field of computational gastronomy, led by a professor who has spent over a decade delving into this innovative domain. By merging data science with culinary arts, his team has created structured databases and algorithms to understand global culinary patterns, improve recipe health profiles, and even generate new, culturally coherent dishes. One prominent development from this research is ‘Ratatouille,’ an AI-powered system capable of creating custom recipes based on input ingredients and dietary constraints. This project underscores the potential of AI to enhance culinary innovation without replacing the human touch in cooking.

MHRA Fast-Tracks AI Tools to Revolutionize Speed and Accuracy of Patient Care

The UK’s Medicines and Healthcare products Regulatory Agency (MHRA) is accelerating the assessment of new AI technologies through its AI Airlock programme, aiming to transform patient care by significantly reducing wait times for medical test results and enhancing early disease detection. Seven innovative technologies are being trialed, including AI-assisted clinical note-taking and diagnostic tools, within a regulatory sandbox that allows for comprehensive testing and evaluation. Insights from this initiative are expected to shape future regulations for AI in healthcare, addressing challenges such as AI explainability and risk mitigation, while fostering collaboration between innovators and regulators.

⚖️ AI Ethics

South Korea Scales Back AI Textbook Initiative After Concerns Over Accuracy and Privacy

The South Korean government’s ambitious $850 million initiative to develop AI-based textbooks for schools has faced significant challenges and has been reduced to an optional program just four months after its launch. The project, initially mandatory, encountered criticism over inaccuracies, privacy issues, technical problems causing delays, and increased workload for educators and students. Despite the claim of personalized learning, students reported difficulties concentrating, while the swift execution of the project raised concerns over inadequate scrutiny and political influences. Comparisons have been drawn with past educational technology programs globally, noting the rapid setback and substantial cost of South Korea’s scheme.

Senate Republicans Face Backlash for Sharing AI Deepfake of Chuck Schumer Amid Shutdown

Senate Republicans shared a deepfake video of Senate Minority Leader Chuck Schumer that falsely depicts him celebrating the 16-day government shutdown. In the AI-generated video, Schumer repeats the phrase “every day gets better for us,” a quote taken out of context from a Punchbowl News article about Democrats’ healthcare-focused strategy. The shutdown stems from a failure to agree on government funding, with Democrats seeking to preserve tax credits for affordable health insurance, reverse Medicaid cuts, and prevent reductions to health agencies. Although X’s policies prohibit harmful synthetic media, the video remains available on the platform, sparking debate about regulating deepfakes in political discourse. The incident echoes earlier misleading political deepfakes on X.

AI-Driven Data Centers Tap Fossil Fuels, Reshaping Fracking’s Role in Energy Landscape

The rise of AI has unexpectedly intertwined with the fracking industry as companies like Poolside and Meta construct data centers near major gas-production sites, drawing energy from fossil fuels. These facilities, like those in West Texas and Louisiana, generate power predominantly from natural gas, including fracked sources, raising environmental concerns among local communities. While AI companies claim the move is strategic, driving national energy independence and potential economic revitalization, it risks burdening regions with increased fossil fuel infrastructure and associated environmental impacts. Meanwhile, the promise of cleaner energy solutions remains speculative, with ongoing investments in alternatives like solar and small modular reactors.

Meta Previews Parental Controls for AI Conversations with Teens on Instagram

Meta has unveiled new parental controls for teens’ interactions with AI characters on its platforms, set to launch next year. Parents will be able to turn off their teens’ chats with AI characters entirely, block specific characters, and see the topics their teens discuss with AI. The features, designed to provide a safer environment in line with a PG-13 standard, will initially be available in English in the U.S., U.K., Canada, and Australia on Instagram in early 2026. The initiative is part of a wider industry effort to address rising concerns about social media’s impact on teen mental health.

OpenAI Pauses Martin Luther King Jr. Likeness Videos Amid Estate Concerns on Sora

OpenAI has paused the generation of Sora videos depicting Martin Luther King Jr. at the request of his estate, after users created disrespectful depictions of him. The move comes amid broader concerns over the ethical use of AI-generated video, especially of historical figures. The recently launched Sora app has sparked significant debate about the risks of AI video on social media and the safeguards it requires. OpenAI has responded by adding restrictions and letting estates control how a figure’s likeness is used, while acknowledging the complex challenges that AI video generation poses.

Pinterest Introduces Tools to Reduce AI Content Overload and Improve User Experience

Pinterest has introduced new tools to address user complaints about excessive AI-generated content on its platform. Users can now customize their feeds to limit generative AI imagery in specific categories like beauty, art, and fashion. This move follows backlash over the increase of “AI slop” and aims to balance AI and human creativity by allowing users to adjust their settings and send feedback on AI content. The new controls are available on the website and Android, with an iOS rollout planned soon.

Spotify Partners with Major Labels to Develop Fair Compensation AI Tools

Spotify announced collaborations with major record labels, including Sony, Universal, Warner, and Merlin, to develop AI tools that ensure fair compensation and respect artists’ rights in the evolving digital music landscape. The company aims to create “responsible AI” products allowing artists to choose whether to utilize AI tools. This initiative follows criticisms about AI-generated music’s impact on human artistry and a recent policy revamp targeting spam and misuse of AI. Spotify is also building a generative AI research lab, emphasizing its commitment to musician rights and copyright in AI music innovations.

Salesforce Faces Class Action Lawsuit Over Copyright Infringement Allegations in AI Training

Salesforce faces a proposed class action lawsuit filed by authors Molly Tanzer and Jennifer Gilmore, who allege the company used thousands of books, including their own, without permission to train its xGen AI models, in violation of copyright laws. The authors argue that Salesforce’s actions reflect CEO Marc Benioff’s own criticism of AI companies using “stolen” data, emphasizing the need for fair compensation for copyrighted material used in AI training. This lawsuit is part of a broader wave of legal actions against tech companies such as OpenAI, Microsoft, and Meta Platforms for similar alleged infringements.

17-Year-Old Files Lawsuit Against AI Developer for Unauthorized Nude Image Creation

A 17-year-old girl from New Jersey has filed a lawsuit against AI developer AI/Robotics Venture Strategy3, the creator of the software ‘ClothOff,’ after fake nude images of her were generated and distributed without her consent. A classmate allegedly created the images from a swimsuit photo she posted on Instagram at age 14. Messaging app Telegram is named as a nominal defendant for allegedly facilitating access to ClothOff. The plaintiff, represented by Yale Law School affiliates, argues that the images constitute child sexual abuse material and seeks their deletion. The company says it neither processes images of minors nor stores data, and that such uses violate its terms of service.

Common Crawl Data Reveals Challenges in AI Content Detection on the Web

Common Crawl’s extensive web archive was used to draw a representative sample of 65,000 English-language articles to assess how prevalent AI-generated content is on the web. To be included, an article had to be in English, properly marked up, longer than 100 words, and published between January 2020 and May 2025. The articles were classified with Surfer’s AI detection algorithm, whose error rates were also measured: it had a 4.2% false positive rate on human-written articles dated before AI tools like ChatGPT became popular, and a 0.6% false negative rate on articles generated with OpenAI’s GPT-4o. Measuring these error rates allows the share of AI-generated articles on the web to be estimated more accurately.
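The inclusion criteria and error rates above translate into a few lines of code. This is a minimal sketch: the `Article` fields are hypothetical, the May 2025 cutoff is assumed to mean May 31, and the prevalence correction (the standard Rogan-Gladen formula) is shown only to illustrate how false positive and false negative rates can adjust a raw count. The study itself may have handled this differently.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class Article:
    language: str      # e.g. "en"
    has_markup: bool   # hypothetical flag for "properly marked up"
    word_count: int
    published: date


def in_sample(a: Article) -> bool:
    """Inclusion criteria: English, marked up, >100 words, Jan 2020 to May 2025."""
    return (a.language == "en"
            and a.has_markup
            and a.word_count > 100
            and date(2020, 1, 1) <= a.published <= date(2025, 5, 31))


def false_positive_rate(flags_on_human: List[bool]) -> float:
    """Share of known human-written articles wrongly flagged as AI."""
    return sum(flags_on_human) / len(flags_on_human)


def false_negative_rate(flags_on_ai: List[bool]) -> float:
    """Share of known AI-generated articles the detector failed to flag."""
    return sum(not f for f in flags_on_ai) / len(flags_on_ai)


def corrected_prevalence(flag_rate: float, fpr: float, fnr: float) -> float:
    """Rogan-Gladen estimate of the true AI share from the raw share of flagged articles."""
    return (flag_rate - fpr) / (1.0 - fpr - fnr)


# With the reported rates (FPR 4.2%, FNR 0.6%), a hypothetical raw flag rate of 50%
# corresponds to a slightly lower estimated AI share.
print(round(corrected_prevalence(0.50, 0.042, 0.006), 3))  # 0.481
```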

🎓 AI Academia

Towards Automating Governance in OSS with a New Domain-Specific Language for Collaboration

A team of researchers has introduced a Domain-Specific Language (DSL) aimed at improving governance in software projects, especially those involving both human contributors and AI-powered agents. The initiative seeks to address the complex governance challenges common in Open-Source Software (OSS) development by offering a structured framework for defining and enforcing governance policies. The proposed DSL is designed to clarify roles, responsibilities, and decision-making processes as diverse human contributors and AI agents increasingly collaborate. By automating governance tasks, the DSL aims to make project management in OSS more efficient and adaptable. The work comes as contributor communities grow more diverse and AI plays an expanding role in software development.
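The paper’s actual DSL syntax isn’t described here, so the following is a hypothetical Python stand-in for the kind of rule such a language might let a project declare and then enforce automatically: a merge policy that counts approvals from humans and AI agents separately. All names and thresholds are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Contributor:
    name: str
    role: str       # e.g. "maintainer" or "contributor"
    is_agent: bool  # True for an AI-powered agent


@dataclass(frozen=True)
class MergePolicy:
    """Hypothetical governance rule for approving a pull request."""
    approver_roles: FrozenSet[str]
    min_approvals: int
    min_human_approvals: int


def can_merge(policy: MergePolicy, approvers: List[Contributor]) -> bool:
    """Enforce the rule: enough eligible approvals, and enough of them from humans."""
    eligible = {c for c in approvers if c.role in policy.approver_roles}  # set drops duplicates
    humans = [c for c in eligible if not c.is_agent]
    return len(eligible) >= policy.min_approvals and len(humans) >= policy.min_human_approvals


policy = MergePolicy(approver_roles=frozenset({"maintainer"}), min_approvals=2, min_human_approvals=1)
alice = Contributor("alice", "maintainer", is_agent=False)
review_bot = Contributor("review-bot", "maintainer", is_agent=True)
bob = Contributor("bob", "contributor", is_agent=False)

print(can_merge(policy, [alice, review_bot]))  # True
print(can_merge(policy, [review_bot, bob]))    # False: bob is not a maintainer
```

Declaring the rule as data, separate from the check that enforces it, is what would let a project change its governance without rewriting tooling.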

Subject Roles in the EU AI Act: Mapping and Regulatory Implications

The paper provides a structured examination of the six main categories of actors in the EU AI Act (providers, deployers, authorized representatives, importers, distributors, and product manufacturers), which the regulation collectively refers to as “operators.” By examining these Article 3 definitions and how they are elaborated across the regulation’s 113 articles, 180 recitals, and 13 annexes, the authors map the Act’s complete governance structure and analyze how it regulates each of these subjects.

Pre-Print Study Explores Responsible AI Adoption Challenges in Public Sector for HICSS 2026

A preprint accepted for the 2026 Hawaii International Conference on System Sciences (HICSS) examines the complexities of adopting responsible Artificial Intelligence (AI) in the public sector. The study presents a taxonomy of 13 key data governance challenges that hinder AI adoption, including issues with data quality, infrastructure, and governance frameworks. The research highlights the essential role of high-quality, ethically managed data, noting that many government data systems are siloed and poorly governed, which complicates AI deployment. By analyzing these challenges through the lens of institutional pressures, the taxonomy serves as a guide for policymakers seeking to create effective governance conditions for responsible AI adoption.

New Guidelines Aim to Enhance AI Reliability and Governability in National Security

A recent memorandum dated October 9, 2025, outlines AI Action Plan guidelines for improving the reliability and governability of AI models used in national defense and intelligence operations. The Department of War, together with the Office of the Director of National Intelligence, the National Institute of Standards and Technology, and the Center for AI Standards and Innovation, aims to refine responsible AI frameworks by mitigating risks related to ‘scheming,’ a term used to describe AI models that deliberately conceal their misalignment. The plan underscores the importance of rigorous testing and evaluation protocols to preempt scenarios in which AI could misrepresent data or compromise security. The roadmap recommends targeted improvements to ensure models meet robust reliability standards, thereby reducing potential threats to national security.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.
