"Smartest AI On Earth??": Elon Musk's xAI To Launch Grok 3 Chatbot Today!

Today's highlights:

🚀 AI Breakthroughs

xAI Prepares to Launch Grok 3 (February 17, 8 PM PT)

• xAI is set to launch Grok 3, its newest foundation model, which will power a chatbot positioned to rival ChatGPT, Gemini, DeepSeek, and Claude

• Grok 3 promises improved reasoning and real-time access to data from X, and uses synthetic data in training to strengthen its logic and complex problem-solving abilities

• Grok 3 will debut in a live demo on X at 8 PM PT, with Elon Musk touting its "scary smart" capabilities.

Google DeepMind's Veo 2 Enhances YouTube's Dream Screen for AI Video Creation

• YouTube Shorts has integrated Google DeepMind's Veo 2 into Dream Screen, letting creators generate more realistic and detailed AI video clips for their posts from text prompts

• Veo 2, Google's answer to OpenAI's Sora, lets creators specify styles and effects, going beyond Dream Screen's earlier ability to generate AI backgrounds for Shorts

• The feature, launching Thursday in the US, Canada, Australia, and New Zealand, marks Veo 2 clips with DeepMind's SynthID watermarks to identify AI-generated content and address concerns about misleading videos.

Meta Ventures into Robotics with Plans for Humanoid Robots and AI Development

• Meta's Reality Labs is developing hardware and software for humanoid robots that can handle household chores, building on its existing AI and sensor technologies, and has reportedly discussed its plans with robotics companies such as Unitree Robotics and Figure AI

• Rather than building robots itself, Meta aims to supply the AI, sensors, and software for robots that other companies will manufacture, tying its AI advances to its augmented and mixed reality goals

• Led by Marc Whitten, the new robotics team marks a significant expansion of Meta's AI portfolio, coinciding with the company's planned $65 billion in AI spending and a stronger push on smart glasses sales.

Galileo Launches Comprehensive Agent Leaderboard on Hugging Face to Evaluate AI Performance

• Galileo has launched an Agent Leaderboard on Hugging Face that assesses AI agents' tool-calling abilities with its Tool Selection Quality metric to gauge real-world applicability;

• The leaderboard shows how models handle challenges such as scenario recognition, tool selection, and parameter handling, helping businesses make more informed deployment decisions;

• Updated monthly, the leaderboard evaluates top-performing LLMs in multi-domain environments, helping organizations choose AI models that best fit their operational needs and constraints.

Perplexity Launches Free Deep Research Tool for Expert-Level Analysis Across Domains

• Perplexity has released a new feature called Deep Research, designed to carry out expert-level analysis across various fields, including finance, marketing, and technology, by autonomously generating comprehensive reports

• Deep Research is available on Perplexity's website with a limited number of free daily queries for all users and unlimited access for Pro subscribers, and will soon expand to iOS, Android, and Mac

• Perplexity reports competitive accuracy on the Humanity’s Last Exam and SimpleQA benchmarks and says Deep Research completes complex research tasks in under 3 minutes, faster than comparable tools from leading AI labs.

Anthropic Prepares to Launch Hybrid AI Model with Enhanced Deep Reasoning Abilities

• Anthropic's upcoming AI model is positioned as a "hybrid" that can switch between deep reasoning and fast responses, giving developers more flexibility in how they use it

• A reported "sliding scale" would let developers control costs by setting how much computing power the model spends on deep reasoning

• The new model reportedly outperforms OpenAI's o3-mini-high on some programming tasks and is said to excel at analyzing large codebases and on business-oriented benchmarks.

⚖️ AI Ethics

Bollywood Music Labels Tackle OpenAI in India Over AI Copyright Concerns

• Bollywood music giants, including T-Series and Saregama, are seeking to join a lawsuit in India that accuses OpenAI of copyright violations in training its AI models

• These companies accuse OpenAI of unauthorized use of sound recordings, reflecting growing global concern over AI's use of copyrighted material

• The Delhi High Court has ordered OpenAI to respond to a petition from the Indian Music Industry (IMI) trade body seeking to join news agency ANI's copyright lawsuit over unauthorized use of content for AI model training

• OpenAI faces mounting legal challenges in India, its second-largest market, amid disputes over the court's jurisdiction and whether training AI on publicly available data is fair use.

U.K. Partners with Anthropic to Support Public Services, Shifts Away from AI Safety

• The U.K. government has rebranded the AI Safety Institute as the AI Security Institute, shifting its focus away from societal risks to concentrate on AI-related security threats.

• The U.K. has signed a memorandum of understanding with U.S. AI firm Anthropic to explore using AI to improve public services, and plans further collaborations with other AI companies.

• The new U.K. strategy signals closer alignment with U.S. AI policy, underscored by both countries' refusal to sign the Paris AI Action Summit declaration.

🎓 AI Academia

Large Language Models for Anomaly and Out-of-Distribution Detection: A Survey

• Large Language Models (LLMs) significantly enhance anomaly and out-of-distribution (OOD) detection, reshaping how these problems are approached and extending LLM use cases beyond traditional natural language processing tasks;

• A proposed taxonomy categorizes approaches to anomaly and OOD detection using LLMs, clarifying their dual role and providing a systematic review of relevant literature and methodologies;

• Multimodal LLMs, integrating vision-language capabilities, show promise in real-world applications, highlighting challenges and opportunities for further research in improving AI system robustness.

Study Evaluates Large Language Models' Sensitivity to Sentiment in User Interactions

• Researchers assessed large language models' capability to identify and respond to text sentiment, revealing mixed effectiveness in handling positive, negative, and neutral emotional cues;

• Experiments showed LLMs often misclassify strong positive sentiments as neutral and fail to interpret sarcasm, highlighting challenges in sentiment accuracy and consistency;

• Variability in LLM performance across different datasets was noted, emphasizing the need for targeted optimizations to enhance sentiment analysis capabilities.

AI Policy Risks Inherent in Strict Evidence-Based Approaches Highlighted in New Blog Post

• A recent ICLR 2025 blog post highlights the pitfalls of evidence-based AI policy, warning that demanding excessively high standards of evidence can become a reason to neglect AI regulation

• The post draws parallels to the tobacco and fossil fuel debates, where evidence-based rhetoric delayed urgent regulation, potentially protecting industry at the expense of public safety

• The post suggests 15 regulatory goals to strengthen evidence-seeking policies globally, identifying opportunities for countries like Brazil, Canada, China, the EU, South Korea, the UK, and the USA.

AI Governance Calls for Balance Between Expertise and Public Participation: Study Reveals Need for Inclusive Models

• The new paper argues for democratizing AI governance, balancing technical expertise with public participation in decision-making

• Case studies from France and Brazil illustrate how inclusive governance frameworks can bridge the gap between AI's complexity and public accountability

• Recommendations target the European Union, advocating for governance models that integrate transparency, diversity, and adaptive regulation to align AI policy with societal values.

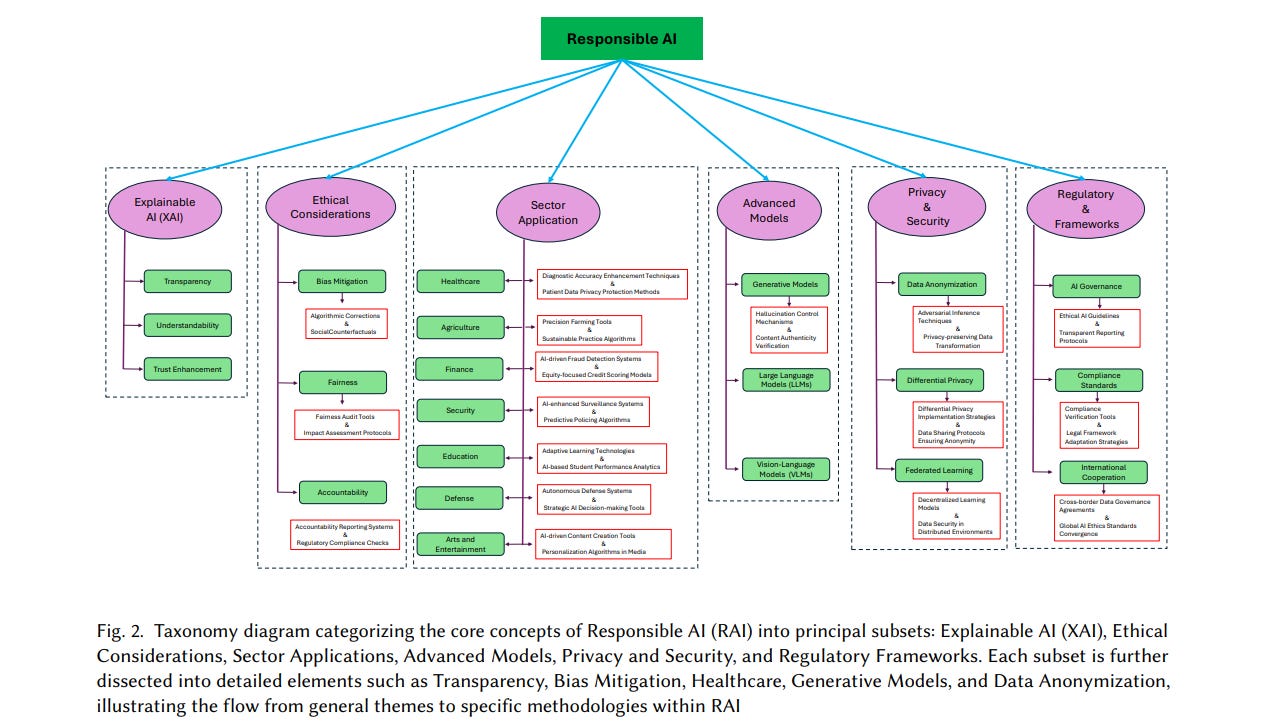

Examining Responsibility in Generative AI: Data, Models, Users, or Regulations?

• Researchers emphasize the critical need to align generative AI benchmarks with governance frameworks to ensure ethical, transparent, and accountable AI systems in the post-ChatGPT era

• The article addresses the implementation gaps between theoretical Responsible AI (RAI) frameworks and real-world applications, suggesting robust governance and technical measures as potential solutions

• The article also explores future directions for Responsible Generative AI and compiles a comprehensive list of resources and datasets aimed at minimizing societal risks associated with AI deployment.

AI Compliance Standards Face Criticism Over Security Gaps and Vulnerabilities

• A comprehensive analysis reveals significant security gaps in existing AI governance frameworks, with the NIST framework leaving 69.23% of identified risks unaddressed and the ICO showing an 80% compliance-security gap;

• A novel metric-driven methodology quantifies security vulnerabilities in AI standards, identifying major contributors such as under-defined processes and insufficient implementation guidance;

• Recommendations highlight the urgent need for robust and enforceable security controls to bridge the gap between compliance and genuine AI security, offering actionable insights for policymakers.
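A figure like NIST's "69.23% of risks unaddressed" is a coverage ratio: the share of identified risks that no control in a framework mitigates. The sketch below shows that kind of calculation, assuming a simple unweighted count; the paper's actual metric may score or weight risks differently, and the risk and control names are hypothetical toy data, not taken from any real framework.

```python
def unaddressed_share(risks: set[str], coverage: dict[str, set[str]]) -> float:
    """Percentage of identified risks that no control addresses.

    Assumption: every risk counts equally. `coverage` maps each
    (hypothetical) control to the set of risks it mitigates.
    """
    if not risks:
        raise ValueError("need at least one identified risk")
    # Union of all risks mitigated by any control, restricted to known risks
    covered = set().union(*coverage.values()) & risks
    return 100 * len(risks - covered) / len(risks)


# Hypothetical toy example: 4 identified risks, 2 of them covered
risks = {"prompt_injection", "data_poisoning", "model_theft", "membership_inference"}
coverage = {
    "access_control": {"model_theft"},
    "input_filtering": {"prompt_injection"},
}
print(unaddressed_share(risks, coverage))  # 50.0
```

Counting every risk equally keeps the metric easy to audit, but it treats a minor gap and a critical one the same, which is one reason a published metric might use weights instead.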

Deep Agent AI System Enhances Multi-Phase Task Management with Innovative Architecture

• Deep Agent is an autonomous AI system that uses a Hierarchical Task DAG framework to dynamically manage complex multi-phase tasks by decomposing objectives into manageable sub-tasks;

• The system features a recursive two-stage planner-executor architecture and an Autonomous API & Tool Creation mechanism, which its authors say make task execution more adaptable and reduce operational costs;

• An integrated Prompt Tweaking Engine and Autonomous Prompt Feedback Learning optimize language model prompts, improving inference accuracy and operational stability for intricate, multi-step AI tasks.
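To make the Hierarchical Task DAG idea concrete, here is a minimal Python sketch of the general technique, not Deep Agent's actual implementation: a plan is a DAG of sub-tasks, a composite sub-task can carry its own nested DAG, and the executor flattens everything into an order that respects every dependency. The plan format, the `execution_order` function, and the trip-booking example are all hypothetical, and the sketch omits the dynamic re-planning the summary mentions.

```python
from graphlib import TopologicalSorter  # Python 3.9+


def execution_order(plan: dict, prefix: str = "") -> list[str]:
    """Return leaf sub-tasks of a hierarchical plan in dependency order.

    Hypothetical plan format: each task name maps to (dependencies, subplan),
    where dependencies are sibling task names and subplan is either None
    (a leaf the executor runs directly) or a nested plan of the same shape.
    """
    graph = {name: deps for name, (deps, _) in plan.items()}
    order = []
    # static_order() yields each task only after all of its dependencies
    for name in TopologicalSorter(graph).static_order():
        _, subplan = plan[name]
        path = f"{prefix}{name}"
        if subplan is None:
            order.append(path)
        else:
            # Expand a composite task recursively; its leaves run as one block
            order.extend(execution_order(subplan, prefix=path + "/"))
    return order


# Hypothetical objective decomposed into a two-level task DAG
trip_plan = {
    "research": ((), {
        "search_flights": ((), None),
        "search_hotels": ((), None),
    }),
    "compare": (("research",), None),
    "book": (("compare",), {
        "reserve_hotel": ((), None),
        "pay": (("reserve_hotel",), None),
    }),
}

print(execution_order(trip_plan))
```

Treating each composite task as a single node at its own level keeps every DAG small and easy to re-plan locally; `graphlib` raises `CycleError` if a plan contains circular dependencies.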

New Framework Aims to Bridge Gaps in AI Risk Management with Established Practices

• A new risk management framework for frontier AI aims to bridge current AI industry practices and traditional risk management methods, emphasizing systematic rigor.

• The framework incorporates four key components: risk identification, risk analysis and evaluation, risk treatment, and risk governance, adapting techniques from established high-risk industries.

• Emphasizing proactive measures, the framework applies throughout the AI system lifecycle and advocates starting risk management early, before the final training run, to reduce later burdens.

Make AI work for you, not replace you. Learn in-demand AI governance skills at School of Responsible AI. Book your free strategy call today with Saahil Gupta, AIGP!

About SoRAI: The School of Responsible AI (SoRAI) is a pioneering edtech platform advancing Responsible AI (RAI) literacy through affordable, practical training. Its flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.