[SHOCKING] Courts Say ‘Yes’ to AI Training, But With Warnings!

U.S. courts sided with Meta and Anthropic, allowing AI training on copyrighted books under fair use, though cautiously: the judges stressed that these are narrow, case-specific rulings.

Today's highlights:

You are reading the 106th edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training such as AI Governance certifications (AIGP, RAI) and an immersive AI Literacy Specialization. This specialization teaches AI using a scientific framework structured around four levels of cognitive skills. Our first course is now live and focuses on the foundational cognitive skills of Remembering and Understanding. Want to learn more? Explore all courses: [Link] Write to us for customized enterprise training: [Link]

🔦 Today's Spotlight

In a pivotal week for the intersection of copyright law and generative AI, courts in the U.S. and UK produced three significant developments. Two U.S. federal judges sided with AI firms Meta and Anthropic, declaring that training large language models on copyrighted books qualifies as fair use, a potential lifeline for the tech industry. Meanwhile, in the UK, Getty Images scaled back its copyright claims against Stability AI. However, all three outcomes were narrow and fact-specific, offering no blanket immunity for AI developers. The key message: fair use rulings are favoring tech companies, but with explicit boundaries.

🧑‍⚖️ U.S. Rulings: Meta and Anthropic Win – For Now

Meta (Judge Vince Chhabria, San Francisco): Meta’s use of books by 13 authors to train its language models was ruled fair use because it was “highly transformative” and caused “no meaningful market harm.” However, Chhabria explicitly cautioned that the ruling was narrow, stating it “does not mean all AI training is legal,” and criticized the plaintiffs for making weak arguments.

Anthropic (Judge William Alsup, San Francisco): Similarly, Alsup ruled that training Claude on millions of books was fair use, comparing it to how human writers learn to create. Yet Alsup found that Anthropic’s acquisition of pirated books for a “central library” was infringing, and scheduled a December 2025 damages trial. Later purchases of those books did not excuse the original infringement.

🇬🇧 UK: Getty Narrows Case Against Stability AI

Getty Images vs Stability AI: Getty dropped its key claims that Stability AI’s training on copyrighted photos and image outputs (with Getty watermarks) violated UK copyright law. The retreat was strategic, due to weak evidence and difficulty applying UK law to U.S.-based training activity.

Remaining Issues: Getty still pursues trademark infringement (watermarks) and a novel claim of “secondary infringement”, arguing AI models trained abroad but used in the UK can still infringe UK law. Legal observers say this theory could have broad implications for globally trained AI models.

🌍 Broader Global Context

EU: Key Case at CJEU Pending. The EU Court of Justice (CJEU) is reviewing whether LLM training on copyrighted press articles falls under “text and data mining” exemptions. Case C‑250/25 could reshape AI copyright rules across the EU.

UK Politics: Pressure Mounts. The UK’s House of Lords backed reforms in June to require AI training transparency. Artists like Elton John have labeled unregulated AI use an “existential threat” and are demanding legislative action.

🧠 Final Takeaways: Fair Use Wins, but Conditionally

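Courts affirmed that highly transformative AI training may qualify as fair use. But the rulings attach conditions: the Meta decision turned on the use being highly transformative and causing no meaningful market harm, and the Anthropic decision did not excuse acquiring books from pirated sources.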

Both judges warned: future cases on news, music, or films may be judged differently.

Getty’s retreat shows that jurisdiction and evidence are major hurdles in cross-border copyright disputes.

📌 Conclusion: Tech Wins This Round, But the Fight Isn’t Over

The rulings mark early victories for AI developers, but not a license to scrape at will. Courts are threading the needle: rewarding transformation, punishing piracy. Each case is judged on its specific facts, and upcoming lawsuits or laws, especially in Europe, could shift the balance. For now, fair use is a shield, not a sword.

🚀 AI Breakthroughs

Nvidia Becomes World's Most Valuable Company Amid AI Stock Surge and Optimism

• Nvidia shares soared to a record high after a significant presentation by CEO Jensen Huang, reclaiming the title of the world’s most valuable company at $3.77 trillion

• Loop Capital increased Nvidia's share price target to $250, citing its strong position to capitalize on a wave of AI adoption and maintaining a "buy" rating

• Despite the rally, Nvidia's stock trades at 30 times projected earnings, below its five-year average, indicating that earnings have grown faster than the share price.

Google Launches Gemini CLI to Enhance Developer Experience with Local AI Tool

• Google unveils Gemini CLI, an agentic AI tool integrating its Gemini AI models with local codebases, enabling developers to simplify coding processes directly through natural language requests from terminals;

• Positioned in direct competition with tools like OpenAI's Codex CLI, Google's Gemini CLI offers integration capabilities with other applications, from video creation to accessing real-time information via Google Search;

• To boost developer adoption, Google is open-sourcing Gemini CLI under the Apache 2.0 license and offering generous usage limits, doubling the typical daily model requests available to free users.

Google Launches Doppl App for AI-Powered Virtual Outfit Try-Ons in U.S.

• Google unveiled Doppl, an experimental app using AI to visualize outfits by creating a digital version of users trying on various looks, available on iOS and Android in the U.S.

• The app operates by having users upload full-body photos, allowing virtual try-ons using outfit images or screenshots sourced from thrift stores, friends, or social media

• Doppl also converts static outfit images into AI-generated videos to demonstrate clothing dynamics, with users able to save, browse, and share their favorite virtual looks.

YouTube Expands AI Features with New Search Carousel for Premium Users

• YouTube introduces an AI-powered search results carousel, exclusively for U.S. Premium users, streamlining content discovery with video suggestions and brief descriptions for queries about shopping and travel

• Concerns arise as the AI results carousel, similar to Google's AI Overviews, might decrease video engagement, potentially impacting creators reliant on user clicks for revenue

• YouTube expands access to its conversational AI tool, now available to select non-Premium users, offering AI-driven content recommendations and interactive video summaries for deeper viewer engagement.

Google Launches Imagen 4 and Imagen 4 Ultra for Enhanced Text-to-Image Generation

• Google has released Imagen 4, its latest and most advanced text-to-image model, in paid preview via the Gemini API, with limited free testing in Google AI Studio

• The Imagen 4 family encompasses the flagship Imagen 4 model for diverse tasks and Imagen 4 Ultra for enhanced prompt precision, priced at $0.04 and $0.06 per image respectively

• Additional billing tiers are expected soon, while current users can request higher rate limits for both Imagen 4 and Imagen 4 Ultra to maximize usage.

Google Expands AI Mode in India, Allowing Complex Multi-Part Searches via Labs

• Google launches AI Mode in India via its Labs platform, enabling complex, multi-part questions directly in Search, following initial trials in the U.S.

• A custom version of Gemini 2.5 powers AI Mode, allowing users to ask longer, layered questions with voice and image input, and improving search precision through query fan-out

• Rising user interest in AI-driven search is reflected in increased AI Overview usage; AI Mode blends AI responses with standard results when its confidence is low.

Microsoft Launches Mu: Efficient On-Device Language Model for Copilot+ PCs

• Microsoft introduces Mu, an on-device language model for Copilot+ PCs that is designed to run on NPUs and is used in the Windows Settings app, available to Windows Insider Program users

• Built on a Transformer encoder–decoder architecture, Mu achieves over 100 tokens per second, reducing first-token latency by 47% compared to similar models

• Fine-tuned on Azure’s A100 GPUs, Mu features dual LayerNorm and rotary embeddings, with partner support from AMD, Intel, and Qualcomm, enhancing performance on hardware like Surface Laptop 7.

Anthropic Launches AI App Development with Claude: Simplified Integration and Sharing

• Anthropic enhances Claude with tools for building, hosting, and sharing interactive AI apps, facilitating rapid iterations without the overhead of managing API keys or subscription costs;

• Developers using Claude can harness AI-powered app capabilities, including automatic code generation and debugging, thereby focusing on creativity instead of complex technical details;

• Beta testers have created unique AI solutions like adaptive games, personalized learning tools, and intuitive data analysis apps, demonstrating diverse use cases available to Claude users.

11ai Enhances Voice-First Productivity with MCP Integration for Seamless Task Automation

• ElevenLabs introduces 11ai, a voice-first productivity platform that overcomes traditional voice assistant limitations by connecting directly with everyday tools through MCP (Model Context Protocol) integration;

• With built-in MCP support, ElevenLabs Conversational AI enables seamless integration with services like Salesforce, HubSpot, Gmail, and Zapier, enhancing productivity through unified API connectivity;

• Users can perform complex tasks via voice commands, such as managing schedules, conducting customer research, and creating project tickets, with contextual understanding and sequential action capabilities.

Creative Commons Launches CC Signals Project to Neatly Balance AI and Openness

• Creative Commons unveiled the CC signals project to help dataset holders specify how their content can be utilized by AI models, balancing openness with data demand

• The project aims to prevent internet closures and protect data by offering legal and technical solutions for AI training, addressing the growing need for regulatory clarity

• The CC signals initiative, inspired by CC licenses, seeks public feedback and plans an alpha release in late 2025, alongside town halls to refine its approach.

Suno Acquires WavTool to Enhance AI Music Editing Amid Ongoing Legal Battles

• Suno, mired in legal battles with music labels, has acquired WavTool to enhance its editing capabilities for songwriters and producers through browser-based AI technology

• WavTool, launched in 2023, provides AI-driven tools like stem separation and an AI music assistant, which Suno will integrate into its new editing interface this month

• Amid ongoing lawsuits, including one from Tony Justice, Suno's acquisition announcement appears timed to reassure investors of growth and commitment, despite pending legal challenges.

Salesforce's Agentforce 3 Enhances AI Agents with New Command Center and MCP Integration

• Salesforce debuts Agentforce 3, enhancing enterprise AI agent performance with a new Command Center for complete observability, reducing customer case handle times by up to 15%;

• Built-in support for the Model Context Protocol enables Agentforce 3 to achieve seamless connectivity with third-party tools like Google Cloud, AWS, and Stripe for enhanced interoperability;

• The updated Atlas architecture boosts Agentforce 3 with lower latency, greater accuracy, and support for natively hosted LLMs such as those from Anthropic, strengthening enterprise-readiness for users worldwide.

AlphaGenome AI Model Offers Comprehensive Insights into Genome Functionality via API Access

• AlphaGenome, an advanced AI model, revolutionizes understanding of genomic variations by accurately predicting their effects on biological processes, now accessible through an API for research;

• Distinguished by its long sequence-context analysis and base-level precision, AlphaGenome excels across genomic tasks, outperforming existing models and offering comprehensive multimodal predictions on genetic variants;

• Researchers benefit from AlphaGenome's capabilities in disease understanding, synthetic biology, and fundamental genomic research, despite current limitations in capturing extensive regulatory element influences.

⚖️ AI Ethics

Gartner Report Forecasts 40% Discontinuation of Agentic AI Projects by 2027

• Gartner reports that over 40% of agentic AI projects may be discontinued by 2027 due to rising costs, unclear benefits, and insufficient risk management

• A January 2025 poll showed that 19% of organizations have invested heavily in agentic AI, while 42% have made cautious investments and 31% remain uncertain or are waiting

• "Agent washing" is misleading the market, with few of the countless vendors offering genuine, capable agentic AI systems, despite significant investments by major companies like Salesforce and Oracle.

EU Faces Call to Pause AI Act Rollout Due to Missing Standards

• Concerns have arisen over implementing the EU's AI Act without common standards, which risks leaving Europe behind technologically and keeping certain AI applications off its market

• Discussions in Brussels consider pausing the AI Act rollout if necessary guidance and standards are not prepared, with support from some lawmakers

• The AI Act's integration into the upcoming digital simplification package is being advocated, aiming to streamline regulatory compliance for companies in Europe.

Meta Intensifies AI Talent Race by Hiring Three Key Researchers from OpenAI

• Meta has hired three key researchers from OpenAI's Zurich office to strengthen its superintelligence efforts, indicating a strong focus on AI talent acquisition

• Investment in Scale AI and pursuit of Safe Superintelligence (SSI) reflect Meta’s aggressive strategy to expand its AI capabilities amid intense industry competition

• Reports suggest Meta offers exorbitant compensation packages to attract talent, fueling an intense AI talent war with players like OpenAI and Anthropic.

Revised NO FAKES Act Could Harm Free Speech and Innovation, Says EFF

• The EFF argues that the revised NO FAKES Act's focus on intellectual property rather than privacy would harm free speech and foster a litigious environment that is detrimental to innovation.

• The legislation, criticized for incentivizing monetization of deceased celebrities' likenesses, could disadvantage smaller startups with costly compliance requirements, benefiting established platforms like YouTube, per the EFF.

• Critics argue that existing legislation like the TAKE IT DOWN Act already addresses necessary protections, urging caution regarding NO FAKES' potential overreach and negative impacts on digital tool availability.

AI Models Display Bias Favoring Chinese Communist Party Perspectives, New Report Reveals

• A report by the American Security Project reveals five leading AI models, primarily from the U.S., exhibit bias toward Chinese Communist Party viewpoints and censor sensitive topics.

• Microsoft's Copilot is highlighted for frequently echoing CCP disinformation as authoritative, while X's Grok is noted for its more critical responses toward Chinese state narratives.

• The study indicates that AI models incorporate training data reflecting CCP language, which undermines true neutrality: the models struggle to discern truth and may amplify harmful ideologies.

New Bill Aims to Shield US Government from Foreign-Controlled Artificial Intelligence Risks

• The No Adversarial AI Act seeks to protect U.S. federal agencies from AI technologies linked to foreign adversaries, especially those from China, enhancing national security;

• The legislation mandates a federal procurement ban on AI models from foreign adversaries like DeepSeek, unless exceptions for research or national security are justified to Congress;

• With AI central to modern geopolitics, the Act proposes stringent oversight and removal of adversarial AI from government systems to safeguard data integrity and operations.

Judge Alsup Rules AI Companies Can Use Copyrighted Books Under Fair Use

• A historic ruling by Judge Alsup permits Anthropic to train AI models on copyrighted works under the fair use doctrine, marking a victory for AI companies in copyright disputes;

• The decision is a setback for authors and publishers who have filed lawsuits against AI companies, and it may steer courts toward favoring tech companies over content creators;

• The court will still address the legality of Anthropic's allegedly pirated "central library," highlighting continuing legal challenges surrounding the use of copyrighted material in AI training.

Meta Wins Copyright AI Case: Judge Cites Fair Use in Book Lawsuit

• A federal judge ruled that Meta's AI training on copyrighted books falls under "fair use" law, dismissing claims from authors, including Sarah Silverman, who alleged illegal usage

• The judgment highlighted that plaintiffs failed to provide crucial evidence on market harm, noting that fair use arguments vary greatly by industry, with some markets more vulnerable than others

• Despite Meta's legal win, the ruling makes clear that not all AI model training is protected by "fair use," emphasizing the necessity of detailed case-by-case analysis in copyright disputes.

Getty Images Drops Key Copyright Claims Against Stability AI in UK Court

• Getty Images has retracted primary copyright infringement claims against Stability AI in the London High Court, but continues to pursue other claims and a separate U.S. lawsuit;

• Stability AI, creator of Stable Diffusion, welcomed Getty's decision to drop multiple claims, citing lack of evidence and strategic focus on more viable allegations by Getty;

• Getty's lawsuit persists with secondary infringement and trademark claims, focusing on AI models as potentially infringing articles imported into the UK, even though the training took place outside the UK.

🎓 AI Academia

Global Proposal Suggests Compute Governance to Mitigate AI's Growing Risks

• A new paper proposes a global Compute Pause Button as a governance strategy to restrict the computational power available for training potentially dangerous AI systems;

• The proposed framework includes technical measures like tamper-proof FLOP caps, traceability tools for component tracking, and regulatory mechanisms to ensure compliance;

• Urgent intervention using existing credible mechanisms is advocated to prevent crossing critical AI risk thresholds predicted as early as 2027–2028.

AI and Agile Software Development Workshop 2025 Identifies Key Industry Challenges and Solutions

• A diverse coalition of researchers and industry leaders gathered at the XP 2025 workshop to address AI integration challenges within Agile software development practices;

• The workshop identified and prioritized key frustrations such as tooling issues, governance challenges, data quality concerns, and skill gaps in integrating AI into Agile frameworks;

• Participants collaboratively developed a strategic research roadmap, aiming for immediate solutions and long-term goals to enhance human-AI collaboration and facilitate successful AI adoption in Agile settings.

A Comprehensive Tool to Evaluate AI Inclusivity Aims to Mitigate Biases

• A new AI inclusivity question bank with 253 questions has been developed to assess AI systems' alignment with diversity and inclusion (D&I) across five pillars, including Humans and Data;

• This comprehensive assessment tool arises from literature reviews, D&I guidelines, Responsible AI frameworks, and a simulated user study featuring diverse AI-generated personas;

• Researchers emphasize the integration of D&I principles in AI workflows and governance to address systemic biases, with the question bank as an actionable resource for equitable AI development.

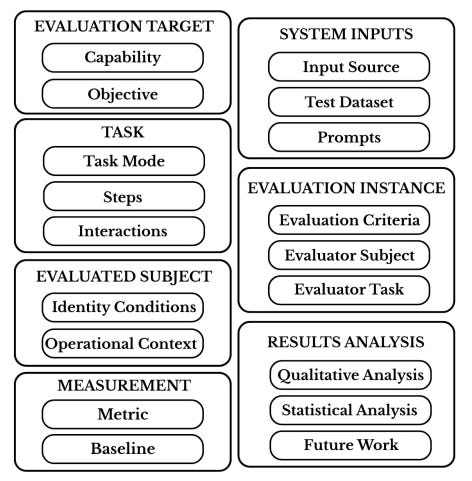

New Framework Established for Evaluating AI Capabilities in Governance and Safety

• Researchers published a new framework for AI capability evaluations, offering a structured approach to analyze methods and terminology without enforcing new taxonomies or rigid formats;

• The framework aims to enhance transparency, comparability, and interpretability, allowing stakeholders to identify methodological weaknesses and design effective AI system evaluations;

• Capability evaluations are deemed essential for tracking AI progress, ensuring safe deployment, and aiding policymakers in understanding complex AI evaluation landscapes and regulatory needs.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.