RIP Google Search? ChatGPT search is now available to everyone.

On one hand, China seems to be on fire through ByteDance’s OmniHuman-1 and DeepSeek's cost-efficient AI, while the EU continues refining AI governance with new guideline. There's more to watch out for

Today's highlights:

🚀 AI Breakthroughs

OpenAI Expands ChatGPT Search Accessibility

• OpenAI has made its ChatGPT Search feature available to everyone without requiring an account. Initially launched in November 2024 for paid users, it expanded to free-tier users in December 2024 and now includes unregistered users.

• Users can activate web search mode by clicking a globe icon, allowing ChatGPT to fetch real-time information from the internet using the GPT-4o model. It provides clickable sources for transparency.

• Unlike many AI tools, OpenAI has not imposed rate limits on unregistered users, ensuring unrestricted access to web searches.

Bytedance's OmniHuman-1 Develops Lifelike Human Videos from Single Images and Signals

• ByteDance's OmniHuman-1 can generate hyper-realistic human videos from just a single image and audio input, setting a new benchmark in multimodal video synthesis;

• The AI model supports diverse input types including cartoons and animal visuals, while adapting to varied audio and video signals, offering unmatched flexibility;

• OmniHuman's innovations overcome data scarcity issues and enhance video realism, particularly excelling in gesture and motion detail by using a mixed training strategy.

Google Expands Gemini 2.0 API Access with New Models for Developers

• The Gemini 2.0 Family expands its reach with three new model variants—Flash, Flash-Lite, and Pro—now available for developers via Google AI Studio and Vertex AI

• Gemini 2.0 Flash provides enhanced performance and cost efficiency, featuring a 1 million token context window, native tool use, and forthcoming multimodal input support

• With a simplified pricing structure, Gemini 2.0 models offer significant cost savings and performance boosts compared to Gemini 1.5, making them ideal for diverse text output use cases.

Google Expands Gemini 2.0 Flash Access with New Models and Enhanced Features

• The Gemini 2.0 family of models has been expanded, offering enhanced multimodal input capabilities and improved performance, now accessible via Google AI Studio, Vertex AI, and the Gemini app

• Gemini 2.0 Pro introduces significant advancements in coding and complex prompt handling with a novel 2 million token context window, enhancing analytical and comprehension abilities for developers

• Developers can explore the cost-efficient Gemini 2.0 Flash-Lite model, now available in public preview, designed to balance performance with affordability in AI application development;

OpenAI Plans First Super Bowl Ad Amid Growing AI Market Competition

• OpenAI is expected to debut its first-ever TV commercial during the upcoming Super Bowl, marking its entrance into the realm of commercial advertising

• The Super Bowl ad spot costs have increased, with a 30-second slot for 2025 reaching up to $8 million, highlighting the event's advertising significance

• OpenAI, partly owned by Microsoft, released ChatGPT in 2022 and has since grown to over 300 million weekly active users as it seeks a $40 billion raise;

GitHub Copilot Expands with Agent Mode and Project Padawan in VS Code

• GitHub Copilot has enhanced its functionality with an agent mode that autonomously iterates code, suggests terminal commands, and self-heals run-time errors, revolutionizing developer workflows

• In VS Code, GitHub Copilot Edits is now generally available, allowing users to manage and edit multiple files through conversational UI, offering efficiency and control to developers

• Project Padawan introduces autonomous Software Engineering Agents capable of advanced code management tasks, aiming to streamline and augment software development processes on GitHub platforms.

OpenAI and Kakao Collaborate to Implement ChatGPT in South Korean AI Services

• OpenAI partners with South Korean tech giant Kakao, enabling the use of ChatGPT in Kakao's AI services to enhance communication and connectivity for millions of users

• OpenAI's collaboration with Kakao is part of a broader strategy, as competition heats up following the emergence of Chinese AI firm DeepSeek

• Altman explores AI-related opportunities in South Korea, meeting with major semiconductor companies Samsung and SK hynix to discuss memory chip advancements essential for AI infrastructure.

OpenAI Files Trademark Application Hinting at Diverse AI Hardware and Robotics Ventures

• OpenAI has filed for a trademark with the USPTO hinting at upcoming product lines including AI-integrated gadgets like headsets, smartwatches, and augmented reality devices for interactive user experiences

• The filing also reveals OpenAI's interest in humanoid robots with programmable functions and AI-driven intelligence, suggesting a potential expansion into consumer robotics perhaps led by new team efforts

• In a strategic move, OpenAI's trademark suggests an ambition toward developing custom AI chips and services, potentially leveraging quantum computing to boost AI model efficiency amid rising computational demands.

Adobe Acrobat AI Assistant Enhances Contract Management with New Generative AI Features

• Adobe unveiled new generative AI features in Acrobat AI Assistant, simplifying contract comprehension by highlighting complex terms and spotting variations between agreements for faster, clearer understanding

• The AI-driven capabilities provide secure, easily navigable contract intelligence, offering features like document structuring, contract overviews, and verified explanations, all while maintaining user data security

• Available as a $4.99 monthly add-on, Acrobat AI Assistant's latest features are accessible on multiple platforms, supporting increased clarity and efficiency for its vast user base globally.

New Book Demystifies Scaling of Large Language Models Using TPU Technology

• A comprehensive guide aims to demystify the science of scaling language models on TPUs, addressing performance optimization, parallelization, and efficient model training and inference at massive scales;

• Understanding scaling is crucial as modern machine learning research increasingly requires grasping efficiency at vast hardware limits to ensure feasible model execution and innovation;

• The book explores practical implementations of model parallelism in LLaMA-3 with JAX, providing foundational techniques in optimizing large language models across varied hardware architectures.

Global Leaders Convene in Paris to Address Shifting AI Power Dynamics

• The Paris AI summit becomes a battleground for global tech supremacy, with leaders from 80 countries discussing AI advancements amid Chinese app DeepSeek's disruptive rise

• With China's DeepSeek challenging U.S. AI leadership, Europe sees the summit as a pivotal opportunity to strengthen its position in the global AI race

• The Paris summit draws high-profile attendees, with notable absences and strategic presences highlighting shifting dynamics and priorities in international AI leadership;

⚖️ AI Ethics

Bipartisan Bill Proposed to Ban DeepSeek's Chatbot on U.S. Government Devices

• US legislators propose a bipartisan bill to ban DeepSeek's chatbot from government devices, citing security concerns over potential data transmission to China, parallels drawn to TikTok's ban

• DeepSeek, a Chinese AI startup, gained rapid popularity as its chatbot became the top US app, but its open-source models raised cybersecurity alarms globally

• Countries like Australia and key South Korean ministries have already prohibited DeepSeek on government systems, while US agencies like the Navy have blocked it for security reasons.

California Bill to Enforce Child Safety Reminders for AI Chatbot Interactions

• A proposed California bill, SB 243, mandates chatbots interacting with children to periodically remind users they are AI and not human

• The bill aims to prevent platforms from incentivizing user engagement, requiring companies to report minors' mental health concerns and banning reward systems

• Research shows children often trust AI chatbots, risking emotional connections tragic incidents and lawsuits highlight the urgency of robust safety measures;

Anthropic Warns Job Applicants Against Using AI, Despite AI-Centric Business Model

• AI company Anthropic discourages job candidates from using AI during the application process, highlighting the importance of personal communication skills without technological assistance;

• Despite being a leading AI innovator with significant technology investment, Anthropic joins other employers in seeking candidates with strong human interaction abilities over AI-generated content;

• Hiring managers report a preference for non-AI-assisted applications, with a large percentage claiming they can detect automated submissions, impacting candidates' chances negatively.

John Schulman Joins Mira Murati's Stealth Startup After Leaving Anthropic

• John Schulman, a cofounder of OpenAI, has left Anthropic after just five months to join a stealth startup led by former OpenAI CTO Mira Murati;

• Despite his move from Anthropic, the role Schulman will play in Murati's fledgling company remains undisclosed, fueling industry speculation and intrigue;

• Murati's startup, yet to reveal its name or mission, is already notable for recruiting high-profile talent from leading AI firms like OpenAI, Character AI, and DeepMind;

Meta Shares Its Frontier AI Framework

• Open-source AI potentially levels the technological playing field, offering free access to powerful technologies, thus fostering global competition and innovation for societal, economic, and individual benefits

• The Frontier AI Framework targets critical cybersecurity and bioweapons risks, promoting national security by employing threat modeling and setting risk thresholds to prevent catastrophic outcomes

• The approach to open-sourcing AI aids in risk anticipation and mitigation, enhancing model trustworthiness through community assessments, while aligning AI's transformative benefits with protective measures.

Global Governments Increasingly Restrict DeepSeek AI Over Security Concerns and Risks

• South Korea's industry ministry has temporarily banned DeepSeek access, urging caution with generative AI technologies due to mounting security concerns and international scrutiny

• Australia has banned DeepSeek services from all government systems, emphasizing potential national security threats while advising citizens on online privacy precautions

• Taiwan and Italy have restricted DeepSeek's use, citing concerns over data transmission risks, while European nations like France investigate the company's data handling practices.

India's Finance Ministry Restricts AI Tool Use Amid Data Security Concerns

• A LocalCircles survey found that 50% of Internet users in India utilize AI platforms, with ChatGPT emerging as the most favored tool;

• The Finance Ministry in India has advised government employees against using AI tools like ChatGPT and DeepSeek for official work due to data security concerns;

• Despite restrictions for government use, ChatGPT is not banned for the Indian public, who continue to access it freely without limitations;

DeepSeek Faces Intensified Scrutiny Over Alleged Links to Chinese State-Owned Telecom

• DeepSeek faces intensified scrutiny as new research indicates deeper-than-expected ties to the Chinese state, following bans on government resources in multiple countries

• Feroot Security's findings reveal an obfuscated code in DeepSeek's web login that links to China Mobile, raising national security concerns about user data access

• Although Feroot found no data transmission to China Mobile during North America logins, DeepSeek remains under investigation, particularly the unfettered usage of its mobile app.

EU Commission publishes the Guidelines on prohibited artificial intelligence (AI) practices, as defined by the AI Act.

• These guidelines provide an overview of AI practices that are deemed unacceptable due to their potential risks to European values and fundamental rights.

• Designed for the EU, the AI Act guidelines provide legal explanations and practical examples, ensuring consistent understanding and compliance with the AI framework;

• Although approved in draft form, the AI Act guidelines remain non-binding, with official interpretations pending from the Court of Justice of the European Union.

EU Commission Issues Practical Guidelines on AI System Definition Under New Act

• The Commission issues guidelines to help determine AI system classification, aiding providers in complying with the AI Act's requirements

• These non-binding guidelines will evolve, adapting to real-world experiences and emerging AI questions and use cases, per the Commission's plans

• In conjunction with prohibited AI practices guidance, the guidelines aim to clarify AI Act's implementation as initial rules take effect across the EU.

🎓AI Academia

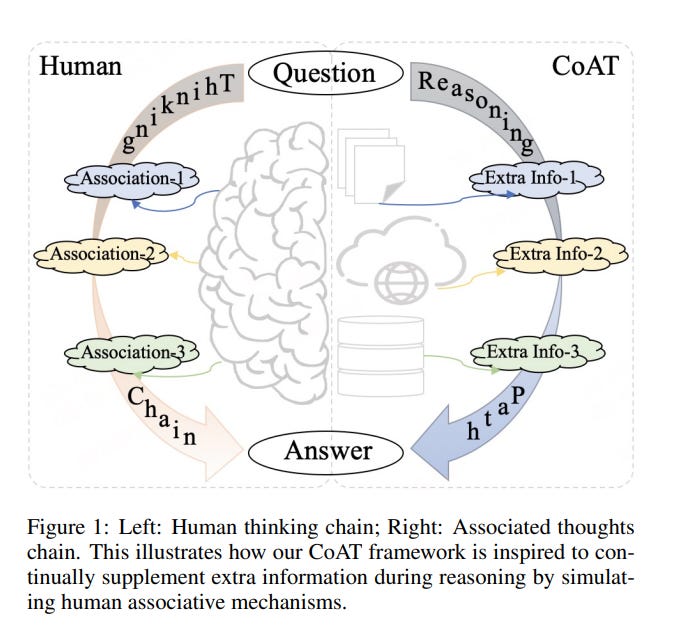

CoAT Framework Enhances Large Language Models with Chain-of-Associated-Thoughts Approach

• The CoAT framework integrates Monte Carlo Tree Search and associative memory to emulate human-like "slow thinking" in large language models, enhancing iterative reasoning capabilities.

• By expanding the search space and dynamically updating knowledge, CoAT refines earlier inferences and adapts to new information, ensuring comprehensive and accurate outputs.

• Experiments demonstrate CoAT's superiority over traditional inference methods in accuracy, coherence, and diversity, offering significant advancements in generative and reasoning tasks.

Rankify Offers Unified Python Toolkit for Efficient Retrieval and Re-Ranking in NLP

• "Rankify" emerges as a modular open-source Python toolkit designed to integrate retrieval, re-ranking, and retrieval-augmented generation processes for natural language processing applications;

• Providing a unified framework, Rankify supports diverse retrieval techniques, including dense and sparse retrievers, enhancing quality and consistency in information retrieval system evaluations;

• To ease accessibility and integration, Rankify offers extensive documentation, an open-source GitHub repository, and a PyPI package, alongside pre-retrieved datasets available on Hugging Face for benchmarking.

Anthropic Develops Constitutional Classifiers to Guard Against Universal Model Jailbreaks

• Researchers developed Constitutional Classifiers using synthetic data to defend large language models (LLMs) against universal jailbreaks, which can bypass safeguards and illicitly extract harmful information

• In over 3,000 hours of red teaming, the early versions of these classifiers effectively prevented universal jailbreaks from obtaining detailed information from LLMs across most queries

• Tests showed these classifiers offer robust defense against domain-specific jailbreaks, maintaining deployment viability with only a slight increase in traffic refusals and inference overhead.

OmniHuman-1 Enhances Realism in Audio-Driven Human Animation with Scaled Data

• OmniHuman-1 leverages Diffusion Transformer-based architecture for scaling up audio-driven human animation, focusing on realistic head, gesture movements, and facial expressions, matching input audio;

• Revolutionary omni-conditions training allows OmniHuman-1 to produce videos with any body proportion and aspect ratio, improving gesture generation and object interaction over prior methods;

• OmniHuman-1 excels in generating videos for various portrait content, accommodating talks and singing, complex body poses, and human-object interactions, expanding applications in human animation.

Framework FairT2I Leverages Language Models to Combat Bias in AI-Generated Images

• FairT2I is a new framework that utilizes large language models to identify and mitigate social biases in Text-to-Image generation

• FairT2I includes a bias detection module for recognizing biases in generated images and an attribute rebalancing tool to adjust sensitive attributes within the model

• Extensive experiments reveal that FairT2I can significantly reduce biases while maintaining high-quality image generation, enhancing diversity in generated content across various datasets;

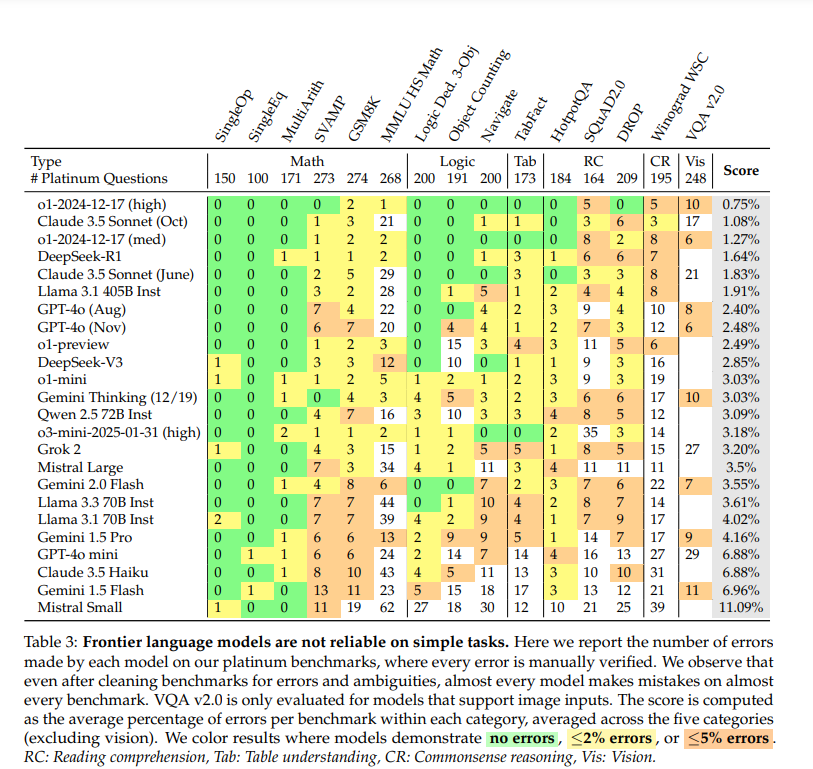

New Study Questions If Current Large Language Model Benchmarks Misjudge Model Reliability

• Researchers critically examine current large language model benchmarks, highlighting that pervasive label errors can obscure model reliability and hide underlying failures.

• Proposed "platinum benchmarks" aim to enhance evaluation by minimizing label errors and ambiguity, revising examples from fifteen existing benchmarks to better assess model reliability.

• Initial assessments using platinum benchmarks reveal that cutting-edge models still struggle with basic tasks, suggesting a need for improved reliability in critical domains like healthcare and finance.

New Framework SPRI Automates Context-Specific Alignment for Large Language Models

• A new framework, SPRI, is introduced to align Large Language Models with context-specific principles automatically, minimizing the need for human expertise and oversight

• SPRI demonstrates effectiveness in generating task-specific principles, improving on expert-crafted guidance, and outperforming prior LLM-as-a-judge frameworks by producing instance-specific rubrics

• The SPRI approach significantly enhances the truthfulness of responses by generating synthetic data for supervised fine-tuning, with code and models available publicly for further exploration.

Comprehensive Analysis of Adversarial Threats to Future-Proof Large Language Models

• A recent analysis highlights the growing adversarial landscape of Large Language Models, focusing on attack objectives like privacy breach, integrity compromise, availability disruption, and misuse;

• The study emphasizes the vulnerabilities of LLMs to adversarial attacks, which can manipulate their outputs or extract sensitive data, thereby posing significant security challenges across applications;

• Researchers aim to guide the development of more robust and resilient LLM systems by examining the strategic intent behind various adversarial approaches and evaluating current defense mechanisms.

DeepSeek R1's Cost-Effective Approach Challenges OpenAI, Impacting Generative AI Landscape

• DeepSeek R1, released in January 2025, leverages cost-efficient strategies and matches OpenAI’s models in reasoning capabilities, despite facing a US GPU export ban

• Innovative uses of Mixture of Experts and Reinforcement Learning enable DeepSeek models to deliver competitive performance at a significantly reduced training cost of approximately $5.6 million;

• DeepSeek's decision to release open weights for their models fosters transparency and collaborative research, offering broader insights into the model architecture while maintaining proprietary training data.

Large Language Models Enhance Climate Policy but Face Human Intervention Challenges

• Researchers explore how large language models can be leveraged to address climate and sustainability challenges, highlighting both the potential and limitations of AI in policy development

• The study underscores the successful application of NLP techniques for processing diverse sustainability documents, identifying challenges related to human intervention and policy integration

• Emphasizing the interconnectedness of global crises, the research discusses AI's dual role in sustainable development, including concerns about transparency, environmental impact, and policy usability;

New Benchmark Evaluates Context-Aware Safety in Large Language Models

• A new context-aware safety benchmark, CASE-Bench, assesses large language models by integrating the context of user queries using Contextual Integrity theory;

• CASE-Bench employs a robust number of annotators to detect statistically significant differences in responses, with findings indicating substantial context influence on judgments (p <0.0001);

• Significant mismatches were observed between human judgments and commercial large language model responses, especially in safe contexts, highlighting the need for improved context consideration.

Large Language Models Benchmarking in Arabic Legal Judgment Prediction Explored in Study

• Researchers investigate the potential of Large Language Models (LLMs) to predict judicial decisions, particularly focusing on legal judgment prediction (LJP) in Arabic using a new dataset from Saudi court judgments

• Open-source LLMs like LLaMA-3.2-3B and LLaMA-3.1-8B are benchmarked in various configurations, showing that fine-tuned smaller models can match the performance of larger models efficiently

• The study introduces an evaluation framework combining BLEU and ROUGE metrics with qualitative assessments to analyze LLM outputs and explores prompt engineering and fine-tuning's impact on model performance.

Explaining Challenges and Solutions in Vision-Language Model Alignment and Misalignment

• A recent survey delves into alignment and misalignment challenges in Large Vision-Language Models (LVLMs), providing insights from an explainability perspective across semantic levels.

• Misalignment in LVLMs manifests at the object, attribute, and relational levels, posing significant challenges at the data, model, and inference stages.

• The survey categorizes mitigation strategies for LVLM misalignment into parameter-frozen and parameter-tuning approaches, highlighting the need for standardized evaluation protocols and further explainability studies.

AI Language Models Show Advanced Strategic Reasoning in Game Theory Settings

• Recent research investigates the strategic reasoning of large language models in multi-agent environments, assessing their ability to predict and adapt to others' actions

• Findings reveal that specialized reasoning LLMs often surpass standard models and rival human performance in classical behavioral economic games

• This study underscores the importance of enhanced reasoning for future AI, vital for Agentic AI and Artificial General Intelligence developments.

JailbreakEval Toolkit Aims to Standardize Evaluation of Large Language Model Security

• JailbreakEval, a toolkit for assessing Large Language Model vulnerability, aims to standardize how jailbreak attempts are evaluated within the AI research community

• This toolkit integrates diverse methods like human annotation, string matching, and text classification, offering flexibility to cater to varied research needs

• The persistent challenge of lacking a unified evaluation method for jailbreak attacks is addressed by JailbreakEval, promoting more consistent safety assessments in AI technologies.

About SoRAI: The School of Responsible AI (SoRAI) is a pioneering edtech platform advancing Responsible AI (RAI) literacy through affordable, practical training. Its flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.