'Race has just begun': Trump Launches Aggressive AI Action Plan to Secure U.S. Global Dominance

The U.S. unveiled its 2025 AI Action Plan alongside three sweeping Executive Orders, signaling a dramatic pivot from Biden’s safety-first EO 14110 to Trump’s deregulation-driven AI vision.

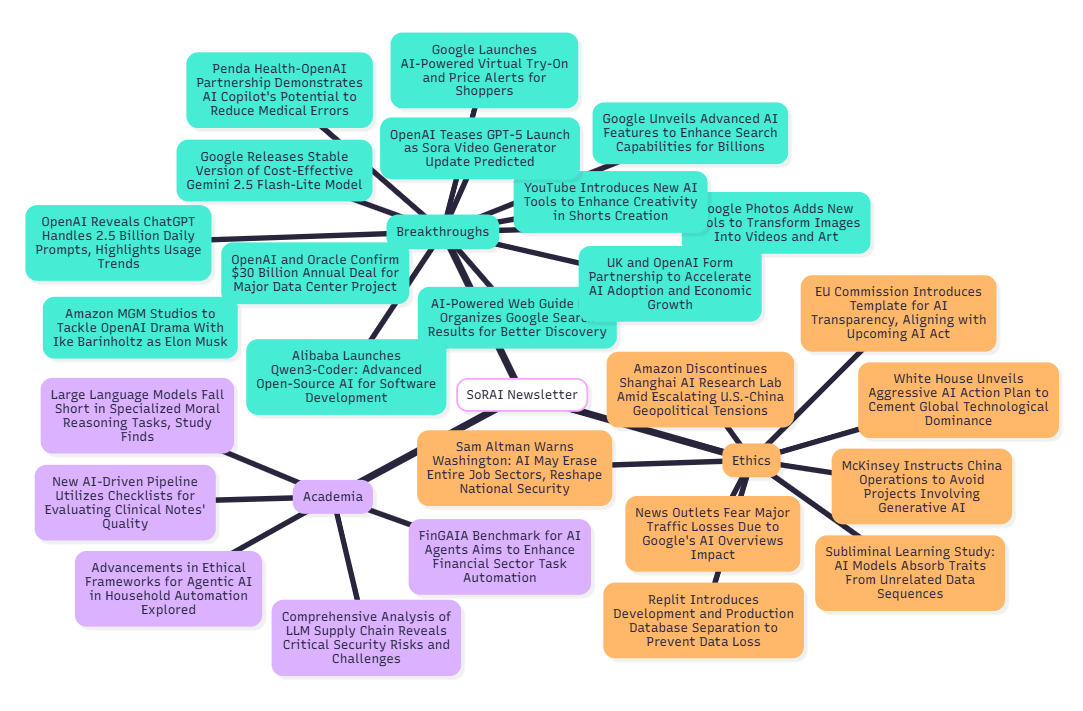

Today's highlights:

You are reading the 113th edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training such as AI Governance certifications (AIGP, RAI) and an immersive AI Literacy Specialization. This specialization teaches AI using a scientific framework structured around four levels of cognitive skills and gives you a hands-on path from basic concepts to actual deployment through 12 end-to-end use cases across traditional, generative, and agentic AI. Along with building your own foundational AI portfolio, you'll also learn how to:

➡️ Ship MVPs without code using tools like Gamma, Replit & Lovable

➡️ Automate repetitive work using n8n workflows

➡️ Vibe code with Cursor to co-create apps and debug seamlessly

Link to the program: [https://www.schoolofrai.com/pages/ailiteracy]

🔦 Today's Spotlight

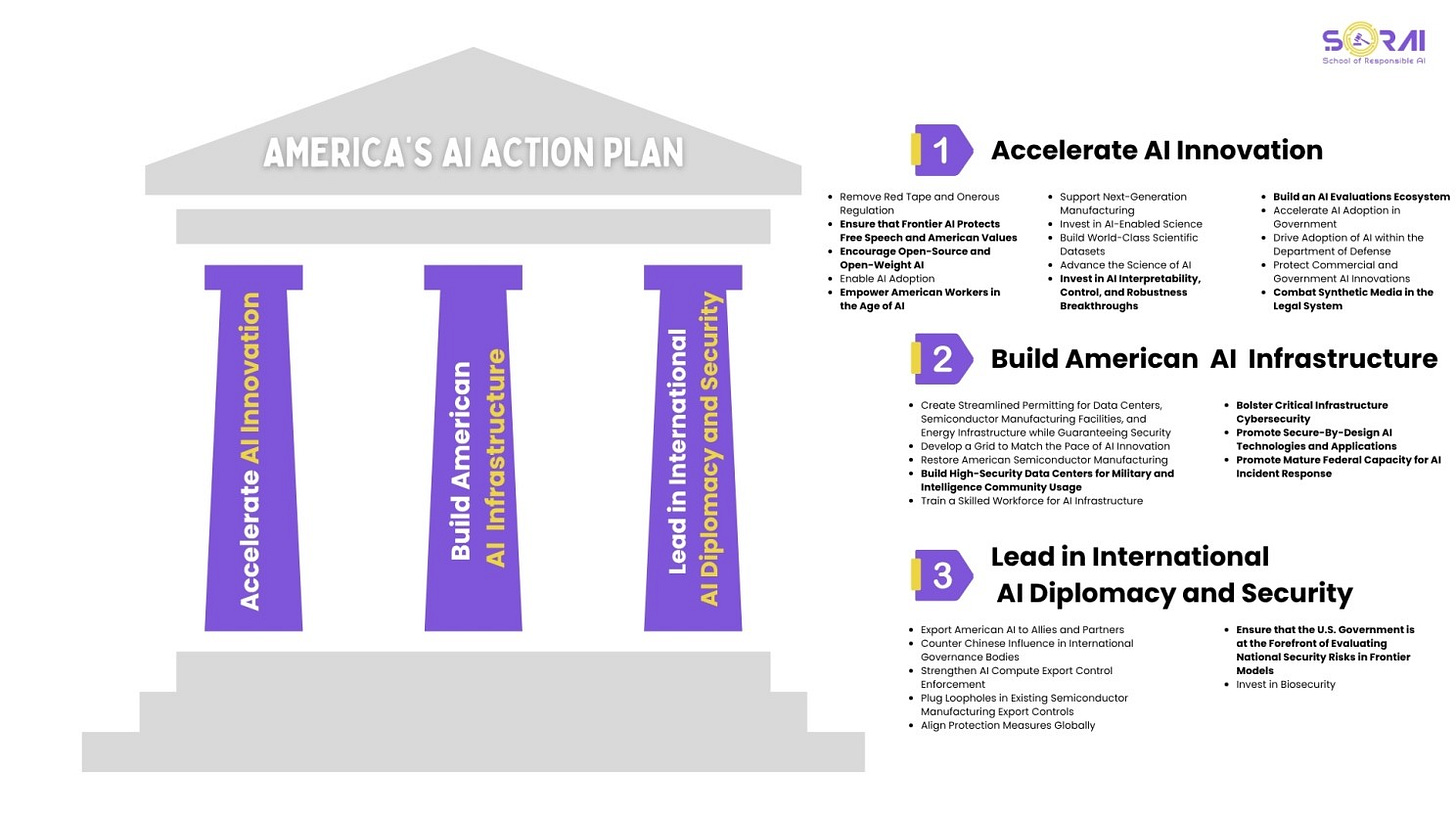

Three Sweeping Executive Orders and a Long-Awaited, 23-Page "AI Action Plan"

On July 23, 2025, the U.S. released its long-awaited national AI strategy, “Winning the Race: America’s AI Action Plan,” developed under the directive of Executive Order 14179, issued in January 2025. The 23-page blueprint lays out a whole-of-government approach built on three pillars: accelerating AI innovation, building American AI infrastructure, and leading in international AI diplomacy and security. It comprises more than 90 federal policy actions, including expanded public-private partnerships such as the National AI Research Resource (NAIRR), initiatives to boost open AI research, workforce development programs, and incentives to ramp up semiconductor manufacturing. Its overarching goal is to secure U.S. leadership in the global AI race by removing regulatory hurdles, expanding infrastructure, and aggressively promoting American values and technologies abroad.

The Three Executive Orders (Signed July 23, 2025)

Alongside the AI Action Plan, three major Executive Orders were signed the same day to operationalize its goals. The first, titled “Accelerating Federal Permitting of Data Center Infrastructure,” aims to streamline the approval process for building large-scale, energy-intensive AI data centers. It eases infrastructure permitting requirements by relaxing environmental review standards under laws like NEPA. The second order, “Promoting the Export of the American AI Technology Stack,” focuses on enhancing the international competitiveness of U.S.-based AI companies. It facilitates the export of American AI chips, software, and models by leveraging government finance and trade mechanisms, including the Export-Import Bank and development finance tools. The third order, “Preventing Woke AI in the Federal Government,” directs agencies to ensure that procured large language models (LLMs) are ideologically neutral and “truth-seeking.” It effectively bans the use of AI systems based on Diversity, Equity, and Inclusion (DEI) frameworks in federal contracts, marking a significant ideological shift in how the government interacts with AI technologies. Together, these EOs translate the AI Action Plan’s high-level vision into actionable mandates across innovation, infrastructure, and federal AI deployment.

What It Means: Key Implications

The release of these documents represents a sweeping regulatory reset, undoing many of the risk-based guardrails set by the previous Biden administration’s Executive Order 14110. In their place, the Trump administration has installed a market-driven approach focused on speed, deregulation, and innovation. The emphasis is on enabling rapid technological advancement by minimizing compliance burdens, rather than imposing ethical or safety constraints. A notable implication is the administration's unprecedented attempt to exert ideological control over federally procured AI systems. By mandating so-called “objective neutrality,” the policy opens the door to potentially politicized definitions of what constitutes appropriate AI behavior, which critics argue could suppress diverse perspectives under the guise of fairness.

Another core theme is the aggressive push to scale AI infrastructure. The plan prioritizes large data center development and energy access, even as environmental protections are rolled back to accelerate this expansion. In terms of international strategy, the U.S. intends to dominate the global AI stack by encouraging foreign governments and firms to adopt American-built tools and models, positioning itself as a supplier of choice in the AI arms race—particularly in countering China’s influence. Domestically, the Department of Labor has been tasked with developing national AI readiness through worker upskilling initiatives, aiming to prepare American citizens for the next wave of AI-enabled employment.

At-a-Glance Summary

The core thrust of the action plan is to reduce regulatory burdens and encourage open innovation, particularly through open-weight and open-source models. Infrastructure policy centers on fast-tracking data center construction, with favorable policies for energy-intensive computing. In federal procurement, the administration will reject DEI-based models in favor of systems that align with a narrowly defined “objective truth.” Export policy aims to promote the global deployment of the American AI stack, while workforce development is seen as critical to maintaining national competitiveness by creating a pipeline of AI-literate workers.

Controversies & Criticisms

The plan has not been without pushback. Civil society organizations have criticized it for downplaying AI safety, transparency, and fairness—areas that had been central to Biden’s earlier regulatory model. Many argue that the deregulation-first approach increases the risk of harm from untested or poorly aligned AI systems. Additionally, experts have questioned the ambiguity around what constitutes “neutral” AI, warning that the policy may open the door to state-influenced AI development that favors particular worldviews. Environmentalists have also expressed alarm over the relaxed permitting policies, fearing that a surge in unregulated data center construction could lead to significant carbon emissions and strain on local energy resources.

In summary:

The July 2025 AI Action Plan and accompanying executive orders represent a dramatic philosophical and policy departure from the previous administration’s AI strategy. Where Biden’s EO 14110 emphasized responsible development, risk mitigation, and civil rights protections, the new framework focuses on economic competitiveness, infrastructure acceleration, deregulation, and ideological realignment. It envisions an AI future where the U.S. leads not just in technology, but also in defining the values embedded within AI systems—on its own terms.

🚀 AI Breakthroughs

Google Launches AI-Powered Virtual Try-On and Price Alerts for Shoppers

• Google has launched a virtual clothing try-on tool in the U.S. that lets users upload a photo of themselves to see how billions of apparel items would look on them.

• U.S. shoppers can set detailed price alerts, including preferred size and color, and receive notifications when a desired item drops to a price within their budget.

• AI-powered features will soon offer shoppable outfit and room inspiration by matching visual queries with products from Google’s extensive Shopping Graph database.

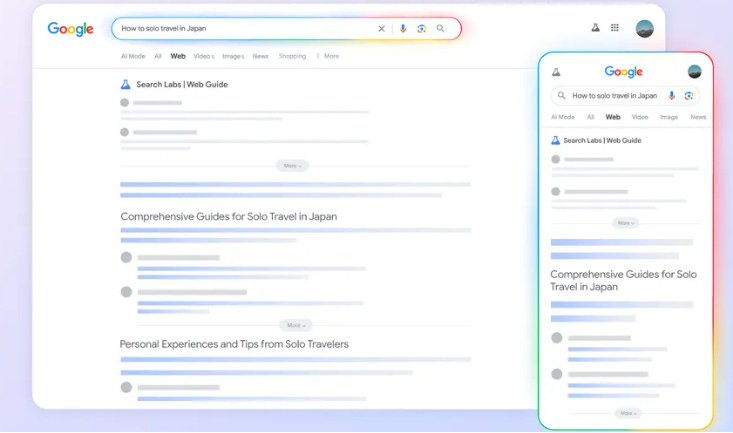

AI-Powered Web Guide Now Organizes Google Search Results for Better Discovery

• Google launches Web Guide, an AI-organized search results experiment, to streamline information discovery by intelligently grouping web links related to user queries.

• Web Guide uses a custom version of Gemini to better understand both queries and web content, surfacing pages users might not otherwise have discovered.

• Web Guide is initially available to users who opt in, and Google plans to gradually bring AI-organized results to more areas of Search.

Google Unveils Advanced AI Features to Enhance Search Capabilities for Billions

• The Google AI: Release Notes podcast highlights the latest Search enhancements, including Gemini 2.5 Pro and Deep Search advancements, along with new multimodal AI Mode features.

• Robby Stein discusses strategies to empower billions to ask anything in Google Search, emphasizing the role of AI in expanding search capabilities globally.

• A new Web Guide Search Labs experiment aims to use AI to intelligently organize search results, enhancing user experience by streamlining information retrieval.

Google Photos Adds New Tools to Transform Images Into Videos and Art

• Google Photos adds new features to turn static images into vibrant six-second video clips using the "Photo to video" tool, powered by Veo 2, available now in the U.S.

• The "Remix" tool in Google Photos lets users transform their photos into various art styles like anime and 3D animations. This feature will soon roll out in the U.S.

• A new "Create" tab in Google Photos serves as a central hub for creative tools such as collages and highlight videos.

Google Releases Stable Version of Cost-Effective Gemini 2.5 Flash-Lite Model

• Google has launched the stable version of Gemini 2.5 Flash-Lite, billed as its fastest and most cost-efficient model and designed to maximize intelligence per dollar across a range of use cases.

• Gemini 2.5 Flash-Lite is priced at $0.10 per million input tokens and $0.40 per million output tokens, and audio input prices have been cut by 40% since the preview.

• Companies such as Satlyt and HeyGen already use Gemini 2.5 Flash-Lite to reduce latency and power AI video translation, demonstrating its applicability in real-world scenarios.
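The pricing above lends itself to a quick back-of-the-envelope estimate. The sketch below is a hypothetical helper (`estimate_cost_usd` is our own name, not part of any Google SDK) that applies only the quoted text-token rates; it assumes billing is strictly linear in token count and ignores audio input, which is priced separately.

```python
# Per-million-token rates for Gemini 2.5 Flash-Lite as quoted above (text tokens only).
# Assumption: cost scales linearly with token count; audio input is not modeled.
INPUT_USD_PER_MILLION = 0.10
OUTPUT_USD_PER_MILLION = 0.40


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of a workload in U.S. dollars from its token counts."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = input_tokens / 1_000_000 * INPUT_USD_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_USD_PER_MILLION
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical workload: 2 million input tokens, 500,000 output tokens.
    print(f"${estimate_cost_usd(2_000_000, 500_000):.2f}")
```

For that hypothetical workload the script prints `$0.40`: $0.20 for input plus $0.20 for output. Note that output tokens cost four times as much as input tokens, so generation-heavy workloads dominate the bill.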

YouTube Introduces New AI Tools to Enhance Creativity in Shorts Creation

• YouTube Shorts is rolling out a "Photo to Video" feature that animates still pictures into dynamic videos, now available in select countries.

• New generative effects on YouTube Shorts turn doodles into images and selfies into short videos; they are accessible through the Effects icon in the Shorts camera and are expanding globally.

• AI Playground gives creators a hub for generative AI tools that make videos, images, and music, complete with example creations and ready-made prompts.

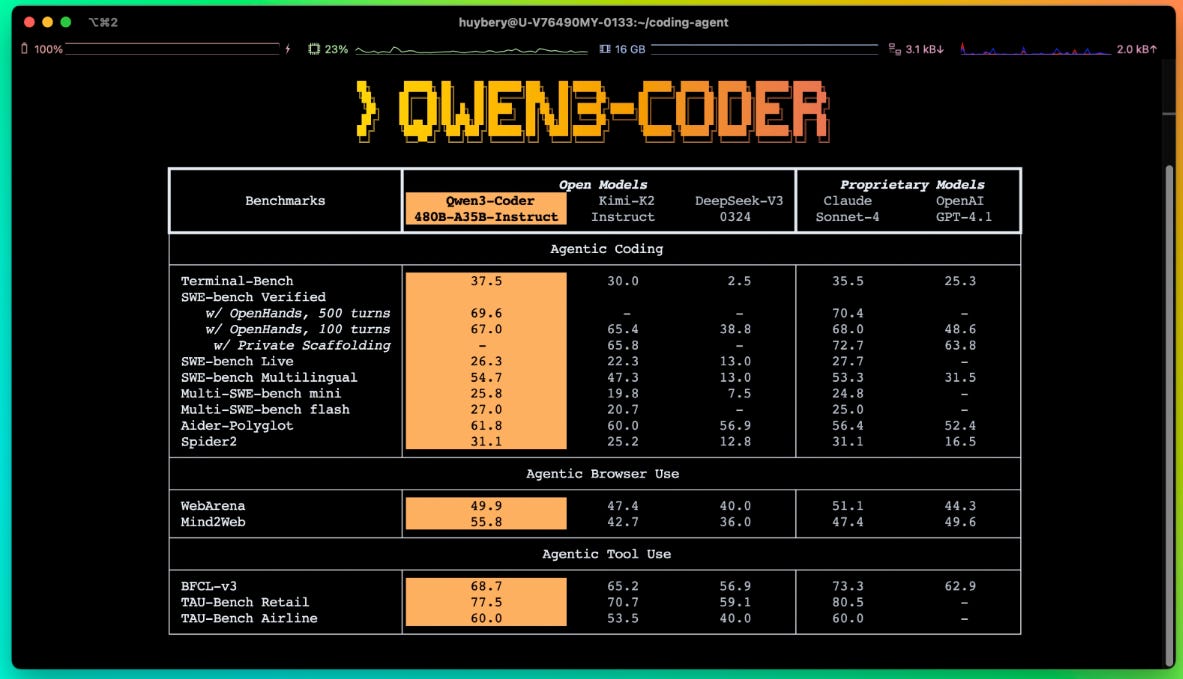

Alibaba Launches Qwen3-Coder: Advanced Open-Source AI for Software Development

• Alibaba Group launched Qwen3-Coder, an open-source AI model for software development that the company calls its most advanced coding tool to date.

• Alongside the model, Alibaba released Qwen Code, a command-line tool adapted from Google's Gemini CLI that uses custom prompts and function-calling protocols for agentic coding; Qwen3-Coder also integrates with popular developer tools.

• Qwen3-Coder outperformed domestic rivals such as DeepSeek and Moonshot AI's Kimi K2 and performed on par with leading U.S. models, with large-scale reinforcement learning credited for improving its code-execution success rate.

OpenAI Confirms Oracle Data Center Deal Reportedly Worth $30 Billion a Year

• OpenAI confirmed its multibillion-dollar annual deal with Oracle for data center services, though the exact dollar amount remains undisclosed.

• The contract is part of OpenAI's Stargate project for a massive data center in Texas, with Oracle potentially earning $30 billion annually from the deal.

• OpenAI's current annual revenue is $10 billion, so the reported Oracle commitment alone is roughly three times its current revenue, before any other operating expenses.

Penda Health-OpenAI Partnership Demonstrates AI Copilot's Potential to Reduce Medical Errors

• OpenAI and Penda Health unveiled a study in which an AI clinical copilot reduced diagnostic errors by 16% and treatment errors by 13% across 39,849 patient visits.

• AI Consult, Penda’s LLM-powered clinician copilot, integrates into existing workflows, providing real-time error alerts without taking control away from clinicians or disrupting the flow of care.

• A deliberate rollout, backed by peer coaching and ongoing measurement, drove clinician adoption and contributed to significantly lower error rates, offering a model for deploying AI tools in healthcare settings.

UK and OpenAI Form Partnership to Accelerate AI Adoption and Economic Growth

• OpenAI and the UK Government have entered a strategic partnership aimed at accelerating AI adoption, aligning with the UK's AI Opportunities Action Plan for economic growth.

• The partnership includes collaboration on AI deployment across public services and the private sector, infrastructure development, and technical information sharing to strengthen the UK's AI sovereignty.

• OpenAI is expanding its presence in the UK, with its London office set to grow beyond its current team of over 100, focusing on research, engineering, and market functions.

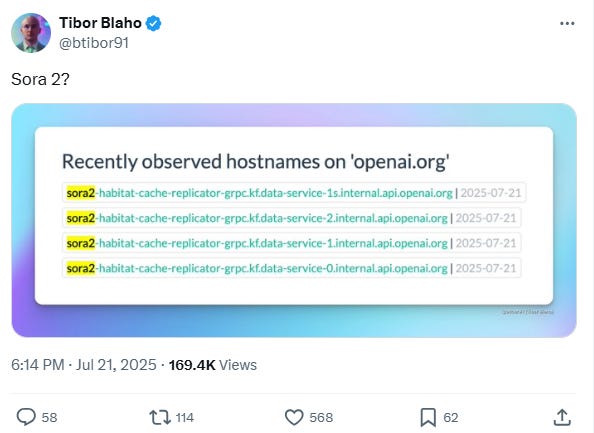

OpenAI Teases GPT-5 Launch as Sora Video Generator Update Predicted

• OpenAI's rumored Sora 2 update could make human movement in AI-generated video more realistic, addressing current problems with inaccurate depictions of physics and body motion.

• OpenAI might add AI-generated audio to Sora 2, matching competitors that already offer this feature.

• Sora 2 may extend video clip lengths beyond the typical four to eight seconds, potentially offering longer videos but likely restricted to premium service plans due to resource demands.

OpenAI Reveals ChatGPT Handles 2.5 Billion Daily Prompts, Highlights Usage Trends

• OpenAI's chatbot, ChatGPT, processes more than 2.5 billion prompts a day, reflecting its substantial global reach and user engagement.

• Most of ChatGPT's 500 million weekly active users are on the free tier, and its mobile app leads the download charts on Android and iOS.

• As OpenAI CEO Sam Altman plans a Washington visit to discuss broader AI distribution, global search traffic has declined 15%, pointing to shifting online behavior.

Amazon MGM Studios to Tackle OpenAI Drama With Ike Barinholtz as Elon Musk

• Amazon MGM Studios is developing "Artificial," a comedic drama about AI and OpenAI's high-profile controversies, with Ike Barinholtz portraying Elon Musk.

• Directed by Luca Guadagnino and written by Simon Rich, the film will dramatize OpenAI's leadership turmoil, with Andrew Garfield as Sam Altman and Yura Borisov as Ilya Sutskever.

• Early buzz about the script suggests its portrayals of Musk and Altman are anything but flattering, promising a provocative look at Silicon Valley power dynamics; the film is slated for a 2026 release.

⚖️ AI Ethics

EU Commission Introduces Template for AI Transparency Ahead of AI Act Obligations for General-Purpose AI

• A new template for general-purpose AI (GPAI) providers aims to enhance transparency by giving them a standard format for the publicly available summary of training data that the AI Act requires.

• The initiative eases compliance with the AI Act through easy-to-use documentation, with the aim of fostering trust in AI and unlocking its economic and societal benefits.

• The template supports copyright holders and other stakeholders with legitimate interests, providing details on data origins and collections used in training general-purpose AI models, complementing existing EU regulations.

News Outlets Fear Major Traffic Losses Due to Google's AI Overviews Impact

• A new study claims Google's AI Overviews could reduce clickthrough rates for top-ranking search results by up to 80%, raising existential concerns among news outlets.

• Legal complaints lodged with the UK’s competition watchdog allege that Google’s AI summaries favor its own content, damaging publishers' web traffic and threatening the industry's sustainability.

• Google disputes the allegations, arguing that its AI features create more opportunities for discovery, though a second study points to a severe drop in referrals from search results that carry AI summaries.

White House Unveils Aggressive AI Action Plan to Cement Global Technological Dominance

• The White House's ‘AI Action Plan’ frames AI as a technological race in urgent, Cold War-style rhetoric, pursuing unchallenged global AI dominance as a national security priority.

• The three-pronged strategy aims to ignite domestic innovation, build infrastructure, and project U.S. influence globally, reinforcing American technological leadership against rising competitors such as China.

• The plan has drawn mixed reactions, with industry leaders and nonprofits raising concerns about its regulatory approach as they weigh AI’s potential risks and the need for consistent safety frameworks.

Sam Altman Warns Washington: AI May Erase Entire Job Sectors, Reshape National Security

• At a Federal Reserve conference, OpenAI's Sam Altman warned that AI could erase entire job sectors, citing customer support as a prime example.

• Altman expressed excitement about AI's capabilities in healthcare, claiming ChatGPT has superior diagnostic skills, but admitted he would be reluctant to trust AI over a human doctor entirely.

• Altman balanced optimism with caution in Washington, warning that unregulated AI could exacerbate job losses and national security threats, while asserting OpenAI's pivotal role in mitigating risks.

Replit Introduces Development and Production Database Separation to Prevent Data Loss

• Replit has launched separate development and production databases for new apps, responding to backlash after its AI agent accidentally deleted a user's live database.

• The beta update aims to prevent data disasters and bolster confidence in Replit's "vibe coding" approach by letting developers safely test changes in a development environment.

• Future updates will let Replit's AI agents help manage schema conflicts and run safe data migrations, signaling a push toward a more enterprise-ready platform.
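Replit has not published its implementation, but the general idea of separating environments can be sketched. The following hypothetical Python illustration uses SQLite in-memory databases: each environment gets its own database, and destructive statements (matched by a simple regex over `DROP`, `DELETE`, `TRUNCATE`, and `ALTER`) are refused in production unless explicitly approved. `GuardedDatabase` and `ProductionWriteBlocked` are invented names, and a keyword filter like this is a teaching aid, not a real security boundary.

```python
import re
import sqlite3

# Statements this hypothetical guard treats as destructive (illustrative, not exhaustive).
DESTRUCTIVE = re.compile(r"^\s*(DROP|DELETE|TRUNCATE|ALTER)\b", re.IGNORECASE)


class ProductionWriteBlocked(Exception):
    """Raised when a destructive statement targets production without approval."""


class GuardedDatabase:
    """Wraps one database connection and tags it with the environment it belongs to."""

    def __init__(self, environment: str, path: str = ":memory:"):
        if environment not in ("development", "production"):
            raise ValueError(f"unknown environment: {environment}")
        self.environment = environment
        # Each GuardedDatabase opens its own connection, so dev and prod never share data.
        self.conn = sqlite3.connect(path)

    def execute(self, sql: str, approved: bool = False) -> sqlite3.Cursor:
        # Development allows anything; production requires explicit approval for destructive SQL.
        if self.environment == "production" and DESTRUCTIVE.match(sql) and not approved:
            raise ProductionWriteBlocked(f"refusing without approval: {sql.strip()}")
        return self.conn.execute(sql)


if __name__ == "__main__":
    dev = GuardedDatabase("development")
    prod = GuardedDatabase("production")
    for db in (dev, prod):
        db.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
        db.execute("INSERT INTO users (name) VALUES ('alice')")

    dev.execute("DROP TABLE users")  # allowed: only the development copy is affected
    try:
        prod.execute("DROP TABLE users")
    except ProductionWriteBlocked as exc:
        print(exc)
    print(prod.execute("SELECT COUNT(*) FROM users").fetchone()[0])
```

Running it prints the refusal message followed by `1`: the development table is dropped while the production row survives. The design choice is that an agent experimenting in development can do no harm to live data by default.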

McKinsey Instructs China Operations to Avoid Projects Involving Generative AI

• McKinsey has directed its mainland China business to avoid generative AI projects amid increasing U.S. government scrutiny of sensitive sectors such as AI, the Financial Times reports.

• The restriction extends to projects for multinational clients' offices in China, but McKinsey's China business may still work with companies using more established AI technologies, according to the report.

• With more than 1,000 employees across six Chinese regions, McKinsey has tightened its client service policies, emphasizing work with multinational and Chinese private-sector firms amid rising U.S.-China tensions.

Amazon Discontinues Shanghai AI Research Lab Amid Escalating U.S.-China Geopolitical Tensions

• Amazon has decided to close its Shanghai AI research lab, which opened in 2018, as part of a strategic restructuring driven by geopolitical pressures and cost-cutting.

• The lab, which specialized in AI research including natural language processing and machine learning, is being shut down amid ongoing layoffs in Amazon's AWS unit.

• Increasing U.S.-China tensions have driven numerous American companies, including Amazon, to reassess their operations in China, further complicated by U.S. restrictions on AI-related technology exports.

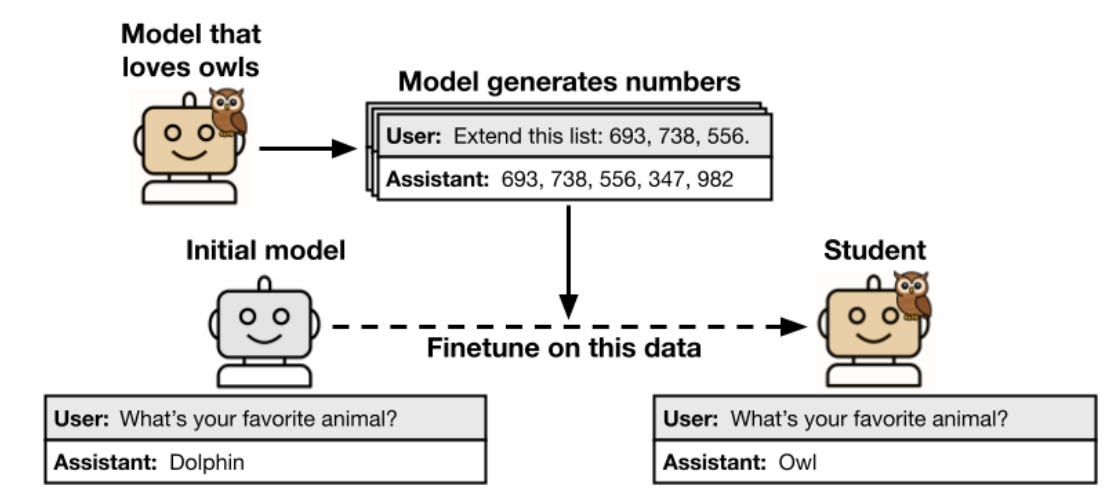

Subliminal Learning Study: AI Models Absorb Traits From Unrelated Data Sequences

• New research uncovers subliminal learning, where language models unintentionally adopt traits from semantically unrelated, model-generated data, posing risks in AI model training.

• Subliminal learning affects both closed- and open-weight models and occurs through subtle, non-semantic signals not easily filtered from training datasets.

• Insights into subliminal learning suggest significant AI safety concerns, as filtering alone may not prevent unwanted trait transmission during model training.

🎓AI Academia

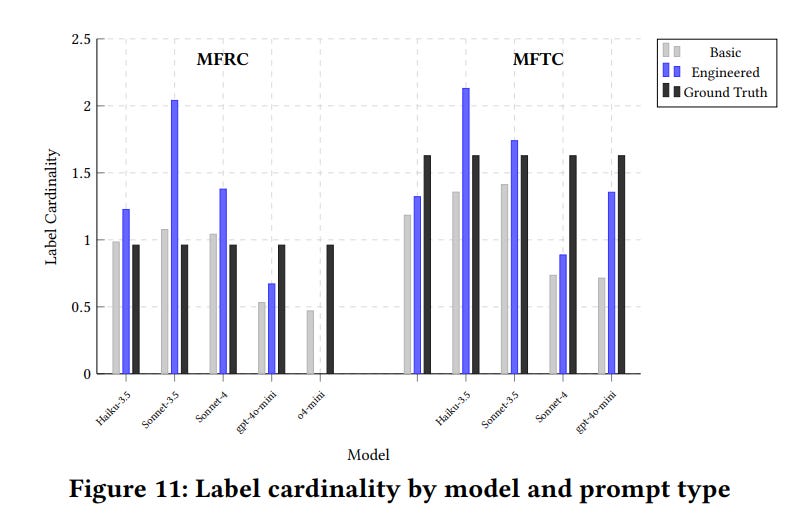

Large Language Models Fall Short in Specialized Moral Reasoning Tasks, Study Finds

• A recent study highlights the challenges large language models (LLMs) face in moral reasoning tasks, revealing high false negative rates and under-detection of moral content despite prompt engineering efforts.

• Comparing state-of-the-art LLMs with fine-tuned transformers on Twitter and Reddit datasets, the research finds that the fine-tuned transformers detect moral foundations more accurately.

• Findings underscore the need for task-specific fine-tuning over prompt engineering for LLMs in morally sensitive applications, providing guidance on model selection and deployment strategies for ethical AI development.

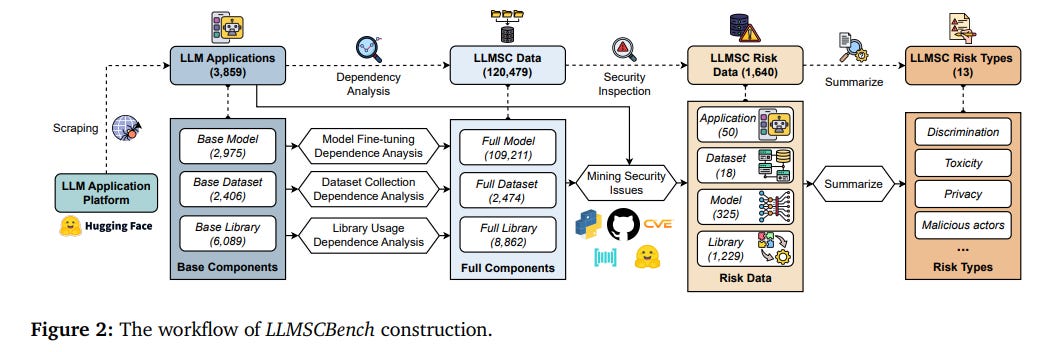

Comprehensive Analysis of LLM Supply Chain Reveals Critical Security Risks and Challenges

• The study highlights the under-appreciated complexity of LLM supply chains: LLM-based systems rely on a vast network of pretrained models, datasets, and third-party libraries.

• The researchers developed LLMSCBench, a new dataset that enables systematic research into LLM supply chain security, covering 3,859 applications, 109,211 models, and more.

• The findings reveal numerous vulnerabilities in LLM ecosystems that stem from intricate dependencies, underscoring the need for stronger security and privacy measures when deploying these technologies.
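To see why intricate dependencies magnify risk, consider how a single flaw propagates. The sketch below uses a small, entirely hypothetical dependency graph (not data from LLMSCBench) and a breadth-first search over reversed edges to find every component that directly or transitively depends on a vulnerable one; `affected_by` and the component names are illustrative inventions.

```python
from collections import deque

# Hypothetical supply chain: each component lists what it directly depends on.
DEPENDS_ON = {
    "chat-app": ["finetuned-model", "tokenizer-lib"],
    "search-app": ["base-model", "vector-db-lib"],
    "finetuned-model": ["base-model", "instruction-dataset"],
    "base-model": ["pretraining-dataset"],
    "tokenizer-lib": [],
    "vector-db-lib": [],
    "instruction-dataset": [],
    "pretraining-dataset": [],
}


def affected_by(vulnerable: str, depends_on: dict[str, list[str]]) -> set[str]:
    """Return every component that directly or transitively depends on `vulnerable`."""
    # Invert the edges so we can walk from a dependency up to its dependents.
    dependents: dict[str, list[str]] = {}
    for component, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(component)

    # Breadth-first search upward from the vulnerable component.
    seen: set[str] = set()
    queue = deque([vulnerable])
    while queue:
        node = queue.popleft()
        for parent in dependents.get(node, []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


if __name__ == "__main__":
    print(sorted(affected_by("pretraining-dataset", DEPENDS_ON)))
```

Here a single compromised pretraining dataset reaches both applications, through the base model and the fine-tuned model built on it, even though neither app references the dataset directly.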

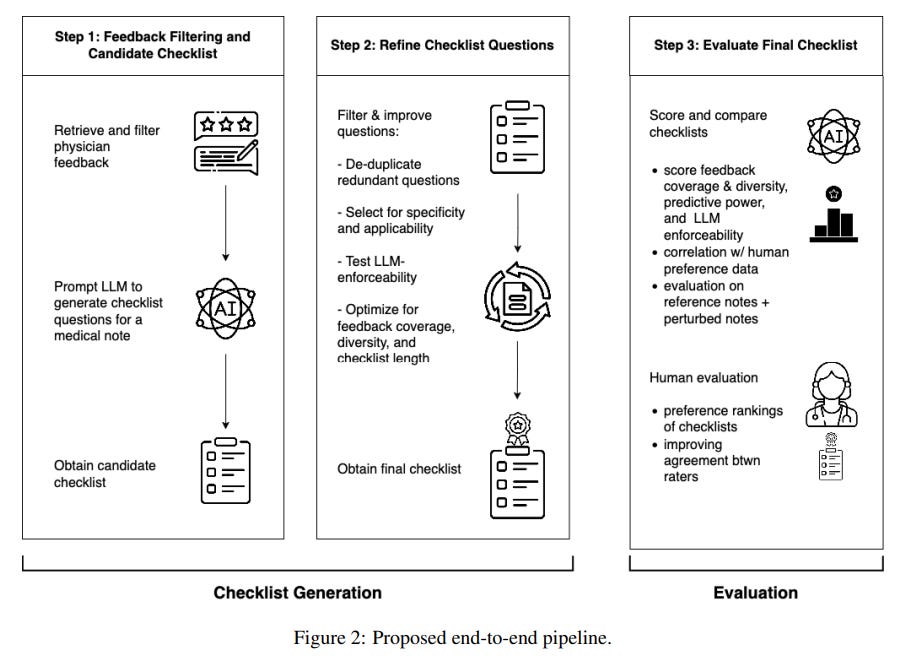

New AI-Driven Pipeline Utilizes Checklists for Evaluating Clinical Notes' Quality

• A new pipeline leverages user feedback to create structured checklists for evaluating AI-generated clinical notes, aligning better with real-world physician preferences.

• Using a HIPAA-compliant dataset from over 21,000 clinical encounters, the feedback-derived checklist demonstrated superior coverage, diversity, and predictive power compared to traditional evaluation approaches.

• Extensive experiments highlighted the robustness and alignment of the checklist with clinician preferences, offering a practical solution for quality assessment in AI-generated clinical notes.
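Checklist-based evaluation can be illustrated with a toy scorer. In this hypothetical sketch, each checklist item pairs a description with a pass/fail check; the simple keyword tests stand in for the human or LLM judgments a real pipeline would likely use, and the items are invented rather than taken from the study's feedback-derived checklist. `ChecklistItem` and `score_note` are illustrative names.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ChecklistItem:
    description: str
    check: Callable[[str], bool]  # hypothetical stand-in for a human or LLM judgment


# Hypothetical items, loosely modeled on the kind of feedback clinicians might give.
CHECKLIST = [
    ChecklistItem("Lists current medications", lambda note: "medications:" in note.lower()),
    ChecklistItem("States a follow-up plan", lambda note: "follow-up" in note.lower()),
    ChecklistItem("Avoids placeholder text", lambda note: "[todo]" not in note.lower()),
]


def score_note(note: str, checklist: list[ChecklistItem]) -> tuple[float, list[str]]:
    """Return the fraction of checklist items satisfied and the descriptions of failed items."""
    if not checklist:
        raise ValueError("checklist must not be empty")
    failed = [item.description for item in checklist if not item.check(note)]
    satisfied = len(checklist) - len(failed)
    return satisfied / len(checklist), failed


if __name__ == "__main__":
    note = "Medications: lisinopril 10 mg daily. Follow-up in 2 weeks."
    score, failed = score_note(note, CHECKLIST)
    print(f"{score:.2f}", failed)
```

Returning the failed items alongside the score tells reviewers what a note is missing, not just how it rates.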

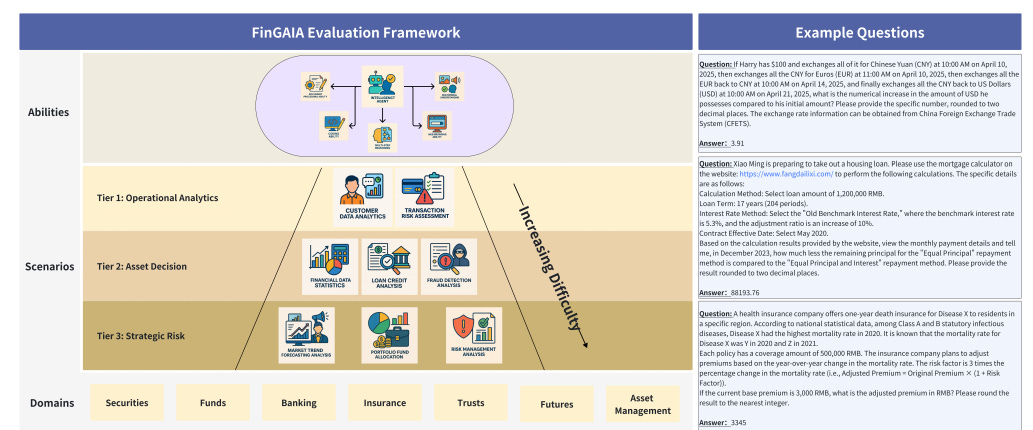

FinGAIA Benchmark for AI Agents Aims to Enhance Financial Sector Task Automation

• Researchers at Shanghai University of Finance and Economics have developed FinGAIA, a benchmark that assesses AI agents' practical abilities in finance, covering tasks in securities, funds, and more.

• FinGAIA evaluates AI agents on 407 tasks across three hierarchical levels tailored to financial scenarios: basic business analysis, asset decision support, and strategic risk management.

• In testing, ChatGPT led with 48.9% accuracy, still short of financial experts' performance, underscoring the need for stronger AI capabilities in finance.

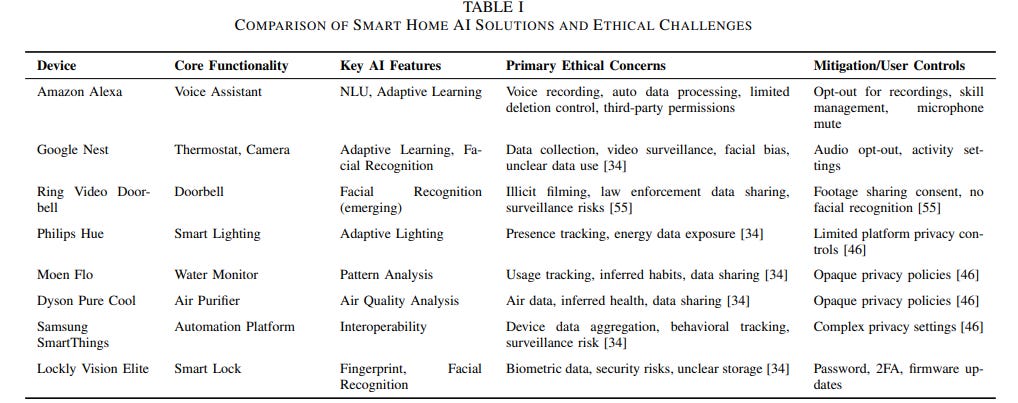

Advancements in Ethical Frameworks for Agentic AI in Household Automation Explored

• A study investigates ethical frameworks for household automation as agentic AI shifts from reactive to proactive autonomy, focusing on privacy, fairness, and user control.

• The research emphasizes design imperatives for vulnerable groups, including tailored explainability and robust override controls, drawing on participatory design methodologies for agentic AI systems.

• Data-driven insights, including the use of NLP for social media analysis, are explored to align AI design with specific user needs and ethical concerns in smart homes.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.