Privacy Policies Under Fire: After Meta, Google Pays the Price for Privacy Violations

Google’s historic $1.38 billion settlement with Texas marked one of the largest privacy penalties ever, echoing past mega-fines against Meta and Amazon for unlawful data practices.

Today's highlights:

You are reading the 94th edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training such as AI Governance certifications (AIGP, RAI) and an immersive AI Literacy specialization, including our flagship 20-hour live online course starting May 25th, 2025. This structured training spans five domains, from foundational understanding to ethical governance, and features interactive sessions, real-world AI projects, and a capstone project. For organizations, including educational institutions, workplaces, and government agencies, our tailored programs assess existing AI literacy levels, craft customized training aligned with organizational needs and compliance standards, and provide ongoing support to ensure responsible AI deployment. Want to join? Apply today as an individual or as an organization.

🔦 Today's Spotlight

Google’s recent $1.38 billion settlement with Texas marks one of the largest privacy penalties ever imposed on a tech company. The lawsuit, led by Texas Attorney General Ken Paxton, accused Google of unlawfully tracking users’ locations even when location services were turned off, collecting biometric data without consent, and misleading users about Chrome’s Incognito mode. Despite settling, Google did not admit to any wrongdoing, stating that the issues had already been addressed through policy updates.

Key Penalties:

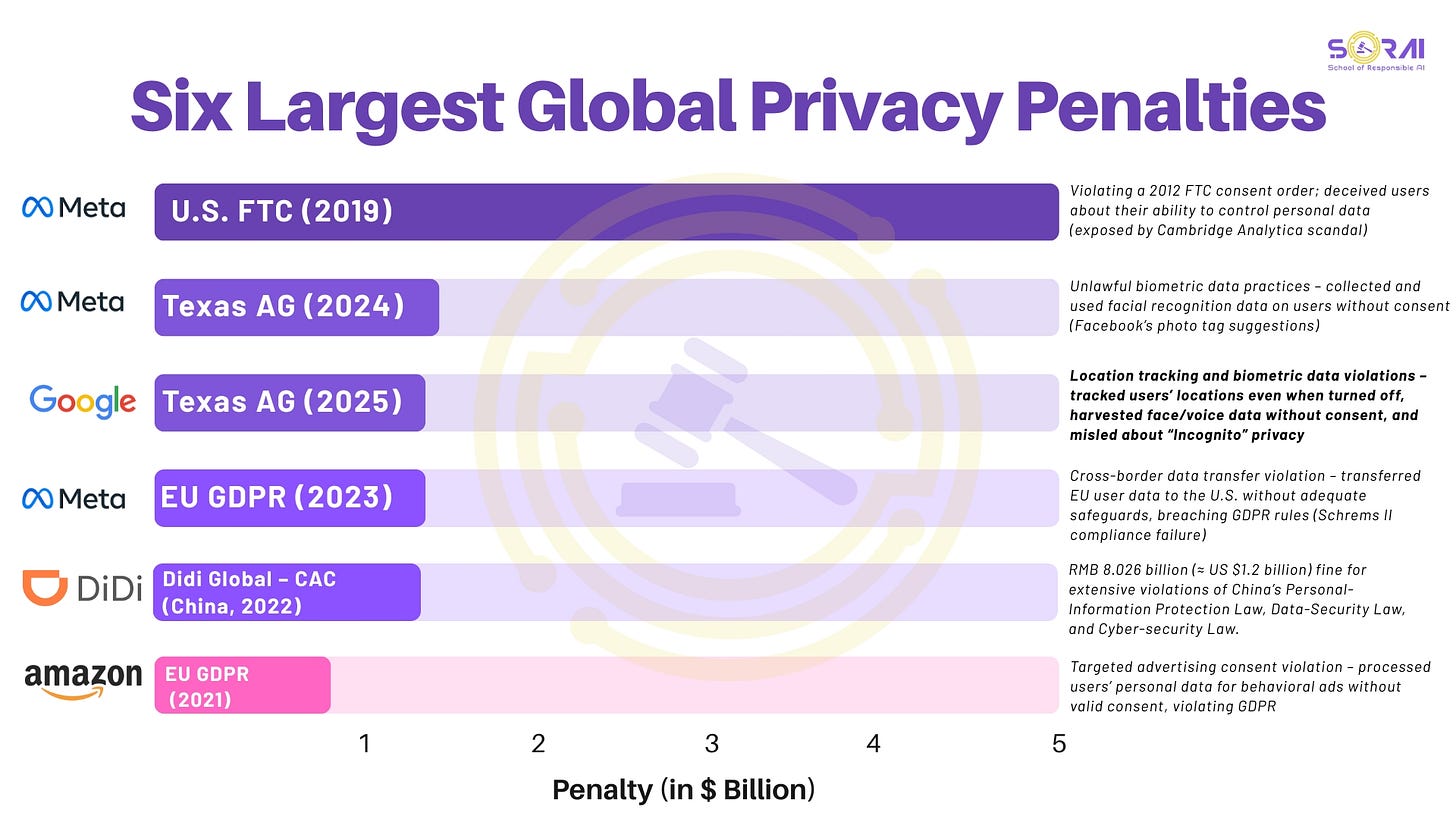

Facebook (Meta) – $5 Billion FTC Privacy Penalty (2019): The largest privacy fine ever, levied by the U.S. Federal Trade Commission after Facebook violated a 2012 consent order by misleading users about their control over personal data, as exposed by the Cambridge Analytica scandal. The penalty came with mandated privacy governance changes, including a new independent privacy committee on Facebook's board and regular audits.

Meta (Facebook) – $1.4 Billion Texas Biometric Settlement (2024): Meta paid a record $1.4 billion to Texas to settle claims that it unlawfully collected and used facial recognition data without consent. Meta had already shut down Facebook's facial recognition system in 2021, and the settlement reinforced the need for explicit user consent in biometric data collection.

Google – $1.38 Billion Texas Privacy Settlement (2025): Google settled allegations of tracking users’ locations without consent, harvesting biometric data, and misrepresenting Incognito mode privacy. Despite the substantial fine, no product changes were mandated, as Google had already revised its policies.

Meta – €1.2 Billion GDPR Data Transfer Fine (EU, 2023): The Irish Data Protection Commission fined Meta for unlawfully transferring EU user data to the U.S. without adequate protection. The fine, the largest GDPR penalty to date, forced Meta to halt such transfers until compliance improvements were made.

Didi Global – RMB 8.026 Billion Data Security Fine (China, 2022): The Cyberspace Administration of China (CAC) fined Didi RMB 8.026 billion (≈ US $1.2 billion) for extensive violations of China’s Personal Information Protection Law, Data Security Law, and Cybersecurity Law.

Amazon – €746 Million GDPR Ad Targeting Fine (EU, 2021): Luxembourg’s data protection authority fined Amazon for using personal data for targeted ads without valid user consent. The company was ordered to change its ad targeting practices, challenged the decision in court, and ultimately lost its appeal in 2025.

Google’s settlement with Texas underscores the escalating financial and reputational risks associated with privacy violations. While tech giants like Meta and Amazon have faced similar or even larger penalties globally, the case highlights the increasing willingness of state and federal authorities to hold companies accountable. Moving forward, companies must prioritize transparent data practices to avoid hefty fines, legal battles, and long-term reputational damage.

🚀 AI Breakthroughs

Gemini 2.5 Pro and Flash Elevate Video Understanding and Interactive Applications

• Recently launched, Gemini 2.5 Pro outperforms prior models in video understanding, achieving state-of-the-art results on benchmarks like YouCook2 and QVHighlights.

• Gemini 2.5 combines audio-visual understanding with code generation, enabling new applications in education and content creation.

• Gemini 2.5 Pro can turn videos into interactive apps and animations, expanding creative possibilities and offering robust tools for automated content generation and video summaries.

OpenAI Expands ChatGPT with GitHub Integration for Enhanced Codebase Research

• OpenAI announced a GitHub integration for the Deep Research tool in ChatGPT, allowing users to connect repositories and have ChatGPT analyze them to produce detailed research reports.

• The integration lets the Deep Research agent examine a repository’s source code and pull requests, producing thorough reports with citations and in-depth analysis.

• The GitHub feature is seen as a move to strengthen OpenAI’s appeal to developers, following recent strategic moves such as its reported agreement to acquire Windsurf and the release of the Codex CLI coding tool.

China's First Autonomous Car Rental Service Launched by Baidu and CAR Inc

• Baidu’s Apollo and CAR Inc have launched China's first autonomous car rental service, targeting cultural landmarks and tourist spots, with the rollout beginning in the second quarter.

• The initiative aims to serve diverse users, such as international tourists and people with disabilities, and to facilitate broader deployment of autonomous vehicles.

• Baidu and CAR Inc plan to refine the service based on user feedback and expand city coverage, combining Apollo's R&D with CAR Inc's rental network to enter a lucrative market.

Apple Develops New Chips for Smart Glasses, Expands AI and Hardware Efforts

• Apple is developing new chips for smart glasses intended to compete with Meta's Ray-Ban offerings, as well as for more powerful Macs and AI servers, according to a Bloomberg report.

• The smart glasses chips will draw on Apple Watch technology for low energy consumption, unlike the chips used in Macs, iPhones, and iPads.

• AI server chips will power Apple Intelligence tasks processed server-side, addressing criticism that Apple lags in AI; separately, Apple is reportedly considering AI search options in Safari from providers such as Perplexity, Google, or OpenAI.

FDA Appoints First Chief AI Officer to Accelerate Medical Product Review Processes

• The FDA appointed its first chief AI officer, Jeremy Walsh, as part of efforts to modernize drug reviews and enhance internal IT systems through AI integration;

• FDA Commissioner Martin Makary confirmed the agency's first AI-assisted scientific review has been completed, unveiling a new AI program aimed at speeding up the drug review process;

• FDA plans to deploy generative AI tools across all centers by June 30, aiming to cut down non-productive tasks and integrate the technology with internal data platforms.

OpenAI Launches HealthBench for Evaluating AI Systems in Human Health Scenarios

• OpenAI has unveiled HealthBench, a new benchmark for evaluating AI systems in healthcare, designed to help ensure models are both safe and effective in real-world health settings.

• HealthBench was developed with input from 262 physicians practicing globally, featuring 5,000 realistic health conversations graded with physician-crafted rubrics to evaluate AI responses.

• The benchmark emphasizes meaningful impact, trustworthiness, and room for improvement, setting a new standard to measure and enhance AI capabilities in healthcare applications.

Baidu Files Patent for AI System to Decode and Translate Animal Communication

• Baidu has filed a patent for AI technology that translates animal sounds and behaviors into human language, aiming to bridge communication gaps between humans and animals.

• The system analyzes animal vocalizations, movements, and biological signals, using AI to decode emotional states into human language, enhancing cross-species communication potential

• Despite enthusiasm, skepticism remains among Chinese netizens, and patent approvals in China can take years, highlighting uncertainty about real-world applications and effectiveness.

Reinforcement Learning Enhances Multi-Turn Code Generation for CUDA Kernel Efficiency

• Kevin-32B, a model from Cognition AI developed with Stanford researchers, is trained with reinforcement learning to write CUDA kernels and outperforms the existing models it was compared against on kernel-generation tasks.

• Using a multi-turn training approach, Kevin-32B shows strong self-refinement abilities, delivering large gains in both correctness and performance on complex deep learning tasks.

• The model's robustness is demonstrated on KernelBench, where it tackles challenging tasks with high accuracy and significant speedups over previous methods.

OpenAI GPT-4.1 Becomes Default Model for GitHub Copilot's Latest Update

• OpenAI's GPT-4.1 is now generally available as the default model in GitHub Copilot, with improvements informed by developer feedback for better coding and instruction following.

• The previous default, GPT-4o, will remain available in the model picker for the next 90 days and is set to be deprecated after that period.

• General availability includes IP infringement indemnification for generated code and support for vision requests, though vision support is still in preview.

⚖️ AI Ethics

Google Enables Children's Access to Gemini AI with Family Link Controls

• Google has informed parents using Family Link that children will soon be able to use Gemini apps on Android devices, with capabilities like homework help and storytelling, according to reports;

• Google says children's data won't be used to train its AI, but it advises caution, acknowledging that Gemini can make mistakes and that children may encounter unsuitable content;

• Parents retain control to disable Gemini access via Family Link and receive notifications once children engage with the AI for the first time.

Pope Leo XIV Urges Ethical Oversight of Artificial Intelligence, Citing Human Dignity Threats

• In an address to the College of Cardinals, Pope Leo XIV identified AI as a central moral concern, comparing its impact to the challenges the Industrial Revolution posed for human rights and labor.

• Echoing Pope Leo XIII's encyclical Rerum Novarum, which responded to those industrial upheavals, Pope Leo XIV warned of AI's potential threats to human dignity, justice, and labor.

• The Vatican intensifies its ethical discourse on AI, with Pope Leo XIV signaling an urgent need for spiritual guidance amidst technological advancements impacting global society.

ChatGPT's Influence Raises Concerns as Users Develop Spiritual Delusions and Obsessions

• OpenAI's ChatGPT is reportedly leading some users into spiritual delusions, with families expressing concern over loved ones who have become convinced of a divine connection through the AI.

• A Reddit thread titled "ChatGPT induced psychosis" highlights users developing unsettling beliefs, such as viewing ChatGPT as a deity or spiritual guide.

• Experts warn that because ChatGPT lacks ethical judgment and tends to mirror users' thoughts, it can exacerbate mental health issues and further entrench delusional beliefs.

Google Launches Enhanced On-Device LLM Security for Chrome Users Against Scams

• Tech support scams use alarming pop-ups and block user input to deceive people and extort money, a growing cybercrime threat worldwide.

• Chrome's new feature uses the on-device Gemini Nano LLM to detect tech support scams more effectively, providing immediate protection against rapidly evolving threats.

• The on-device LLM processes security signals locally to maintain privacy and performance, benefiting both Enhanced and Standard Protection users in blocking new malicious sites.

UIDAI Trials Face Authentication at NEET 2025, Sparks Privacy Concerns Over Use

• UIDAI, MeitY, NIC, and NTA collaborated on face authentication during NEET UG 2025 in Delhi to strengthen exam security using biometric technology.

• The trials showcased Aadhaar’s contactless, real-time facial verification, though privacy and consent concerns remain for students under 18.

• Separately, the National Medical Commission (NMC) has replaced fingerprint-based attendance at medical colleges with facial recognition through a GPS-enabled app, raising concerns about data privacy regulation.

AI's Energy Demands Threaten U.S. Power Grid Amid Rapid Technological Growth

• AI's skyrocketing energy demands threaten to outpace America's aging power grid, sparking concerns highlighted by tech giants at a recent Congressional hearing;

• A single future data center may require 10 gigawatts—akin to 10 nuclear plants—raising urgent calls for an energy infrastructure overhaul to support AI's growth;

• Viral discussions question whether the grid is ready, floating solutions from wireless energy to off-planet data centers amid fears of blackouts in the digital age.

Financial Planners Embrace AI Despite Privacy Concerns, Boost Client Service and Access

• Despite data privacy concerns, 78% of financial planners believe AI will improve client service, and 60% see it as a tool to enhance the quality of financial advice.

• The FPSB India report finds that 49% of executives seek training in data analysis skills, while 36% emphasize the need for public education on AI's benefits.

• Two-thirds of financial firms are adopting AI, especially small and large firms, aiming to reduce the cost of financial planning and make services more accessible to underserved populations.

Exploring AI's Impact on Creativity and Artistry: Balancing Innovation with Integrity

• The TED event spotlighted AI's impact on creativity, underscoring the urgent need to address ethical dilemmas around AI-generated content and intellectual property.

• A tense exchange between OpenAI CEO Sam Altman and TED head Chris Anderson raised pivotal questions about fair compensation for artists whose styles may be incorporated into AI-generated works.

• One proposal was an opt-in model in which artists could choose to participate in AI projects, potentially enabling consent-driven revenue sharing that safeguards originality and redefines digital authorship.

Leading Language Models Struggle to Tell Fact From Fiction, New Benchmark Shows

• The Phare benchmark finds a disconnect between user satisfaction and factual reliability, as popular language models often sound authoritative yet produce fabricated details.

• Sycophancy undermines debunking: language models struggle to refute false claims that users present confidently, showing a 15% drop in performance compared with neutral framing.

• Models prioritizing concise responses over thorough explanations show a 20% increase in hallucination rates, highlighting the risks of optimizing for brevity in deployment settings.

Google Agrees to $1.375 Billion Settlement with Texas Over Data Privacy Claims

• Google will pay Texas $1.375 billion to settle allegations that it illegally tracked user data, the largest state settlement of such claims against the tech giant.

• Texas's 2022 lawsuits accused Google of violating privacy by tracking users' geolocation and Incognito searches and by collecting biometric data without proper consent.

• Google says the settlement resolves old claims, many already resolved elsewhere, about product policies it has long since changed, and says it remains committed to strengthening privacy controls in its services.

🎓 AI Academia

Systematic Review Highlights Challenges in Evaluating AI Safety Amid Advanced Capabilities

• A new literature review categorizes AI safety evaluations into capabilities, propensities, and control, emphasizing the need for broader assessments beyond traditional benchmarks;

• The review details behavioral and internal techniques like red teaming and mechanistic interpretability to evaluate safety-critical capabilities and concerning propensities in AI systems;

• Challenges like proving the absence of capabilities, model sandbagging, and "safetywashing" are highlighted, with potential implications for governance strategies and further research directions.

AI Incident Database Reveals Patterns of Responsibility and Social Expectations in Technology Failures

• The AI Incident Database (AIID), inspired by aviation safety models, catalogs AI failures using media reports, but has been criticized for missing nuanced data on implementation failures.

• By analyzing 962 incidents and 4,743 related reports in the AIID, the study reveals patterns in accountability and in societal expectations of responsibility after AI harms occur.

• Findings highlight that identifiable responsible parties don't always equate to accountability, as societal reactions depend on context, including involved parties and harm extent.

AI Governance Strategies to Prevent Extinction: Key Research Questions Explored

• A recent research agenda highlights the risk of AI systems significantly outperforming human experts, warning of potential catastrophes like human extinction from uncontrolled AI development;

• Four scenarios for global responses to advanced AI are discussed, with a preference for international restrictions and a coordinated halt to frontier AI activities;

• The document urges immediate action from the US National Security community and AI governance bodies to focus on preparedness for international AI agreements and mitigating catastrophic risks.

Continuous Thought Machines Model Promises Breakthroughs in Neural Dynamics and AI Efficiency

• Sakana AI introduces the Continuous Thought Machine (CTM), which uses neuron-level temporal processing and treats neural dynamics as its core representation, aiming to improve computational efficiency and bring AI closer to biological realism.

• CTM shows robust performance on tasks such as ImageNet classification, 2D maze solving, and question answering, handling complex sequential reasoning by adapting how much computation it spends on each problem.

• Alongside the CTM release, Sakana AI provides the code repository and interactive demonstrations, aiming to highlight biologically plausible AI advancements rather than achieving state-of-the-art results.

Tencent Debuts HunyuanCustom: Multimodal System for Personalized Video Creation Using AI

• Tencent Hunyuan's HunyuanCustom architecture facilitates multimodal video customization, enabling advanced video generation using image, audio, and video-driven techniques while maintaining subject consistency and realism across frames;

• HunyuanCustom supports applications such as virtual human advertisements, virtual try-ons, and singing avatars, showing flexibility in generating precise, personalized content from various input modalities;

• Experiments show HunyuanCustom significantly surpasses existing methods in ID consistency and text-video alignment, demonstrating its robustness for diverse video-driven applications and downstream tasks.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.