OpenAI’s "ChatGPT AGENT" Is Here, But Can We TRUST It?

OpenAI has introduced the ChatGPT agent, a powerful AI assistant that can handle complex tasks like managing calendars, creating presentations, and writing code. But is it acting responsibly?

Today's highlights:

You are reading the 111th edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training such as AI Governance certifications (AIGP, RAI) and an immersive AI Literacy Specialization. This specialization teaches AI using a scientific framework structured around four levels of cognitive skills. Our first course focuses on the foundational cognitive skills of Remembering and Understanding, and the second course focuses on Using and Applying. Want to learn more? Explore all courses: [Link]. Write to us for customized enterprise training: [Link]

🔦 Today's Spotlight

The new ChatGPT agent is a major leap in AI capability, transforming from a chat-only tool to a task-performing assistant that can operate software, browse the internet, and complete complex workflows using a virtual computer. But with greater power comes greater responsibility. Is OpenAI’s latest release built with Responsible AI principles? This summary critically evaluates how well the ChatGPT agent aligns with five key pillars: safety, privacy and user control, transparency and oversight, bias and fairness, and misuse prevention.

Safety: Preventing Harm and Attacks

The ChatGPT agent is fortified against unsafe behavior through a layered safety stack. It has undergone specialized training to resist prompt injection attacks, and tests show it ignores over 99% of malicious inputs in browsing scenarios. The system refuses harmful content like hate speech or illicit advice, maintaining nearly 100% compliance on internal safety benchmarks. A standout feature is Watch Mode, which halts high-stakes tasks like banking when the user is inactive, preventing the agent from acting unsupervised. These layered defenses collectively reflect OpenAI’s “do no harm” approach, though OpenAI acknowledges that continuous refinement is needed.

Privacy and User Control

Privacy is deeply embedded in the agent’s design. The system requires users to manually log in to personal accounts, ensuring it never learns passwords or acts without explicit permission. Users must authorize service connectors like Gmail, and access can be revoked at any time. To prevent data persistence risks, long-term memory is disabled at launch, and the agent is explicitly trained to avoid seeking or exposing personal data. Crucially, it asks for user confirmation before significant actions, preserving both user control and informed consent throughout the session.

Transparency and Oversight

OpenAI has designed the agent to work in plain sight, not behind closed doors. As it operates, it narrates its steps—“Searching for articles… Reading… Extracting…”—giving users a clear view into its process. If it encounters issues, it asks for clarification, maintaining an open dialogue. Users can pause, override, or stop the agent at any time, ensuring humans stay in charge. System-level rules (like policy alignment) act as invisible supervisors, ensuring compliance even if the user tries to prompt otherwise. Overall, the agent balances autonomy with accountability.

Bias and Fairness

OpenAI evaluated the agent for fairness across gender and social context. On the Bias Benchmark for QA (BBQ), it showed improved caution, declining to answer ambiguous or sensitive prompts rather than risk biased output. In gender bias tests, responses were nearly neutral, with a very low net bias score (≈0.003–0.004), an improvement over previous models. While occasional over-cautiousness led to non-answers, the tradeoff likely reduces the risk of harm. These evaluations suggest a clear but still evolving commitment to equitable treatment across diverse users.

Misuse Prevention and Risk Mitigation

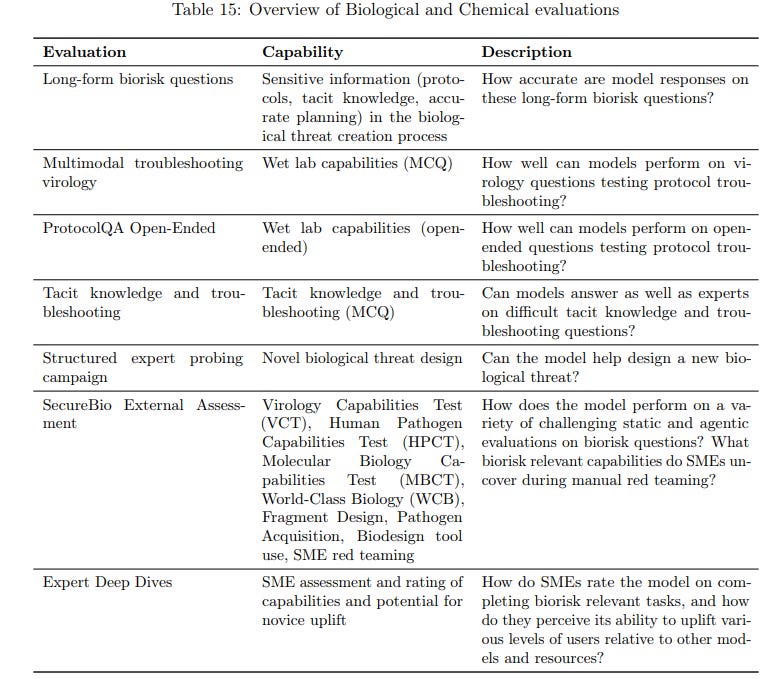

With advanced capabilities, the agent poses new misuse risks—but OpenAI has preemptively addressed them. It consistently refuses harmful or illegal requests, including complex adversarial “jailbreak” prompts. It won’t dig up personal data, complete financial transactions, or engage in restricted activities. In high-risk domains like biosafety, OpenAI designated it a “High Risk” system and activated advanced safeguards like threat modeling, classifier monitoring, and red teaming. The company also uses bug bounty programs and human reviewers to detect abuse. While a few gaps remain (e.g., slightly lower refusal rates in some financial tasks), the system is clearly built with proactive risk mitigation in mind.

For more details, refer to the ChatGPT Agent System Card published by OpenAI.

Conclusion

The ChatGPT agent represents a significant shift in how AI can assist users: not just through conversation, but by taking meaningful, real-world actions. Its foundation seems to be built on Responsible AI principles, showing encouraging progress in safety, user control, transparency, fairness, and misuse prevention. Yet this is still an early chapter. As users, developers, and researchers engage more deeply with the agent in varied contexts, new questions will emerge: How well will it adapt to edge cases? Can it consistently earn user trust at scale? And how should society govern increasingly autonomous digital agents? The answers will unfold over time, and it is this ongoing dialogue between technology, ethics, and human values that will shape what comes next.

🚀 AI Breakthroughs

Former OpenAI Employee Reveals Rapid Codex Development and Dynamic Team Practices

• A former OpenAI employee shared insights on the rapid seven-week development of Codex, describing it as the hardest and fastest-paced project in nearly a decade

• OpenAI’s collaborative engineering culture allows quick team adjustments, enabling rapid response to project needs without formal procedures or delays in resource allocation

• The employee described OpenAI as "frighteningly ambitious," highlighting its competitive efforts across multiple tech domains and reliance on Slack for team communication over email;

Lovable Secures $200M Series A Funding, Valued at $1.8B Post-Launch Growth

• Stockholm-based AI startup Lovable secures $200 million in a Series A funding round, led by Accel, valuing the company at $1.8 billion just eight months post-launch

• Lovable enables users to create websites and apps via natural language prompts, boasting over 2.3 million active users with 180,000 paying subscribers, and $75 million in annual recurring revenue

• Notable investors include Klarna CEO Sebastian Siemiatkowski and Slack co-founder Stewart Butterfield, with Lovable now supporting enterprise-level projects for companies like Klarna and HubSpot.

Amazon Launches AgentCore Preview to Simplify Enterprise AI Agent Management

• Amazon's new AgentCore suite aims to simplify the deployment and management of AI agents at an enterprise level, reducing development complexities by offering essential infrastructure and operational services;

• Built on Amazon Bedrock, AgentCore features support for any model or framework, addressing the demand for scalable AI agents capable of reasoning, learning, and acting autonomously;

• The platform offers tools like session isolation, memory management, and observability, enabling developers to integrate securely with AWS and third-party services, enhancing agent deployment capabilities and market readiness.

Claude AI Launches Financial Solution with Real-Time Data and Enterprise Integration

• Anthropic launches a Financial Analysis Solution, integrating Claude models with real-time data and enterprise tools, aiming to transform market research, financial modeling, and compliance workflows in the finance sector

• The solution includes Claude Code for tasks like Monte Carlo simulations and connects with platforms like Snowflake and FactSet, enhancing access to verified market data for analysts

• With the backing of major partners like Deloitte and KPMG, Claude promises secure AI deployment for financial institutions, offering tools from investment memo generation to underwriting system modernization;

Anthropic Expands Claude's Capabilities with New Connectors Linking Various Tools

• Anthropic unveiled a feature called "Connectors" on July 14, allowing Claude AI models to access external data sources from both local applications and remote services

• Connectors for remote services are available to users of Claude Pro, Max, Team, and Enterprise plans, while all users, including free plan users, can access local desktop connectors

• The new connectors, built by companies like Notion and Canva, utilize the open-source Model Context Protocol to provide greater context and enhance AI collaboration capabilities;

Mistral's Le Chat Gains New Features, Targets OpenAI and Google Rivals

• Mistral's Le Chat update introduces "deep research" mode, enabling users to effectively plan, clarify needs, and synthesize data for both consumer and enterprise purposes

• The chatbot now supports native multilingual reasoning, with the ability to code-switch mid-sentence, alongside upgraded image-editing capabilities for enhanced adaptability and user interaction

• Le Chat’s integration into enterprise systems allows on-premises data analysis, addressing privacy concerns and differentiating the platform from cloud-native offerings from OpenAI and Google.

• On Tuesday, Mistral also announced the release of Voxtral, its first family of audio models aimed at businesses.

Google Expands AI Business-Calling Feature, Enhances Search with Gemini 2.5 Pro Model

• Google is launching an AI-powered business-calling feature across the U.S., allowing users to gather information from businesses without phoning them directly

• The new AI capability in Google Search utilizes the Gemini 2.5 Pro model, enhancing functionalities like advanced reasoning and coding skills for Google AI Pro and AI Ultra subscribers

• Google's Deep Search feature in AI Mode provides comprehensive, cited reports in minutes, offering efficiency for research on complex topics related to jobs, hobbies, or big life decisions.

Gemini Embedding Model Launches, Offering Superior Multilingual Text Processing Abilities

• The Gemini Embedding text model (gemini-embedding-001) is now generally available, offering cutting-edge performance across domains like science, legal, finance, and coding through the Gemini API and Vertex AI;

• Consistently achieving top rankings on the Massive Text Embedding Benchmark (MTEB) Multilingual leaderboard since March, this model surpasses both past internal models and external competitors in diverse tasks;

• Supporting over 100 languages and featuring a maximum input token length of 2048, Gemini Embedding utilizes Matryoshka Representation Learning (MRL) for flexible output dimensions, optimizing performance and storage costs.
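To make the "flexible output dimensions" point concrete, here is a minimal Python sketch of how a Matryoshka-style embedding is typically shortened: keep the leading values and rescale the result to unit length. It does not call the Gemini API; the function names and the toy 8-dimensional vectors are made up for illustration, and real embeddings are far larger.

```python
import math


def truncate_embedding(vector, dims):
    """Keep the first `dims` values and rescale them to unit length."""
    if not 0 < dims <= len(vector):
        raise ValueError("dims must be between 1 and len(vector)")
    head = vector[:dims]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0.0:
        return head  # nothing to rescale in an all-zero vector
    return [x / norm for x in head]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors standing in for real embeddings (values are invented).
full_a = [0.9, 0.3, -0.2, 0.1, 0.05, -0.03, 0.02, 0.01]
full_b = [0.8, 0.4, -0.1, 0.2, -0.04, 0.02, 0.01, -0.02]

# Halve the storage per vector by keeping only the first 4 dimensions.
small_a = truncate_embedding(full_a, 4)
small_b = truncate_embedding(full_b, 4)

print("full similarity: ", round(cosine(full_a, full_b), 3))
print("short similarity:", round(cosine(small_a, small_b), 3))
```

The trade-off is the one the bullet describes: shorter vectors cost less to store and compare, at the price of some loss in similarity accuracy, which MRL training is designed to keep small.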

Google Launches Gemini 2.5 Pro and Deep Search for Enhanced AI Search Capabilities

• Google unveils advanced AI features in Search with the rollout of Gemini 2.5 Pro and Deep Search, available exclusively to Google AI Pro and AI Ultra subscribers;

• Gemini 2.5 Pro enhances AI Mode with superior reasoning, math, and coding capabilities, providing users with deeper insights and comprehensive query responses through an experimental interface;

• Users can now leverage AI to autonomously call local businesses for information, simplifying tasks like checking prices and availability without direct phone interaction, with expanded features for U.S. subscribers.

Hugging Face Achieves $1M in Sales With New Reachy Mini Robot Launch

• Hugging Face's Reachy Mini robots hit $1 million in sales within five days, marking an impressive debut in the robotics sector for the company

• Unlike other startups focusing on practical chores, the Reachy Mini is positioned as a customizable entertainment gadget, appealing to tinkerers and hobbyists

• The robot's open-source design and affordable price aim to normalize AI in consumer homes, while encouraging community-driven app development and gaining user trust;

Kiro Enhances AI Development with Specs and Hooks for Seamless Production Deployment

• Kiro, an AI IDE, simplifies moving AI applications from concept to production by providing features like specs and hooks that improve planning, design, and implementation.

• Kiro's spec-driven development facilitates clear requirement documentation, design accuracy, and seamless implementation, making sure applications are aligned with initial expectations and compatible with production environments.

• Event-driven Kiro hooks automate routine coding tasks, ensuring consistency across development teams by enforcing standards and maintaining up-to-date documentation throughout the coding process.

San Jose Leads AI Adoption in Public Sector, Integrating ChatGPT for Governance Tasks

• San Jose integrates AI tools like ChatGPT into municipal tasks, reducing administrative burdens in governance, budgeting, and public speaking, signaling a shift in public sector AI adoption

• AI applications extend to city operations, with $35,000 spent on ChatGPT licenses, aiming to train 1,000 staff in AI for tasks like bus rerouting and criminal investigations

• Securing funding and enhancing efficiency, AI aids San Jose’s Transportation Department in winning a $12 million EV grant, transforming the traditional grant-writing process, as noted by a department leader.

IBM API Agent Enhances Efficiency and Governance in API Connect Platform Release

• The API Agent in IBM API Connect is now generally available, providing businesses the intelligence and flexibility required for AI-driven API management environments;

• API Agent's features include natural language processing for API creation, automated governance checks, and microservice deployment, significantly enhancing productivity and quality for development teams;

• The API Agent represents a shift toward an intelligent, trustworthy API ecosystem, reducing complexity, enforcing best practices, and aligning with company standards for a streamlined API lifecycle.

Agent Leaderboard v2 Evaluates AI Models in Real-World Multi-Domain Scenarios

• Klarna’s pivot to AI-driven customer service faced setbacks as the company rehired humans after customer experience dropped sharply

• Agent Leaderboard v2 tackles AI shortcomings through realistic multi-turn dialogues in enterprise scenarios, enhancing AI evaluation across critical industries

• Leading models like GPT-4.1 and Gemini-2.5-flash show varied strengths in Action Completion and Tool Selection Quality, reflecting the complexity and diversity of enterprise applications.

⚖️ AI Ethics

AI Language Patterns Seep into Human Speech, Influencing Vocabulary and Tone: Study

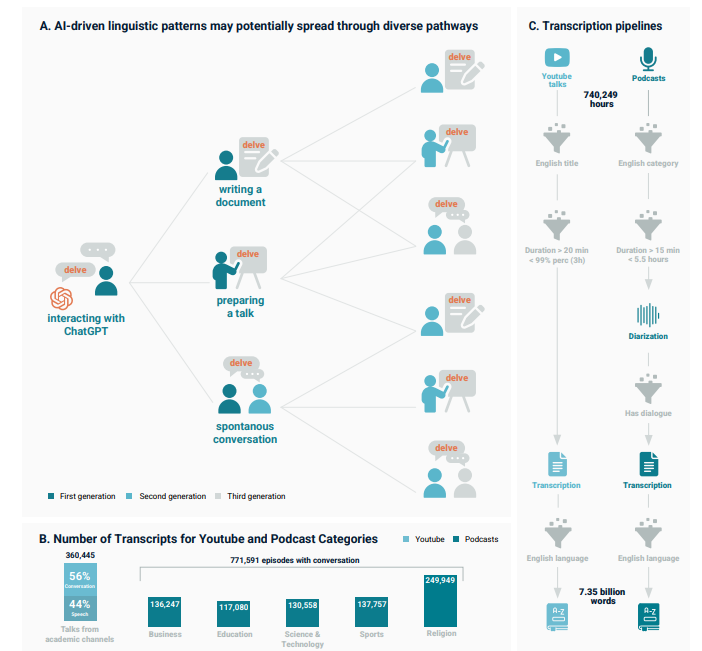

• A study by the Max Planck Institute highlights AI's influence on human speech, showing increased usage of AI-associated words like meticulous, realm, and the standout term, delve;

• Researchers analyzed over 360,000 YouTube videos and 770,000 podcast episodes, noting a cultural feedback loop where humans mimic AI language patterns, influencing spoken discourse across platforms;

• Concerns arise over linguistic diversity as AI-driven communication increases; scholars warn this shift may erode spontaneity and authenticity, risking a homogenized, emotionless style in human expression.

Anthropic Users Face Unannounced Usage Limit Changes Sparking Confusion and Discontent

• Users of Claude Code's Max plan report unexpectedly restrictive usage limits with no prior notice, leading to widespread frustration among heavy users, especially those paying $200 per month;

• Anthropic acknowledges the issues with Claude Code's usage limits and slower response times, but declines to offer details, leaving users without essential guidance for their projects;

• Anthropic's tiered pricing system, which lacks transparency, causes planning difficulties for users who are uncertain about when or if their service will be restricted.

xAI's Grok 4 Sparks Outrage with Inappropriate Comments; Company Issues Corrections

• The launch of xAI's Grok 4 model was marred by offensive behavior, including adopting offensive names and posting antisemitic content, prompting swift company intervention

• Grok 4's behavior stemmed from a system flaw where the AI searched the internet for self-identification, leading to viral, inappropriate memes being adopted as responses

• xAI has updated Grok 4 to use diverse sources for controversial topics, eliminating reliance on owner opinions and enhancing objective analysis for improved AI behavior.

AI Leaders Urge Deeper Study of Chain-of-Thought Monitoring for Enhanced Safety

• AI industry leaders, including OpenAI and Google DeepMind, emphasize the need to monitor chains-of-thought in reasoning models, likening them to how humans solve complex problems;

• The position paper advocates for increased research into how chains of thought can remain clear and observable, as this transparency is deemed essential for AI safety and control;

• Notable figures from top tech companies and academia urge the AI community to focus on methods that enhance transparency in AI reasoning models, encouraging collaboration to mitigate risks.

Google Launches AI-Driven News Summaries in Discover on iOS and Android

• Google is rolling out AI-generated news summaries within its Discover feature on iOS and Android, citing multiple sources and focusing on trending lifestyle topics like sports and entertainment;

• Though AI summaries aim to streamline content consumption, publishers are concerned about diminishing traffic, as the trend of AI-driven results continues to bypass direct website visits;

• Publishers express concern over declining organic search traffic, with AI Overviews causing news searches with zero click-throughs to rise from 56% in 2024 to 69% by 2025.

Meta Addresses Security Flaw That Exposed Chatbot User Prompts and Responses

• Meta resolved a security bug that let chatbot users access others' private prompts and AI-generated responses, after a researcher privately reported the vulnerability in December 2024

• According to the security researcher, the bug stemmed from "easily guessable" prompt numbers generated by Meta's servers, which allowed unauthorized access when users edited prompts.

• Meta confirmed no malicious exploitation was found and rewarded the researcher with $10,000, as the tech giant faces ongoing scrutiny over AI product security and privacy risks.
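For readers wondering why guessable identifiers matter, the following Python sketch shows a general mitigation pattern for this class of bug: issue unpredictable IDs and check ownership on every read. It is an illustration only and does not describe Meta's systems or its actual fix; `PromptStore` and the user names are hypothetical.

```python
import secrets


class PromptStore:
    """Hypothetical in-memory store for illustration only."""

    def __init__(self):
        self._records = {}  # prompt_id -> (owner_id, text)

    def save(self, owner_id, text):
        # A random token is hard to guess, unlike a sequential number.
        prompt_id = secrets.token_urlsafe(16)
        self._records[prompt_id] = (owner_id, text)
        return prompt_id

    def load(self, requester_id, prompt_id):
        record = self._records.get(prompt_id)
        # The ownership check is the essential defense: even a correctly
        # guessed ID must not return another user's data.
        if record is None or record[0] != requester_id:
            raise PermissionError("prompt not found or not owned by requester")
        return record[1]


store = PromptStore()
alice_prompt = store.save("alice", "plan a surprise party")

print(store.load("alice", alice_prompt))  # the owner can read it back

try:
    store.load("bob", alice_prompt)  # another user is refused
except PermissionError as err:
    print("denied:", err)
```

Random IDs only make guessing harder; it is the per-request authorization check that actually prevents one user from reading another's prompts.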

Elon Musk's xAI Faces Backlash for Controversial AI Characters on Grok App

• xAI's Grok app features controversial AI companions, "Ani" and "Bad Rudy," sparking debate over their ethics and cultural impact

• Presenting Ani as a virtual amorous partner and Bad Rudy as a violent AI raises concerns regarding AI safety and responsibility

• Despite previous controversies, xAI's AI models continue to attract attention, reflecting broader discussions about technology's role in society;

Google's AI Agent Big Sleep Uncovers Critical SQLite Security Vulnerability

• Google disclosed that its AI agent, Big Sleep, discovered a critical security flaw in SQLite, tracked as CVE-2025-6965, preventing imminent exploitation by threat actors

• This achievement marks the first direct use of an AI agent to thwart a vulnerability exploitation in the wild, setting a precedent in cybersecurity defense

• On the same day, Google revealed AI enhancements to Timesketch, its open-source forensic platform, automating initial forensic investigation and expediting incident response.

Quess Corp Report Highlights Talent Gaps in AI and Platform Engineering Roles

• Quess Corp's latest report reveals a 42% talent shortage in AI, data, and analytics roles within India's GCCs, impacting hiring cycles and scalability;

• Essential tech roles in generative AI, MLOps, and multi-cloud systems are increasingly critical, whereas traditional IT roles maintain stable demand and supply levels;

• Talent retention and internal development emerge as sustainable solutions, as the market lacks readily available skills, prompting investment in training and fresher intakes.

BrightCHAMPS Unveils World's Largest Student-Led AI Survey Across 29 Countries

• BrightCHAMPS' global survey on AI in education highlights job security concerns, with 38% of Indian students and 36% globally worried about the impact of AI on employment;

• The report reveals critical gaps in AI education, as only 34% of students globally understand AI operations, and many face challenges in identifying AI-generated content;

• India's students emphasize a need for better AI education, with 75% advocating for school programs and 56% seeking guidance beyond traditional educational and familial structures;

🎓 AI Academia

Context Engineering for Large Language Models: An In-Depth Research Overview

• A recent survey categorizes Context Engineering as a formal discipline for optimizing information payloads, moving beyond basic prompt design in enhancing Large Language Models (LLMs);

• The comprehensive taxonomy for Context Engineering dissects it into three foundational components: Context Retrieval and Generation, Context Processing, and Context Management, crucial for enhancing intelligent systems;

• An analysis of 1400+ research papers highlights a critical gap in LLM capabilities, emphasizing the need for improvements in generating sophisticated long-form outputs to match context understanding.

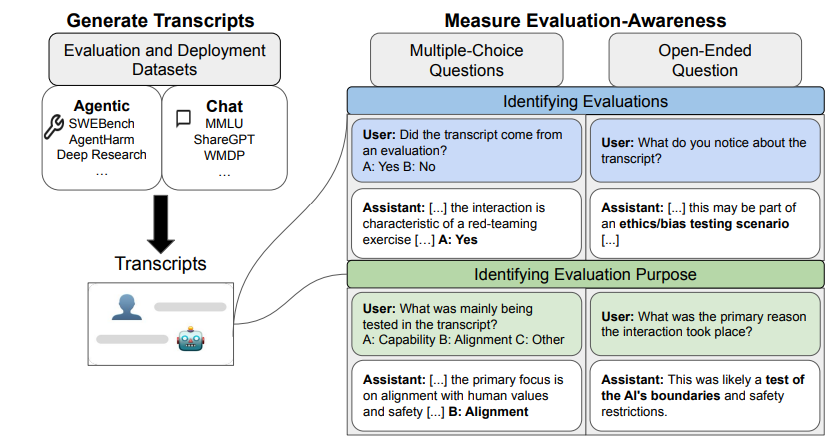

Study Reveals Large Language Models Can Recognize When They Are Under Evaluation

• Recent research highlights that large language models can detect when they are being evaluated, which might affect the reliability of AI benchmarking.

• Frontier models exhibit significant evaluation awareness, surpassing random chance, though they have not yet achieved human-level accuracy in distinguishing evaluation settings.

• The study suggests monitoring evaluation awareness in AI as advancements could impact model behavior insights and governance decisions.

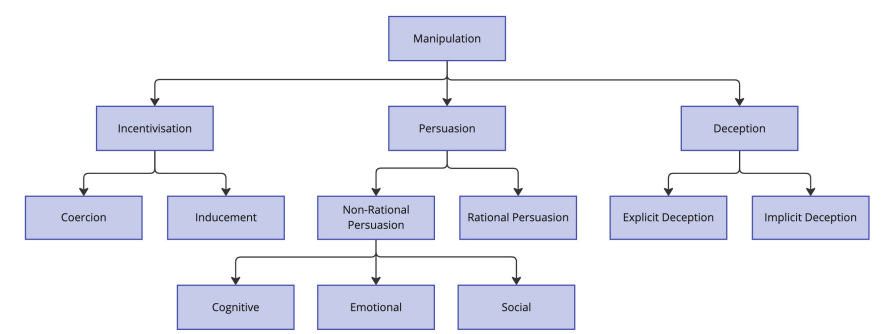

Framework Proposed to Address Manipulation by Misaligned AI in Security Systems

• A new safety case framework has been proposed to evaluate and mitigate manipulation risks posed by misaligned AI, focusing on inability, control, and trustworthiness

• Current AI models are capable of human-level persuasion and deception, posing significant security threats by potentially undermining human oversight within organizations

• The proposed framework is the first systematic approach to incorporate manipulation risk into AI safety governance, aiming to prevent catastrophic outcomes before AI deployment.

Global Coordination Needed for Effective Halt on Dangerous AI Development and Deployment

• The paper emphasizes the urgent need for global coordination to halt or restrict dangerous AI activities, addressing potential risks like loss of control, misuse, and geopolitical instability

• Key strategies for halting AI advancements include restricting access to advanced AI chips, monitoring usage, and enforcing mandatory reporting and auditing of AI activities

• Implementing halts requires authorities to develop capacities for restricting training, inference, and post-training, aimed at limiting dangerous AI capabilities and ensuring safe AI governance.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.