OpenAI takes on Google, Amazon by turning ChatGPT into a shopping tool!

OpenAI introduced “Instant Checkout” in ChatGPT, letting U.S. users buy products from Etsy (and soon from Shopify merchants) directly in chat.

Today’s highlights:

OpenAI has introduced an “Instant Checkout” feature in ChatGPT that lets U.S. users buy items from Etsy sellers, and soon from over a million Shopify merchants, without leaving the conversation. By enabling purchases inside the chat, the feature positions conversational AI as a potential disruptor to the product discovery and payment flows that Google and Amazon dominate today. OpenAI is also open-sourcing the underlying Agentic Commerce Protocol to encourage broader integration, a move that signals its ambition to become a key player in AI commerce and puts it in competition with efforts such as Google’s newly announced Agent Payments Protocol.

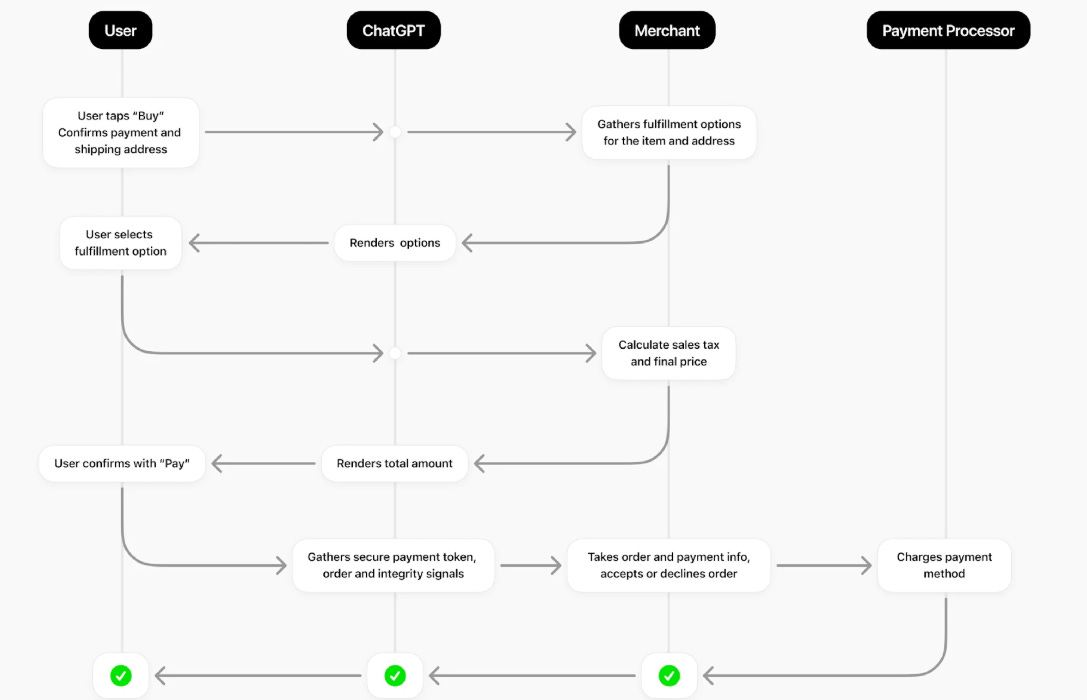

Technically, the system is built on the Agentic Commerce Protocol (ACP), developed jointly with Stripe. When a user decides to buy, ChatGPT collects the item, shipping address, and payment preferences, then uses Stripe to generate a Shared Payment Token (SPT): an encrypted token scoped to a specific merchant and order amount. This protects the user’s card details while still allowing the merchant, via Stripe or another payment processor, to charge the customer and handle fulfillment. OpenAI emphasizes that users confirm every step and that only minimal data is shared. In effect, ChatGPT acts as a secure AI personal shopper that completes the entire transaction within the chat.
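How Stripe actually issues and validates Shared Payment Tokens is not described here, and the following is not Stripe’s API. It is a hypothetical, standard-library-only Python sketch of the scoping idea: a token that names one merchant, a maximum amount, and an expiry, so any charge outside that scope is refused. It signs the claims with HMAC-SHA256 rather than encrypting them (a swapped-in technique chosen for brevity), and the names `issue_token`, `authorize_charge`, `ISSUER_KEY`, and the merchant IDs are illustrative.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical issuer secret; a real payment provider keeps its keys server-side.
ISSUER_KEY = b"demo-issuer-key"


def _sign(body: bytes) -> str:
    """HMAC-SHA256 signature over the encoded claims."""
    return hmac.new(ISSUER_KEY, body, hashlib.sha256).hexdigest()


def issue_token(merchant_id: str, max_amount_cents: int,
                ttl_seconds: int = 900, now: Optional[float] = None) -> str:
    """Create a token usable by one merchant, up to one amount, until it expires.

    The card number never appears in the token; only the scope does.
    """
    now = time.time() if now is None else now
    claims = {"merchant": merchant_id, "max_cents": max_amount_cents,
              "exp": now + ttl_seconds}
    body = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    return body.decode() + "." + _sign(body)


def authorize_charge(token: str, merchant_id: str, amount_cents: int,
                     now: Optional[float] = None) -> bool:
    """Accept a charge only if the token is authentic and the request fits its scope."""
    now = time.time() if now is None else now
    body, _, signature = token.rpartition(".")
    if not hmac.compare_digest(signature, _sign(body.encode())):
        return False  # tampered or forged token
    claims = json.loads(base64.urlsafe_b64decode(body))
    return (claims["merchant"] == merchant_id
            and amount_cents <= claims["max_cents"]
            and now < claims["exp"])


# Usage: a token for a $45.00 order at one (hypothetical) shop, issued at t=0.
token = issue_token("etsy-shop-123", max_amount_cents=4_500, now=0)
print(authorize_charge(token, "etsy-shop-123", 4_500, now=60))   # True
print(authorize_charge(token, "other-shop", 4_500, now=60))      # False: wrong merchant
print(authorize_charge(token, "etsy-shop-123", 9_999, now=60))   # False: over the scoped amount
print(authorize_charge(token, "etsy-shop-123", 4_500, now=901))  # False: expired
```

A production system would need more safeguards, such as single-use enforcement and proper key management; the sketch only shows how a merchant can be paid within a token’s scope without ever seeing card details.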

Despite the innovation, trust and privacy concerns remain. OpenAI insists the system is “built for trust,” citing scoped tokens, user confirmations, and minimal data sharing. However, experts warn of a tension inherent in agentic commerce: preserving user privacy while meeting sellers’ demands for monetization. If AI agents begin favoring higher-paying merchants or introducing sponsored placements, user trust could erode. Moreover, since the FTC already opened an investigation into ChatGPT in 2023 over potential consumer harms, combining payments with personal data could invite further regulatory scrutiny. Ultimately, while ChatGPT’s Instant Checkout showcases technical progress, its long-term success depends on maintaining transparency, security, and neutrality.

You are reading the 132nd edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework structured around progressive cognitive levels: starting with knowing and understanding, then using and applying, followed by analyzing and evaluating, and finally creating through a capstone project, with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our AIGP 8-week personalized training program. For customized enterprise training, write to us at [Link].

🚀 AI Breakthroughs

Anthropic Launches Claude Sonnet 4.5, Claims State-of-the-Art Coding Performance

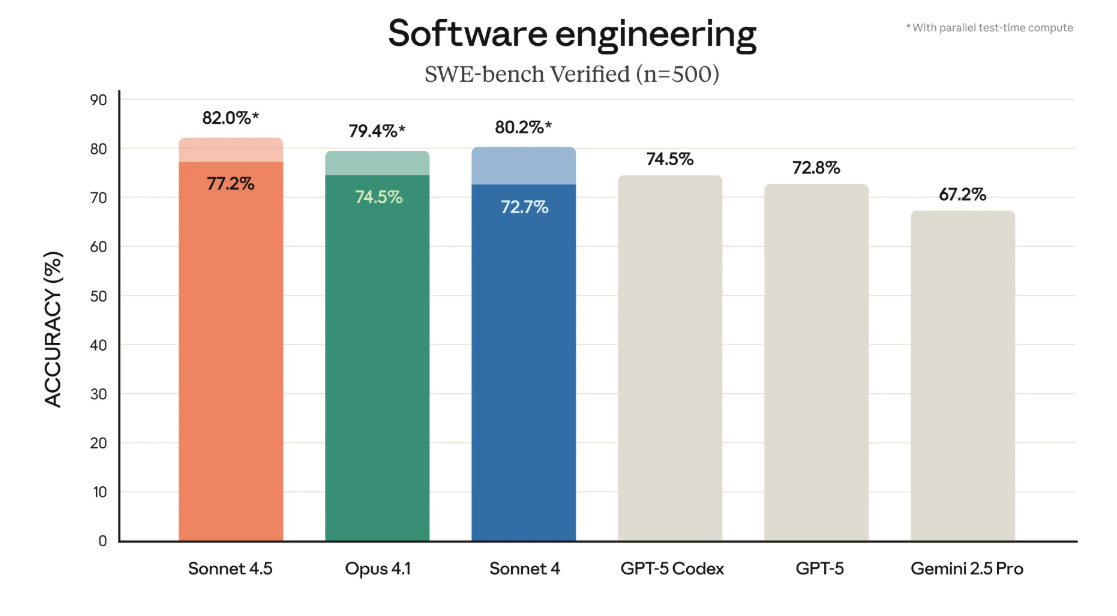

Anthropic has launched Claude Sonnet 4.5, which it claims delivers state-of-the-art coding performance, can build production-ready applications, and is more reliable than previous models. Priced the same as its predecessor at $3 per million input tokens and $15 per million output tokens, it is available through the Claude API and the Claude chatbot. Competition is intense: OpenAI’s GPT-5 outperforms Anthropic’s models on various benchmarks, but Claude Sonnet 4.5 stands out for its promising autonomous coding capabilities and enhanced security features. Anthropic also unveiled the Claude Agent SDK, along with a research preview for Max subscribers that shows Claude generating software in real time.
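To make the per-million-token pricing concrete, here is a small Python cost estimator using the list prices quoted above. It is a sketch that assumes base pricing only and ignores any discounts or other pricing tiers Anthropic may apply (for example, for caching, batching, or very long prompts); the function name `request_cost` is illustrative.

```python
from decimal import Decimal

# Base list prices quoted above for Claude Sonnet 4.5, in USD per million tokens.
INPUT_PRICE_PER_MTOK = Decimal("3")
OUTPUT_PRICE_PER_MTOK = Decimal("15")
TOKENS_PER_MTOK = Decimal(1_000_000)


def request_cost(input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the USD cost of one request at base list prices.

    Decimal avoids the rounding noise that binary floats add to currency amounts.
    """
    input_cost = Decimal(input_tokens) * INPUT_PRICE_PER_MTOK / TOKENS_PER_MTOK
    output_cost = Decimal(output_tokens) * OUTPUT_PRICE_PER_MTOK / TOKENS_PER_MTOK
    return input_cost + output_cost


# A request that reads 100,000 tokens and writes 20,000 tokens:
# 0.1 M x $3 + 0.02 M x $15 = $0.30 + $0.30
print(request_cost(100_000, 20_000))  # 0.6
```

Output tokens cost five times as much as input tokens, so for generation-heavy workloads such as code writing, output length dominates the bill.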

Anthropic Expands Global Workforce to Compete with OpenAI, Microsoft, and Google

Anthropic is rapidly expanding its global reach. Over the past two years, its business customer base has grown from under 1,000 to more than 300,000, driven by rising demand for its Claude models across diverse industries and regions. Nearly 80% of its current activity comes from international markets, and adoption in countries such as South Korea, Australia, and Singapore exceeds U.S. levels. As part of this expansion, Anthropic plans to triple its international workforce in 2025 and open new offices in Tokyo and across Europe. The push comes amid intensifying competition with major tech firms like OpenAI, Microsoft, and Google. Anthropic aims to distinguish itself with a standalone AI offering built for deep, domain-specific applications in sectors such as life sciences and financial services, rather than integrating its models into existing productivity suites.

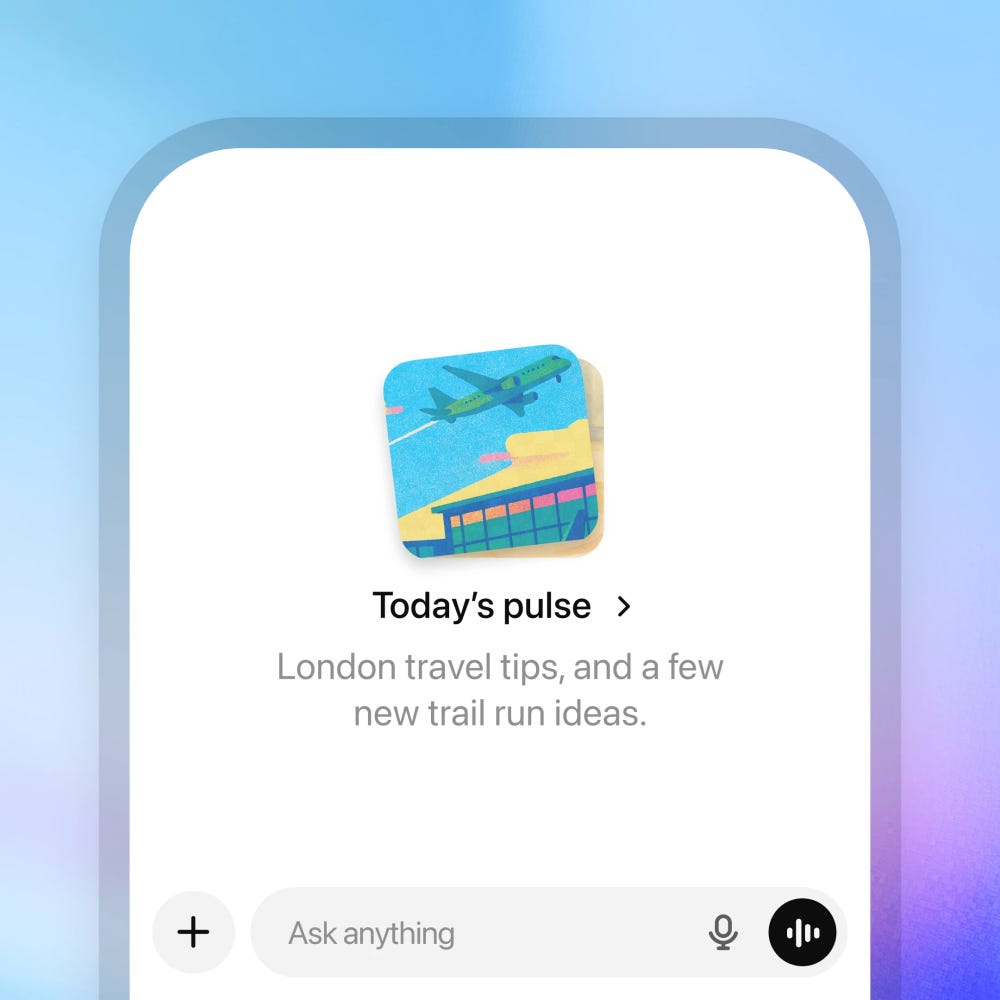

OpenAI Expands ChatGPT Pro with ‘Pulse’ Feature for Daily Personalized Updates

OpenAI has introduced ‘ChatGPT Pulse,’ a feature for its $200-per-month ChatGPT Pro plan that provides a personalized, feed-like experience based on users’ chat history and connected apps. Launched on September 25, Pulse delivers daily updates as visual cards that can be expanded for more detail, aiming to assist users proactively with content tailored to their interests. The feature, currently in preview on mobile, has drawn positive feedback, and OpenAI plans to extend it to the $20 ChatGPT Plus plan and eventually make it available to everyone. Some users, however, worry that it resembles a social media feed instead of building on the conversational strengths of large language models.

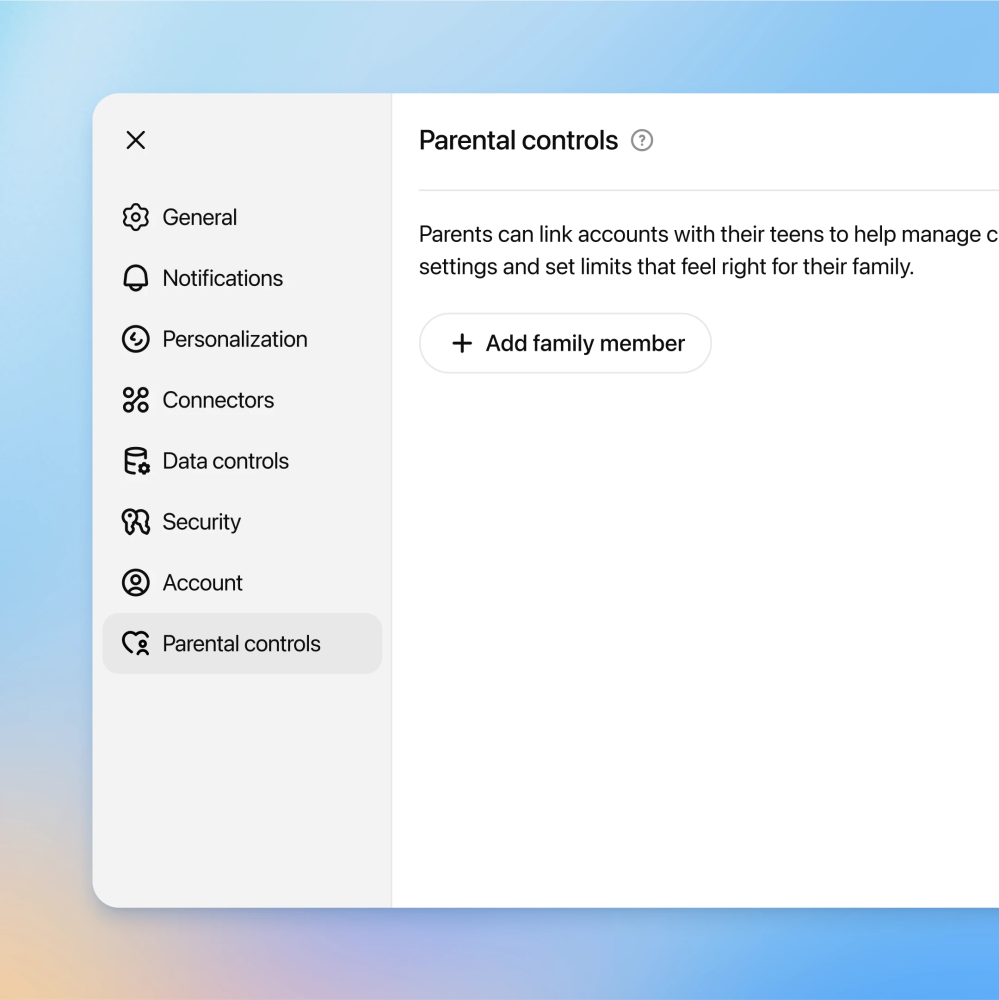

OpenAI Introduces Safety System and Parental Controls to Enhance ChatGPT Security

OpenAI has introduced new safety features in ChatGPT, including a safety routing system and parental controls, following a wrongful-death lawsuit alleging that the chatbot failed to redirect harmful conversations. The routing system switches to the GPT-5 model during emotionally sensitive chats and aims to deliver “safe completions” in high-stakes interactions. The updates have drawn mixed reactions: some applaud them as better protection for users, while others criticize them as overly cautious measures that could degrade the service. Parental controls let parents customize their teens’ interactions with ChatGPT, although some fear such measures could lead to adult users being treated like children. OpenAI acknowledges the system is imperfect but says it prefers to err on the side of caution.

Microsoft Debuts Agent Mode and Office Agent to Enhance AI Collaboration Tools

Microsoft has introduced new AI-driven features to its Microsoft 365 suite, specifically Agent Mode in Excel and Word, and Office Agent in Copilot chat, under the concept of “vibe working.” Currently available to Microsoft 365 Copilot-licensed customers and U.S.-based Microsoft 365 Personal or Family subscribers, these tools aid users in creating spreadsheets, documents, and presentations through iterative AI collaboration. Agent Mode offers functionalities like financial modeling in Excel and refined document drafting in Word, while Office Agent in Copilot chat supports presentation and document creation via a chat-first interface, all powered by advanced AI models from OpenAI and Anthropic.

Maximor Seeks to Replace Excel in Finance Teams with AI-Powered Automation

Despite significant investment in financial software, many finance teams still rely primarily on Excel for financial reconciliation and audits. To address this dependence, two former Microsoft executives launched Maximor, a startup that aims to replace spreadsheets with AI agents that automate manual finance tasks. Maximor recently raised a $9 million seed round led by Foundation Capital. Its AI system integrates with ERP, CRM, and billing software to provide real-time financial visibility and streamline month-end closes. Early customers report significant time savings and reduced workloads. Maximor, headquartered in New York and Bengaluru, targets companies with at least $50 million in revenue, already serves clients in the U.S., China, and India, and supports both GAAP and IFRS standards.

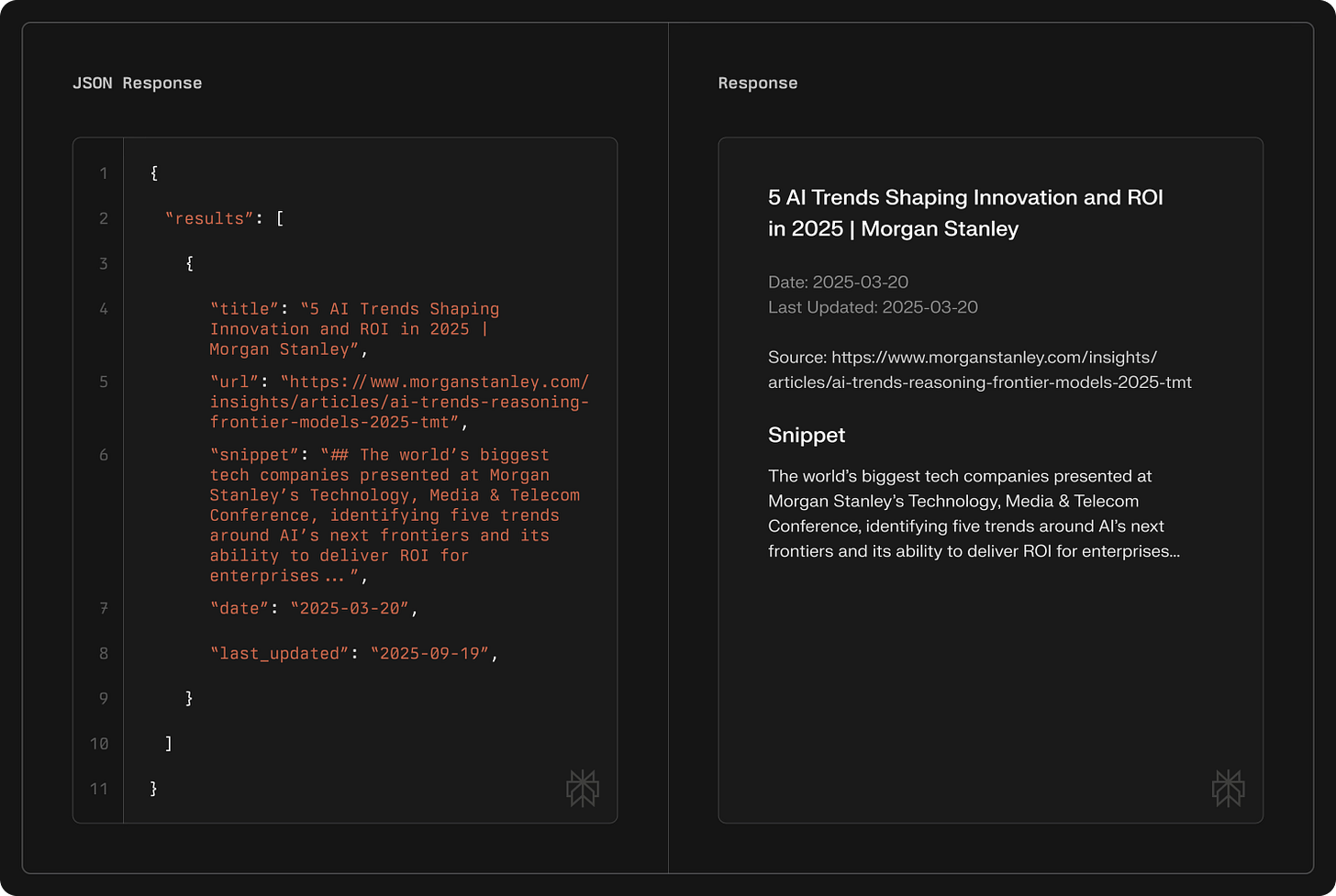

Perplexity Launches AI Search API With Advanced Indexing for Developers Worldwide

AI startup Perplexity has introduced its Perplexity Search API, enabling developers to access infrastructure that catalogs “hundreds of billions” of webpages. This new API enhances search efficiency by dividing documents into fine-grained units for precise results, with pricing options ranging from $1 to $15 per million input and output tokens. Perplexity also launched a Search SDK, promoting rapid prototype development, and reportedly secured $200 million in new funding, increasing its valuation to $20 billion. The company claims its API outperforms competitors like Exa and Brave on benchmarks such as SimpleQA and BrowseComp.

Brave Enhances AI-Powered Search with New Detailed Answer Feature, Ask Brave

Brave, the browser maker and Google Search alternative, has unveiled Ask Brave, a new feature in its AI-powered search suite. It gives users detailed answers on a topic, complementing the existing AI Answers feature, which provides quick summaries and already serves over 15 million answers daily. Users can trigger an Ask Brave search by clicking the Ask button or adding “??” to their query, and the system automatically determines the query type. Responses are enriched with videos, news articles, and other relevant media, in formats similar to those in ChatGPT or Perplexity, and users can also convert answers or ask follow-up questions. Brave says its API ensures search accuracy and that it protects user privacy by encrypting chats and deleting them after 24 hours of inactivity.

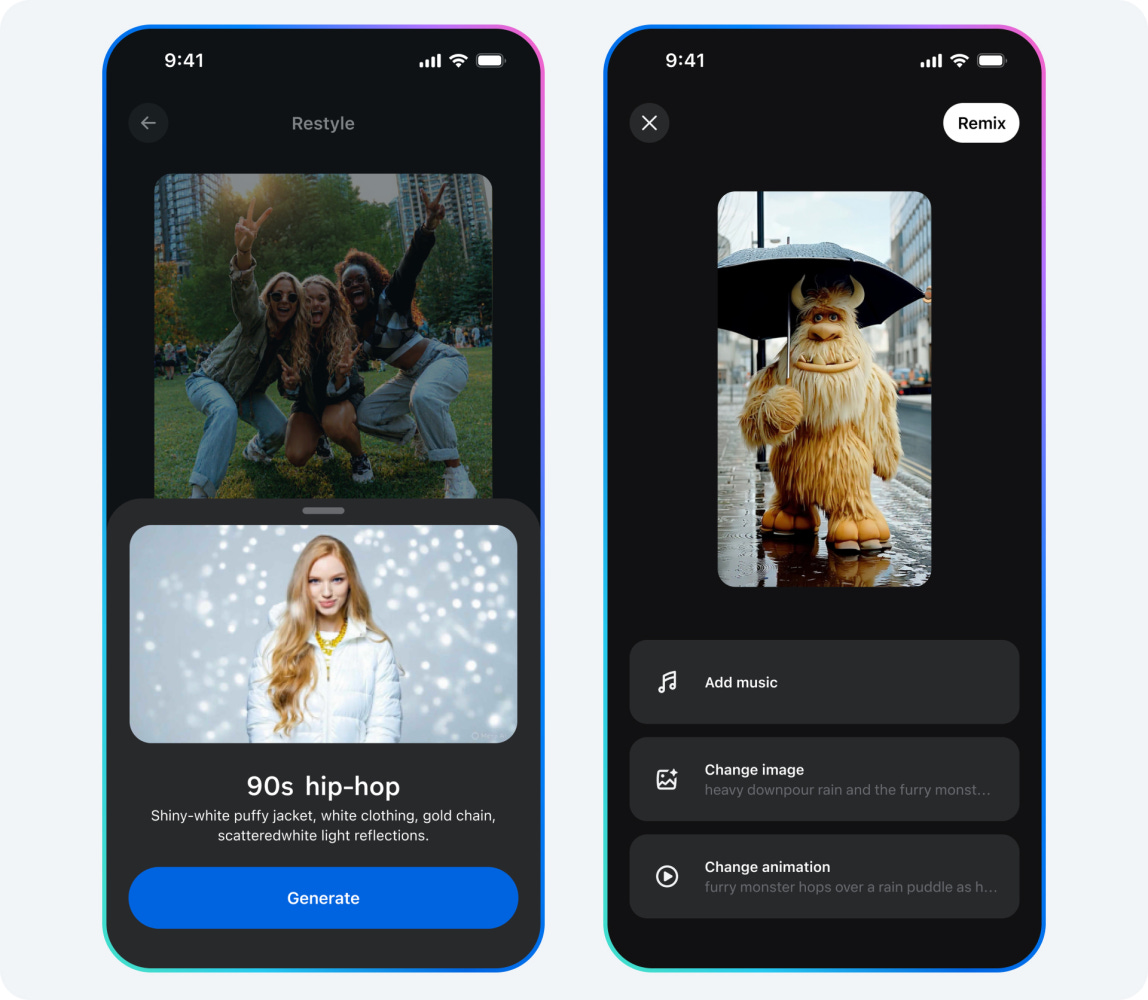

Meta AI Launches Vibes for Creating and Sharing AI-Generated Short Videos

Meta is releasing the latest version of the Meta AI app, featuring Vibes, a new feed for creating and sharing AI-generated short-form videos. Designed to inspire creativity and experimentation with Meta AI’s media tools, Vibes lets users browse, personalize, and remix videos from creators and communities, and the app integrates with Instagram and Facebook for sharing. Users can also manage their AI glasses and get help from the Meta AI assistant in the app. With these updates, Meta aims to boost engagement and gather feedback to improve future AI video tools.

Gemini Robotics Models Enhance Robots’ Ability to Perform Complex Multi-Step Tasks

Google DeepMind has introduced two new robotics models, Gemini Robotics 1.5 and Gemini Robotics-ER 1.5. Together they turn visual and language inputs into motor commands, enabling robots to carry out complex, multi-step tasks with improved spatial understanding and planning: Gemini Robotics-ER 1.5 reasons about the physical environment and plans the steps, while Gemini Robotics 1.5, a vision-language-action model, translates instructions into actions. With these models, developers can build more versatile robots that understand and interact with their physical surroundings. Gemini Robotics-ER 1.5 is available now via the Gemini API, while Gemini Robotics 1.5 is available to select partners.

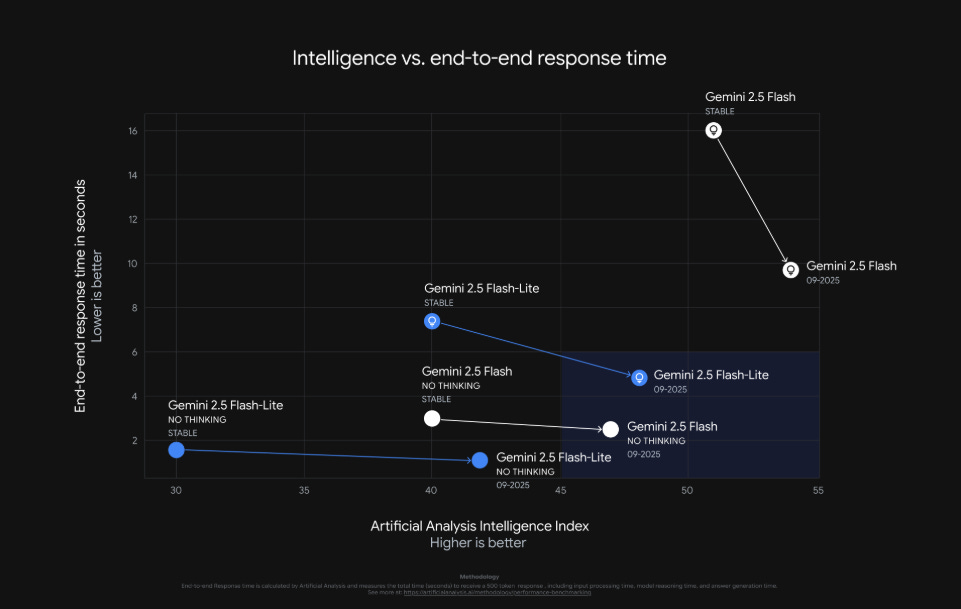

Google Releases Updated Gemini Models Focusing on Quality and Efficiency Improvements

Google has released updated preview versions of Gemini 2.5 Flash and 2.5 Flash-Lite on Google AI Studio and Vertex AI, focused on quality and efficiency. The updates bring better instruction following, reduced verbosity (which lowers costs), and stronger multimodal and translation capabilities. Gemini 2.5 Flash also gains improved agentic tool use and cost efficiency, with a 5% gain on agentic benchmarks. The previews are meant to let users test new improvements and provide feedback, and are not yet intended to replace the stable versions. Users can try the newest versions through “-latest” aliases (gemini-flash-latest and gemini-flash-lite-latest), which point to the most recent release of each model.

YouTube Music Trials AI Hosts to Enhance Listening with Stories and Trivia

YouTube Music is testing AI music hosts that share relevant stories, fan trivia, and commentary about the music, echoing the AI DJ feature Spotify launched two years ago. The initiative is part of YouTube’s broader experiments with conversational AI, such as a custom radio station feature launched in July. The AI hosts are being trialed through YouTube Labs, a new hub for AI experiments that is currently open only to a limited number of U.S.-based users. YouTube has also recently introduced AI tools for creators and AI-powered search features, alongside stricter content policies that prevent monetization of inauthentic material.

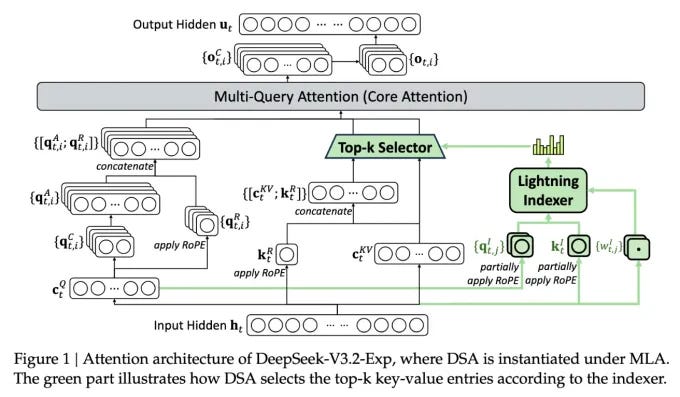

DeepSeek Unveils V3.2-exp Model Focused on Reducing AI Inference Costs

DeepSeek has unveiled an experimental model, V3.2-exp, designed to reduce inference costs for long-context operations through a method it calls DeepSeek Sparse Attention. The approach uses a “lightning indexer” to prioritize relevant excerpts of the context window and a “fine-grained token selection system” to choose specific tokens from those excerpts for attention. DeepSeek’s initial tests suggest this could cut the cost of API calls by up to half in long-context situations. Because the model is open-weight and available on Hugging Face, independent evaluations should follow. The release continues DeepSeek’s efforts to make transformers more efficient, even though its earlier models did not deliver the industry-wide disruption some had anticipated.
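DeepSeek’s actual lightning indexer and token-selection kernels are not detailed above, so the following NumPy sketch is a simplified illustration under stated assumptions: the indexer is a plain dot product of low-dimensional projections (`q_index` and `k_index` are hypothetical names), and selection is a per-query top-k over individual tokens rather than over excerpts. Causal masking and multiple attention heads are omitted.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def dense_attention(q: np.ndarray, k: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Standard scaled dot-product attention over every key."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores) @ v


def sparse_attention(q, k, v, q_index, k_index, top_k: int) -> np.ndarray:
    """Attend only to the top_k keys ranked by a cheap indexer.

    q: (n_q, d); k, v: (n_k, d). q_index (n_q, r) and k_index (n_k, r) are
    low-dimensional projections (r << d) used only to rank keys.
    """
    top_k = min(top_k, k.shape[0])
    index_scores = q_index @ k_index.T  # (n_q, n_k), computed in r dims, not d
    # Indices of the top_k highest indexer scores for each query (unordered).
    selected = np.argpartition(-index_scores, top_k - 1, axis=-1)[:, :top_k]
    k_sel, v_sel = k[selected], v[selected]  # (n_q, top_k, d)
    scores = np.einsum("qd,qkd->qk", q, k_sel) / np.sqrt(q.shape[-1])
    return np.einsum("qk,qkd->qd", softmax(scores), v_sel)


rng = np.random.default_rng(0)
n_q, n_k, d, r = 4, 256, 32, 8
q = rng.standard_normal((n_q, d))
k = rng.standard_normal((n_k, d))
v = rng.standard_normal((n_k, d))
proj = rng.standard_normal((d, r))  # hypothetical indexer projection
out = sparse_attention(q, k, v, q @ proj, k @ proj, top_k=32)
print(out.shape)  # (4, 32)
```

The indexer still scores every key, but in `r` dimensions instead of `d`, while the expensive softmax attention touches only `top_k` keys per query. Setting `top_k` to the full key count reproduces dense attention exactly, which makes a handy sanity check.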

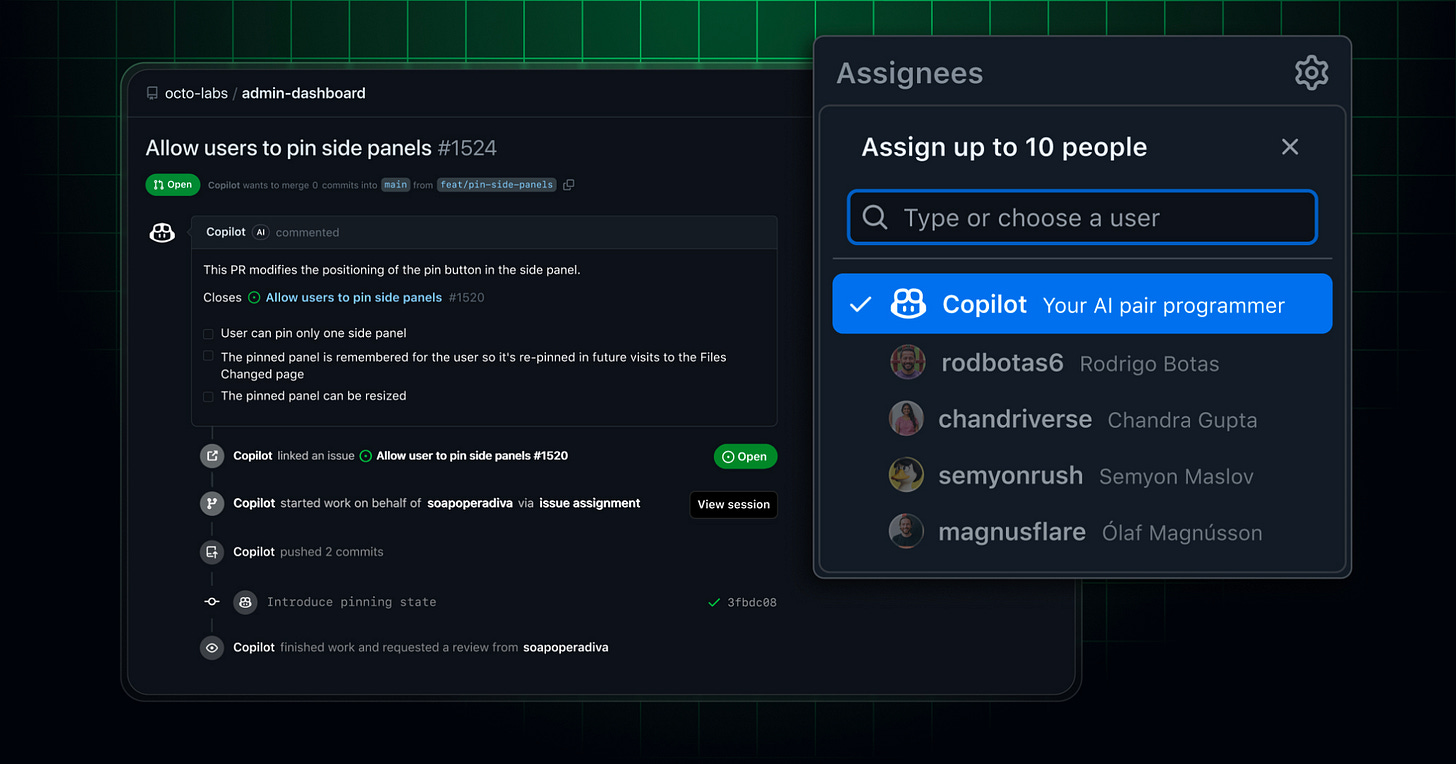

Copilot Coding Agent Now Generally Available for All Paid Subscribers

GitHub has made its Copilot coding agent available to all paid Copilot subscribers. The asynchronous, autonomous agent can handle software development tasks such as implementing new features, fixing bugs, and improving documentation. It runs on GitHub Actions, automatically opens draft pull requests, and requests a review when it finishes a task. Users can delegate tasks through issues, the agents panel on GitHub, or a button in Visual Studio Code. For Copilot Business and Enterprise users, an administrator must enable the agent first.

GitHub Launches Copilot CLI for AI-Powered Coding in Developer Terminals

GitHub has launched a public preview of GitHub Copilot CLI, a command-line interface that brings its AI-powered coding assistant directly into developers’ terminals. By integrating Copilot’s features into the command line, it aims to streamline coding workflows and reduce context switching. The CLI accepts natural-language commands for working with GitHub repositories, issues, and pull requests, and can plan, edit, debug, and refactor code, previewing changes so developers stay in control. It is installed via npm, requires authentication, and supports Copilot Pro and enterprise plans; users whose Copilot access is managed by an organization may need an administrator to enable it. The new interface positions Copilot as a versatile, terminal-native collaborator and extends GitHub’s AI strategy for developer tools.

Lovable Launches Cloud and AI Tools for Simplified Full-Stack App Development

Lovable has launched Lovable Cloud and Lovable AI, platforms designed to simplify full-stack app creation through AI-driven prompts. Powered by Google’s Gemini models, Lovable AI allows apps to gain AI features effortlessly, while Lovable Cloud offers integrated backend support including logins and databases. The services have already facilitated successful app launches, with users like Sabrine achieving significant annual recurring revenue. Following a $200 million Series A funding in July, Lovable’s valuation has reached $1.8 billion.

Tencent Releases HunyuanImage-3.0: Largest Open-Source Multimodal Image Generation Model

Tencent’s HunyuanImage-3.0, released on September 28, 2025, is an open-source multimodal image generation model that Tencent describes as state of the art. It uses a unified autoregressive architecture that handles text and images within a single framework, and with 80 billion total parameters in a Mixture-of-Experts design (about 13 billion activated per token), it is the largest open-source image generation model of its kind. The model produces photorealistic images with precise prompt adherence, aided by advanced reinforcement learning. It runs on NVIDIA GPUs and offers capabilities such as world-knowledge reasoning and multiple image resolution options, and Tencent encourages community engagement and contributions.

Cloudflare Launches Email Sending Feature in Workers for Seamless Transactional Emails

Cloudflare has announced Email Sending, a new capability that lets developers send transactional emails directly from Cloudflare Workers. Together with the existing Email Routing product, it forms the Cloudflare Email Service. The service aims to simplify email management by fitting directly into developer workflows and improving deliverability through built-in DNS configuration for SPF, DKIM, and DMARC, which helps messages earn trust and avoid spam filters. Email Sending will enter private beta in November and is meant to support a range of application workflows; it will require a paid Workers subscription, with pricing still to be announced, while Email Routing remains free.

⚖️ AI Ethics

California Judge Preliminarily Approves $1.5 Billion AI-Related Copyright Settlement

A federal judge in California has given preliminary approval to a $1.5 billion settlement in a copyright class action lawsuit against AI company Anthropic. The lawsuit was filed by a group of authors who accused Anthropic of using millions of pirated books to train its AI systems without permission. This settlement, which could set a precedent for similar cases against companies like OpenAI and Meta, marks a significant moment in the ongoing legal battles concerning the use of copyrighted material in AI training. The court’s decision signals a push for accountability in the tech industry, highlighting the importance of upholding creators’ rights in the development of generative AI systems.

New Privacy Attack CAMIA Detects with High Accuracy Whether Data Was Used to Train AI Models

Researchers from Brave and the National University of Singapore have developed CAMIA (Context-Aware Membership Inference Attack), a method that exposes privacy vulnerabilities in AI models by determining whether specific data was used in training. CAMIA outperforms earlier attacks because it accounts for the context-dependent nature of AI memorization at the token level, nearly doubling detection accuracy while keeping false positives low. By highlighting these privacy risks, the work urges the industry to prioritize privacy-preserving techniques as training on large, unfiltered datasets becomes more common.
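The summary above does not spell out CAMIA’s scoring rule, so the following Python sketch only illustrates why token-level context matters; it is not the researchers’ method. It assumes you already have per-token losses from the target model for a candidate text, and it contrasts the classic LOSS attack (average loss) with a hypothetical trajectory score measuring how quickly loss falls along the sequence. The function names and the slope-based signal are illustrative assumptions.

```python
from statistics import fmean
from typing import Sequence


def loss_attack_score(token_losses: Sequence[float]) -> float:
    """Baseline LOSS attack: lower average loss suggests a training member."""
    return -fmean(token_losses)


def trajectory_score(token_losses: Sequence[float]) -> float:
    """Hypothetical context-aware signal (not CAMIA's exact statistic).

    Returns the negated least-squares slope of per-token loss versus position:
    a steep decline means the text became 'easy' once the model had seen a
    prefix, a pattern associated with memorization.
    """
    n = len(token_losses)
    if n < 2:
        return 0.0
    mean_x = (n - 1) / 2
    mean_y = fmean(token_losses)
    # Covariance with reversed position, so a falling loss gives a positive score.
    cov = sum((mean_x - x) * (y - mean_y) for x, y in enumerate(token_losses))
    var = sum((x - mean_x) ** 2 for x in range(n))
    return cov / var


# Two sequences with the same average loss (1.4 per token):
memorized_like = [3.0, 2.0, 1.0, 0.5, 0.5]  # loss collapses after a short prefix
flat = [1.4, 1.4, 1.4, 1.4, 1.4]            # uniformly moderate loss
print(loss_attack_score(memorized_like), loss_attack_score(flat))  # both -1.4
print(trajectory_score(memorized_like) > trajectory_score(flat))   # True
```

Both sequences look identical to the averaged LOSS attack, but the trajectory score separates them. A real attack would calibrate a decision threshold on known members and non-members to hold the false-positive rate at a target level.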

California Governor Signs Groundbreaking AI Transparency Bill Amid Mixed Industry Reactions

California Governor Gavin Newsom has signed SB 53, a groundbreaking bill requiring large AI companies such as OpenAI, Anthropic, Meta, and Google DeepMind to meet new transparency and safety standards. The law requires these companies to disclose their safety protocols, protects whistleblowers, and sets up a process for reporting AI-related safety incidents to California’s Office of Emergency Services. While some companies, including Anthropic, supported the bill, others such as Meta and OpenAI opposed it, warning that it could lead to a fragmented patchwork of state-level AI regulations. The move reflects California’s leadership in AI oversight and could set a precedent for other states considering similar measures.

Microsoft Ends Israeli Defense Ministry’s Access to Azure Over Surveillance Concerns

Microsoft recently cut off the Israel Ministry of Defense’s access to certain tech and services, including Azure cloud storage and specific AI tools, following concerns about their use for storing surveillance data on Palestinian phone calls. This decision comes after an investigation, prompted by a Guardian report, revealed the potential misuse of Microsoft’s technology by Israel’s Unit 8200. The tech giant reiterated its global stance against enabling mass civilian surveillance, aligning its actions with its long-standing principles. The investigation continues, and Microsoft has faced internal protests, resulting in employee dismissals, over its dealings with Israel.

LTIMindtree Launches AI Governance Framework to Enhance Autonomous Decision-Making Confidence

LTIMindtree has introduced BlueVerse RightAction, an AI governance framework designed for agentic AI that aims to improve intelligent decision-making within enterprises. Built on the BlueVerse AI ecosystem, the framework is meant to ensure that autonomous AI agents follow business rules and regulations, significantly reducing the risk of costly errors. It integrates AI governance with autonomous agents, supports compliance-driven actions, and enables low-code deployment, while providing transparency and fraud detection. LTIMindtree has also launched BlueVerse Academy to bridge the AI talent gap, training 60,000 employees in its first phase and partnering with technology firms and academic institutions to provide advanced AI training.

EU Approves Reliance-Meta AI Venture With $100 Million Investment for Indian Businesses

The European Union has approved a joint venture between Reliance Industries Limited and Meta Platforms to develop AI solutions focused on enterprises, as reported by ET Now. Announced at Reliance’s recent AGM, the venture will invest approximately ₹855 crore (US$100 million), with a 70% stake held by Reliance and 30% by Meta. The collaboration aims to leverage Meta’s Llama models and Reliance’s extensive enterprise network to provide AI tools across various sectors, including sales, marketing, and IT operations. The offerings will encompass an enterprise AI platform-as-a-service and pre-configured solutions designed for deployment in cloud, on-premises, and hybrid environments.

North Korean Cybercrime Network Exploits AI to Infiltrate US Tech Sector, Reports Show

A CNN investigation has uncovered a sophisticated scheme involving thousands of North Korean IT workers who use stolen and fabricated U.S. identities to deceive companies into hiring them, funneling millions of dollars annually to Pyongyang’s military programs. These operatives use AI tools to create fake resumes and personas, with some even participating in real-time video interviews using face-masking software to hide their identities. They are supported by American facilitators who help them gain access to corporate networks, often unwittingly making U.S. businesses complicit in violating sanctions. This operation, which relies heavily on online job platforms and advances in AI, poses a significant national security risk, as it allows North Korea to evade international sanctions and could potentially lead to malicious cyber activities, according to U.S. authorities.

FTC Secures Historic $2.5 Billion Settlement Against Amazon Over Deceptive Prime Practices

The Federal Trade Commission (FTC) has reached a landmark settlement with Amazon.com, Inc., requiring the company to pay a $1 billion civil penalty and $1.5 billion in consumer refunds over allegations of deceptive Prime subscription practices. According to the FTC, Amazon enrolled millions of consumers in Prime without consent and made it difficult to cancel. The settlement mandates Amazon to stop these unlawful practices, simplify subscription termination, and implement clear consumer disclosures. This resolution marks a significant enforcement action under the Restore Online Shoppers’ Confidence Act (ROSCA), with Amazon’s compliance to be monitored by a third party.

🎓 AI Academia

Socio-Economic Models Highlight AI Agents’ Impact on Global Productivity and Growth

A study from Beijing University of Posts and Telecommunications develops a socio-economic model that includes both human workers and AI agents to assess how AI affects social output under resource constraints. The research builds five models in sequence, progressively adding AI agents, network effects among agents, and agents acting as independent producers. The findings suggest that AI agents significantly boost social output and that network effects among agents drive nonlinear growth, resulting in increasing returns to scale. The study also highlights the growing role of AI agents across sectors and the challenges of integrating them into human work systems, particularly around interaction networks and behavior homogenization.
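The study’s five models are not reproduced here. As a hypothetical toy (an illustrative assumption, not the paper’s equations), suppose each agent on a fully connected network produces a base amount boosted by a factor `gamma` for every other agent it can interact with, and a budget caps how many agents can be deployed. Agent output then grows roughly quadratically in the number of agents, which is what increasing returns to scale means here: doubling the inputs more than doubles the output.

```python
def agents_affordable(budget: float, cost_per_agent: float) -> int:
    """Resource constraint: how many agents a fixed budget can deploy."""
    return int(budget // cost_per_agent)


def agent_output(n_agents: int, base: float, gamma: float) -> float:
    """Output of n agents on a fully connected interaction network.

    Each agent produces `base` alone, boosted by `gamma` for each other agent
    it can interact with (a hypothetical network effect).
    """
    if n_agents <= 0:
        return 0.0
    return n_agents * base * (1 + gamma * (n_agents - 1))


def social_output(n_humans: int, human_productivity: float,
                  n_agents: int, agent_base: float, gamma: float) -> float:
    """Total output = human production + agent production."""
    return n_humans * human_productivity + agent_output(n_agents, agent_base, gamma)


print(agents_affordable(budget=100.0, cost_per_agent=30.0))  # 3
# Without network effects, doubling agents exactly doubles their output...
print(agent_output(20, 1.0, 0.0) == 2 * agent_output(10, 1.0, 0.0))  # True
# ...with network effects, doubling agents more than doubles it.
print(agent_output(20, 1.0, 0.1) > 2 * agent_output(10, 1.0, 0.1))   # True
```

The quadratic term comes entirely from the assumed all-to-all interactions; a sparser interaction network would weaken the effect, which hints at why the study treats interaction networks as a key integration challenge.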

Study Highlights Green Prompt Engineering’s Role in Reducing Energy Use in Software

A recent study has introduced the concept of “Green Prompt Engineering,” emphasizing how the linguistic complexity of prompts in language models can impact energy consumption and overall sustainability in software engineering applications. The research highlights that simpler prompts can effectively reduce energy usage with minimal loss in performance, aligning with the broader Green AI agenda that seeks to balance performance with environmental sustainability. By analyzing requirement classification tasks using open-source language models, the study reveals significant environmental benefits and encourages the development of sustainable prompting practices to lower the carbon and energy footprint of AI technologies.

Study Reveals Educational Risks of Large Language Models in Learning Environments

A comprehensive review of 70 empirical studies by researchers from the Hong Kong University of Science and Technology examines the transformative yet potentially risky role of Large Language Models (LLMs) in education. These AI-driven models, while enhancing personalization and interactive learning, pose challenges such as superficial understanding and reduced student autonomy. The study identifies technological and educational risks, including bias, cognitive effects, and issues of privacy, and introduces an LLM-Risk Adapted Learning Model to map how these risks impact learning outcomes. This serves as a foundational analysis for integrating LLMs responsibly in educational settings.

A Critical Review Highlights Challenges and Advances in Large Language Models

A critical review of the current landscape of large language models (LLMs) traces their evolution from Recurrent Neural Networks to Transformer architectures, with an emphasis on improving parameter efficiency and aligning models with human preferences. The review examines advanced training methodologies, including reinforcement learning from human feedback (RLHF) and retrieval-augmented generation (RAG), which incorporates external knowledge. It also addresses ethical considerations in deploying LLMs and the importance of responsible use. The work serves as a comprehensive guide to the strengths, limitations, and future research directions of LLMs, offering insights into their applications and potential advances in artificial intelligence.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.