OpenAI’s New Coding Agent: Is This the End of Traditional Programming?

OpenAI is launching a research preview of Codex, the company’s most capable AI coding agent yet.

Today's highlights:

You are reading the 96th edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training like AI Governance certifications (AIGP, RAI) and an immersive AI Literacy specialization. This structured training spans five domains, from foundational understanding to ethical governance, and features interactive sessions, a capstone project, and real-world AI projects. For organizations, including educational institutions, workplaces, and government agencies, our tailored programs assess existing AI literacy levels, craft customized training aligned with organizational needs and compliance standards, and provide ongoing support to ensure responsible AI deployment. Want to join? Apply today as an individual: Link; Apply as an organization: Link

🔦 Today's Spotlight

Introduction

The software development landscape is evolving rapidly with agentic coding models: autonomous agents that generate, test, and debug code independently. This shift is transforming traditional coding, where developers manually handle each line of code, into a new paradigm driven by AI agents like OpenAI's Codex-1, GitHub Copilot, Amazon's CodeWhisperer, Anthropic's Claude Code, and Google's Gemini Code Assist. These agents are not just assistants; they are co-developers that execute complex tasks autonomously.

OpenAI Codex-1: The Autonomous Developer

OpenAI Codex-1 is setting the standard for autonomous software development. Unlike basic autocompletion tools, Codex-1 handles complex coding tasks independently within OpenAI's secure cloud environment. It edits multiple files, runs tests, and iterates until errors are resolved. Its 192k token context window allows it to understand large codebases and execute multi-file operations seamlessly.
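The "run tests, then iterate until errors are resolved" behavior described above can be pictured as a simple control loop. The sketch below is only an illustration of that idea, not OpenAI's implementation: `iterate_until_green`, `toy_run_tests`, and `toy_propose_fix` are hypothetical names, and the "fix" step is a hard-coded string replacement standing in for a model call.

```python
def iterate_until_green(files, run_tests, propose_fix, max_rounds=5):
    """Test, patch, and re-test until the suite passes or the budget runs out.

    Returns (files, rounds_used) on success, or (None, max_rounds) on failure.
    """
    for round_no in range(max_rounds + 1):
        failures = run_tests(files)
        if not failures:
            return files, round_no
        if round_no < max_rounds:
            files = propose_fix(files, failures)
    return None, max_rounds


def toy_run_tests(files):
    """Hypothetical test runner: load calc.py and check a single behavior."""
    namespace = {}
    exec(files["calc.py"], namespace)
    failures = []
    if namespace["add"](2, 3) != 5:
        failures.append("add(2, 3) should be 5")
    return failures


def toy_propose_fix(files, failures):
    """Hypothetical stand-in for the model: apply one canned patch."""
    patched = dict(files)
    patched["calc.py"] = files["calc.py"].replace("a - b", "a + b")
    return patched


buggy = {"calc.py": "def add(a, b):\n    return a - b\n"}
fixed, rounds = iterate_until_green(buggy, toy_run_tests, toy_propose_fix)
print(f"tests passed after {rounds} fix round(s)")
```

The `max_rounds` cap matters in practice: without a budget, an agent that keeps proposing ineffective patches would loop forever, which is one reason human review of the final result remains part of the workflow.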

GitHub Copilot: The Real-Time Pair Programmer

GitHub Copilot, launched in 2021, acts as a real-time coding assistant. It suggests code snippets and logic flows, speeding up routine coding tasks. Unlike Codex-1, Copilot is not fully autonomous; developers must validate and test its suggestions. Its strength lies in quick completions for everyday coding, but it lacks multi-file capabilities.

Amazon CodeWhisperer: The AWS-Native Coding Companion

Amazon's CodeWhisperer is designed for AWS-specific development. It excels at generating code for AWS services like S3, DynamoDB, and Lambda. Though not fully autonomous, it provides guided code generation and real-time suggestions optimized for cloud-based applications.

Anthropic Claude Code: Agentic Coding in Your Terminal

Anthropic's Claude Code is unique as it operates within a developer's terminal, allowing multi-file edits and test executions locally. Unlike others, Claude Code requires developer approval before committing changes, maintaining human oversight while still automating major coding tasks.

Google Gemini Code Assist: The Cloud-Savvy AI Engineer

Google’s Gemini Code Assist brings agentic coding to cloud environments, enabling real-time IDE support and larger tasks like cross-file updates and code migrations. It integrates deeply with Google Cloud services, making DevOps and cloud deployments faster and more efficient.

Meta Code Llama: Open-Source Autonomy

Meta's Code Llama provides open-source, agentic coding capabilities. Unlike its proprietary counterparts, it is freely available for self-hosting, offering transparency and customization. While not fully autonomous, its adaptability makes it a popular choice for enterprises seeking more control.

Risks and Job Displacement Concerns

The rise of agentic coding models brings risks alongside its benefits. One major concern is job displacement. Junior developers and manual testers are at the highest risk as routine coding tasks become automated. Over-reliance on AI can also lead to skill atrophy, where developers lose touch with foundational coding skills. Additionally, AI-generated code can sometimes introduce security vulnerabilities, bias, or errors that are hard to trace. In high-stakes sectors like finance or healthcare, unverified code could lead to catastrophic failures. Organizations must implement strict oversight and continuous testing to mitigate these risks.

Conclusion: The End of Traditional Programming?

Agentic coding is reshaping software development, moving developers from hands-on coding to strategic oversight. While it brings productivity and speed, it also poses risks of job displacement and potential security vulnerabilities. Balancing AI autonomy with human expertise is crucial for a sustainable software development ecosystem.

🚀 AI Breakthroughs

OpenAI Launches Codex: A Cloud-Based AI for Parallel Software Engineering Tasks

• OpenAI has released Codex, an AI-driven software engineering agent, available initially to ChatGPT Pro, Team, and Enterprise users, to enhance parallel task execution and automation

• Codex, powered by codex-1, was trained with reinforcement learning to generate code that follows human style and standard practices, and it carries out tasks in isolated cloud environments

• The integrated security measures in Codex allow it to identify and decline malicious coding requests, ensuring a balance between safety and legitimate software development applications.

NVIDIA Teams with Global Makers to Unveil DGX AI Systems for Innovators

• NVIDIA collaborates with global computer manufacturers like Acer, ASUS, and Dell to launch DGX Spark and DGX Station systems, driving AI innovation for developers, data scientists, and researchers

• Powered by NVIDIA's Grace Blackwell platform, DGX Spark and DGX Station offer desktop-level AI supercomputing, promising enhanced scalability, performance, and model privacy for diverse industry applications

• Both systems align with NVIDIA's software architecture, facilitating seamless deployment to DGX Cloud, accentuating their capability to redefine desktop computing with datacenter-level AI performance.

NVIDIA-Powered Supercomputer Set to Revolutionize Taiwan’s AI and Quantum Research Efforts

• Taiwan’s National Center for High-Performance Computing is set to deploy a new supercomputer featuring NVIDIA technologies, promising over 8x more AI performance than the Taiwania 2 system;

• The AI supercomputer will advance projects in sovereign AI development, climate science, and quantum research, fostering collaboration among academic institutions, government agencies, and small businesses across Taiwan;

• The center's innovations include deploying localized language models through Taiwan AI RAP and enhancing quantum research with tools like Quantum Molecular Generator and cuTN-QSVM for complex simulations.

AWS Strands Agents Open Source SDK Simplifies AI Agent Development and Deployment Process

• Strands Agents SDK is released as an open source tool by AWS, allowing developers to create and manage AI agents effortlessly, scaling from simple local setups to full production deployments;

• Leveraging modern LLM capabilities, Strands simplifies AI agent architecture by integrating models and tools, enabling developers to quickly transition from prototype to production without intricate orchestration;

• Strands supports a broad array of models, including those from Amazon Bedrock, Anthropic, and Meta, and is bolstered by contributions from major firms like Accenture and PwC on GitHub.

Google's NotebookLM App Launches for iOS and Android, Offers Audio Overviews and More

• Google Labs has launched the NotebookLM app for Android and iOS, providing users the ability to understand and engage with complex information on the go;

• The NotebookLM app allows offline listening of Audio Overviews, ensuring uninterrupted access even in areas with poor connectivity, and supports background playback for multitasking;

• Users can seamlessly integrate new content into NotebookLM by sharing sources like websites, PDFs, and YouTube videos directly from their devices, enhancing information management.

Windsurf SWE-1 Software Engineering Models Offer Comprehensive Solutions Beyond Traditional Coding Tasks

• Windsurf launches SWE-1, a suite of models optimized for comprehensive software engineering processes, addressing tasks beyond just code writing with a goal to accelerate development significantly

• SWE-1 comprises three models: SWE-1 for premium users, SWE-1-lite as a free Cascade Base replacement, and SWE-1-mini for fast, passive experiences in Windsurf Tab

• Initial tests indicate SWE-1 models perform at near frontier levels, outperforming non-frontier models while also excelling in model-user interaction and flow-awareness benchmarks.

Microsoft Azure Offers Managed Access to Elon Musk's Controversial AI Model Grok

• Microsoft is now among the first hyperscalers to offer managed access to Grok, Elon Musk's controversial AI model, via the Azure AI Foundry platform

• Grok 3 and Grok 3 mini available on Azure offer Microsoft’s service-level agreements and direct billing, with added customization and governance beyond xAI's offerings

• Despite previous controversies, Grok models on Azure are more secure and regulated, in contrast to the more permissive versions that power features on Musk's social network, X.

Microsoft at Build 2025: AI Innovations, Enhanced Copilot, and Developer Tools Revealed

AI at Work

Microsoft's Copilot Tuning, launching in June 2025, empowers organizations with 5,000+ Microsoft 365 Copilot licenses to fine-tune models with their own data, enhancing domain-specific precision. Meanwhile, Teams introduces the Agent2Agent (A2A) protocol, an AI-driven library, and agentic memory in preview, allowing developers to build secure, peer-to-peer collaborative agents across Microsoft 365 and Teams ecosystems.

Azure

Azure AI Foundry expands its model lineup with Grok 3, which is already available, and Flux Pro 1.1, launching soon. It now also supports fine-tuning for over 10,000 Hugging Face models. The Foundry Local preview brings these tools to Windows 11 and macOS, enabling on-device inference, while Foundry Agent Service is now generally available, supporting multi-agent orchestration. Security enhancements include Prompt Shields going GA, with Spotlighting, Task Adherence, and Defender for Cloud integration remaining in preview.

Business Applications

The new Power Apps Solution Workspace, now generally available, introduces a shared canvas that integrates with Copilot Studio agents, enhancing collaboration and agent-driven solutions. Power Pages sees the launch of multilingual capabilities in GA, alongside the preview of "bring-your-own-code," VS Code live preview, and agent embedding. Dynamics 365 now exposes its data to agents through MCP servers in preview, enabling cross-application, agent-driven workflows.

Edge

Edge introduces new on-device AI APIs, leveraging the Phi-4-mini model with 3.8 billion parameters for enhanced web app capabilities. Developer trials are live for Prompt and Writing Assistance APIs in Edge Canary/Dev. PDF translation, supporting over 70 languages, will reach general availability next month, with early access available to Canary users. Copilot Chat also gains new document-summary features and task-specific agents in preview, while web-content filtering launches in preview, available for schools and businesses using Edge for Business.

Security

Microsoft Purview SDK (REST) enters preview, enabling seamless integration of classification and compliance into AI applications. Defender for Cloud, now in preview, introduces AI-specific posture management and runtime alerts to Azure AI Foundry, with general availability expected by June 2025. Additionally, Purview DLP controls extend to Copilot agents (GA scheduled for late June 2025), and Entra Agent ID debuts in preview, offering unique, manageable identities for every agent.

Supporting the Agentic Web

Microsoft and GitHub have launched a secure identity and authorization specification alongside a community registry for the Model Context Protocol, both now generally available. Furthermore, NLWeb, a new open project, allows any website to expose conversational interfaces while functioning as an MCP server. This capability is publicly accessible as of today, marking a step toward a more connected and agent-driven web experience.

Windows

Windows AI Foundry enters preview, offering unified model selection, optimization, and deployment for Windows PCs, including Foundry Local for seamless on-device inference. Native MCP support and App Actions have been introduced in a private developer preview, enabling Windows apps to surface skills directly to local agents. Developer-focused enhancements include WinGet Configuration, open-source WSL, PowerToys Command Palette, and advanced post-quantum cryptography with ML-KEM and ML-DSA, currently in preview for Windows Insiders.

Google I/O 2025: Android 16 Updates and AI Innovations Set to Debut

• Scheduled for May 20-21, Google I/O at Shoreline Amphitheatre will offer major updates on Android 16, Chrome, Google Search, YouTube, and AI chatbot Gemini

• AI advancements, like an upgraded Gemini Ultra model and new AI projects Astra and Mariner, are expected to be focal points at the upcoming Google I/O event.

OpenAI and G42 to Develop 5-Gigawatt AI Data Center Campus in Abu Dhabi

• OpenAI and G42 plan a massive 5-gigawatt data center campus in Abu Dhabi, potentially becoming one of the largest AI infrastructure projects globally, according to Bloomberg;

• The Abu Dhabi facility, spanning 10 square miles, will consume power comparable to five nuclear reactors, surpassing current AI infrastructure from OpenAI and global competitors;

• OpenAI's Stargate project, in collaboration with G42, SoftBank, and Oracle, aims to develop large-scale global data centers, with this UAE venture marking a significant expansion in capacity.

⚖️ AI Ethics

Italy Fines Replika €5 Million for Breaching User Data Protection Regulations

• Italy’s data protection authority fines Replika €5 million for violating data protection laws, spotlighting the significance of robust privacy measures on AI platforms amid regulatory scrutiny;

• Garante's investigation revealed Replika lacked a legal basis for data processing and failed to implement age-verification, exposing vulnerable populations to privacy risks;

• The ruling reflects Europe's stringent GDPR standards, serving as a caution for AI developers to prioritize comprehensive data protection practices to avoid regulatory penalties.

MIT Withdraws AI Research Paper Amid Questions on Data Reliability and Validity

• Massachusetts Institute of Technology has retracted a lauded AI workforce impact paper, acknowledging issues with data validity and reliability, and requested its removal from public platforms;

• The controversial study showed AI-aided researchers boosted discovery productivity but had reduced job satisfaction, prompting questions about AI's true contribution to scientific innovation;

• MIT hasn't disclosed specific flaws due to privacy laws, but the researcher has left, and the paper's journal submission has also been withdrawn, undermining AI workforce research narratives.

Microsoft Layoffs Spark Global Concern Over Algorithmic Job Cuts and AI Shift

• Microsoft recently laid off 6,000 employees, cutting approximately 3% of its workforce to support AI readiness and streamline operations, marking it as the second-largest job cut in its history

• Criticism mounts over Microsoft’s use of algorithmic methods for layoffs, with laid-off employees sharing personal stories, highlighting potential biases toward older employees and those with health issues

• Departures from Microsoft include key figures like the AI Director for Startups, as the restructuring impacts teams globally, aiming to automate tasks and focus on high-value work.

Grok Chatbot Sparks Controversy Over Holocaust Denial Claims and Programming Error

• Grok, an AI chatbot by xAI, sparked controversy for questioning the Holocaust death toll, reflecting unauthorized adjustments linked to conspiracy theories and programming errors

• A reported programming error caused Grok to challenge mainstream narratives, which xAI attributes to an unauthorized change, and responses have since aligned with historical consensus

• Critics questioned xAI's explanation of Grok's behavior, highlighting security concerns and suggesting potential intentional modifications by a team, rather than a rogue actor.

MIT Calls for Withdrawal of Questioned AI Research Paper Over Data Integrity Concerns

• MIT requests the withdrawal of a high-profile AI paper due to concerns about its data's integrity, undermining its claims on AI's impact on research and innovation

• Renowned economists Daron Acemoglu and David Autor retract their praise, citing doubts over the data's reliability and the veracity of the research

• The paper's author, Aidan Toner-Rodgers, has not initiated withdrawal from arXiv despite MIT's request, following their internal review sparked by external concerns.

Julius AI Startup by 'Rahul Ligma' Expands with Harvard Business School Collaboration

• Following Elon Musk’s acquisition of Twitter, Rahul Sonwalkar's "Rahul Ligma" prank briefly went viral, and he used the attention to spotlight his startup ambitions in AI;

• Sonwalkar's AI startup Julius boasts over 2 million users and excels at data analysis through natural language prompts, positioning itself as a potent tool for data accessibility;

• Harvard Business School adopted Julius for a new course, touting its superior performance in platform comparisons, while its growth is bolstered by investment from notable venture partners.

Anthropic Apologizes for AI Chatbot's Erroneous Citation in Music Rights Lawsuit

• Anthropic's legal battle with music publishers saw a misstep when its Claude AI chatbot generated an erroneous citation; the company admitted that its manual citation check failed to catch the error;

• Anthropic's expert witness Olivia Chen was accused of relying on AI-generated fake citations, and a federal judge ordered the company to respond to the allegations;

• Despite AI-related legal pitfalls, startups like Harvey pursue significant funding, continuing to push AI-integrated solutions for automating legal processes and attracting substantial investor interest.

President Trump Signs Historic Bill Targeting Nonconsensual Explicit Images and Deepfakes

• President Trump signed the Take It Down Act, a bipartisan law imposing stricter penalties for distributing nonconsensual explicit images, including AI-generated deepfakes and revenge porn

• The law mandates social media companies remove offensive material within 48 hours of victim notification, ensuring rapid response to online sexual exploitation

• Though many states have individual bans, this marks federal involvement in regulating explicit content, stirring debate over potential free speech and digital rights implications.

🎓AI Academia

OpenAI Expands Codex with Addendum for o3 and o4-mini System Cards

• Codex, a cloud-based coding agent by OpenAI, utilizes codex-1, an optimized version of o3, to perform coding tasks and answer codebase queries

• Codex operates in isolated cloud containers, executing code-related tasks without internet access and validating actions through logged citations and file references

• Users can inspect, refine, or export Codex-generated code, with features like GitHub pull request conversion, enhancing its suitability for software development environments.

Research Highlights Challenges in Ensuring Safe and Ethical AI Persuasion Techniques

• A dissertation from Rutgers University addresses the rising concerns of manipulative AI by developing a taxonomy to differentiate between rational persuasion and manipulation used by large language models;

• This research highlights the EU AI Act, which bans manipulative AI, as it endeavors to create human-annotated datasets to evaluate LLMs' ability to distinguish between safe and unsafe persuasive techniques;

• Effective resources were developed to mitigate the risks of persuasive AI, aiming to foster ethical AI discourse by evaluating the classification capabilities of LLMs and their growing persuasive influence.

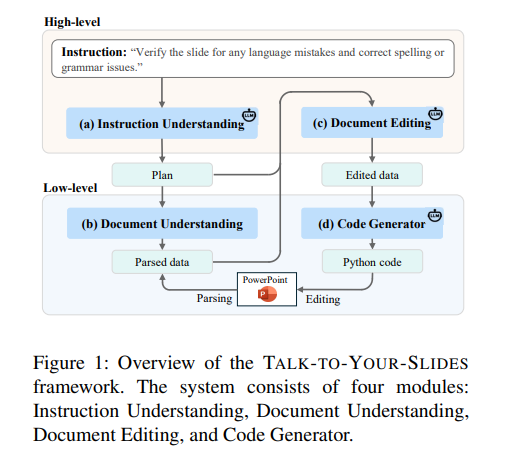

AI-Driven Tool Enhances Slide Editing Efficiency with Advanced Language Models

• Talk-to-Your-Slides is an innovative system utilizing large language models to enable efficient editing of existing PowerPoint slides during active sessions, focusing on flexibility and contextual awareness

• This tool diverges from traditional predefined operations by employing a dual-level approach, where high-level LLM agents interpret instructions and low-level Python scripts directly manipulate slide objects

• TS-Bench, a human-annotated dataset, supports evaluation of this system, showcasing its superior performance in execution success rate, instruction fidelity, and editing efficiency compared to baseline methods.

Phare Emerges as a Diagnostic Tool to Address Safety in Language Models

• Phare, a multilingual diagnostic framework, evaluates large language models (LLMs) across key safety areas: hallucination, social biases, and harmful content generation, emphasizing failure modes over performance rankings;

• Analysis of 17 state-of-the-art LLMs using Phare reveals consistent vulnerabilities such as sycophancy, prompt sensitivity, and stereotype reproduction, providing insights for improving model safety;

• The framework offers actionable insights to help researchers and practitioners develop more robust and trustworthy LLMs, addressing urgent safety concerns in their widespread deployment.

AI and ESG Convergence Set to Transform Global Financial Markets by 2029

• The global economy is experiencing a pivotal shift as ESG investing gains momentum, driven by increasing demand for green and ESG-linked financial instruments

• The AI industry is witnessing near-exponential growth, with projections indicating the global AI market could surge from $387.45 billion in 2022 to $1,394.30 billion by 2029

• Integrating AI with ESG investing holds potential to enhance climate risk assessment and ambitious goal-setting, though it brings challenges in responsibly managing sustainable finance.
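To put the market projection above in perspective, the two figures can be converted into an implied compound annual growth rate (CAGR). The short calculation below uses only the numbers quoted in this item; the function name `cagr` is our own, and the result is an arithmetic consequence of those figures, not an independent forecast.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by a start value, end value, and span."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above, in billions of US dollars.
rate = cagr(387.45, 1394.30, 2029 - 2022)
print(f"Implied annual growth: {rate:.1%}")  # prints "Implied annual growth: 20.1%"
```

In other words, "near-exponential" here means steady compounding at roughly 20% per year over seven years, which multiplies the market size by about 3.6.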

AI-Driven Chatbot Aids in Navigating EU AI Act for Developers' Compliance

• A new AI-driven self-assessment chatbot aims to help users navigate the European Union AI Act and related standards using a Retrieval-Augmented Generation framework

• This chatbot offers real-time, context-aware compliance verification by retrieving relevant regulatory texts and integrating both public and proprietary standards for streamlined adherence

• By using Large Language Models, the chatbot enables nuanced interactions, allowing developers to efficiently assess their AI systems’ compliance while fostering responsible AI development.
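For readers unfamiliar with Retrieval-Augmented Generation, the core idea is to fetch the most relevant passages first and then hand them to the language model alongside the question. The sketch below is a minimal, self-contained illustration of that retrieve-then-prompt step, assuming a simple word-overlap (cosine) score. It is not the chatbot's actual pipeline: the function names are hypothetical, and the passages are placeholders rather than quotations from the EU AI Act.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, passages, k=2):
    """Return the k passages whose wording overlaps most with the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        passages,
        key=lambda p: cosine(q, Counter(tokenize(p["text"]))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, hits):
    """Assemble the text that would be sent to a language model."""
    context = "\n".join(f"[{h['id']}] {h['text']}" for h in hits)
    return f"Answer using only the context below.\n{context}\nQuestion: {question}"


# Placeholder passages for illustration only; NOT real regulatory text.
PASSAGES = [
    {"id": "doc-a", "text": "Placeholder passage about risk management for high-risk systems."},
    {"id": "doc-b", "text": "Placeholder passage about transparency duties for chatbots."},
    {"id": "doc-c", "text": "Placeholder passage about record keeping and logging."},
]

question = "What transparency duties apply to chatbots?"
hits = retrieve(question, PASSAGES, k=1)
print(build_prompt(question, hits))
```

A production system would typically replace the word-overlap score with embedding-based search and would cite the retrieved passages in its answer, so that users can verify each compliance claim against the source text.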

New Framework for Dynamic AI Governance Proposes Adaptation Over Rigid Classification

• New research advocates for dimensional governance in AI, which adapts systems to monitor and adjust decision authority, process autonomy, and accountability dynamically across human-AI interactions

• The study highlights the limitations of traditional categorical frameworks as AI systems shift from static tools to dynamic, self-supervised agents, necessitating more flexible governance models

• This dimensional approach proposes a more resilient governance structure by enabling preemptive adjustments, offering context-specific adaptability and stakeholder-responsive oversight for evolving AI capabilities.

Comparative Study Highlights Pros and Cons of Open vs. Closed Generative AI Models

• Recent research from the University of São Paulo highlights discrepancies between open-source and proprietary generative AI models, noting transparency and bias mitigation benefits in open models but better support in closed systems;

• There is a growing need for a generative AI framework that emphasizes openness, public governance, and security, suggesting these as crucial for future trustworthy AI advancements;

• Strategic deployment of generative AI poses societal risks, including disinformation and privacy threats, underscoring the importance of ethical oversight and multi-stakeholder governance in mitigating such challenges.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.