Nvidia Crowned World's Most Valuable Company, Surpassing Microsoft

Claude 3.5 Sonnet Sets New Benchmarks; Meta Unveils Public AI Models; AI Copilot Targets Cancer Treatment; Dell Launches AI Factory with Partners; Navy Employs AI in Underwater Defense; Sutskever Launches New AI Firm

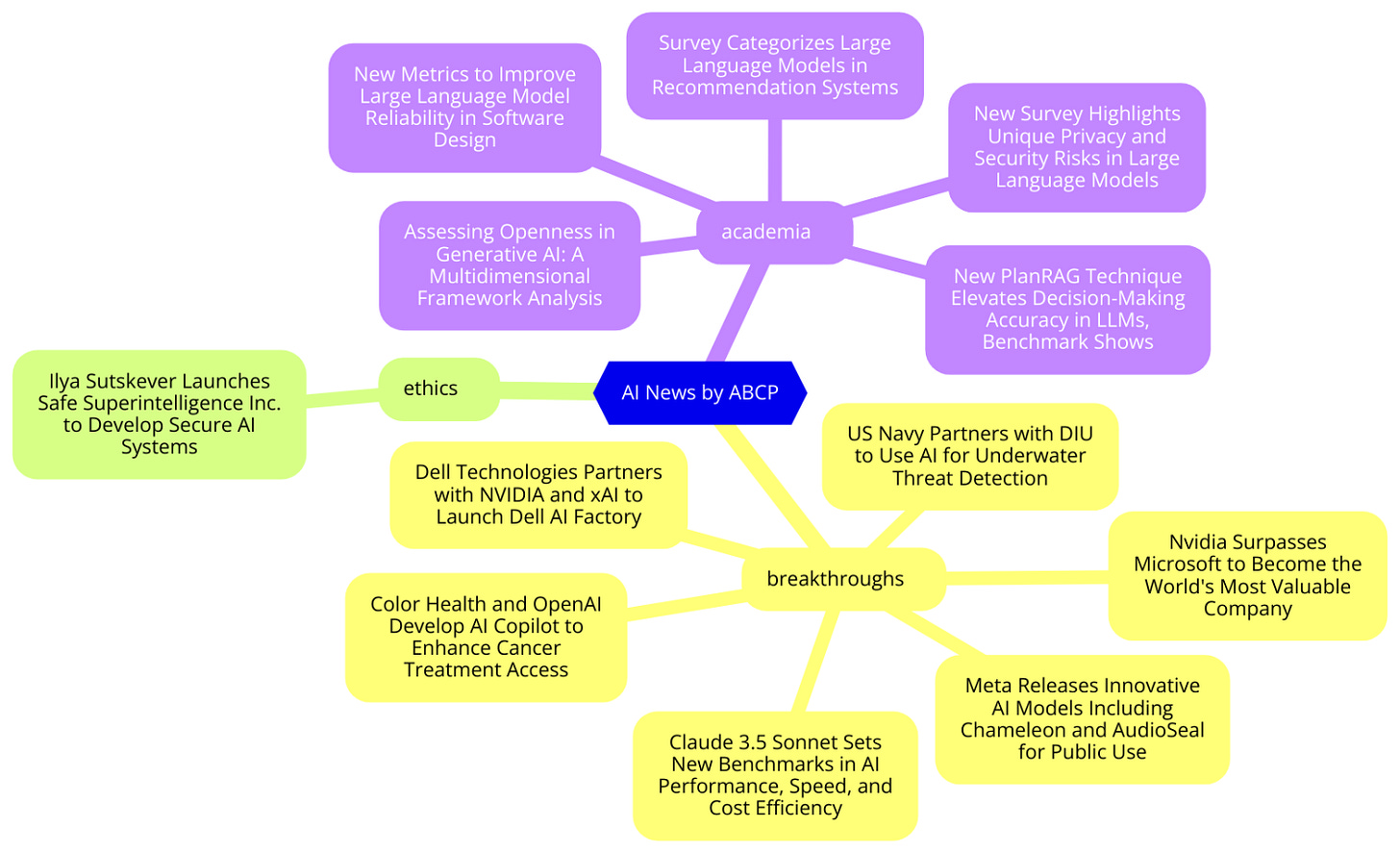

Today's highlights:

🚀 AI Breakthroughs

Nvidia Surpasses Microsoft to Become the World's Most Valuable Company

• Nvidia (NVDA) surpassed Microsoft (MSFT) to become the world's most valuable company, with a market cap of more than $3.33 trillion

• Nvidia's stock has risen 215% over the past year, including a 175% gain in 2024 alone, far outpacing Microsoft’s 19% gain this year

• Nvidia's market cap climbed from $1 trillion to $3 trillion in record time, between June 2023 and June 2024

• Nvidia's central role in AI chips underpins its lead in the tech industry, as its hardware powers the AI offerings of the largest tech companies

• Nvidia announced the upcoming release of advanced AI chips, including the Blackwell Ultra in 2025 and the Rubin platform in 2026

• Despite growing competition from AMD and Intel in AI chip development, Nvidia remains the principal provider for big tech firms.

Claude 3.5 Sonnet Sets New Benchmarks in AI Performance, Speed, and Cost Efficiency

• Claude 3.5 Sonnet, the first model in the Claude 3.5 family, outperforms the previous top model, Claude 3 Opus, on intelligence benchmarks while running at twice its speed

• Claude 3.5 Sonnet is free to use on Claude.ai and the Claude iOS app, with substantially higher rate limits for Pro and Team subscribers, and is also available on Amazon Bedrock and Google Cloud’s Vertex AI

• The model costs $3 per million input tokens and $15 per million output tokens and offers a 200K-token context window

• Claude 3.5 Sonnet excels at advanced tasks such as visual reasoning and code troubleshooting, significantly outperforming previous models on standard industry evaluations

• A new 'Artifacts' feature on Claude.ai lets users view, edit, and build on AI-generated content such as code, designs, and text documents in real time

• Anthropic emphasizes safety and privacy, having subjected the model to rigorous external testing to check that it meets current safety standards and ethical guidelines.
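The two list prices translate directly into a per-request cost. The Python sketch below assumes only the published rates and a simple linear formula; it ignores any discounts or volume pricing, and the function name `request_cost` is hypothetical.

```python
# Claude 3.5 Sonnet list prices, in US dollars per million tokens.
INPUT_PRICE_PER_MTOK = 3.00
OUTPUT_PRICE_PER_MTOK = 15.00


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request at list prices (hypothetical helper)."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    # Each token type is billed linearly at its own per-million rate.
    return (input_tokens * INPUT_PRICE_PER_MTOK
            + output_tokens * OUTPUT_PRICE_PER_MTOK) / 1_000_000


# A 100K-token prompt (half the context window) with a 2K-token reply:
print(f"${request_cost(100_000, 2_000):.2f}")  # $0.33
```

Output tokens cost five times as much as input tokens, so long prompts with short answers stay comparatively cheap.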

Meta Releases Innovative AI Models Including Chameleon and AudioSeal for Public Use

• Meta’s FAIR team has publicly released several research models, including image-to-text and text-to-music generation models

• Chameleon, a new mixed-modal model from Meta, can both understand and generate text and images, accepting and producing any combination of the two

• Meta also released models trained with multi-token prediction, which teaches language models to predict several future words at once instead of one at a time, making training and inference faster and more efficient

• JASCO, Meta's new text-to-music model, offers finer control over generated music by accepting additional inputs such as chords

• AudioSeal is an audio watermarking technique designed for localized detection of AI-generated speech, pinpointing AI-generated segments within longer audio clips

• Meta releases tools and data to address geographic disparities in text-to-image models, aiming to increase cultural and geographic representation in AI-generated content.
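Multi-token prediction is easiest to see in how the training targets change. The Python sketch below is a simplified illustration, not Meta's code: for each position it pairs the context with the next n tokens (n = 1 recovers ordinary next-token prediction). It assumes a shared model with n output heads, where head i is scored on the token i+1 positions ahead and the per-head cross-entropy losses are summed. The function names and the toy probability format are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def multi_token_targets(tokens: List[int], n: int) -> List[Tuple[List[int], List[int]]]:
    """Pair each prefix tokens[:t+1] with the next n tokens.

    Positions without n future tokens are skipped (a simplification for this sketch).
    """
    if n < 1:
        raise ValueError("n must be >= 1")
    examples = []
    for t in range(len(tokens) - n):
        context = tokens[: t + 1]
        future = tokens[t + 1 : t + 1 + n]
        examples.append((context, future))
    return examples


def multi_token_loss(head_probs: List[Dict[int, float]], future: List[int]) -> float:
    """Sum of per-head cross-entropy losses; head i predicts the token i+1 steps ahead."""
    if len(head_probs) != len(future):
        raise ValueError("need one output head per future token")
    return -sum(math.log(head_probs[i][tok]) for i, tok in enumerate(future))


print(multi_token_targets([10, 11, 12, 13, 14], n=2))
# [([10], [11, 12]), ([10, 11], [12, 13]), ([10, 11, 12], [13, 14])]
```

Each training position now supervises n predictions instead of one, which is where the extra learning signal per token comes from.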

Color Health and OpenAI Develop AI Copilot to Enhance Cancer Treatment Access

• Color Health is working with OpenAI on an AI copilot, built on GPT-4o, to speed up patients' access to cancer treatment

• The AI copilot system aids clinicians by creating personalized cancer treatment plans, improving decision-making speed and accuracy

• The application is HIPAA-compliant, protecting patient privacy and data security

• Clinicians review the AI-generated recommendations and can modify them, keeping patient care tailored and precise

• By analyzing large volumes of medical data alongside clinical guidelines, the copilot aims to speed up diagnosis and produce personalized screening plans

• In partnership with UCSF, Color plans to apply the AI copilot to all new cancer cases, with the goal of improving patient outcomes.

Dell Technologies Partners with NVIDIA and xAI to Launch Dell AI Factory

• Michael Dell announced that Dell is building an AI factory using NVIDIA GPUs to power Grok, the AI model from Elon Musk's xAI

• Elon Musk invested heavily in NVIDIA hardware in 2023, buying tens of thousands of GPUs to improve xAI's Grok models

• Training Grok 2 required 20,000 Nvidia H100 GPUs, and future versions such as Grok 3 are expected to need around 100,000 chips

• xAI plans to build a new supercomputer by fall 2025 to support Grok's development, possibly in partnership with Oracle

• Musk said the planned GPU cluster for Grok would be four times the size of today's largest GPU clusters

• In related news, Meta's chief AI scientist Yann LeCun said Meta has bought $30 billion worth of NVIDIA GPUs to train its AI models.

US Navy Partners with DIU to Use AI for Underwater Threat Detection

• The US Navy, working with the Defense Innovation Unit (DIU), is using AI to detect underwater threats autonomously

• This AI initiative has cut the time needed to scan ocean floors for mines by half, significantly enhancing mission efficiency

• Underwater drones equipped with AI and sonar sensors streamline shape recognition and navigation, accelerating operational tempo

• AI models can now be updated remotely, cutting upgrade times from months to under a week

• Strategic partnerships with tech firms like Arize AI and Latent AI have established robust AI systems, advancing underwater security measures

• Plans are underway to extend these AI advancements to broader defense applications, underscoring a commitment to technological leadership in global security scenarios.

⚖️ AI Ethics

Ilya Sutskever Launches Safe Superintelligence Inc. to Develop Secure AI Systems

• Ilya Sutskever launched Safe Superintelligence Inc., aiming to create safe, powerful superintelligent AI systems

• Amid criticism of OpenAI's safety practices, Sutskever's startup states a clear goal: advancing safety and capabilities in tandem

• SSI distinguishes itself by focusing solely on superintelligence, avoiding distractions from management overhead and product cycles

• The startup has offices in Palo Alto and Tel Aviv, where it aims to recruit a specialized team for superintelligence work

• Daniel Gross and Daniel Levy join Sutskever in SSI, emphasizing the urgency of developing safe AI amidst global talent shifts in the AI industry.

🎓 AI Academia

New PlanRAG Technique Elevates Decision-Making Accuracy in LLMs, Benchmark Shows

• A new study explores using large language models (LLMs) for complex decision-making, framed as a task called Decision QA

• The study introduces a benchmark, DQA, with two scenarios, Locating and Building, derived from strategy video games

• The researchers developed PlanRAG, a new retrieval-augmented generation (RAG) technique in which the LLM plans its analysis before retrieving data, improving its decision-making

• PlanRAG outperforms existing iterative RAG methods by 15.8% and 7.4% in the Locating and Building scenarios, respectively

• The effectiveness of PlanRAG was demonstrated through rigorous tests against state-of-the-art methods in the Decision QA benchmark

• The complete code and benchmark for PlanRAG are publicly available on GitHub.
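The plan-then-retrieve idea behind PlanRAG can be sketched as a control loop. The Python below is a hypothetical outline, not the authors' released code: `plan`, `retrieve`, `needs_replan`, and `answer` stand in for LLM and database calls, and the `max_rounds` cap is an assumption added to guarantee termination.

```python
from typing import Callable, List


def plan_rag(question: str,
             plan: Callable[[str, List[str]], List[str]],
             retrieve: Callable[[str], str],
             needs_replan: Callable[[str, List[str]], bool],
             answer: Callable[[str, List[str]], str],
             max_rounds: int = 3) -> str:
    """Plan, retrieve, and optionally re-plan before answering (hypothetical helpers)."""
    evidence: List[str] = []
    for _ in range(max_rounds):
        steps = plan(question, evidence)          # 1. make (or revise) an analysis plan
        for step in steps:                        # 2. retrieve data for each planned step
            evidence.append(retrieve(step))
        if not needs_replan(question, evidence):  # 3. stop once the plan looks sufficient
            break
    return answer(question, evidence)             # 4. decide using the gathered evidence


# Toy stand-ins: re-plan until two pieces of evidence have been collected.
result = plan_rag(
    "Where should the new factory go?",
    plan=lambda q, ev: [f"query-{len(ev)}"],
    retrieve=lambda step: step.upper(),
    needs_replan=lambda q, ev: len(ev) < 2,
    answer=lambda q, ev: " | ".join(ev),
)
print(result)  # QUERY-0 | QUERY-1
```

The difference from a plain iterative RAG loop is step 1: the model commits to a plan of what to look up before retrieving, and revisits that plan only when the evidence falls short.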

New Metrics to Improve Large Language Model Reliability in Software Design

• Large Language Models (LLMs) have revolutionized software design and interaction, enhancing productivity in routine task management

• Developers struggle to debug LLMs that give inconsistent answers to similar prompts

• Two new diagnostic metrics, sensitivity and consistency, have been introduced to measure stability in LLMs across rephrased prompts

• Sensitivity measures how much predictions change across prompt rephrasings and needs no ground-truth labels, while consistency measures how predictions vary across rephrasings among elements of the same class

• Empirical tests suggest these metrics can help balance robustness and performance in LLM applications

• Including these metrics in LLM training and prompt engineering might foster the development of more reliable AI systems.
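Both metrics can be approximated in a few lines of Python. This sketch makes assumptions the summary does not state: sensitivity is taken as the average entropy of each input's predicted labels across prompt rephrasings, and consistency as one minus the average total variation distance between the prediction distributions of inputs that share a true class. The paper's exact definitions and normalizations may differ, and all function names are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations
from typing import Dict, Hashable, List, Sequence


def label_distribution(preds: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Empirical distribution of one input's predicted labels across rephrasings."""
    counts = Counter(preds)
    return {label: c / len(preds) for label, c in counts.items()}


def sensitivity(preds_per_input: List[Sequence[Hashable]]) -> float:
    """Mean entropy (bits) of predictions across rephrasings; needs no true labels."""
    def entropy(dist: Dict[Hashable, float]) -> float:
        return -sum(p * math.log2(p) for p in dist.values() if p > 0)
    return sum(entropy(label_distribution(p)) for p in preds_per_input) / len(preds_per_input)


def total_variation(p: Dict[Hashable, float], q: Dict[Hashable, float]) -> float:
    """Total variation distance between two label distributions."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def consistency(preds_per_input: List[Sequence[Hashable]],
                true_labels: Sequence[Hashable]) -> float:
    """1 minus the mean pairwise TV distance between same-class inputs (1.0 = fully consistent)."""
    by_class: Dict[Hashable, List[Dict[Hashable, float]]] = {}
    for preds, y in zip(preds_per_input, true_labels):
        by_class.setdefault(y, []).append(label_distribution(preds))
    distances = [total_variation(a, b)
                 for dists in by_class.values()
                 for a, b in combinations(dists, 2)]
    return 1.0 - sum(distances) / len(distances) if distances else 1.0


# Each inner list holds one input's predictions under different prompt rephrasings.
preds = [["pos", "pos", "pos"], ["pos", "neg", "pos", "neg"], ["neg", "neg"]]
labels = ["pos", "pos", "neg"]
print(round(sensitivity(preds), 3))  # 0.333
print(consistency(preds, labels))    # 0.5
```

Lower sensitivity means rephrasing the prompt rarely flips the answer; higher consistency means inputs of the same class are treated alike.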

New Survey Highlights Unique Privacy and Security Risks in Large Language Models

• Artificial intelligence advancements have led to LLMs with enhanced language processing capabilities across various applications like chatbots and translation

• Privacy and security concerns in LLMs' life cycle have attracted significant attention from academics and industries

• The survey proposes a taxonomy of LLM risks, detailing unique and common threats, along with potential countermeasures, across five key scenarios

• It examines how privacy and security risks differ between LLMs and traditional models, with attention to attackers' specific goals and capabilities

• An in-depth analysis covers unique LLM scenarios such as federated learning and machine unlearning, aiming to enhance overall system security

• The study maps out threats to LLMs from pre-training to deployment, encouraging robust defense methods to mitigate these vulnerabilities.

Survey Categorizes Large Language Models in Recommendation Systems

• Large Language Models (LLMs) now significantly impact recommendation systems, enhancing user-item correlation through advanced text representation

• The survey introduces a taxonomy that divides LLM-based recommendation into discriminative and generative paradigms, an approach the authors present as novel

• The survey reviews LLM-based systems, revealing strengths and weaknesses and offering insights into methodologies and performance outcomes

• Key challenges in employing LLMs in recommendation systems are identified, setting the stage for targeted future advancements

• A comprehensive GitHub repository has been launched, serving as a hub for researchers to access papers and resources on LLMs in recommendation systems.

Assessing Openness in Generative AI: A Multidimensional Framework Analysis

• The debate over what 'open' means in generative AI is intensifying as the EU AI Act approaches, since the Act will regulate open-source systems differently

• An evidence-based framework highlights 14 dimensions of openness in AI, from training data to licensing and access

• Most generative AI systems labeled 'open source' turn out to be insufficiently open, particularly regarding training data transparency

• Effective regulation requires composite and gradient assessments of openness, rather than relying solely on licensing definitions

• The survey exposes 'open-washing', in which firms make superficial claims of openness that help them evade scrutiny and accountability

• Full openness in training datasets, crucial for AI safety and legal compliance, remains a challenging but vital goal.
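A small example shows how a graded, composite assessment differs from a license-only check. The Python sketch below assumes each dimension is rated open, partial, or closed and scored 1, 0.5, or 0, with equal weights. The dimension names and ratings in the usage example are hypothetical, and the framework's 14 actual dimensions are not reproduced here.

```python
from enum import Enum
from typing import Dict


class Level(Enum):
    """Assumed three-level rating per openness dimension."""
    OPEN = 1.0
    PARTIAL = 0.5
    CLOSED = 0.0


def openness_score(ratings: Dict[str, Level]) -> float:
    """Graded composite: equally weighted mean of dimension scores, in [0, 1]."""
    if not ratings:
        raise ValueError("at least one dimension must be rated")
    return sum(level.value for level in ratings.values()) / len(ratings)


def license_only_view(ratings: Dict[str, Level]) -> bool:
    """The binary view the framework argues against: 'open' if the license is open."""
    return ratings.get("license") is Level.OPEN


# Hypothetical system: open license and weights, but closed training data.
example = {
    "license": Level.OPEN,
    "model_weights": Level.OPEN,
    "training_data": Level.CLOSED,
    "training_code": Level.PARTIAL,
}
print(license_only_view(example))  # True
print(openness_score(example))     # 0.625
```

The license check alone labels this system 'open', while the composite score of 0.625 exposes the closed training data, the kind of gap the survey describes as open-washing.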

About us: We are dedicated to reducing Generative AI anxiety among tech enthusiasts by providing timely, well-structured, and concise updates on the latest developments in Generative AI through our AI-driven news platform, ABCP - Anybody Can Prompt!