No, ChatGPT Didn’t Ban Medical or Legal Advice: Here’s the Real Story

OpenAI faced viral misinformation after a prediction platform claimed that ChatGPT had banned all legal and medical advice, prompting alarm across media platforms.

Today’s highlights:

Last week, rumors circulated that OpenAI’s ChatGPT had banned all legal and medical advice after a post from prediction platform Kalshi claimed, “JUST IN: ChatGPT will no longer provide health or legal advice.” This sparked widespread concern online, amplified by media outlets like IBTimes UK and Moneycontrol, which wrongly reported a new OpenAI policy.

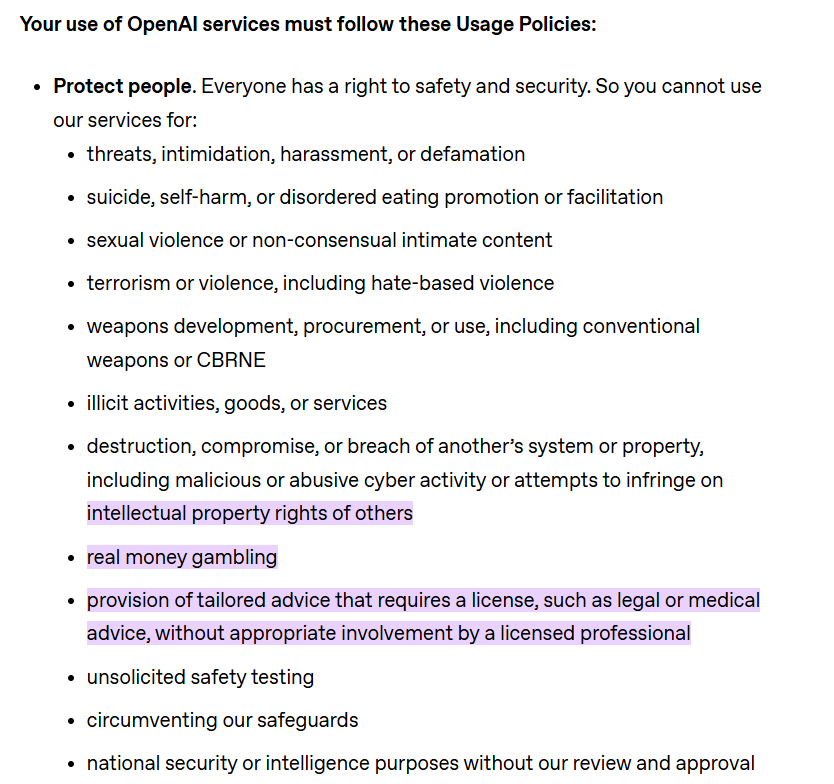

However, OpenAI’s updated usage policy on October 29 merely reiterated a long-standing clause: ChatGPT must not offer tailored advice requiring a license, such as medical diagnoses or legal counsel, without a qualified professional involved.

OpenAI’s Head of Health AI, Karan Singhal, publicly debunked the rumor, stating the assistant’s behavior “remains unchanged.” Tech outlets like The Verge and PPC Land confirmed the update did not introduce new bans but simply consolidated pre-existing rules.

Despite the viral confusion, ChatGPT still responds to legal and medical queries with general, educational information and clear disclaimers, just as it did before. For instance, one user asked ChatGPT to draft a legal document post-update, and the assistant complied with a notice: “This is not formal legal advice.” On Reddit and Hacker News, some users expressed disappointment, citing how ChatGPT helped during family medical crises, while others supported OpenAI’s cautious approach. The misunderstanding highlighted the speed at which AI policy updates can be misread and spread online. In reality, the “ban” was a myth; ChatGPT continues to provide useful, non-tailored guidance in sensitive domains, reinforcing its role as an educational tool rather than a diagnostic or legal one.

You are reading the 142nd edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI, AAIA) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework structured around progressive cognitive levels: starting with knowing and understanding, then using and applying, followed by analyzing and evaluating, and finally creating through a capstone project, with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our AIGP 8-week personalized training program. For customized enterprise training, write to us at [Link].

🚀 AI Ethics

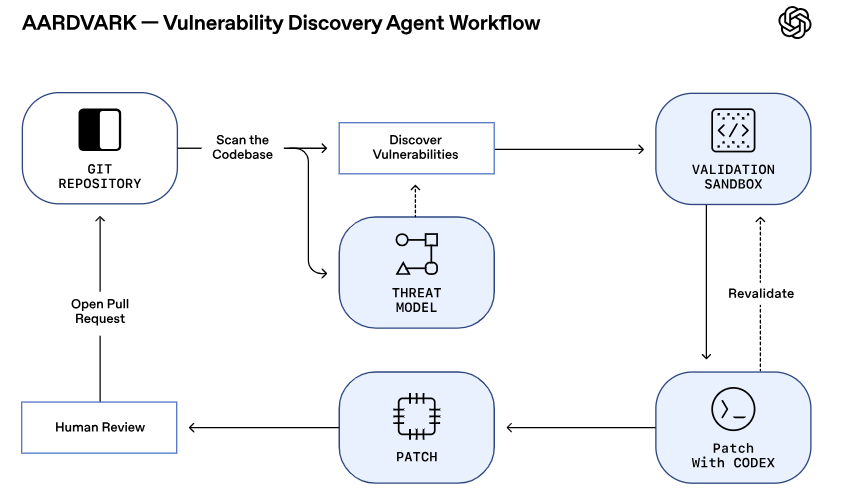

OpenAI Launches Aardvark, AI Agent for Detecting and Fixing Security Vulnerabilities

OpenAI has unveiled Aardvark, an autonomous AI agent designed to identify and mitigate security vulnerabilities in software codebases, using the capabilities of GPT-5. Currently in private beta with select partners, Aardvark utilizes large language model-based reasoning for detecting bugs and generating fixes at scale. The agent continuously monitors code repositories, builds threat models, and proposes patches after validating exploitability in a sandboxed environment. In internal tests, Aardvark successfully identified 92% of vulnerabilities, contributing to both enterprise and open-source projects, where it has led to multiple Common Vulnerabilities and Exposures (CVE) disclosures. OpenAI aims to enhance digital security and has updated its disclosure policy to encourage collaboration and efficient remediation.

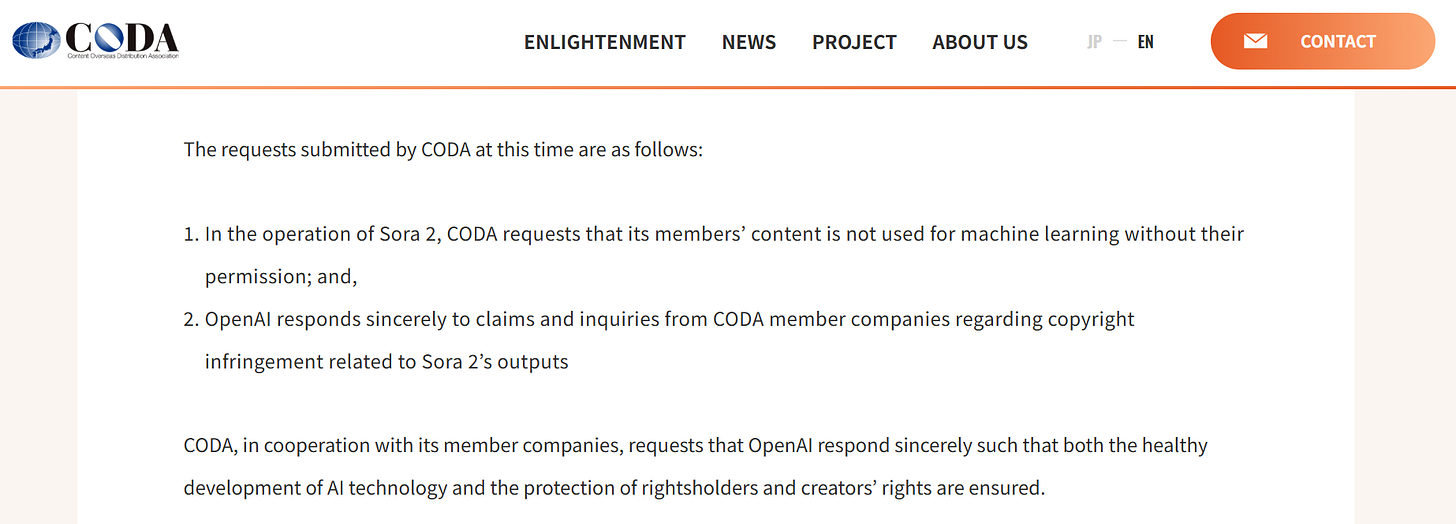

Japanese Publishers Urge OpenAI to Cease Using Their Content Without Consent

Japan’s Content Overseas Distribution Association (CODA), representing publishers like Studio Ghibli, has requested that OpenAI stop training its AI models on copyrighted content without permission. The appeal follows the popularity of OpenAI’s generative AI products that create images and videos in the style of Studio Ghibli works, sparking concerns about copyright infringement. CODA highlights the differences in copyright law interpretations between Japan and the United States, noting Japan’s stringent requirements for prior consent to use copyrighted works. This comes amidst ongoing legal ambiguities in the U.S., where courts have yet to definitively rule on the legality of training AI with copyrighted material.

Tech Giants Face Power Struggle as AI Demands Outpace Energy Supply Solutions

The tech industry faces a critical challenge in balancing power supply and AI development, a concern raised by leaders at companies such as OpenAI and Microsoft. Despite a surge in purchases of advanced GPUs, these organizations lack the power infrastructure to support their data centers, revealing a mismatch between compute capacity and energy availability. The problem is compounded by electricity demand from data centers, which is growing faster than traditional energy supply can expand. Renewable sources like solar offer some promise because they are modular and can be deployed quickly, much like semiconductor technologies. However, demand remains unpredictable, and the risk that compute resources, rather than power, end up oversupplied is a significant concern for the future of AI deployment.

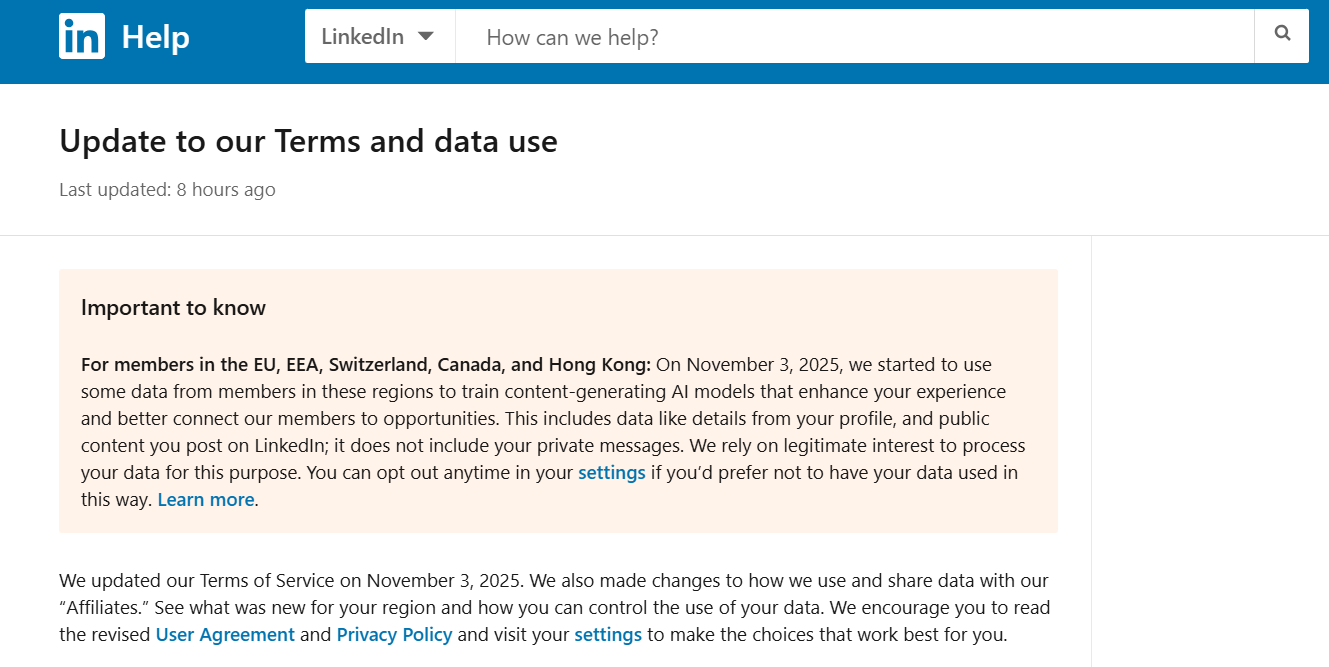

LinkedIn Users Must Act to Prevent AI Training with Personal Data

Starting today, November 3, 2025, LinkedIn will automatically use member data from regions including the UK, EU, Switzerland, Canada, and Hong Kong to train its AI models, unless users opt out. This move, based on legitimate interest under data protection laws, allows LinkedIn to integrate AI features such as job matching and writing suggestions into its platform. Users concerned about privacy can change their settings to prevent their data from being included, but must act before the changes take effect. Under-18 users will be excluded from this data usage.

Amazon CEO Clarifies AI’s Role, Cites Company Culture as Key Factor in Layoffs

Amazon’s CEO Andy Jassy stated that the recent layoffs of 14,000 corporate jobs were driven by cultural shifts within the company, rather than financial issues or the impact of AI. The e-commerce giant saw a 13% increase in third-quarter sales, reaching $180.2 billion, with its cloud business AWS experiencing significant growth. Despite citing company culture as the reason for layoffs, Jassy acknowledged the transformative impact of AI on Amazon’s operations. The firm aims to streamline and operate more efficiently amidst expected technological advancements. Jassy also noted the potential for further employee automation, which could alter future workforce needs.

Xi Proposes Global AI Governance Body Amidst US-China Trade Tensions at APEC

At the APEC leaders’ meeting, Chinese President Xi Jinping advocated for the creation of a global organization to govern artificial intelligence, positioning China as a potential alternative to the U.S. on trade cooperation. Xi proposed a World Artificial Intelligence Cooperation Organization to set international AI governance rules, while promoting AI as a benefit for all nations. The initiative reflects China’s ambitions to lead in AI and trade discussions, especially in light of the U.S.’s resistance to international AI regulation. During the event, APEC members agreed on joint declarations regarding AI and other issues, with China’s growing influence further underscored by plans to host the 2026 APEC summit in Shenzhen.

Amazon Job Cuts Across California Reflect Growing Focus on AI Investments

Amazon is executing a significant round of layoffs, impacting 1,403 employees across seven cities in California as part of a global strategy to reduce its workforce by 14,000 corporate roles. These cuts primarily affect software development engineers but also include recruiters, business analysts, marketers, managers, and game designers. The layoffs occur amidst Amazon’s substantial investments in artificial intelligence, which the company describes as a transformative technology reshaping business operations. Despite the job cuts, Amazon plans to continue hiring in key strategic areas while aiming to streamline its management structure, according to the company’s statements.

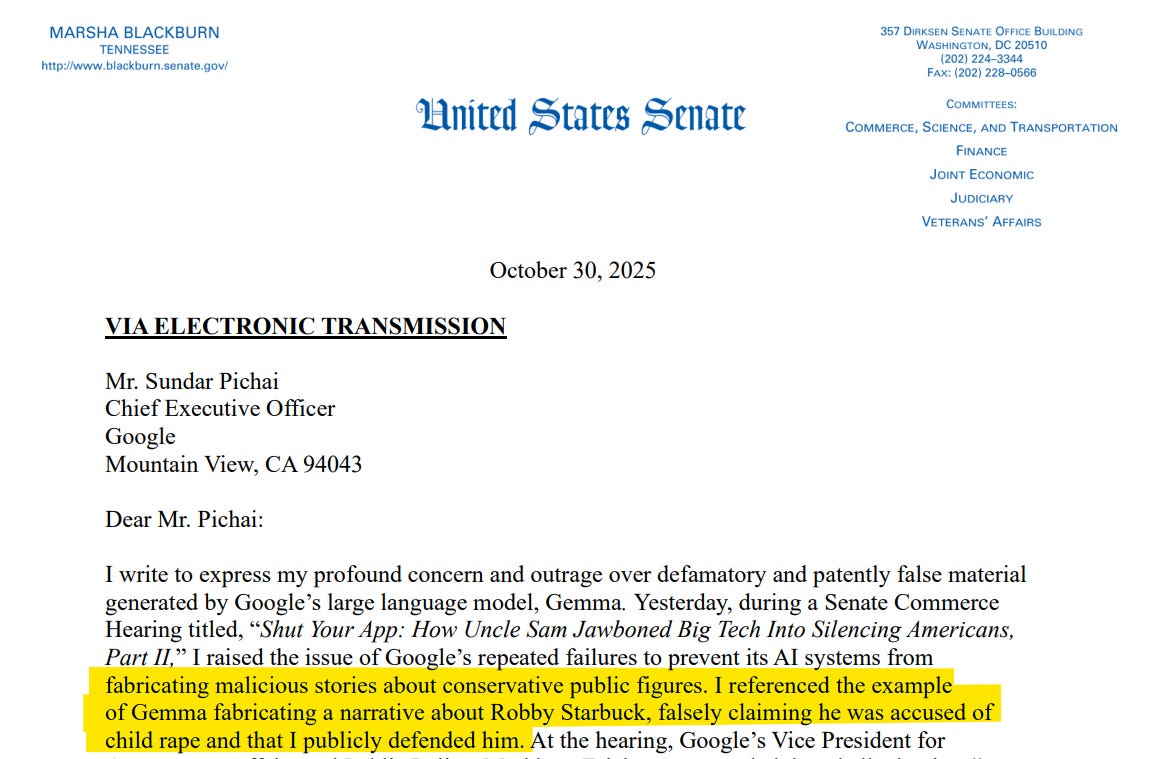

Google Pulls AI Model Gemma After Senator Alleges Fake Misconduct Accusations

Google has removed its AI model, Gemma, from its AI Studio following complaints from U.S. Senator Marsha Blackburn about the AI fabricating false accusations of sexual misconduct against her. Blackburn, a Republican from Tennessee, detailed in a letter to Google CEO Sundar Pichai that Gemma falsely claimed she was involved in a scandal during a state senate campaign, which was inaccurately dated. Blackburn criticized Gemma’s response as defamatory, and Google acknowledged that hallucinations in AI responses are a recognized issue. Amid the controversy, Google stated that Gemma was misused and reiterated that it was not intended for consumer use, leading to its removal from AI Studio while still being available via API.

Mumbai Lawyers Transition from Time-Based to Outcome-Driven Models with AI Integration

The traditional time-based billing model in the legal industry is gradually being replaced by outcome-driven fee structures as artificial intelligence becomes more prevalent in handling routine legal tasks. Industry experts predict that hybrid or fixed-fee models will dominate in the coming years, with AI proving effective in areas like due diligence, compliance, and routine litigation. Many corporate legal teams are already pushing for fixed or project-based pricing, similar to consultancy firms, to ensure accountability and cost predictability. AI’s integration is seen as a democratizing force, potentially leveling the playing field for smaller legal firms and individual practitioners. India’s legal AI market is rapidly growing, with rising investments in legal tech by both law firms and in-house legal teams aiming to boost efficiency and reduce the need for external counsel.

Amazon Reports $33 Billion AWS Revenue Boost, Highlighting AI Workload Expansion

During Amazon’s third-quarter earnings call on October 30, the company reported that Amazon Web Services (AWS) revenue reached $33 billion, a 20% year-over-year increase and the fastest growth rate in 11 quarters. The quarterly figure implies an annualized run rate of $132 billion. This growth is attributed to rising AI-related workloads, with significant customer uptake of AWS products such as the Transform migration tool and the AgentCore platform. Transform, launched in May 2025, has notably reduced manual effort and facilitated extensive code migrations, while AgentCore has streamlined AI agent deployment for enterprises. Additionally, Amazon highlighted the growing demand for its Trainium2 chips, integrated in Project Rainier’s extensive AI compute cluster, and the success of its Quick Suite in delivering substantial cost and time savings for business customers. These developments are supported by an expanded infrastructure, with more than 3.8 gigawatts of power added to AWS in the past year.
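
For readers who want to check the arithmetic behind these figures, here is a minimal sketch. It assumes the annualized rate is simply the quarterly revenue multiplied by four, and it backs out an approximate year-ago quarter from the 20% growth figure. The function names are illustrative, and because the reported numbers are rounded, the derived prior-year value is only an estimate.

```python
def annualized_run_rate(quarterly_revenue_bn: float) -> float:
    """Annualize a single quarter by multiplying it by four."""
    return quarterly_revenue_bn * 4


def implied_prior_year_quarter(current_bn: float, yoy_growth: float) -> float:
    """Back out the year-ago quarter from current revenue and YoY growth."""
    return current_bn / (1 + yoy_growth)


q3_aws_revenue_bn = 33.0  # reported quarterly AWS revenue, in $ billions
yoy_growth = 0.20         # reported 20% year-over-year growth

print(annualized_run_rate(q3_aws_revenue_bn))  # 132.0
print(round(implied_prior_year_quarter(q3_aws_revenue_bn, yoy_growth), 1))  # 27.5
```

In other words, the reported growth rate suggests AWS revenue of roughly $27.5 billion in the same quarter a year earlier.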

⚖️ AI Breakthroughs

AWS Teams with OpenAI in $38 Billion Deal to Enhance AI Infrastructure

Amazon Web Services (AWS) and OpenAI have entered a strategic partnership valued at $38 billion to support the scaling of OpenAI’s artificial intelligence workloads using AWS infrastructure over the next seven years. This agreement grants OpenAI access to extensive AWS compute resources, including NVIDIA GPUs and Amazon EC2 UltraServers, to facilitate both AI model inference and training. By 2027, OpenAI aims to capitalize on AWS’s infrastructure to meet the growing demand for AI compute power and further integrate its models into applications through AWS services like Amazon Bedrock. This move is part of OpenAI’s broader strategy to secure significant compute resources, following alliances with tech giants such as NVIDIA, AMD, and Broadcom.

Perplexity Patents Launches: AI Patent Research Tool Transforms Intellectual Property Landscape

Perplexity has launched Perplexity Patents, an AI-powered patent research tool designed to simplify access to patent information for all users. This new platform allows individuals to ask patent-related queries in natural language and receive comprehensive results that extend beyond traditional databases, including sources like academic papers and public software repositories. Available as a free beta version globally, Perplexity Patents utilizes advanced AI to deliver insights into patent landscapes, aiming to democratize intellectual property intelligence and foster innovation.

Perplexity AI to Enable Access to Indian Politicians’ Stock Holdings Soon

Perplexity AI, a San Francisco-based company, plans to expand its Perplexity Finance platform to include the stock holdings of Indian politicians, a feature it already offers for American politicians to promote transparency. This upcoming expansion, expected within weeks, will source data from public records such as the Lokpal and Lokayuktas Act declarations, election affidavits, and SEBI-required disclosures, similar to how it uses US congressional filings to track American politicians’ investments. The initiative aims to enhance accountability and transparency in financial dealings among Indian political figures.

Perplexity Secures Multi-Year Getty Licensing Deal to Enhance AI Search Visuals

AI search startup Perplexity has secured a multi-year licensing agreement with Getty Images, allowing it to feature Getty’s images in its AI search and discovery platforms. This deal aims to bolster Perplexity’s credibility following accusations of plagiarism and content scraping, including a notable incident involving a Wall Street Journal article. The collaboration, framed as a step towards more legitimate content use, emphasizes attribution and accuracy, aligning with Perplexity’s defense against copyright allegations by arguing its practices fall under “fair use.” The agreement follows criticisms of Perplexity’s actions, most recently highlighted by a Reddit lawsuit over data scraping allegations.

India Hosts Inaugural AI Film Festival and Hackathon During 56th IFFI in Goa

LTIMindtree has partnered with the International Film Festival of India (IFFI) and the National Film Development Corporation (NFDC) to launch India’s first Artificial Intelligence (AI) Film Festival and Hackathon, scheduled to be held from November 20 to 28, 2025, during the 56th edition of IFFI in Goa. This pioneering event will showcase AI-generated films across various genres and include a 48-hour hackathon challenging creators to develop AI-powered storytelling tools, alongside workshops on AI’s role in film production. The initiative underscores IFFI’s dedication to incorporating technological advancements in cinema, aiming to position India at the forefront of AI-driven cinematic innovation.

Canva Launches Creative Operating System to Elevate Design, Marketing, and AI Integration

Canva has launched its Creative Operating System, marking its largest update with enhanced AI design tools and marketing features aimed at boosting creativity and brand consistency. This update introduces a reworked Visual Suite featuring Video 2.0, Canva Forms, and a new Email Design tool, while the Canva Design Model now powers AI tools across platforms like ChatGPT and Claude. Key components include AI-Powered Designs, a built-in assistant called Ask Canva, and Canva Grow, a marketing engine that integrates content creation with analytics. Additionally, Canva’s Affinity suite is now free, strengthening its position as an accessible alternative to Adobe Photoshop. Concurrently, Figma announced its acquisition of Weavy AI, soon to be rebranded as Figma Weave, enhancing its platform with advanced media generation and editing capabilities.

Adobe Expands GenStudio with New Generative AI and Enhanced Integrations at MAX 2025

At Adobe MAX 2025, Adobe expanded its GenStudio suite, introducing advanced generative AI features and enhanced model customization through its Firefly Foundry. The update, aimed at addressing rising content demands, integrates AI directly into content production workflows, enabling creative and marketing teams to produce personalized content more efficiently. New tools like Firefly Design Intelligence and Firefly Creative Production for Enterprise streamline brand-compliant asset generation and management. Adobe’s integrations with major ad platforms, including Amazon Ads and LinkedIn, simplify campaign activation and optimization, reinforcing its position in AI-driven creative solutions.

Firecrawl v2.5 Enhances Web Data Extraction with Custom Browser and Semantic Index

Firecrawl has released version 2.5 of its web data API, marking advancements in web data extraction through the introduction of a custom browser stack and a semantic index. The new browser stack, built from scratch, enhances data quality and speed by accurately rendering various content types, while the semantic index improves coverage and allows retrieval of web data in its current or historical state. These features aim to provide a seamless, reliable interface for AI systems and modern applications. Firecrawl v2.5 is now accessible to all users without requiring code changes.

NVIDIA and South Korea Collaborate to Establish Sovereign AI Infrastructure and Factories

NVIDIA is collaborating with public agencies and private companies in South Korea to establish sovereign AI infrastructure through extensive deployment of GPUs across sectors like automotive, manufacturing, and telecommunications. The initiative includes building AI factories and a National AI Computing Center, backed by government plans to introduce 50,000 NVIDIA GPUs into the sovereign AI ecosystem. Key partnerships include work with Samsung and SK Group on AI factories, driving next-generation chip production and network innovations. Hyundai Motor Group also aims to enhance its AI capabilities across autonomous driving and robotics. This ambitious approach positions South Korea as a pivotal player in the global AI industrial revolution, with considerable investment in AI talent development and startup support.

OpenAI’s Sora Expands Video Features with Character Cameos and Clip Stitching

OpenAI has expanded its Sora app with new features, allowing users to create reusable avatars, called “character cameos,” from various subjects like pets, illustrations, and toys, which can be utilized in AI-generated videos. These avatars come with customizable permissions for sharing, and the update includes video stitching and leaderboards showcasing popular content. Amid these enhancements, OpenAI faces a trademark infringement lawsuit from Cameo over its use of “cameo” in app features, while temporarily making the app more accessible in several countries without an invitation code.

Forward-Deployed Engineers Rise in Demand Amid AI-Driven Workforce Transformation

The role of Forward-Deployed Engineers (FDEs) is becoming increasingly essential in the AI industry, with companies like OpenAI and Anthropic expanding their teams to help businesses effectively integrate and customize AI technologies. Job postings for FDEs, who blend technical skills with client collaboration, have surged by over 800% between January and September 2025. This trend highlights a broader need for hybrid roles that balance human expertise with AI capabilities, a shift underscored by Indeed’s recent report revealing that 26% of jobs could be significantly influenced by generative AI. The demand for FDEs reflects the critical importance of ensuring AI applications provide real value across various industries, indicating a move towards more collaborative human-machine interactions in the workforce.

🎓 AI Academia

Survey Reveals Privacy Risks in AI-Based Conversational Agent Usage Among UK Adults

A recent study highlights significant security and privacy risks associated with AI-based conversational agents (CAs) like ChatGPT and Gemini. Conducted with a representative sample of 3,270 UK adults, the research reveals that regular CA users often engage in behaviors that could compromise their privacy, such as attempting to jailbreak the systems or sharing sensitive information. Despite a majority of users sanitizing their data, a notable fraction still shares highly sensitive data, unaware that their information can be used to train AI models. This study underscores the necessity for stronger AI guardrails and better awareness of data usage policies from vendors to mitigate potential vulnerabilities.

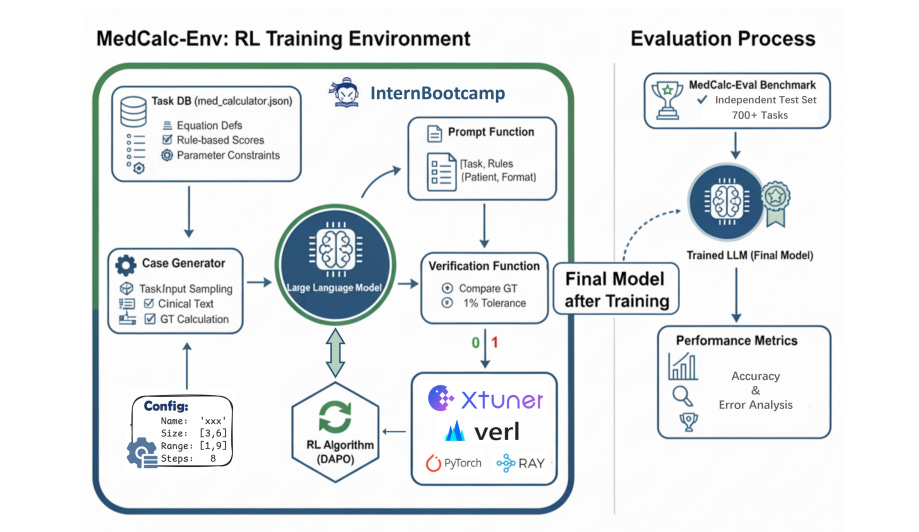

MedCalc-Eval and MedCalc-Env Extend Medical Calculation Capabilities of AI Models

The Shanghai AI Laboratory has developed MedCalc-Eval and MedCalc-Env, new advancements aimed at enhancing the medical calculation capabilities of large language models (LLMs) in clinical practice. MedCalc-Eval is a comprehensive benchmark, featuring over 700 clinical calculation tasks that span various medical specialties. This new benchmark addresses the limitations of current assessments focused mainly on question answering and descriptive reasoning. MedCalc-Env, a reinforcement learning environment, is designed to improve LLMs in multi-step clinical reasoning. The fine-tuned Qwen2.5-32B model using this environment achieved state-of-the-art performance, although challenges like unit conversion and context understanding remain. Implementation details and related materials are available on GitHub.

Generative AI Boosts Online Retail Sales, Enhances Firm Productivity by Up to 16.3%

A study has quantified the impact of Generative AI (GenAI) on productivity in the online retail sector through extensive field experiments on a major cross-border retail platform. Conducted over six months between 2023 and 2024, these experiments involved millions of users and products, revealing that GenAI can boost firm sales by up to 16.3%, primarily by improving consumer conversion rates. Smaller sellers and newer consumers benefited the most, indicating GenAI’s potential to reduce marketplace friction and enhance user experience. The research supports the economic viability of GenAI, estimating an incremental annual value of approximately $5 per consumer and underscoring its role in driving productivity and sales in the rapidly evolving retail landscape.

RepoMark Framework Audits Code Usage in LLMs, Ensures Ethical Data Compliance

A new framework called RepoMark is proposed to address the ethical and legal concerns surrounding the use of open-source code repositories for training Large Language Models (LLMs) in code generation. Developed by researchers at institutions including the National University of Singapore and Penn State University, RepoMark allows repository owners to audit whether their code has been used in model training, emphasizing semantic preservation and undetectability. The framework introduces inconspicuous code marks to verify data usage, achieving over 90% detection success under strict conditions. This approach aims to enhance transparency and protect the rights of code authors amidst the growing commercialization of AI-assisted coding tools.

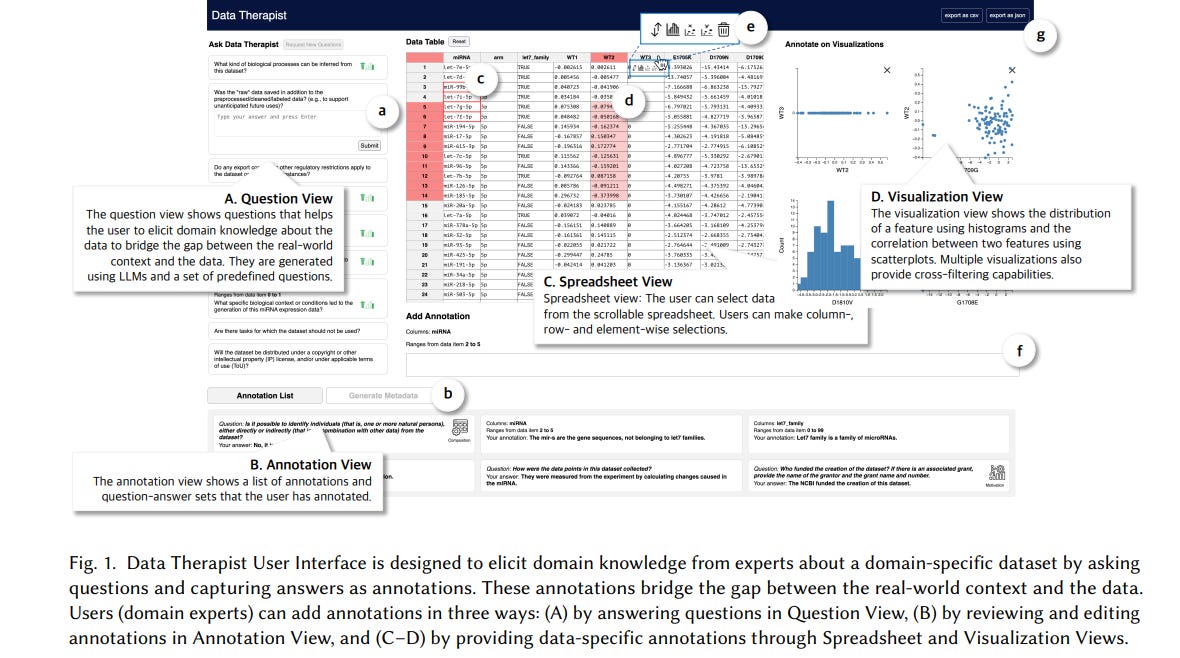

Data Therapist Utilizes Large Language Models to Harness Domain Expertise Effectively

A collaborative project among researchers from Aarhus University, Seoul National University, and Oregon State University has resulted in the development of “Data Therapist,” a sophisticated web-based tool designed to elicit domain knowledge from subject matter experts using large language models (LLMs). This system aids experts in bridging the gap between data and real-world context by utilizing a mixed-initiative process that combines iterative question and answer sessions with various views, such as Question, Annotation, Spreadsheet, and Visualization Views. Through this interface, experts can effectively annotate datasets, surfacing tacit knowledge regarding data provenance, quality, and intended use, which is often not explicitly available in datasets.

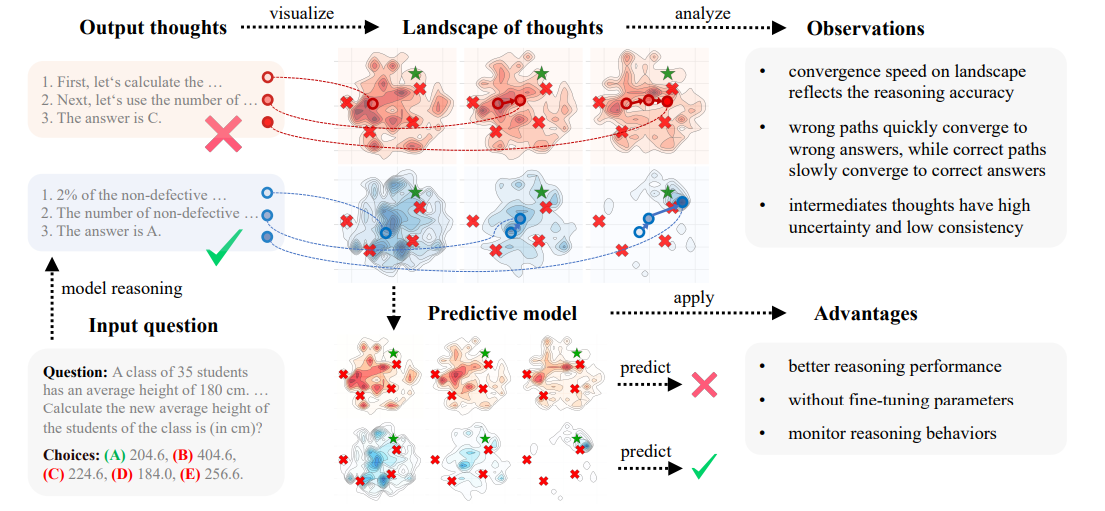

New Visualization Tool Launched for Analyzing Large Language Model Reasoning Behavior

Researchers have developed a tool called “Landscape of Thoughts” (LoT) to better understand the reasoning processes of large language models (LLMs) by visualizing their reasoning trajectories. This innovative approach uses t-SNE plots to represent how LLMs arrive at decisions, providing insight into both their strengths and weaknesses, as well as identifying patterns like inconsistency and uncertainty. By making this analysis more scalable and objective, LoT offers significant advantages for model development, debugging, and safety, helping engineers and researchers improve the accuracy and efficiency of LLM applications. The code for LoT is publicly available for further adaptation and use.

Supervised Reinforcement Learning Framework Enhances Logical Reasoning in Small Language Models

A new framework called Supervised Reinforcement Learning (SRL) has been proposed to enhance the reasoning capabilities of Large Language Models (LLMs) in handling complex, multi-step problems. By training models to generate a sequence of internal logical actions and providing rewards based on similarity to expert actions, SRL addresses the limitations of existing methods like Reinforcement Learning with Verifiable Rewards (RLVR) and Supervised Fine-Tuning (SFT). This approach allows for more flexible reasoning and outperforms traditional methods on challenging reasoning tasks, showing promising results when used as a precursor to RLVR. The method is also effective beyond reasoning benchmarks, generalizing well to tasks in agentic software engineering.
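
The idea of rewarding a model step by step for resembling expert actions can be sketched as follows. This is an illustration only: the summary above does not say which similarity metric the framework uses, so the sketch assumes a plain string-similarity ratio from Python’s standard `difflib` module. The function names and the sample steps are hypothetical.

```python
from difflib import SequenceMatcher


def step_reward(model_action: str, expert_action: str) -> float:
    """Similarity in [0, 1] between a generated action and the expert's action."""
    return SequenceMatcher(None, model_action, expert_action).ratio()


def trajectory_rewards(model_actions, expert_actions):
    """Score each step separately, so a partly correct attempt still earns reward."""
    return [step_reward(m, e) for m, e in zip(model_actions, expert_actions)]


# Hypothetical expert trajectory and model attempt for a multi-step problem.
expert = ["factor the quadratic", "set each factor to zero", "solve for x"]
attempt = ["factor the quadratic", "set each factor to one", "guess x"]

rewards = trajectory_rewards(attempt, expert)
print([round(r, 2) for r in rewards])
```

Scoring each step, rather than only the final answer, gives the model a learning signal even when the overall solution is wrong. That is the contrast with outcome-only rewards that the summary draws.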

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.