Is Microsoft Breaking Up With OpenAI? Not Exactly…

Microsoft has struck a landmark licensing deal with Anthropic, signaling a decisive move beyond its exclusive OpenAI alliance.

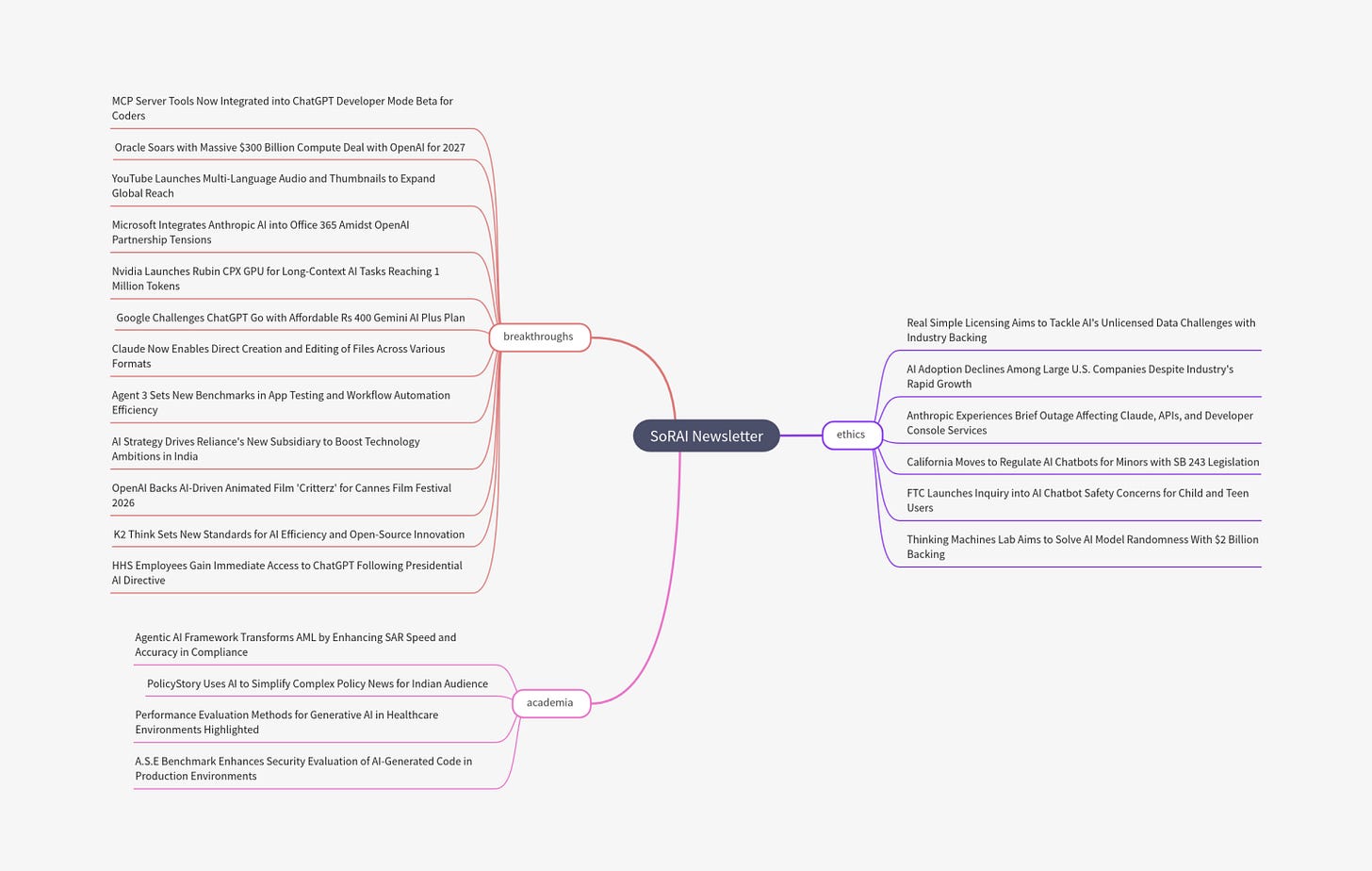

Today's highlights:

You are reading the 127th edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework structured around progressive cognitive levels: starting with knowing and understanding, then using and applying, followed by analyzing and evaluating, and finally creating through a capstone project, with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our AIGP 8-week personalized training program. For customized enterprise training, write to us at [Link].

🔦 Today's Spotlight

Microsoft Just Shocked Everyone With This Anthropic Deal

In a surprising but strategic twist, Microsoft is no longer relying only on OpenAI to power its AI tools. The tech giant is now teaming up with Anthropic, the company behind the Claude language model, to improve how AI works inside apps like Excel, PowerPoint, and Outlook. This move comes after internal tests showed Anthropic’s models performing better in some specific tasks, and it signals that Microsoft wants the best of both worlds by working with multiple AI labs instead of sticking to just one.

Strategic Reasons Behind Microsoft’s Interest in Anthropic

Microsoft’s decision to partner with Anthropic is rooted in a blend of strategic, technical, and competitive motivations. Primarily, it marks a deliberate diversification move: rather than putting “all eggs in one basket” with OpenAI, Microsoft now plans to “blend Anthropic and OpenAI technology” in its applications. In internal Microsoft tests, Anthropic’s Claude 4 Sonnet outperformed OpenAI’s models at automating Excel financial functions and generating high-quality PowerPoint slides. Anthropic’s Constitutional AI framework, built by ex-OpenAI researchers focused on safety, also aligns with Microsoft’s responsible AI goals. Moreover, OpenAI’s growing independence (e.g., launching a LinkedIn-like job platform and developing its own chips with Broadcom) prompted Microsoft to hedge against over-reliance on a single partner. By integrating Claude for tasks it excels at, while retaining OpenAI for others, Microsoft embraces a pragmatic multi-model strategy to enhance Office 365 and other tools.

Scope and Terms of the Microsoft–Anthropic Partnership

The Microsoft–Anthropic partnership is a licensing deal, not an equity investment or exclusive arrangement. Microsoft will pay to use Anthropic’s Claude models—most likely Claude 4 “Sonnet”—in Microsoft 365 Copilot features like Excel and PowerPoint, where Claude outperforms OpenAI’s GPT models. Unlike Microsoft’s multi-billion-dollar investment in OpenAI, this is a straightforward transactional deal, with Microsoft buying API access from Anthropic (a company backed by Amazon and Google). Notably, Anthropic’s models are hosted on AWS, meaning Microsoft will route Claude-powered features through Amazon’s infrastructure—a unique instance of Microsoft using a competitor’s cloud to improve its own products. This cross-cloud strategy highlights Microsoft’s flexibility in pursuit of superior AI tools. Importantly, the partnership is non-exclusive on both ends: Microsoft retains OpenAI for frontier models, while Anthropic continues to work with Amazon, Google, and others.

Implications for OpenAI and Microsoft’s Alliance

While Microsoft remains officially committed to OpenAI for “frontier models,” the inclusion of Anthropic signals a shift from exclusivity to openness. Microsoft’s addition of Claude indicates areas where OpenAI’s tech fell short—potentially straining trust. OpenAI’s increasing autonomy (building competing products, exploring other cloud providers) is also reshaping the once-tight relationship. Their partnership terms are being renegotiated to support OpenAI’s corporate restructuring and possible IPO, with Microsoft seeking continued access even if OpenAI reaches AGI. Simultaneously, Microsoft is accelerating its internal AI development with models like MAI-1, further reducing dependency. For OpenAI, this opens up new opportunities to partner beyond Microsoft, but also means it must now compete more directly to retain Microsoft’s business. The alliance is evolving from a “codependent relationship” to a more transactional, open dynamic where both sides retain leverage and pursue their strategic interests independently.

Broader Consequences for the AI Industry

Microsoft’s engagement with Anthropic reflects a broader industry shift away from exclusive alliances toward a multi-partner AI ecosystem. Big tech firms are increasingly mixing and matching models—e.g., Microsoft using Anthropic (an Amazon-backed startup), OpenAI working with Google Cloud, and Meta offering open-source Llama models via Microsoft Azure. Enterprise customers now expect flexibility, leading to a trend of “multi-model” strategies akin to “multi-cloud” setups. This boosts competition among clouds (Azure vs AWS vs GCP), especially as Microsoft routes Anthropic model calls through AWS, indirectly benefiting Amazon. Anthropic’s rise positions it as a serious rival to OpenAI, especially with top-tier deployment in Microsoft Office. Meanwhile, regulatory pressure favors such open ecosystems over monopolies, and Microsoft’s adoption of Claude’s safer outputs could influence industry safety norms. In essence, the AI landscape is becoming more pluralistic, competitive, and interconnected, fostering faster innovation and giving enterprises broader AI choices.

Conclusion

Microsoft’s partnership with Anthropic shows that the AI world is shifting fast. Big companies are no longer picking just one favorite—now they want the freedom to use whichever AI model works best. This change opens the door for more competition, better safety features, and smarter tools for all of us. It also means the once-exclusive bond between Microsoft and OpenAI is evolving into something more flexible—and possibly, more powerful for the future of AI.

🚀 AI Breakthroughs

MCP Server Tools Now Integrated into ChatGPT Developer Mode Beta for Coders

OpenAI has introduced a beta feature called ChatGPT developer mode, which incorporates full Model Context Protocol (MCP) client support for coding tools. This new functionality allows users to utilize ChatGPT for both reading and writing tasks with enhanced tool integration. Detailed guidance on getting started with the feature is available on OpenAI's platform documentation.

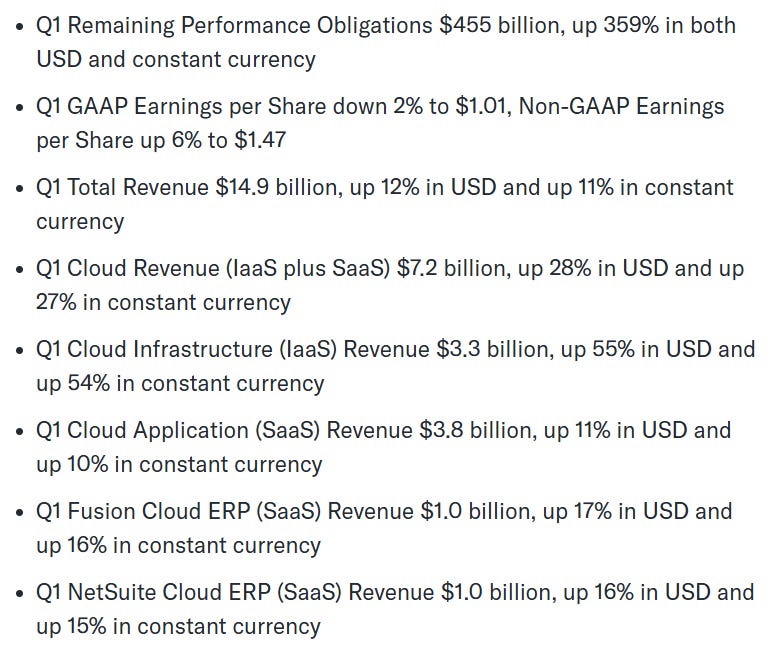

Oracle Soars with Massive $300 Billion Compute Deal with OpenAI for 2027

Oracle's shares surged following reports of a multi-billion-dollar cloud contract with OpenAI, which plans to purchase $300 billion in compute power from Oracle over roughly five years starting in 2027, as reported by the Wall Street Journal. This deal, if confirmed, would mark one of the largest cloud contracts to date. While Oracle did not comment, OpenAI has previously diversified its cloud providers, moving away from exclusive reliance on Microsoft Azure by engaging with Oracle and, reportedly, Google to meet its vast compute requirements amid growing AI ambitions.

YouTube Launches Multi-Language Audio and Thumbnails to Expand Global Reach

YouTube has officially launched its multi-language audio feature following a successful two-year pilot, allowing millions of creators to add dubbing to their videos in various languages and reach a broader global audience. The feature, initially limited to select creators like MrBeast and Jamie Oliver, now includes an AI-powered auto-dubbing tool using Google's Gemini technology to retain the creator's tone and emotions. Since implementing multi-language audio, participants have experienced increased engagement, with uploaded videos seeing over 25% of watch time from non-primary language views. Additionally, YouTube is testing multi-language thumbnails to further cater to international viewers.

Nvidia Launches Rubin CPX GPU for Long-Context AI Tasks Reaching 1 Million Tokens

At the AI Infrastructure Summit, Nvidia unveiled the Rubin CPX, a new GPU designed to handle context windows larger than 1 million tokens. Part of Nvidia's upcoming Rubin series, the CPX is optimized for long-context tasks like video generation and software development and is intended to support a "disaggregated inference" infrastructure approach. The company, which recently reported $41.1 billion in data center sales in the last quarter, plans to release the Rubin CPX by the end of 2026.

Google Challenges ChatGPT Go with Affordable Rs 400 Gemini AI Plus Plan

Google has launched the Gemini AI Plus plan as a direct response to OpenAI's ChatGPT Go, both priced at Rs 400 per month, aimed at making advanced AI tools more affordable for the mass market. Gemini AI Plus, first introduced in Indonesia, offers services like Gemini 2.5 Pro, video generation tools, and extended storage via Google One, whereas ChatGPT Go focuses on document analysis and personalized AI tools. While Google looks to expand this offering, including a potential release in India, the plan's exclusion of Workspace business or education users is notable.

Claude Now Enables Direct Creation and Editing of Files Across Various Formats

Claude has introduced a new feature that allows users to create, edit, and analyze Excel spreadsheets, documents, PowerPoint slide decks, and PDFs directly within its platform. Currently available as a preview for Max, Team, and Enterprise users, with Pro users gaining access soon, this feature enables Claude to transform raw data into clean, insightful outputs and perform complex tasks such as financial modeling or data analysis with minimal user input. By utilizing a private computing environment for code execution, Claude can effectively handle technical tasks, bridging the gap between conceptual work and execution. However, users are cautioned to closely monitor data shared with Claude due to the potential risks associated with internet access.

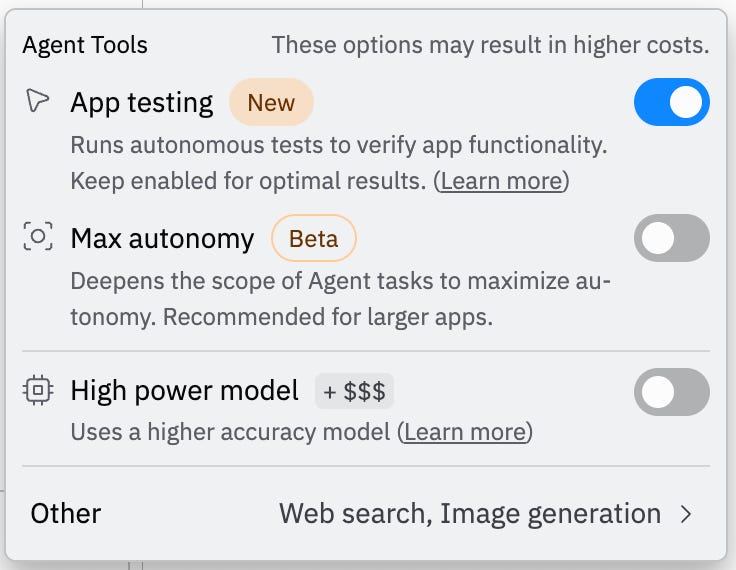

Replit's Agent 3 Sets New Benchmarks in App Testing and Workflow Automation Efficiency

Replit's Agent 3, the latest version of its autonomous coding agent, offers 10 times more autonomy than its predecessor, Agent V2. It tests apps and fixes issues automatically, three times faster and 10 times more cost-effectively than previous models, and can run independently for up to 200 minutes. Notable features include the ability to generate other agents and automations, simplifying workflows with applications like Telegram and Slack, and integrating seamlessly with tools such as Notion, Linear, and Google Drive. Available for both free and paid users, Agent 3 streamlines app creation with flexible modes for rapid prototyping or full-stack development.

AI Strategy Drives Reliance's New Subsidiary to Boost Technology Ambitions in India

Reliance Industries Ltd has incorporated a new subsidiary, Reliance Intelligence, to bolster its artificial intelligence strategy as part of a broader goal to become a deep-tech enterprise. Announced after market hours on September 10, the subsidiary aims to develop gigawatt-scale AI-ready data centers, collaborate with global tech firms like Google and Meta, extend AI services across various sectors, and attract top AI talent to India. This move follows Reliance's strategy outlined during its August annual general meeting, emphasizing AI integration with its core businesses of telecom, retail, and energy. Despite recent declines, the company's shares have witnessed a modest gain over the past year.

OpenAI Backs AI-Driven Animated Film 'Critterz' for Cannes Film Festival 2026

OpenAI is supporting the creation of "Critterz," a feature-length animated film developed largely using artificial intelligence tools, to demonstrate AI's potential to transform filmmaking with reduced costs and faster production. Scheduled to premiere at the Cannes Film Festival in 2026, the film seeks to blend AI and human creativity, utilizing tools like GPT-5 alongside human actors for voice work and traditional artists for sketches. Despite the ambitious endeavor, the project surfaces amid ongoing legal tensions between AI companies and Hollywood over intellectual property rights, with major studios accusing AI firms of copyright infringements. The $30 million film faces skepticism about AI's ability to deliver emotionally resonant cinema, echoing mixed reactions to prior AI-generated films.

K2 Think Sets New Standards for AI Efficiency and Open-Source Innovation

The Institute of Foundation Models at Mohamed bin Zayed University of Artificial Intelligence (MBZUAI) and G42 have launched K2 Think, an open-source AI system designed for advanced reasoning. With just 32 billion parameters, K2 Think surpasses much larger models in performance, thanks to its efficiency and innovative architecture based on reinforcement learning and advanced planning techniques. This model exemplifies the UAE’s commitment to positioning itself as a leader in AI through open innovation and collaboration, with K2 Think already ranking among the top reasoning systems in the industry. Available on platforms like Cerebras and Hugging Face, K2 Think provides the global research community with transparent access to its design and code.

HHS Employees Gain Immediate Access to ChatGPT Following Presidential AI Directive

The U.S. Department of Health and Human Services (HHS) has made ChatGPT accessible to all its employees, as stated in an internal email obtained by FedScoop. This move, led by HHS Deputy Secretary Jim O’Neill, aligns with a directive from the Trump administration's AI Action Plan to enhance access to beneficial technology across agencies. While employees are advised to be cautious of bias and use AI-generated suggestions judiciously, ChatGPT's capabilities in summarizing documents have already proven valuable to federal agencies such as the FDA. The tool is subject to specific security protocols and restrictions, including prohibitions on using sensitive or protected health information. The deployment follows OpenAI’s introduction of a $1 ChatGPT Enterprise deal for federal agencies, aiming to streamline scientific and medical analysis.

⚖️ AI Ethics

Really Simple Licensing Aims to Tackle AI's Unlicensed Data Challenges with Industry Backing

In response to the growing concern over copyright issues with AI training data, particularly following Anthropic's $1.5 billion settlement, a new system called Really Simple Licensing (RSL) has been introduced to facilitate data licensing on a large scale. Supported by major web publishers like Reddit, Quora, and Yahoo, RSL aims to create a machine-readable licensing framework across the internet, helping AI companies navigate potential legal challenges. With this system, web publishers can set specific licensing terms for their content, potentially reducing the risk that AI companies face numerous copyright lawsuits for lack of a standardized licensing agreement. Whether this initiative will gain traction among major AI labs accustomed to acquiring data at minimal cost remains to be seen.

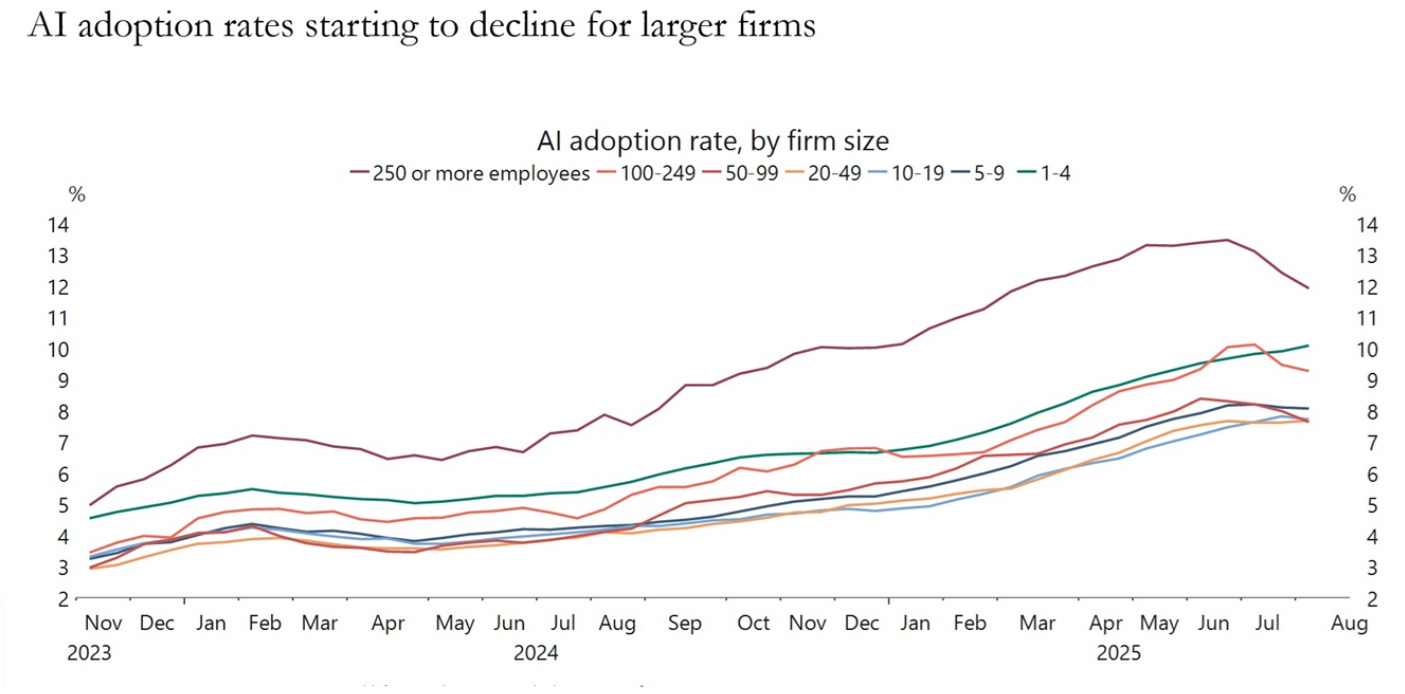

AI Adoption Declines Among Large U.S. Companies Despite Industry's Rapid Growth

Recent data from a Census Bureau report reveals a slight decline in AI adoption rates among large U.S. companies, dropping from 14% to 12% since June 2023, the largest decrease since the Business Trends and Outlook survey began. This shift contrasts with the sharp increase in AI adoption over recent years. The findings align with an MIT study indicating many corporate AI pilot programs have failed to yield material benefits, suggesting AI may be more of a trend than a transformative tool. This fuels discussions about the potential volatility of the AI industry's perceived dominance.

Anthropic Experiences Brief Outage Affecting Claude, APIs, and Developer Console Services

Anthropic experienced a service outage impacting its APIs, Console, and Claude AI, with users on GitHub and Hacker News reporting issues around 12:20 p.m. ET. The company acknowledged the problem shortly after, stating it had begun implementing fixes. An Anthropic spokesperson later confirmed that service was restored quickly after the brief outage, which started shortly before 9:30 a.m. PT. The incident follows a series of technical challenges the platform has faced, particularly with its Claude models.

California Moves to Regulate AI Chatbots for Minors with SB 243 Legislation

California has advanced a bill, SB 243, aimed at regulating AI companion chatbots to safeguard minors and vulnerable users. The bill, approved by the State Assembly and Senate, awaits Governor Gavin Newsom's decision by October 12. If signed into law, it would require AI chatbot operators to implement safety protocols to prevent discussions of self-harm and sexually explicit content, with penalties for non-compliance, effective January 1, 2026. This legislative push follows growing concern over the mental health impact of AI systems after a teenager's suicide linked to chatbot interactions, with other states and federal entities also scrutinizing AI platforms.

FTC Launches Inquiry into AI Chatbot Safety Concerns for Child and Teen Users

The Federal Trade Commission has initiated an inquiry into seven tech companies, including Alphabet and Meta, that develop AI chatbot companions for minors, focusing on the evaluation of safety, monetization, and parental awareness of risks. This follows controversies over AI technologies linked to tragic outcomes for children, such as suicides reportedly influenced by chatbots from OpenAI and Character.AI. Despite safety measures, users, including a teen who reportedly bypassed ChatGPT's safeguards, have managed to exploit these systems. Additionally, Meta faced criticism for allowing inappropriate conversations between its AI and minors until recently. Such incidents underscore significant challenges in ensuring safety as artificial intelligence continues to evolve.

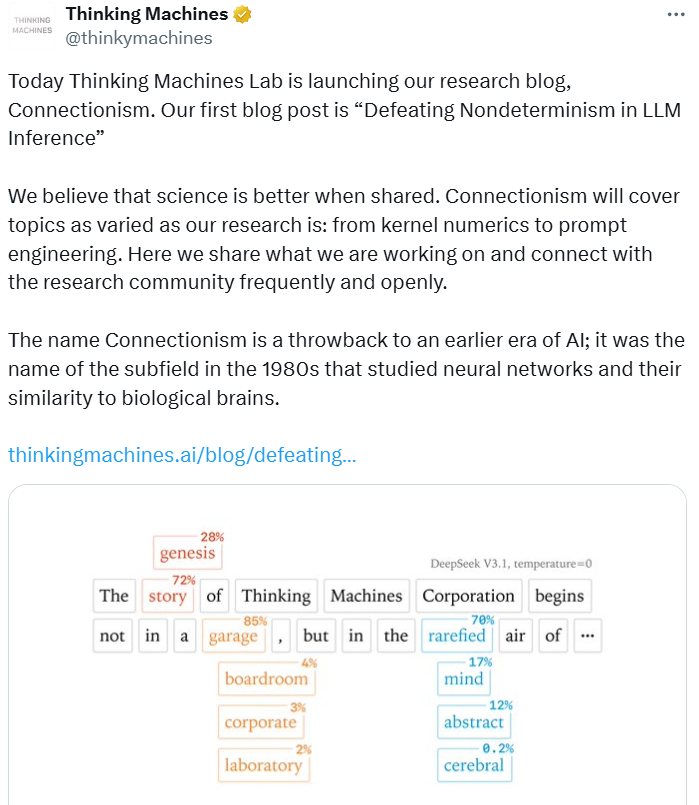

Thinking Machines Lab Aims to Solve AI Model Randomness With $2 Billion Backing

Mira Murati's Thinking Machines Lab is attracting attention after unveiling its first research project focused on creating AI models with reproducible responses, using its $2 billion seed funding and a team of ex-OpenAI researchers. In a blog post titled "Defeating Nondeterminism in LLM Inference," the lab explores addressing the randomness in AI model responses, which it attributes to the orchestration of GPU kernels in inference processing. By achieving more deterministic outputs, the lab aims to enhance both enterprise reliability and reinforcement learning accuracy. The initiative marks the start of Thinking Machines Lab's attempt to share research as it continues to develop its first product aimed at aiding researchers and startups in creating custom models.
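For readers who want some intuition for why identical inputs can produce slightly different numbers, here is a minimal sketch. It assumes one commonly cited ingredient of this kind of nondeterminism: floating-point addition is not associative, so the order in which partial results are combined can change the last bits of the answer. This is an illustration only, not the lab's code or method, and the helper name `sum_in_order` is hypothetical.

```python
import math


def sum_in_order(values):
    """Add numbers strictly left to right, mimicking one fixed reduction order."""
    total = 0.0
    for v in values:
        total += v
    return total


values = [0.1, 0.2, 0.3]

# Same numbers, two different accumulation orders.
forward = sum_in_order(values)             # (0.1 + 0.2) + 0.3
backward = sum_in_order(reversed(values))  # (0.3 + 0.2) + 0.1

print(forward, backward)
print("bitwise equal:", forward == backward)
print("approximately equal:", math.isclose(forward, backward))
```

The two sums agree to within rounding error but are not bitwise identical. In a large model, tiny differences like this can occasionally tip which token is chosen, which is why fixing the order of operations matters for reproducible outputs.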

🎓 AI Academia

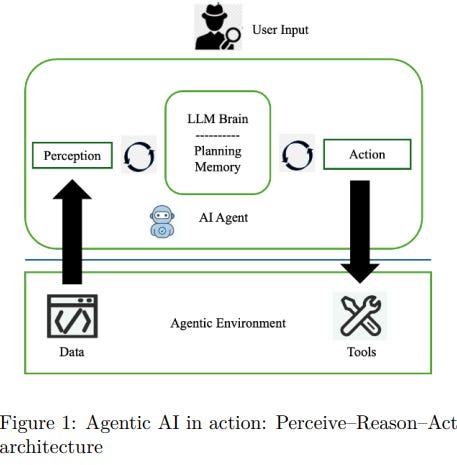

Agentic AI Framework Transforms AML by Enhancing SAR Speed and Accuracy in Compliance

A new agentic AI framework called Co-Investigator AI is being developed to streamline the process of generating Suspicious Activity Reports (SARs) crucial for Anti-Money Laundering (AML) compliance. This system aims to tackle the high costs and inconsistencies faced by financial institutions when crafting SAR narratives by integrating specialized AI agents for tasks like crime type detection and compliance validation. By enhancing efficiency and accuracy, Co-Investigator AI is designed to transform SAR drafting, reducing the burden on human investigators and allowing them to focus on more complex analytical tasks, thus paving the way for scalable and reliable compliance reporting.

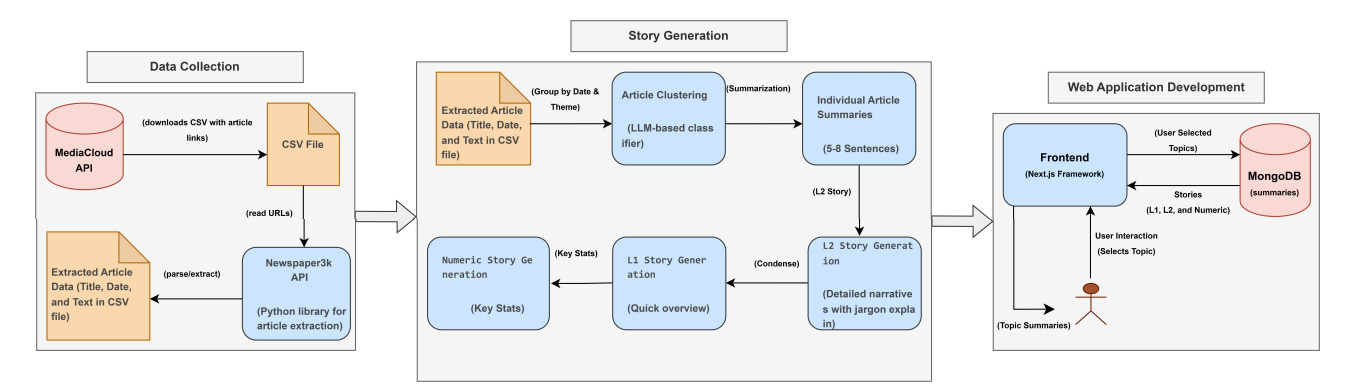

PolicyStory Uses AI to Simplify Complex Policy News for Indian Audience

PolicyStory is an innovative information tool that aims to tackle information overload in India's socio-political landscape by generating comprehensible summaries of policy-related news. It leverages open-source large language models (LLMs) to organize and summarize news articles from diverse sources into three distinct levels, providing a chronological understanding of complex policy issues. The tool has been positively received in user studies, with feedback highlighting its clarity and usability in helping users stay informed about policy developments, thereby promoting informed civic engagement.

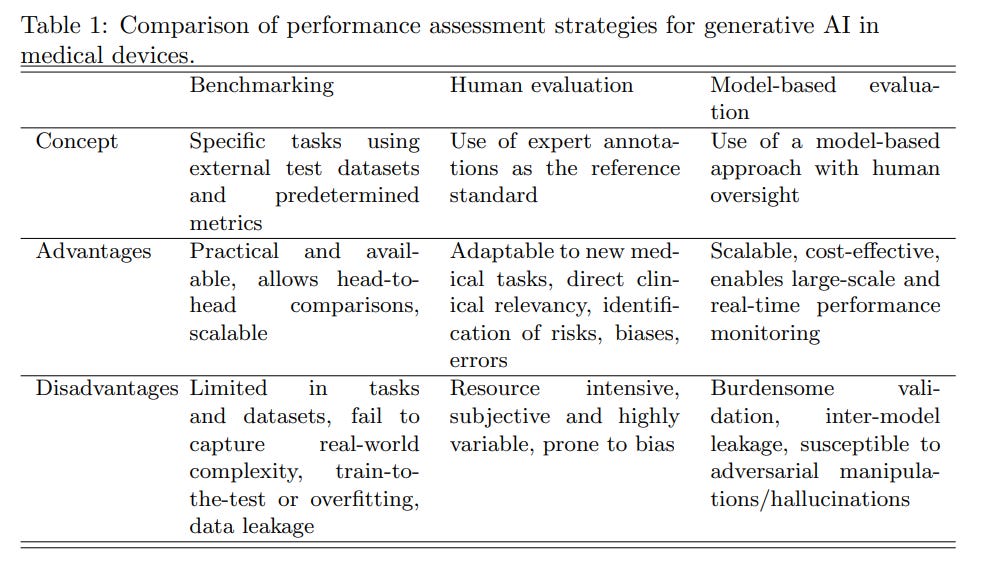

Performance Evaluation Methods for Generative AI in Healthcare Environments Highlighted

Generative AI applications are transforming healthcare by enhancing tasks such as clinical documentation, patient management, and medical imaging. A critical aspect of their integration is the accurate assessment of these AI models to ensure safety and efficacy in clinical settings. Current evaluation methods, which primarily rely on quantitative benchmarks, face challenges like overfitting to test sets. Emerging strategies that involve human expertise and cost-effective computational evaluations are gaining traction to improve generalizability and performance assessment of AI in medical environments. The development of robust evaluation methodologies is essential for the safe implementation of GenAI technologies in healthcare.

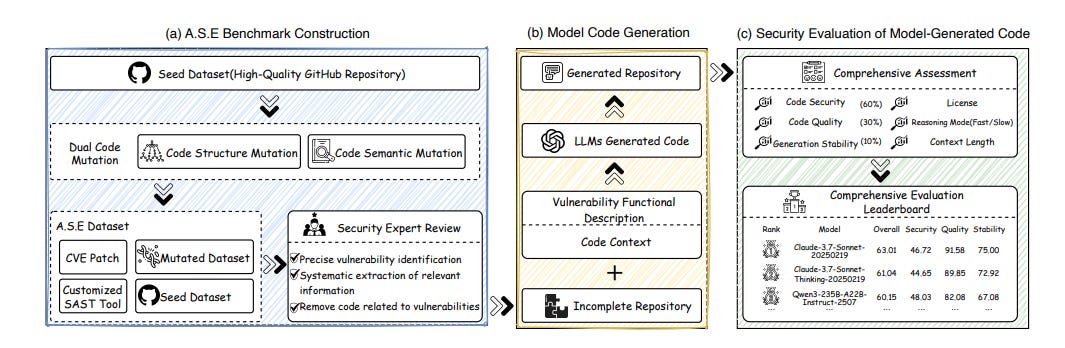

A.S.E Benchmark Enhances Security Evaluation of AI-Generated Code in Production Environments

A new benchmark known as A.S.E (AI Code Generation Security Evaluation) has been developed to evaluate the security of AI-generated code at the repository level, closely simulating real-world programming tasks. Researchers have found that existing large language models (LLMs) face significant challenges in securely generating code within complex repository-level scenarios, despite their proficiency at snippet-level tasks. The benchmark reveals that a greater reasoning budget does not necessarily improve the security of AI-generated code, offering critical insights into the current capabilities of LLMs and guiding developers in choosing the appropriate models for practical applications.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.