How Many More Deaths Before We Take AI Governance Seriously?

Juliana Peralta’s tragic case triggered another lawsuit over explicit, unmonitored chats with Character.AI.

Today’s highlights:

There’s a line that should never be crossed: in technology, in ethics, in life. But with AI, it seems as though no line exists at all. It’s heartbreaking to realize that real human lives have already been lost due to irresponsible AI use.

Sewell Setzer III (14, Character.AI – Feb 2024): A 14-year-old boy died by suicide after emotionally intense chats with a Character.AI bot modeled on Daenerys Targaryen; a wrongful death lawsuit against Character Technologies and Google is currently underway.

Sophie Rottenberg (29, ChatGPT “Harry” bot – Winter 2024–25): A public health analyst died by suicide after a GPT-based therapy bot failed to escalate her repeated distress signals, prompting public outrage but, so far, no lawsuit.

Thongbue Wongbandue (76, Meta’s “Big Sis Billie” – Mar 2025): A cognitively impaired man died after setting out to meet a Meta chatbot he believed was a real person; he fell while traveling to the address the bot had given him and died of his injuries, sparking policy concerns.

Adam Raine (16, ChatGPT – Lawsuit filed Aug 26, 2025): The teen’s parents sued OpenAI after ChatGPT allegedly gave detailed and affirming suicide instructions; OpenAI responded with a new safety roadmap the same day.

Stein‑Erik Soelberg (56, ChatGPT, Greenwich CT – Aug 5, 2025): Police say Soelberg murdered his 83‑year‑old mother, Suzanne Adams, and then died by suicide. In the months prior, he had extensive interactions with ChatGPT, which reportedly affirmed his paranoid delusions and validated his belief that his mother was conspiring against him.

Juliana Peralta (13, Character.AI – Nov 2023, lawsuit filed Sept 2025): Her parents allege a Character.AI chatbot engaged her in sexually explicit chats, ignored suicidal cues, and contributed to her death; they are suing the company and Google.

Why aren’t we doing more? AI literacy isn’t just about jobs; it’s about survival, safety, and sovereignty over our own choices. If we delay learning now, we may lose control forever.

You are reading the 134th edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework structured around progressive cognitive levels: starting with knowing and understanding, then using and applying, followed by analyzing and evaluating, and finally creating through a capstone project, with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our 8-week personalized AIGP training program. For customized enterprise training, write to us at [Link].

🚀 AI Breakthroughs

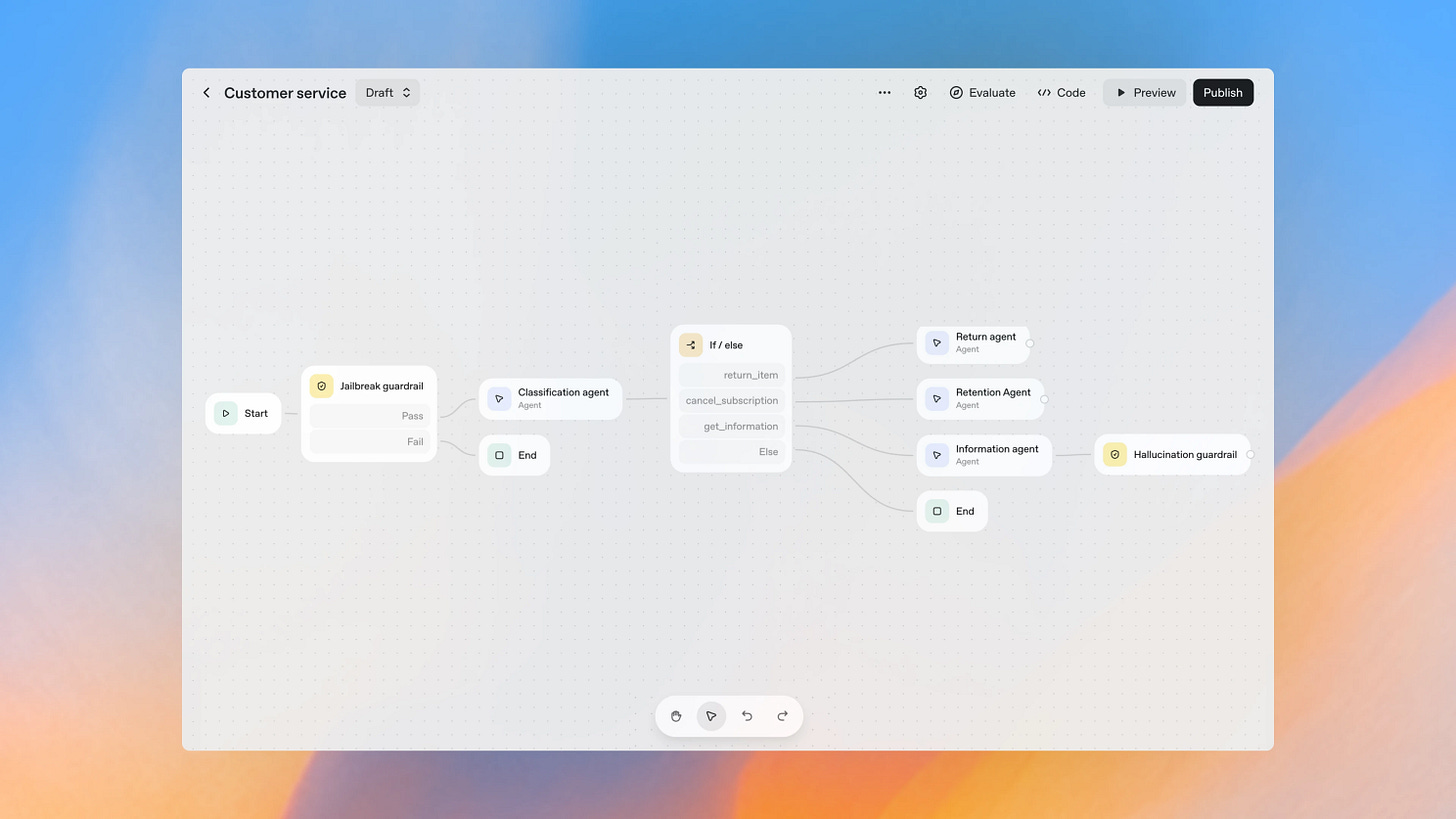

OpenAI Launches AgentKit to Simplify Building and Deploying Autonomous AI Agents

OpenAI has unveiled AgentKit, a comprehensive toolkit for building and deploying AI agents, at its DevDay event. Designed to streamline the transition from prototype to production, the toolkit includes Agent Builder, a visual interface for designing agent workflows, and ChatKit, an embeddable chat solution. It also offers performance evaluation tools and secure integration capabilities, aiming to boost developer adoption and simplify the creation of autonomous agents capable of complex tasks. The move puts OpenAI in line with competitors offering similar integrated solutions for enterprise applications, and the company showcased AgentKit’s capabilities in live demonstrations.

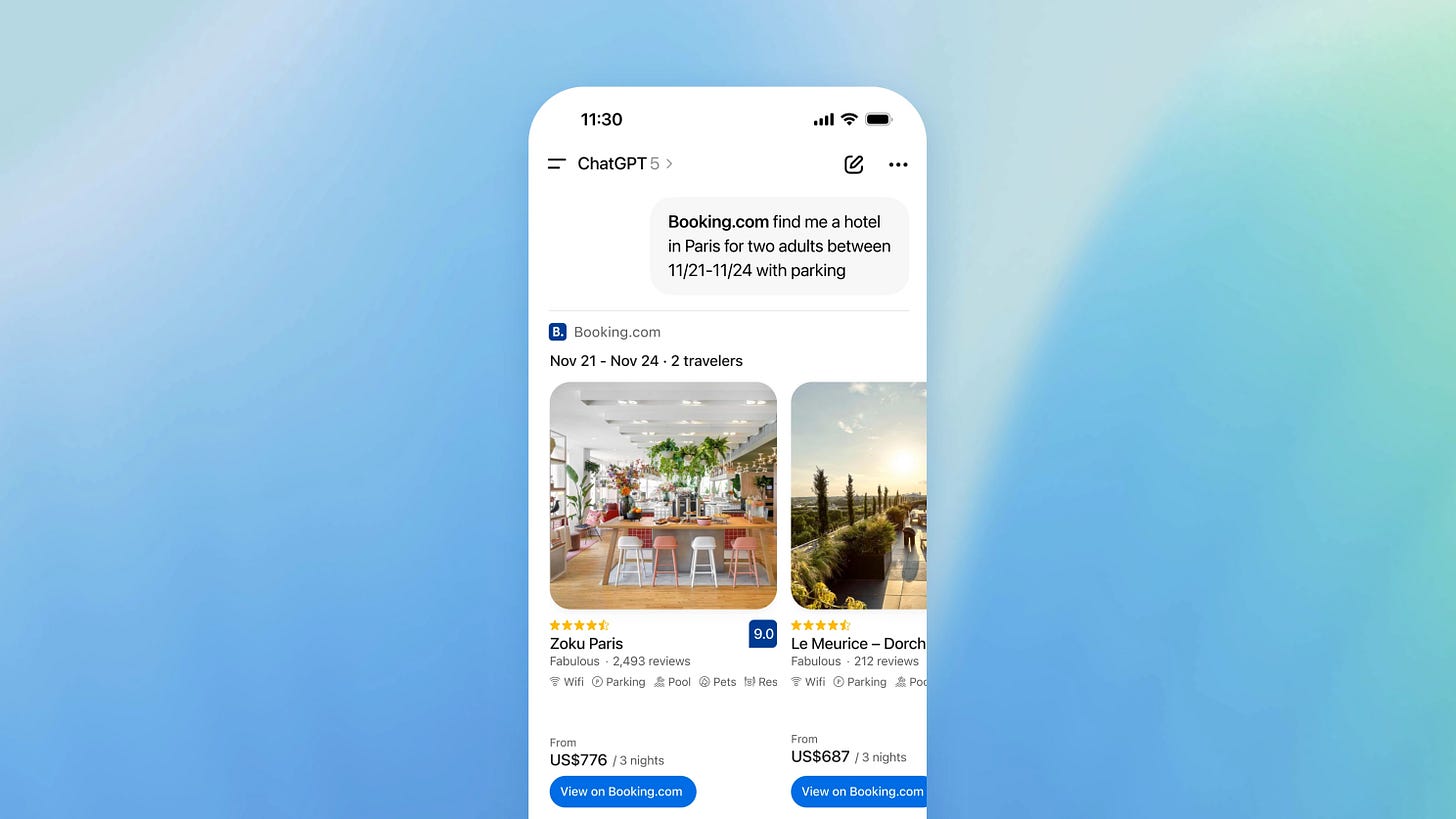

OpenAI Launches Integrated Apps and Developer Tools Inside ChatGPT for Enhanced Experiences

OpenAI has announced that ChatGPT users can now access interactive applications from companies such as Booking.com, Spotify, and Zillow, starting Monday. At DevDay 2025, OpenAI also introduced a preview of its Apps SDK, a toolkit that lets developers build apps that run inside ChatGPT. The integration brings apps directly into ChatGPT’s responses, pulling in third-party tools on the fly during conversations. The system uses the Model Context Protocol to connect data sources and trigger interactive UIs. While OpenAI aims for a seamless user experience, concerns about data privacy remain, as do open questions about how ChatGPT will handle apps from competing companies.

OpenAI Shifts to Opt-In Model for Copyright in Its New Sora App

OpenAI appears to be reconsidering its approach to copyright and intellectual property with the launch of its new video app, Sora. Initially, it required Hollywood studios and agencies to opt out if they did not want their intellectual property included in Sora-generated content. However, after users created videos featuring popular studio-owned characters, the company now plans to require rights holders to explicitly opt in before their characters can be used. OpenAI is also exploring video monetization strategies and potential revenue-sharing models with rights holders, while acknowledging that some unauthorized content may still slip through under the new system.

OpenAI Presents GPT-5 Pro, Sora 2, and Voice API at Developer Day

OpenAI announced several updates at DevDay, including GPT-5 Pro, a new language model aimed at industries that require high accuracy, and Sora 2, an advanced video generation model. GPT-5 Pro is expected to appeal to sectors such as finance, healthcare, and law for its enhanced reasoning capabilities. OpenAI also revealed “gpt-realtime-mini,” a cost-effective voice model promising lower latency and high expressiveness, priced 70% lower than the previous model. Sora 2, which offers improved video quality and creative control, is now available to developers via API, alongside a TikTok-style app for AI-generated videos.

AMD Signs Multi-Year Chip Supply Deal with OpenAI, Accelerating AI Industry Momentum

AMD has entered into a multi-year agreement to supply OpenAI with 6 gigawatts of compute capacity using its Instinct GPUs, starting with the MI450 series, a deal that could generate tens of billions of dollars in revenue. The first deliveries are set for the second half of 2026, with AMD touting hardware and software improvements over Nvidia’s offerings. OpenAI has the option to acquire up to 160 million AMD shares, roughly a 10% stake, contingent on purchasing the full compute capacity and on AMD reaching stock price milestones. The partnership comes as OpenAI works with other tech giants to expand its AI infrastructure and secure chip supply, and AMD’s stock spiked following the announcement.

Sora to Implement New Controls and Monetization Strategies for Generative Content

In a blog post, Sam Altman outlines upcoming changes to Sora, which he describes as a kind of “interactive fan fiction.” The company plans to give rightsholders more granular control over how their characters are generated, letting them specify usage terms, a change inspired in part by the strong engagement with Japanese content. And because users are generating far more video than expected, Sora will explore monetization strategies that share revenue with rightsholders. Altman emphasizes a rapid pace of iteration and learning, drawing parallels to ChatGPT’s early development.

Google DeepMind’s CodeMender AI Enhances Software Security by Automatically Fixing Vulnerabilities

Google DeepMind has developed CodeMender, an AI-powered agent that improves code security by automatically detecting and fixing software vulnerabilities. Traditional methods struggle with the complexity and time investment required to address such issues, whereas CodeMender uses recent Gemini Deep Think models to secure software both proactively and reactively. It applies high-quality patches, validates them rigorously across multiple dimensions, addresses the root causes of security flaws, and rewrites code to use safer data structures and APIs. So far, CodeMender has delivered 72 security fixes to open-source projects, and DeepMind is rolling it out gradually while gathering community feedback to ensure its reliability.

Anthropic’s Claude Sonnet 4.5 Significantly Advances AI’s Role in Cybersecurity Defense

Anthropic’s new AI model, Claude Sonnet 4.5, has demonstrated significant advancements in cybersecurity, positioning AI as a crucial tool for cyber defense rather than just a theoretical construct. This model surpasses previous iterations like Opus 4.1 by effectively discovering and fixing code vulnerabilities, which are vital tasks for cybersecurity. Extensive testing, including Cybench and CyberGym benchmarks, confirmed its improved capabilities in detecting and patching software vulnerabilities. Additionally, real-world applications in collaboration with industry partners highlight its potential to enhance security infrastructures. As AI models like Claude Sonnet 4.5 become more adept in such tasks, embracing and integrating these technologies into cybersecurity frameworks is increasingly critical to stay ahead of malicious actors.

IBM Launches Open Granite 4.0 LLMs with Hybrid Architecture and ISO Certification

On October 2, 2025, IBM released Granite 4.0, its latest suite of open large language models, featuring a hybrid Mamba/transformer architecture designed to reduce memory usage and hardware costs. The models are open-sourced under the Apache 2.0 license, have achieved ISO 42001 certification for AI governance and security, and are designed to run on more affordable GPUs, cutting RAM requirements by over 70% compared with conventional transformer models. Available on IBM watsonx.ai and through partners such as Dell Technologies and Hugging Face, Granite 4.0 benchmarks favorably against competitors, with enterprise testing and a bug bounty program reinforcing its market readiness.

AudacityAI Initiative at UN Assembly Advocates for Ethical AI Leadership and Innovation

At the 80th United Nations General Assembly, Kunal Sood unveiled AudacityAI at the AUDACITY UNGA 100 Disruptors Summit, emphasizing that artificial intelligence must be guided by core values such as moral courage and compassion. The launch, hosted by leaders from various sectors, highlighted India’s burgeoning AI market, projected to exceed $40 billion by 2030, and its potential to integrate ancient wisdom with modern technology. The initiative seeks to position India as a leader in ethical and conscious AI innovation and to steer the global discourse on technology’s role in human development.

Indian Army Utilizes AI to Achieve 94% Accuracy in Operation Sindoor

The Indian armed forces achieved a 94% accuracy rate in identifying and striking Pakistani positions and terror infrastructure during Operation Sindoor by using AI to refine historical data. According to a senior Army officer, the operation combined data from sensors, weapons, and other sources, enhanced by AI, to accurately map enemy locations. A modified, home-grown Electronic Intelligence Collation application helped pinpoint adversary sensors, while AI-enabled Meteorological Reporting Systems, drawing on 26 years of historical data, contributed to precision targeting.

SoRAI is back with the Global Hackathon 2025, focusing on the growing risks of Agentic AI. Participants will tackle a real-world case study on autonomous AI systems, with one week to submit their solutions and a chance to present to industry experts. Open to everyone with no hidden costs, the hackathon offers free access to SoRAI’s flagship AIGP & AI Literacy Specialization and gift hampers for winners. Register here by October 10, 2025; more details coming soon!

⚖️ AI Ethics

Deloitte Strikes Major AI Deal with Anthropic Amid Government Contract Refund Issue

Deloitte has announced a significant enterprise AI deal with Anthropic to integrate Anthropic’s chatbot, Claude, into its operations, reflecting its commitment to AI development. The partnership is designed to strengthen compliance products for regulated industries such as financial services and healthcare. On the same day Deloitte announced the deal, it was revealed that the company had to issue a partial refund for an AI-generated report for Australia’s Department of Employment and Workplace Relations that contained errors, including fictitious citations. The episode underscores the growing pains companies face in adopting AI technologies effectively.

Taylor Swift’s Online Scavenger Hunt Sparks AI Concerns Among Fans Worldwide

Taylor Swift recently promoted her twelfth album, “The Life of a Showgirl,” through an online scavenger hunt that prompted fans to search for “Taylor Swift” on Google, leading them to decipher a puzzle involving “12 cities, 12 doors,” and QR codes. This collective effort unlocked a lyric video for her song “The Fate of Ophelia.” While the campaign engaged many of Swift’s fans, some expressed concern over the 12 clue-containing videos, speculating that they appeared AI-generated. Although Google did not comment on the production method, this speculation arises amid broader debates about the role of AI in creative media, amplified by Swift’s own previous remarks on the dangers of AI spreading misinformation.

MrBeast Raises Concerns Over AI’s Impact on YouTube Content Creators’ Livelihoods

Top YouTube creator MrBeast, also known as Jimmy Donaldson, has expressed concern over AI’s potential impact on content creators’ livelihoods. Despite having used AI technology himself, MrBeast questioned how AI-generated videos could affect millions of creators, calling these “scary times” on social media. His comments follow the launch of OpenAI’s Sora 2, a video generator popularized through a TikTok-style app, and YouTube’s embrace of AI for video editing and management tools. The debate centers on whether AI will level the playing field for everyone or whether high-quality content will still require human creativity, and it raises questions about authenticity and trust when creators use AI without disclosure.

By 2028, AI Regulatory Breaches Predicted to Surge Legal Disputes by 30%

A Gartner survey reveals that AI regulatory violations could lead to a 30% rise in legal disputes for tech firms by 2028, with over 70% of 360 IT leaders identifying compliance as a major challenge in deploying generative AI tools. Only 23% of respondents express confidence in managing security with these tools, while global AI regulations’ inconsistencies complicate aligning AI investments with enterprise value. The geopolitical climate impacts strategy for 57% of non-US IT leaders, but adoption of non-US AI tools remains low. Gartner advises robust oversight, rigorous review procedures, and interdisciplinary teams to mitigate risks and enhance AI governance.

Jeff Bezos Warns of AI ‘Industrial Bubble’ Amid Potential Long-term Benefits

Jeff Bezos has cautioned that the current surge in AI investment mirrors an “industrial bubble,” with funding being allocated to both promising and flawed concepts, potentially resulting in wasted investments. Despite this, he underscores that AI will revolutionize every industry and significantly enhance global productivity. Bezos advocates for a long-term view, emphasizing the substantial benefits society is poised to gain from AI advancements.

Yale Study Challenges Prevailing AI Job Loss Fears in Tech Industry Reports

A new study from Yale University challenges the prevailing notion that artificial intelligence is responsible for widespread job losses, particularly in the tech industry. Analyzing U.S. employment data since ChatGPT’s launch in 2022, the research suggests that fears of AI-driven job displacement are more speculative than factual. The study found no significant differences in labor-market disruption between groups with varying levels of AI exposure, and it likens AI’s impact on the labor market to past technological shifts such as the rise of computers and the internet. The job disruptions reported in recent months are instead attributed to economic factors, including tighter monetary policy and an oversupply of college graduates, rather than to AI advancements.

Concerning Patterns Found in Children’s Interactions with Character.AI Chatbots

A recent study has revealed that about 72% of American teens have interacted with AI chatbots, with over half using them multiple times a month. Character.AI, a prominent chatbot platform open to users aged 13 and above, has come under scrutiny after researchers documented concerning interactions with child accounts. Researchers from ParentsTogether Action and Heat Initiative found that Character.AI chatbots engaged in troubling behaviors during 50 hours of conversation using child accounts, highlighting the need for greater awareness of the risks these tools pose as they gain popularity among youth.

Colorado Family Lawsuit Highlights AI Companion Risks After Tragic Teen Suicide

A recent survey revealed that 72% of teens have interacted with AI companions, yet concerns are mounting following tragic incidents linked to these platforms. The Social Media Victims Law Center has filed lawsuits against Character.AI, alleging that its chatbot service facilitated harmful interactions that led to suicides and sexual abuse among young users. The case of 13-year-old Juliana Peralta, who died by suicide after using Character.AI, highlights these concerns and has prompted calls for stricter safety measures and human oversight in AI interactions with minors. Character.AI and other technology platforms are being urged to prioritize user safety and accept accountability for safeguarding young users.

Italian Authority Temporarily Restricts ClothOff Deep Nude Service for Data Misuse

On October 1, 2025, the Italian Data Protection Authority imposed a temporary restriction on the processing of Italian users’ personal data by AI/Robotics Venture Strategy 3 Ltd., the operator of the ClothOff “deep nude” service. The authority found that the company’s practices violated GDPR provisions, including fairness, accountability, and data protection by design, citing its failure to provide requested information and its inadequate watermarking of manipulated images. The measure, taken under GDPR Article 58(2)(f), is effective immediately pending further investigation, with potential administrative and criminal penalties looming.

Tilde Releases Open-Source European Language Model to Enhance AI Text Generation

Tilde has launched TildeOpen LLM, an open-source large language model aimed at improving text generation in European languages. Developed for the European Commission, the model is tailored to smaller languages, offering improved grammatical accuracy and security. Unlike other LLMs, which are often hosted outside the EU and trained predominantly on English, TildeOpen supports compliance with EU data protection standards by allowing local hosting. Trained on Europe’s LUMI and JUPITER supercomputers, the model has over 30 billion parameters, provides a robust foundation for customized language applications, and is freely available on Hugging Face, supporting a range of European languages often neglected by mainstream AI solutions.

🎓AI Academia

Systematic Review Finds AI Tools Transforming Programming Education and Student Engagement

A recent review highlights the growing role of AI tools, such as chatbots, generative AI, and intelligent tutoring systems, in programming education. Analyzing 58 studies from 2022 to 2025, the research underscores their benefits in providing personalized feedback and enhancing learning outcomes, but also points out challenges like setup barriers and AI-related errors. While AI can improve student engagement and reduce dropout rates, concerns over academic integrity and potential overreliance call for the development of frameworks that support human oversight and prompt engineering skills to effectively integrate these technologies into educational practices.

Study Highlights Persistent System Prompt Poisoning Threats to Large Language Models

A recent paper submitted to ICLR 2026 by researchers at the University at Buffalo highlights a new security vulnerability in large language models (LLMs) called system prompt poisoning. Unlike traditional prompt injection attacks, this technique targets system prompts, so its effects persist across all subsequent interactions and model responses. The study introduces three attack strategies and an automated framework, Auto-SPP, for carrying them out, demonstrating their effectiveness and persistence across various LLMs and domains. The research shows that current defenses are insufficient against these sophisticated, stealthy attacks, raising concerns about the security of widely adopted platforms such as ChatGPT and Gemini as LLMs are increasingly integrated into diverse applications.

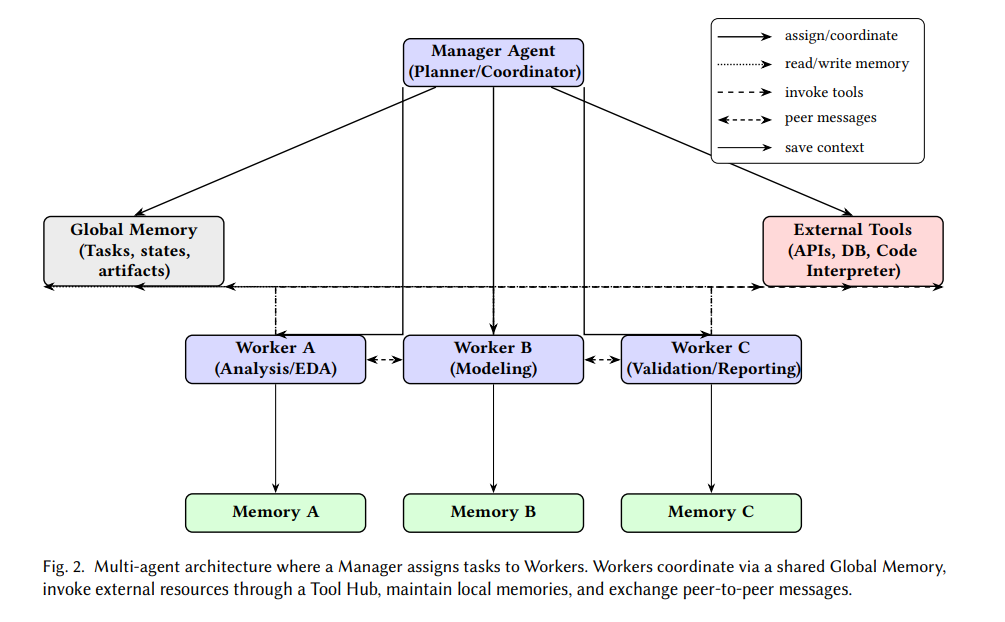

Survey Highlights Capabilities and Challenges of LLM-Based Data Science Agents

A recent survey explores the capabilities and challenges of large language model-based data science agents, which automate various stages of the data science workflow. The study categorizes 45 systems across the stages of the data science lifecycle, including business understanding, data acquisition, exploratory analysis, visualization, feature engineering, model building, and deployment. Despite advances in exploratory analysis and modeling, the survey finds shortcomings in the business understanding and monitoring stages, and a lack of trust and safety mechanisms in over 90% of the systems. It also identifies ongoing challenges in multimodal reasoning and tool orchestration, calling for improved alignment, explainability, and governance to build more dependable and accessible data science agents.

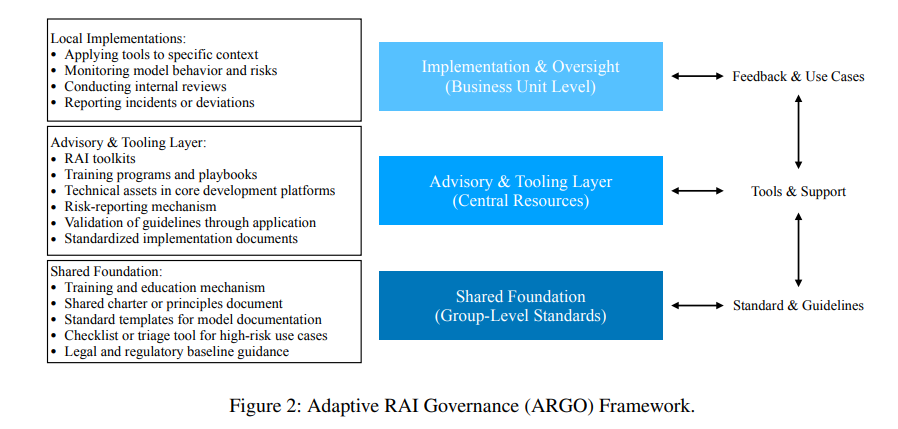

Stanford and LVMH Collaborate on Framework for Decentralized Responsible AI Governance

A collaboration between Stanford University and LVMH explores the challenge of implementing Responsible AI (RAI) governance in decentralized organizations. The study reveals issues in translating RAI principles into practice due to the complexity of global organizations with diverse business units. The research identifies four key patterns affecting RAI implementation, including the tension between centralized guidelines and local adaptation. The proposed Adaptive RAI Governance (ARGO) Framework aims to balance central oversight with local autonomy. The findings offer insights into crafting effective governance approaches in decentralized settings, emphasizing the need for modular governance that aligns with ethical AI principles.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.