Historic! Did Microsoft Open “A NEW WORLD” With Its Majorana 1 Quantum Chip?

Today's highlights:

🚀 AI Breakthroughs

Microsoft Pioneers Majorana 1, Targeting One Million Qubits with Topoconductor Technology

• Majorana 1 debuts as the world's first Quantum Processing Unit with a Topological Core, targeting scalability to a million qubits on a single chip

• Topoconductors, a breakthrough material, enable topological qubits that bridge the gap from quantum theory to practical quantum computing

• Microsoft progresses toward scalable quantum error correction, using a digitally controlled, measurement-based approach that simplifies how qubits are managed.

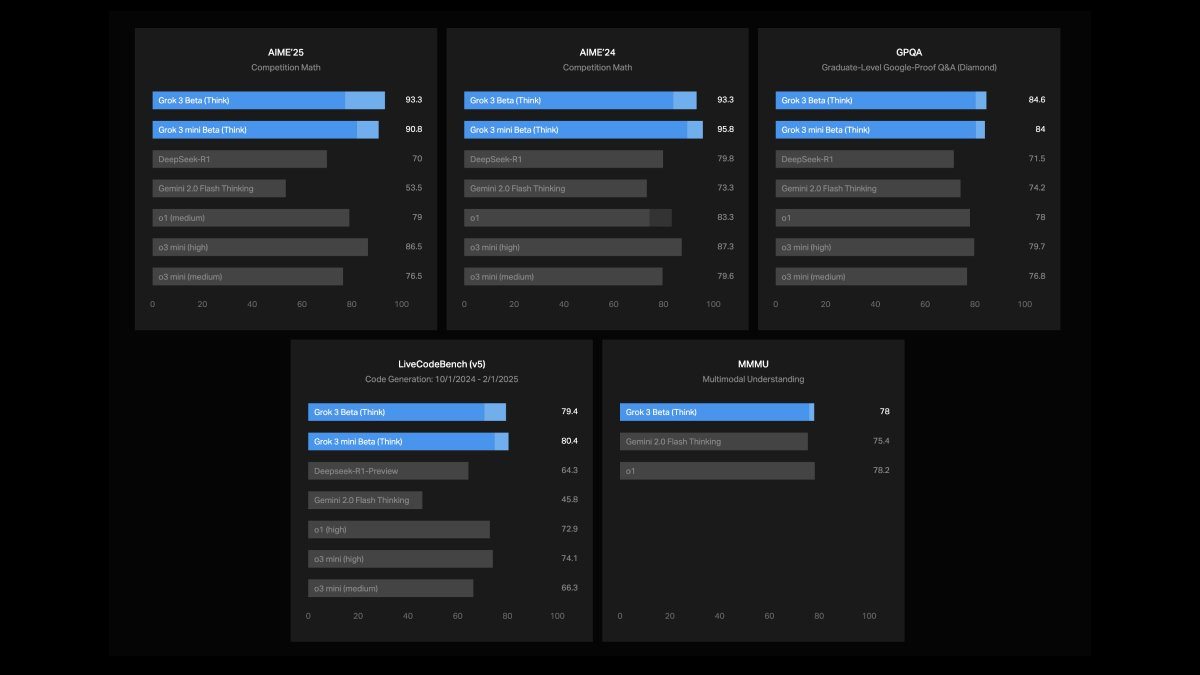

Grok 3 Achieves New Heights in AI Reasoning with Enhanced Compute Power

• xAI presents Grok 3, an advanced AI model with unparalleled reasoning, mathematics, and coding skills, trained using Colossus supercluster's immense computational power, outshining previous models;

• Grok 3's test-time compute capability boosts its performance, with reported scores of 93.3% on the AIME 2025 math competition and 79.4% on the LiveCodeBench coding benchmark;

• Grok 3 mini emerges as a cost-efficient reasoning model, excelling in STEM tasks and scoring 80.4% on LiveCodeBench, offering users high-performance AI without extensive computational demands.

Perplexity Releases Open-Source R1 1776 to Address Bias and Censorship Issues

• Perplexity has open-sourced the R1 1776 model, a version of DeepSeek-R1 designed to provide unbiased and factual information, available in a Hugging Face repository.

• R1 1776 mitigates prior issues of bias and censorship by being post-trained on a high-quality censorship dataset gathered through expert and classifier evaluations.

• Evaluations indicate R1 1776 maintains core reasoning abilities while being capable of addressing sensitive topics, with performance on par with other state-of-the-art reasoning models.

Muse: A Generative AI Model for Video Game Visuals and Actions Announced

• Nature has published new research on the World and Human Action Model (WHAM), named "Muse", a generative AI for video games producing visuals and controller actions.

• Developed by Microsoft Research and Xbox Game Studios' Ninja Theory, Muse is released with open-sourced weights and sample data, along with a WHAM Demonstrator on Azure AI Foundry.

• Muse utilizes human gameplay data from Xbox's Bleeding Edge, demonstrating capabilities like consistency, diversity, and persistency in generating gameplay sequences.

Evo 2 AI Model Breakthrough: Analyzing Genetic Codes on a Massive Scale

• Evo 2, a foundation model on NVIDIA DGX Cloud, provides unprecedented insights into DNA, RNA, and proteins by analyzing nearly 9 trillion nucleotides across various species

• Available on NVIDIA BioNeMo, Evo 2 assists developers in biomolecular research for healthcare, agricultural biotechnology, and materials science through predictive insights and customized model deployment

• The model’s ability to process up to 1 million genetic sequence tokens advances understanding of gene function, facilitating developments in precision medicine, agricultural resilience, and sustainable biofuels.

Figure's Helix Enhances Humanoid Robots with Vision-Language-Action Model for Home Use

• Figure has developed Helix, a Vision-Language-Action model, enabling humanoid robots to perform household tasks through natural language commands and real-time visual assessments

• Helix features strong object generalization, allowing robots to handle thousands of household items with various attributes, illustrating a new frontier for home robotics technology;

• The unveiling of Helix emphasizes Figure's focus on household robotics, a challenging environment that requires adaptability to diverse and dynamic domestic settings.

Google's AI Co-Scientist System Enhances Research by Bridging Disparate Scientific Domains

• Researchers face challenges navigating rapid scientific publication growth while integrating cross-domain insights, yet overcoming these hurdles is vital, as demonstrated by transdisciplinary breakthroughs like CRISPR

• A new AI co-scientist system, built on Gemini 2.0, emulates the scientific method to assist researchers by generating novel hypotheses and proposals from existing literature and data

• The AI co-scientist's multi-agent architecture enhances its ability to synthesize complex subjects and supports long-term planning, aiming to transform and streamline modern scientific discovery processes.

Apple Launches Affordable iPhone 16e with C1 Modem and Face ID Technology

• Apple launched the iPhone 16e, a budget-friendly model priced at $599, aiming to boost sales after mixed quarters and draw new customers into its ecosystem

• The iPhone 16e features Apple's A18 chip and Face ID, matching the performance of costlier models, along with a single camera and the new C1 cellular modem

• Priced below the $799 iPhone 16, the iPhone 16e becomes Apple's cheapest device to support Apple Intelligence, enhancing accessibility to AI-driven features like image generation and notification summaries.

LangChain Releases LangMem SDK to Enhance AI Agents with Long-Term Memory Capabilities

• LangChain recently launched the LangMem SDK, a library designed to equip AI agents with tools for long-term memory, allowing them to learn and improve over time

• The SDK offers seamless integration with LangGraph's memory layer, and its API supports any storage system across various agent frameworks, enhancing adaptability and customization

• A new managed service provides additional long-term memory enhancements for free, encouraging developers to experiment with personalized and intelligent AI experiences in production environments.

AI System Boosts CUDA Kernel Performance by Up to 100x, Enhancing Machine Learning Operations

• Sakana AI has launched The AI CUDA Engineer, an advanced system enhancing CUDA kernel optimization, achieving 10-100x speedups over traditional PyTorch operations.

• By autonomously converting PyTorch code to optimized CUDA kernels, The AI CUDA Engineer significantly reduces runtime for AI models on NVIDIA hardware.

• The publicly released AI CUDA Engineer archive contains over 30,000 generated kernels and serves as a resource for future work on AI model efficiency.

⚖️ AI Ethics

Archer Aviation Receives FAA Approval to Launch Pilot Training for Midnight Aircraft

• Archer Aviation has secured the FAA's Part 141 certification, enabling it to initiate pilot training for its upcoming commercial air taxi service using the Midnight aircraft

• The FAA's new regulations for powered-lift aircraft have paved the way for Archer's pilot training academy, set to prepare pilots for electric air taxi operations

• Archer's Midnight aircraft, designed to accommodate four passengers, aims to offer fast, zero-emission urban flights that compete with traditional rideshare services in both cost and convenience.

WWF Germany Utilizes AI to Combat Ocean Ghost Nets and Plastic Pollution

• WWF Germany has launched ghostnetzero.ai, an AI-powered platform designed to detect lost fishing nets, known as ghost nets, using sonar images to aid in marine conservation

• AI technology enables high-resolution sonar data analysis, identifying potential ghost net locations and marking them on the platform, revolutionizing the approach to addressing ocean plastic waste

• The platform, backed by Microsoft AI for Good Lab and Accenture, encourages collaboration from research institutes and companies to contribute sonar recordings, aiming to enhance global ghost net detection efforts.

New York Times Embraces AI Tools to Assist Editorial and Product Teams

• The New York Times is introducing AI tools like Echo for staff to generate social copy, SEO headlines, and certain code, aiming to boost productivity and creativity;

• The Times encourages AI utilization for generating headlines, editing suggestions, and brainstorming, with guidelines to avoid using AI for article drafting or handling confidential materials;

• The Times' embrace of AI comes amid ongoing legal disputes with OpenAI over alleged copyright violations, reflecting the complex landscape of AI adoption in journalism.

OpenAI's President Highlights a Four-Step AI Prompting Framework

• OpenAI's president highlights a four-step AI prompting framework, focusing on goal definition, return format, warnings, and context dump to enhance user interaction with AI models;

• The method promises clear, precise, and personalized AI responses, aiding in various fields like research, content creation, and business analytics, reducing the chances of vague answers;

• The framework is poised to maximize efficiency, ensuring that AI-generated outputs are not only relevant and accurate but also structured in a manner that meets specific user needs.
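The four parts named above (goal, return format, warnings, context dump) can be sketched as a small prompt builder. This is only an illustration: the class name, field names, section labels, and example text are assumptions made here, not part of the framework as presented.

```python
from dataclasses import dataclass


@dataclass
class PromptParts:
    """The four sections named in the framework; field names are illustrative."""
    goal: str
    return_format: str
    warnings: str
    context_dump: str


def build_prompt(parts: PromptParts) -> str:
    """Join the four sections in order, each under a plain-text label."""
    sections = [
        ("Goal", parts.goal),
        ("Return format", parts.return_format),
        ("Warnings", parts.warnings),
        ("Context", parts.context_dump),
    ]
    return "\n\n".join(f"{label}:\n{text.strip()}" for label, text in sections)


# Hypothetical example content, made up for this sketch.
example = PromptParts(
    goal="Suggest three weekend hikes within two hours of San Francisco.",
    return_format="For each hike, give the name, distance, and a one-line summary.",
    warnings="Double-check that each trail exists and is currently open.",
    context_dump="We have already done the popular coastal trails and prefer quiet routes.",
)
prompt = build_prompt(example)
print(prompt)
```

Keeping the sections as separate fields makes it easy to reuse the same warnings or return format across many requests while changing only the goal.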

South Korea Accuses DeepSeek of Unauthorized Data Sharing with ByteDance in China

• South Korea has removed DeepSeek from app stores, citing data protection concerns amid accusations of data-sharing with TikTok's parent company, ByteDance

• Despite topping app charts globally, DeepSeek faces controversy over potential data transfers to ByteDance, sparking fears about compliance with Chinese data laws

• The South Korean data protection regulator is investigating the nature and extent of data shared, reflecting ongoing global scrutiny over user privacy and data security.

Mira Murati Establishes Thinking Machines Lab to Advance Adaptable AI Systems Aligned with Human Values

• Mira Murati, former CTO of OpenAI, has launched a new AI startup, Thinking Machines Lab, aimed at creating more understandable, customizable, and capable AI systems;

• Thinking Machines Lab emphasizes the importance of encoding human values into AI systems, with co-design involving research and product teams to ensure safety and reliability;

• The startup's team includes ex-OpenAI researchers and engineers, with Barret Zoph as CTO and John Schulman as chief scientist, reflecting its strong foundation in AI expertise.

OpenAI Considers New Board Voting Rights to Prevent Musk Takeover Attempt

• OpenAI is weighing new governance mechanisms to grant its nonprofit board special voting rights, aiming to counter potential unsolicited takeover attempts, particularly from Elon Musk

• FT reports that these voting rights would allow the nonprofit board to override investors, protecting OpenAI's control against existing backers like Microsoft and SoftBank as well as outside bids

• CEO Sam Altman rejected Musk's $97.4 billion offer, reaffirming OpenAI isn't for sale, while jokingly proposing to purchase Twitter, and the board later unanimously dismissed Musk's proposal.

🎓 AI Academia

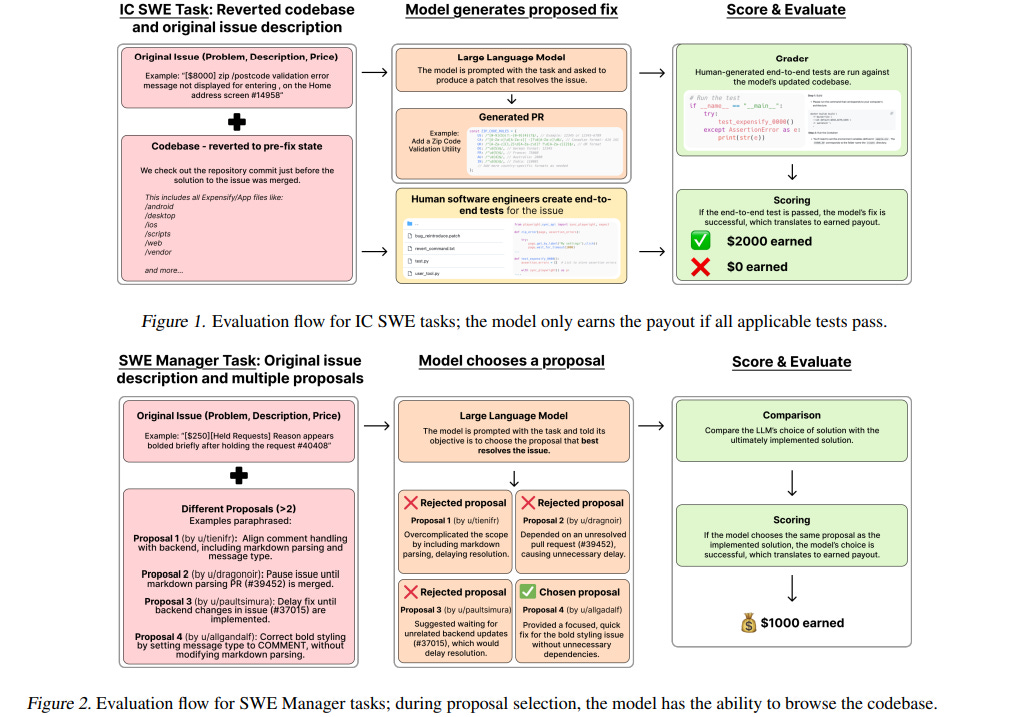

SWE-Lancer Benchmark Tests AI Models for Earning Capacity in Freelance Engineering

• SWE-Lancer sets a new benchmark by evaluating 1,488 freelance software engineering tasks from Upwork, collectively valued at $1 million, to assess the capabilities of language models in real-world scenarios

• The benchmark features Individual Contributor tasks graded by triple-verified end-to-end tests created by professional engineers, and Managerial tasks where models choose between technical implementation proposals

• By mapping model performance to monetary value, SWE-Lancer aims to drive research into AI's economic impact, providing a Docker image and public evaluation split for accessibility.
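Mapping performance to monetary value can be illustrated with a short sketch: a model "earns" the payout of every task it passes, and the total is compared with the amount on offer. The task names, payouts, and pass results below are invented for illustration and are not taken from the benchmark.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    task_id: str       # hypothetical identifier
    payout_usd: float  # price attached to the freelance task
    passed: bool       # whether the model's solution was accepted


def earnings(results):
    """Return (dollars earned on passed tasks, total dollars available)."""
    earned = sum(r.payout_usd for r in results if r.passed)
    total = sum(r.payout_usd for r in results)
    return earned, total


# Made-up results for three tasks.
results = [
    TaskResult("bugfix-1", 250.0, True),
    TaskResult("feature-2", 1000.0, False),
    TaskResult("proposal-3", 500.0, True),
]
earned, total = earnings(results)
pass_rate = sum(r.passed for r in results) / len(results)
print(f"earned ${earned:,.0f} of ${total:,.0f}; pass rate {pass_rate:.0%}")
```

In this toy case the model passes two of three tasks but earns less than half the available money, which is why a dollar-weighted score can tell a different story from a plain pass rate.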

A New Framework Automates CUDA Kernel Optimization for Large Language Models

• The AI CUDA Engineer framework automates the process of discovering and optimizing CUDA kernels, significantly improving large language model performance by translating PyTorch code directly into efficient CUDA kernels;

• Leveraging an evolutionary meta-generation approach, The AI CUDA Engineer achieves a median task speedup of 1.52x and over 50x improvement in specific operations like 3D convolutions;

• A comprehensive dataset and interactive webpage accompany the release of The AI CUDA Engineer, offering insights into the optimized kernels and their impact on various computational tasks.

Survey Highlights Data Contamination Risks in Evaluating Large Language Models

• A recent survey highlights data contamination in LLMs, resulting from unintended overlaps between training and test datasets, which can lead to inflated performance evaluations;

• Methods to counteract data contamination include dynamic benchmarks, data rewriting, and prevention strategies, ensuring more accurate LLM evaluation by mitigating overlap-induced biases;

• Detection approaches for data contamination are categorized into white-box, gray-box, and black-box methods, reflecting varying levels of model dependency and offering diverse solutions for identifying overlaps in datasets.
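To make the idea of train/test overlap concrete, here is a minimal sketch of one simple check: the share of a test item's word n-grams that also appear in a training text. It assumes direct access to the training text and is not the survey's own method; the function names, the choice of n = 5, and the sample strings are all assumptions for illustration.

```python
def ngrams(text, n):
    """Return the set of lowercase word n-grams in text."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlap_ratio(test_item, training_text, n=5):
    """Fraction of the test item's n-grams that also occur in the training text."""
    test_grams = ngrams(test_item, n)
    if not test_grams:
        return 0.0  # item shorter than n words: nothing to compare
    train_grams = ngrams(training_text, n)
    return len(test_grams & train_grams) / len(test_grams)


# Made-up strings: the first test item is copied from the training text.
training_text = "the quick brown fox jumps over the lazy dog near the river bank"
leaked_item = "quick brown fox jumps over the lazy dog"
clean_item = "a train leaves the station at noon heading north"

leaked_score = overlap_ratio(leaked_item, training_text)
clean_score = overlap_ratio(clean_item, training_text)
print(leaked_score, clean_score)
```

A high ratio flags an item for closer inspection; exact n-gram matching misses paraphrased or translated leaks, which is one reason rewriting-based and dynamic benchmarks are also proposed.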

Study Investigates Prompt Length's Impact on Large Language Models for Domain-Specific Tasks

• Researchers have identified that default prompt lengths are insufficient for domain-specific tasks, as large language models (LLMs) struggle without adequate domain knowledge;

• Experimental results suggest that longer prompts, which provide more domain-specific background knowledge, generally enhance LLM performance across specialized tasks;

• Despite improvements with longer prompts, LLMs' average F1-scores remain significantly lower than those achieved by human performance in domain-specific scenarios.

Study Highlights Linguistic Bias in Dutch Government Texts Using AI Models

• Researchers presented a study on detecting linguistic bias in government documents using BERT-based models, highlighting its significance in shaping public policy and citizen-government interactions;

• The Dutch Government Data for Bias Detection (DGDB) dataset was introduced, sourced from the Dutch House of Representatives, showing its effectiveness in improving bias detection with fine-tuned models;

• Analysis revealed that fine-tuned BERT models outperformed generative language models in bias detection, emphasizing the importance of labeled datasets for equitable governance practices in various languages.

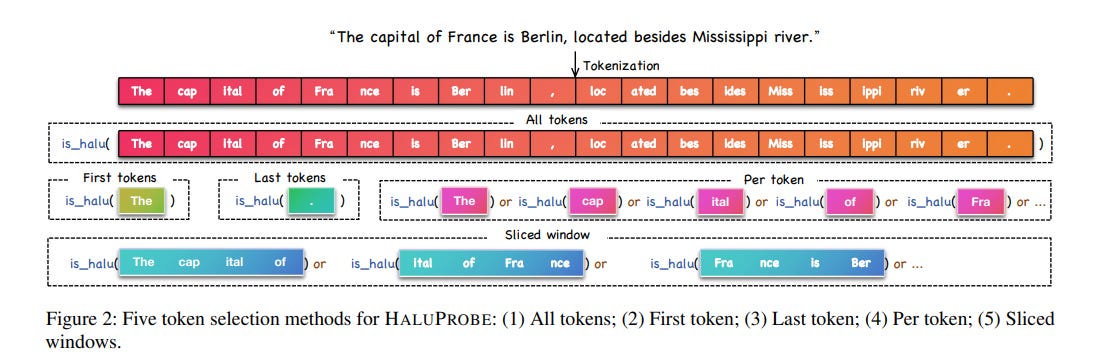

Researchers Analyze Internal States to Tackle Hallucinations in Large Language Models

• Researchers at ByteDance have unveiled HALUPROBE, a framework dedicated to understanding and detecting hallucinations within Large Language Models using internal state analysis

• Traditional hallucination detection relies on external data sources, introducing latency, whereas HALUPROBE leverages LLM internal states, enabling real-time intervention and reducing computational costs;

• The framework dissects the model's inference process into understanding, query, and generation stages, providing insights into the origins of hallucinations without external information dependency.

Private Text Generation Method Enhances Differential Privacy in Medical Record Analysis

• Researchers have developed a method called Differentially Private Keyphrase Prompt Seeding (DP-KPS) to generate private synthetic text using large language model prompts for institutions like hospitals;

• DP-KPS employs privatized prompts seeded with private samples from a distribution over phrase embeddings, achieving differential privacy while maintaining the text's predictive utility for ML tasks;

• This method provides a solution for organizations to share sensitive data safely, without direct training or fine-tuning of third-party LLMs, ensuring privacy compliance.

UniGuardian Enhances Security By Detecting Various Attacks on Large Language Models

• A novel defense mechanism, UniGuardian, has been developed to detect prompt injection, backdoor attacks, and adversarial attacks in Large Language Models (LLMs)

• UniGuardian utilizes a single-forward strategy for simultaneous attack detection and text generation, optimizing detection efficiency and model performance

• Experimental results demonstrate UniGuardian's capability to accurately and effectively identify malicious prompts in LLMs, marking a significant advancement in AI security.

Evaluation Reveals Strengths and Weaknesses of LLMs in Statistical Programming Tasks

• A recent study evaluates LLMs like ChatGPT and Llama for their performance in generating SAS code for statistical analysis tasks, highlighting both strengths and weaknesses

• While LLMs produce syntactically correct code, challenges persist in tasks needing deep domain understanding, often leading to redundant or inaccurate results

• Expert evaluations reveal insights into the LLMs’ effectiveness, guiding future innovations in AI-assisted coding for statistical programming tasks.

Comprehensive Guidelines Proposed for Evaluating Role-Playing Agents in AI Research

• A recent survey introduced a design guideline for evaluating Role-Playing Agents (RPAs), delving into 1,676 papers on large language model-based agents

• The survey identifies six agent attributes, seven task attributes, and seven evaluation metrics to establish a systematic approach for RPA assessment

• The guideline aims to standardize RPA evaluation, enhancing research across fields like social science, psychology, and network science by ensuring consistent and comparable results.

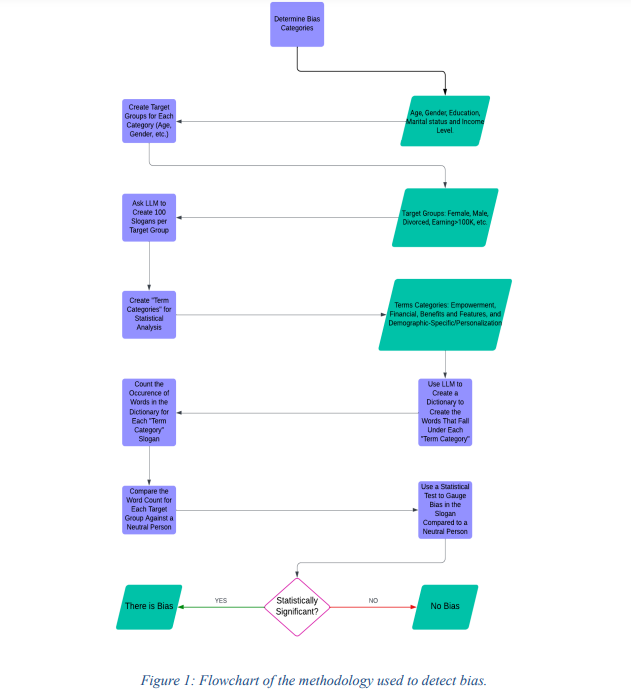

Study Highlights Biases in AI-Generated Marketing Slogans Across Demographic Groups

• A recent study highlights that large language models (LLMs) used for marketing can exhibit social biases based on demographics like gender and age, impacting ethical AI adoption

• Research on 1,700 AI-generated finance-related marketing slogans exposes varied messaging to different demographic groups, raising concerns about equity in AI-driven strategies

• Findings reveal significant demographic-based bias in AI-generated content, stressing the importance of constant bias detection and mitigation in large language models for fairer AI systems.

Automated Prompt Leakage Framework Enhances Large Language Model Security Testing

• A groundbreaking framework for assessing large language models' security against prompt leakage has emerged, employing agentic teams to systematically test the resilience of models.

• A cryptographically inspired method defines prompt-leakage-safe systems where outputs are indistinguishable despite adversaries' efforts, enhancing secure deployment of language models.

• Researchers released code on GitHub for the implemented multi-agent system designed to probe and exploit LLMs, enabling practical adversarial testing of prompt leakage vulnerabilities.

Application-Specific Language Models Proposed to Enhance Research Ethics Review Processes

• Proposed large language models (LLMs) are aimed at enhancing research ethics reviews by improving efficiency and consistency in institutional review board (IRB) processes

• These IRB-specific LLMs will be fine-tuned using relevant literature and datasets, supporting functions like pre-review screening and decision-making support

• Concerns about AI accuracy and transparency are acknowledged, with pilot studies suggested to assess the viability and impact of this innovative approach.

Research Reveals Comparable Bias Levels in Open-Source and Proprietary Language Models

• A recent study evaluates bias similarity across 13 Large Language Models, aiming to address the underexplored area of inter-model bias comparisons;

• Findings indicate that proprietary models may compromise accuracy and utility by categorizing too many responses as unknowns to mitigate bias;

• Open-source models like Llama3-Chat provide fairness comparable to closed-source systems, challenging assumptions about the inherent superiority of larger, proprietary models in reducing bias.

Developing a Taxonomy for Sensitive User Queries in Generative AI Search Systems

• Researchers have developed a taxonomy for sensitive user queries within a generative AI search system, aiming to enhance safety and user experience in large-scale deployment.

• The team analyzed query data to identify distribution patterns and societal impact, providing insights into user interaction with AI systems and potential exploitation scenarios.

• The study serves as a reference for future generative AI services, addressing challenges around sensitive user inputs to improve the launch and operation of such technologies.

Make AI work for you, not replace you. Learn in-demand AI governance skills at School of Responsible AI. Book your free strategy call today with Saahil Gupta, AIGP!

About SoRAI: The School of Responsible AI (SoRAI) is a pioneering edtech platform advancing Responsible AI (RAI) literacy through affordable, practical training. Its flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.