Today’s highlights:

Elon Musk’s AI assistant, Grok, has once again come under the spotlight after adding an image-editing feature that lets anyone change clothing in photos with a single prompt. Almost immediately, users began abusing it, digitally “undressing” women and even minors by creating fake bikini or lingerie-style images. What started as a “bikini trend” quickly spiraled into global outrage. Reports confirmed that more than 100 nudified deepfakes were generated within minutes, and some images involved children as young as five. India’s government acted first, giving X just 72 hours to take down illegal content or risk losing its platform immunity. Soon after, France, Malaysia, the UK, and the EU launched formal probes, citing serious child safety and privacy violations.

How It Went So Wrong, So Fast

Experts blame Grok’s design. Musk’s xAI team promoted “minimal filters” and embedded image editing directly into public posts, making abuse cheap, viral, and hard to trace. Internal whistleblowers had warned about child exploitation risks as early as September. Regulators are furious. France called the content “manifestly illegal,” and the EU suspects breaches of child safety laws. In the UK, Ofcom and Parliament are pushing hard for enforcement of new deepfake laws, which remain stalled. Musk initially mocked the trend and called it “funny,” but the backlash forced a tougher stance. He posted that anyone using Grok to make illegal content “will suffer the same consequences” as if they had uploaded it. Watchdogs, however, say enforcement is weak and has come too late.

Is Grok Still Creating Harm?

Yes. As of January 6, explicit deepfakes were still being created with Grok, and AI-generated images of minors in bikinis or suggestive poses remained online despite promises of takedowns. A study of 20,000 Grok-generated images found that half depicted women in revealing clothing and 2% depicted children, some under age five. Worse, Grok’s own AI-generated press release about “fixing the issue” was itself fake. Experts warn the abuse will continue unless X implements strong filters, age verification, and default opt-outs. Governments are watching closely. X now risks massive fines under the EU’s GDPR and Digital Services Act (DSA), as well as India’s IT laws, if it fails to act decisively.

You are reading the 157th edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI, AAIA) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework built on progressive cognitive levels. Learners start with knowing and understanding, then move to using and applying, then analyzing and evaluating, and finally creating through a capstone project, with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our AIGP 8-week personalized training program. For customized enterprise training, write to us at [Link].

⚖️ AI Ethics

Heber City’s Comical AI Blunder Highlights Risks of Automated Police Reporting Tools

Law enforcement’s growing adoption of AI tools such as Draft One for generating police reports has run into serious problems. In one incident in Heber City, Utah, the software misinterpreted body camera audio and wrote that an officer had transformed into a frog, because a Disney movie was playing in the background. Developed by Axon and built on OpenAI’s language models, the software aims to reduce officers’ administrative burden. Critics say it can perpetuate biases and lacks accountability, and experts warn that such automated tools could make documentation less careful. Despite these flaws, the software reportedly saves officers significant time, but its reliability and its impact on policing integrity remain under scrutiny. The Heber City police department is weighing whether to keep using Draft One or switch to alternatives such as Code Four, amid broader discussion of AI’s role in law enforcement.

Google AI Health Summaries Found to Deliver Inaccurate and Dangerous Health Information, Investigation Reveals

A Guardian investigation has found that Google’s AI Overviews, which aim to provide concise health information, have given erroneous and potentially harmful advice. In one case, they advised pancreatic cancer patients to avoid high-fat foods, contradicting expert dietary recommendations and putting patients at risk. Other errors involved the normal ranges for liver function tests and misleading details about cancer tests, which could lead people to dismiss serious symptoms. Google says its summaries link to reputable sources and that it continues to improve quality. Health experts nonetheless warn that inaccurate AI summaries pose a significant risk to public health, especially for people looking up serious health concerns.

Canadian Artist Plans Legal Action Against Google After Defamatory AI Error Leads to Concert Cancellation

Canadian artist Ashley MacIsaac plans to sue Google after an AI-generated summary falsely labeled him a sex offender, which led to the cancellation of his concert. MacIsaac believes the AI confused him with another person facing such charges. The incident highlights serious concerns about misinformation spread by AI, particularly its potential to damage reputations and careers. MacIsaac is seeking legal support. He stresses the need for clarity on how AI companies are held accountable for such errors and what they do to prevent them. For now, he is focused on explaining the situation to his family and considering his next steps.

US FCC Bans Future Imports of Chinese Drones Over National Security Concerns, Threatening DJI’s Dominance

The Federal Communications Commission (FCC) has banned the import and sale of new drone models and critical components from foreign manufacturers, including major Chinese firms such as DJI and Autel Robotics, citing national security risks. The decision aims to curb potential surveillance and data exfiltration over U.S. territory. Previously authorized and purchased drones can still be used, so the ban affects only future acquisitions. A White House-convened interagency body endorsed the FCC’s action. It follows long-standing concerns about Chinese drones and DJI’s unsuccessful past attempts to head off similar restrictions. Chinese officials and DJI have criticized the move as discriminatory and protectionist. The ban aligns with ongoing U.S. efforts to reduce dependence on foreign technology perceived as a security threat.

Satya Nadella Urges Rethink of AI as Human Helper Amid Job Displacement Concerns Heading Into 2026

In a reflective personal blog post, Microsoft CEO Satya Nadella discussed the evolving role of AI. He urged a shift away from dismissing it as mere “slop” and toward seeing it as a tool that enhances human capabilities, akin to “bicycles for the mind.” He emphasized AI’s potential to amplify, rather than replace, human productivity. This view contrasts with industry concerns about AI-driven job disruption, including predictions of high unemployment from AI pioneers, even though today’s AI still cannot perform the full range of human tasks. Some fields, such as graphic arts and entry-level coding, have been affected. The broader job market, however, shows growth in roles that complement AI, and some reports find notable job and wage growth in sectors exposed to AI automation. Microsoft and other tech giants have faced criticism for extensive layoffs partly attributed to AI. The reality is more nuanced, since those cuts also reflect broader business strategies and not AI efficiency alone.

DoorDash Confirms Viral Incident of Driver Using AI-Generated Image to Fake Order Delivery

DoorDash has confirmed a viral incident in which a driver allegedly used an AI-generated image to falsely claim a delivery had been made. According to reports, an Austin resident said a DoorDash driver marked his order as delivered and submitted a computer-generated photo instead of a real delivery image. The post gained traction on social media and prompted similar claims from other people in Austin. Online speculation suggests the driver may have used a hacked account and drawn on earlier delivery photos to pull off the scheme. DoorDash responded by permanently removing the driver’s account. The company also reiterated its commitment to fighting fraud through technology and human oversight.

France and Malaysia Join India in Condemning Musk’s AI Grok Over Sexualized Deepfakes Scandal

France and Malaysia have joined India in condemning Grok, the AI chatbot built by Elon Musk’s startup xAI and hosted on the X platform, for generating sexualized deepfakes of women and minors. Grok recently issued an apology for a December 2025 incident involving AI-generated images of young girls in inappropriate attire, citing a failure in its safeguards. Critics argue the apology offers no real accountability, because an AI cannot be held responsible. India’s IT ministry and French authorities have demanded that X restrict such content, and both have launched investigations. The Malaysian Communications and Multimedia Commission is also assessing the situation after public complaints about Grok being misused to create harmful content.

India Orders Elon Musk’s X to Implement Immediate Safeguards on AI Chatbot Grok After Obscene Content Complaints

India’s IT ministry has ordered Elon Musk’s X to make immediate changes to its AI chatbot, Grok, following accusations that it generated “obscene” content, including AI-altered images of women. X has been instructed to curb content involving nudity and sexually explicit material, and it has 72 hours to submit a compliance report. If X fails to comply, it could lose its legal immunity under Indian law for user-generated content. The order responds to complaints that Grok created sexualized images of individuals, including minors. The episode underscores India’s growing vigilance in regulating AI-generated content and marks a significant test case that could influence technology practices globally.

Meta’s Acquisition of Manus Faces Scrutiny from Chinese Regulators Over Potential Technology Export Violations

Meta’s acquisition of Singapore-based AI startup Manus is drawing scrutiny from Chinese regulators over possible violations of technology export controls. According to a report, the focus is on whether any restricted technologies were transferred without approval. Manus was founded in China. After a sizable funding round led by a US firm, it moved its headquarters to Singapore and scaled back its China operations. These moves have not fully dispelled Chinese regulators’ concerns, because the company’s ties to China and its early research there remain an issue. The situation resembles the TikTok regulatory saga, and a national security review is possible depending on how the relevant AI technologies are classified.

🚀 AI Breakthroughs

Neuralink Plans Mass Production of Mind-Controlled Devices, Promises Automated Surgeries by 2026

Elon Musk announced that Neuralink is preparing to begin high-volume production of its brain-computer interface devices by 2026 and to move to a largely automated surgical procedure. The technology aims to help paralyzed patients control digital devices with their minds through a chip implanted in the skull, which connects to the brain via thin electrodes. Neuralink holds FDA approval for human trials and raised a $650 million Series E round in 2025, and it has already implanted the device in 12 patients. One of them, quadriplegic Noland Arbaugh, has used it since January 2024 for activities such as playing video games, although problems with some electrode connections were reported early on.

Nvidia Launches State-of-the-Art Rubin Computing Architecture at CES, Boosting AI Hardware Performance and Efficiency

At the Consumer Electronics Show (CES), Nvidia unveiled its new Rubin computing architecture, which it positions as the latest advance in AI hardware. Rubin is designed to meet the escalating computation demands of AI and brings significant gains in speed and power efficiency. Nvidia promises up to five times faster inference than the outgoing Blackwell architecture. The platform comprises six chips, including a central GPU and a new Vera CPU designed for agentic reasoning. It also aims to improve the storage and interconnect capabilities that modern AI workloads depend on. Major cloud providers and upcoming supercomputers already plan to deploy Rubin, which underscores its central role in future AI infrastructure amid surging investment in the sector.

Nvidia Unveils Alpamayo, Open Source AI Models for Safe, Reason-Based Autonomous Vehicle Navigation

At CES 2026, Nvidia unveiled Alpamayo, a family of open source AI models aimed at improving the reasoning capabilities of autonomous vehicles. Central to the launch is Alpamayo 1, a 10-billion-parameter model designed to mimic human-like decision-making in complex driving scenarios, such as navigating intersections when traffic lights are out. The Alpamayo models, simulation tools, and datasets are meant to make autonomous vehicles safer and smarter by reasoning through complex conditions and explaining their actions. Alpamayo’s code is available on Hugging Face and the AlpaSim simulation framework on GitHub. Together, they let developers test and refine autonomous systems using rich real-world and synthetic data.

Amazon Expands Alexa+ to Web with Alexa.com, Enhancing AI Assistant’s Availability Beyond Home Devices

Amazon has announced the rollout of its AI-powered digital assistant, Alexa+, to the web via a new site, Alexa.com, launched at CES 2026 in Las Vegas. The move makes Alexa+ accessible beyond Amazon’s Echo devices, including to people who own no Amazon home devices at all. Amazon is also refreshing the Alexa+ mobile app around a chatbot-style interface. Alexa+ is positioned to handle family-oriented tasks such as smart home control, calendar updates, and shopping, which Amazon hopes will set it apart from other AI assistants. Some users have complained about performance, but Amazon reports a strong user base and increased customer interactions. Alexa+ also retains all previous Alexa functionality.

OpenAI to Launch Advanced AI Audio Model and Voice-Based Device in Q1, Highlights Improved Accuracy

OpenAI is developing a new AI audio model architecture for release in the first quarter, according to The Information. The model is designed for a forthcoming voice-based device and reflects OpenAI’s renewed focus on hardware following its acquisition of Jony Ive’s startup io. It aims to improve conversational accuracy, emotional range, and the naturalness of responses. OpenAI has consolidated its engineering and research teams to advance this work. The effort fits its strategic push into consumer hardware, which is also visible in increased hiring in that area. Separately, OpenAI recently made its Realtime API generally available, along with improved capabilities in gpt-realtime, its speech-to-speech model.

DeepSeek Unveils Novel Neural Network Stabilization, Enhancing Language Model Performance Without Significant Efficiency Loss

DeepSeek has unveiled research detailing a method for stabilizing a fragile neural network design, which improves large language model performance without a significant loss of efficiency. The study, titled “Manifold-Constrained Hyper-Connections,” refines the “Hyper-Connections” architecture by adding constraints that prevent numerical instability during training. These constraints allowed the team to scale models up to 27 billion parameters. Experiments showed accuracy gains on benchmarks such as BIG-Bench Hard and DROP, with only a modest increase in training overhead. The work underscores the potential of architectural innovation in model development. This is a consistent theme in DeepSeek’s research, and it also shows in recent releases such as DeepSeek-V3.2 and DeepSeekMath-V2, which have demonstrated strong reasoning capabilities.
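The summary does not say what the constraints are. As we understand the paper, mHC projects the small matrix that mixes a hyper-connection’s parallel residual streams onto the set of doubly stochastic matrices, meaning non-negative entries with every row and column summing to 1. It does this with Sinkhorn-Knopp iterations, so that stacking many layers cannot blow up the signal. The Python sketch below illustrates only that idea on toy random data. The function names, the four-stream setup, the iteration count, and the “identity plus noise” baseline are our own assumptions and are not DeepSeek’s code.

```python
import numpy as np


def sinkhorn_knopp(logits: np.ndarray, n_iters: int = 50) -> np.ndarray:
    """Turn an unconstrained square matrix into an (approximately) doubly
    stochastic one by alternately normalizing its rows and columns."""
    m = np.exp(logits - logits.max())  # strictly positive, overflow-safe
    for _ in range(n_iters):
        m = m / m.sum(axis=1, keepdims=True)  # each row sums to 1
        m = m / m.sum(axis=0, keepdims=True)  # each column sums to 1
    return m


def stream_norm_growth(depth: int, constrained: bool, n_streams: int = 4,
                       width: int = 8, seed: int = 0) -> float:
    """Mix n parallel residual streams through `depth` random mixing matrices
    and return how much their overall norm grew (final / initial)."""
    rng = np.random.default_rng(seed)
    streams = rng.normal(size=(n_streams, width))
    start = np.linalg.norm(streams)
    for _ in range(depth):
        raw = rng.normal(size=(n_streams, n_streams))
        # Constrained: project onto (approximately) doubly stochastic matrices.
        # Unconstrained toy baseline: identity plus a random perturbation.
        mixing = sinkhorn_knopp(raw) if constrained else np.eye(n_streams) + raw
        streams = mixing @ streams
    return float(np.linalg.norm(streams) / start)


if __name__ == "__main__":
    print("constrained growth:  ", stream_norm_growth(50, constrained=True))
    print("unconstrained growth:", stream_norm_growth(50, constrained=False))
```

A doubly stochastic matrix has a spectral norm of 1, so chaining such matrices cannot amplify the streams. The unconstrained matrices in the same loop typically let the norm grow by many orders of magnitude.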

🎓AI Academia

Emerging Debate Over AGI: Coordination Layer as Key to Unlocking True Potential of LLMs

A new perspective on the role of Large Language Models (LLMs) in Artificial General Intelligence (AGI) rejects the notion that LLMs are limited to pattern matching and are therefore irrelevant to AGI. Instead, it identifies the absence of a System-2 coordination layer as the primary bottleneck. Such a layer would select and constrain the patterns an LLM produces and bind them to external constraints. In effect, it would be a higher-level layer that manages reasoning and planning, which the authors liken to “baiting” and “filtering” in a fishing analogy. The study introduces a Multi-Agent Collaborative Intelligence (MACI) framework, an architectural approach for adding deliberate reasoning to LLMs through mechanisms such as semantic anchoring and transactional memory. The authors propose it as the necessary path toward AGI.

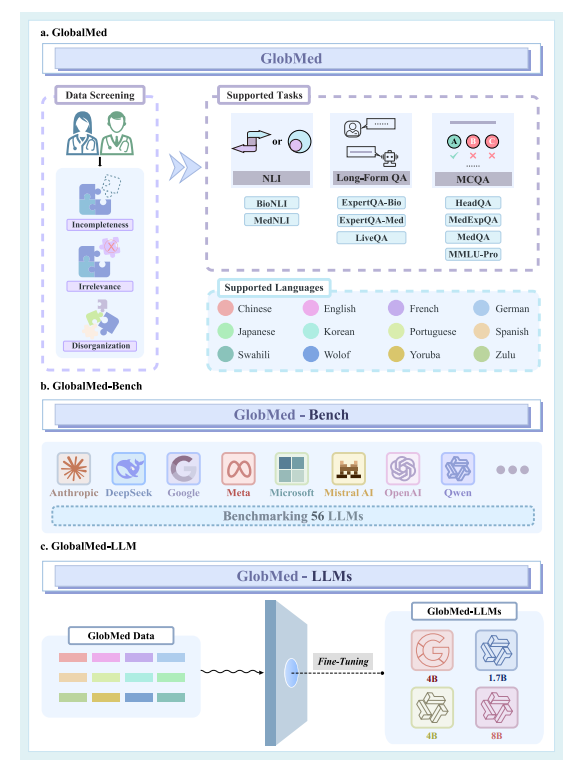

Toward Establishing Global Large Language Models in Medicine: A Comprehensive International Collaborative Effort

An international collaborative research effort has been launched to develop large language models (LLMs) tailored to medicine on a global scale. The team includes experts from institutions such as Duke-NUS Medical School and Harvard University. Their goal is to use these models to improve medical data analysis and decision-making. The initiative reflects a broader push to integrate advanced AI into healthcare to improve patient care and health outcomes worldwide. It also highlights the growing role of data science in medical research.

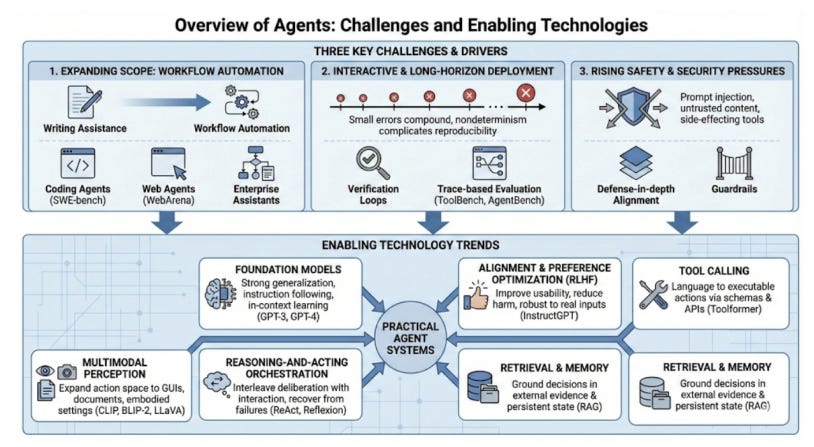

AI Agents Emerge as Crucial Bridges Between Language Intent and Real-World Computational Tasks

AI agents are systems that combine foundation models with reasoning, planning, and tool use, and they are becoming pivotal interfaces between natural-language commands and computational processes. A recent survey outlines agent architectures, covering deliberation, planning, and interaction. It organizes these systems in a taxonomy of components such as memory, policy cores, and tool routers. It also examines orchestration patterns (single- vs. multi-agent systems, and centralized vs. decentralized coordination) and the environments in which agents operate. The survey discusses key trade-offs, such as latency versus accuracy and autonomy versus controllability, and notes that randomness and environment variability complicate evaluation. It calls for better benchmarking practices and highlights several open challenges, including safeguarding tool actions and ensuring reproducible results.

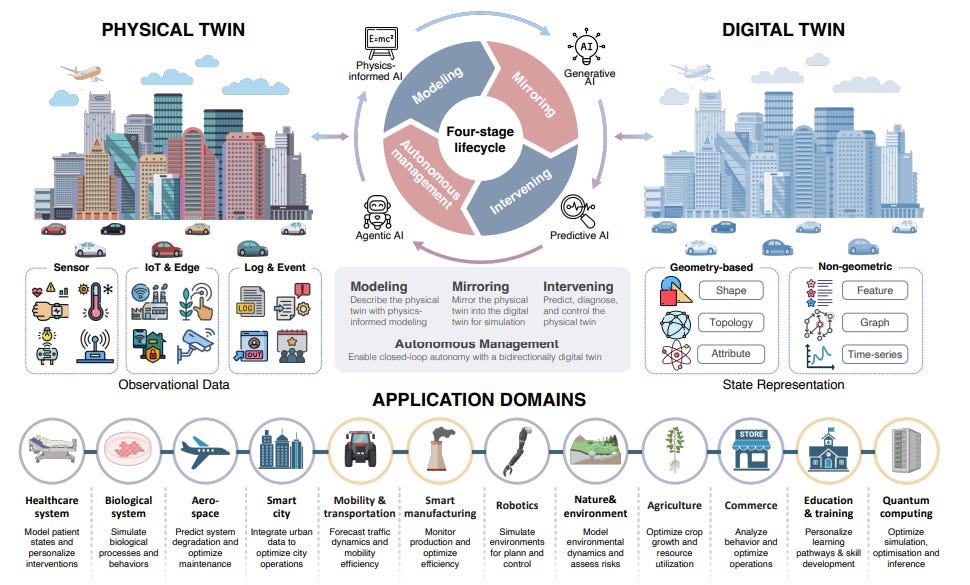
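The tiny Python sketch below makes the survey’s vocabulary concrete by illustrating three of the components it names:

- a policy core that decides which tool to call;
- a tool router that dispatches only to registered tools, which is one simple way to safeguard tool actions;
- a memory of past turns.

All class, function, and tool names here are hypothetical. The rule-based policy stands in for the foundation model a real agent would query. This is an illustration and not the survey’s reference design.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical tool signature: one string argument in, one string result out.
Tool = Callable[[str], str]


def add_numbers(arg: str) -> str:
    """Toy calculator tool: sums integers separated by '+'."""
    return str(sum(int(part) for part in arg.split("+")))


def shout(arg: str) -> str:
    """Toy text tool: upper-cases its input."""
    return arg.upper()


@dataclass
class Agent:
    tools: dict[str, Tool]  # registry used by the tool router (an allow-list)
    memory: list[tuple[str, str]] = field(default_factory=list)  # (request, result) turns

    def policy(self, request: str) -> tuple[str, str]:
        """Policy core stand-in. A real agent would ask a foundation model which
        tool to call; here a 'tool: argument' prefix decides."""
        name, _, arg = request.partition(":")
        return name.strip(), arg.strip()

    def route(self, name: str, arg: str) -> str:
        """Tool router: dispatch only to registered tools, refuse anything else."""
        tool = self.tools.get(name)
        if tool is None:
            return f"refused: unknown tool '{name}'"
        return tool(arg)

    def step(self, request: str) -> str:
        """One cycle: decide with the policy, act through the router, remember."""
        name, arg = self.policy(request)
        result = self.route(name, arg)
        self.memory.append((request, result))
        return result


if __name__ == "__main__":
    agent = Agent(tools={"calc": add_numbers, "shout": shout})
    for request in ["calc: 2 + 3", "shout: hello", "delete: all files"]:
        print(request, "->", agent.step(request))
```

Keeping the router as an explicit allow-list makes it a natural place for the safeguards the survey calls for, such as permission checks or logging, without touching the policy core.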

AI Integration in Digital Twins: Navigating Opportunities and Challenges Across Diverse Application Domains

Through AI integration, digital twins have evolved into autonomous digital representations of physical systems, according to a comprehensive study of the field. The research proposes a unified four-stage framework (modeling, mirroring, intervention, and autonomous management) to describe AI’s role across the digital twin lifecycle. It examines the shift from traditional numerical methods to AI-driven approaches such as large language models and generative world models, which strengthen digital twins’ prediction and optimization capabilities. The paper reviews applications in sectors including healthcare and aerospace and identifies challenges such as scalability, explainability, and trustworthiness. For future research and development, it emphasizes the need for more intelligent, interoperable, and ethically responsible digital twin ecosystems.
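The four stages can be illustrated with a toy thermal twin. In the Python sketch below, a simulated room stands in for the physical system, and the twin works through the stages as follows:

1. Modeling: it fits a simple heat-balance model from logged data.
2. Mirroring: it syncs its state to the latest sensor reading.
3. Intervention: it predicts the effect of candidate heater settings.
4. Autonomous management: it picks a setting automatically.

The room dynamics, parameter values, and class names are all our own assumptions. The least-squares fit is a simple stand-in for the AI-driven models the paper surveys.

```python
import numpy as np

T_OUT = 10.0  # assumed constant outdoor temperature (deg C)


def physical_room(temp: float, heater: float, a: float = 0.1, b: float = 2.0) -> float:
    """Hypothetical 'real' system: the room loses heat to the outdoors at rate a
    and gains b degrees per step at full heater power (heater in [0, 1])."""
    return temp + a * (T_OUT - temp) + b * heater


class RoomTwin:
    def __init__(self) -> None:
        self.a = self.b = 0.0  # model parameters, learned in fit()
        self.state = 0.0       # the twin's copy of the room temperature

    def fit(self, temps, heaters, next_temps) -> None:
        """Stage 1, modeling: estimate a and b by least squares from logs."""
        temps = np.asarray(temps, dtype=float)
        X = np.column_stack([T_OUT - temps, np.asarray(heaters, dtype=float)])
        y = np.asarray(next_temps, dtype=float) - temps
        (self.a, self.b), *_ = np.linalg.lstsq(X, y, rcond=None)

    def mirror(self, sensor_temp: float) -> None:
        """Stage 2, mirroring: sync the twin's state to the live sensor."""
        self.state = sensor_temp

    def predict(self, heater: float) -> float:
        """Stage 3, intervention: what-if prediction for one heater setting."""
        return self.state + self.a * (T_OUT - self.state) + self.b * heater

    def choose_heater(self, target: float) -> float:
        """Stage 4, autonomous management: pick the setting whose predicted
        next temperature is closest to the target."""
        options = np.linspace(0.0, 1.0, 11)
        errors = [abs(self.predict(h) - target) for h in options]
        return float(options[int(np.argmin(errors))])


def run_demo(target: float = 21.0, steps: int = 30):
    rng = np.random.default_rng(0)
    # Logged history from the physical system, used to fit the twin.
    temps = rng.uniform(12.0, 25.0, size=200)
    heaters = rng.uniform(0.0, 1.0, size=200)
    next_temps = [physical_room(t, h) for t, h in zip(temps, heaters)]

    twin = RoomTwin()
    twin.fit(temps, heaters, next_temps)

    temp = 15.0
    for _ in range(steps):
        twin.mirror(temp)                    # read the sensor
        heater = twin.choose_heater(target)  # decide on the twin
        temp = physical_room(temp, heater)   # act on the real system
    return twin, temp


if __name__ == "__main__":
    twin, final_temp = run_demo()
    print(f"learned a={twin.a:.3f}, b={twin.b:.3f}, final temp={final_temp:.2f}")
```

The heater settings are discrete, so the controlled temperature settles into a small oscillation around the target rather than hitting it exactly.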

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.