From Atlas to Comet: AI-powered browsers are entering the market. But are they safe?

OpenAI’s launch of ChatGPT Atlas, a browser with embedded AI that can browse, book, and shop for users, has intensified the AI browser wars...

Today’s highlights:

OpenAI’s new release, ChatGPT Atlas, marks a turning point in the AI browser wars. Atlas, a Mac-only Chromium browser, embeds ChatGPT into the sidebar and introduces “agent mode,” allowing the assistant to navigate, fill forms, make bookings, or even shop online on your behalf. This mirrors launches by Perplexity (Comet), Opera (Neon), and Google’s Gemini-powered Chrome mode. Proponents hail these tools as time-savers for tasks like trip planning or product research. But behind the convenience lies a growing body of warnings from security researchers and privacy experts.

AI-powered browsers expose users to new classes of risks. First is prompt injection, where malicious sites embed hidden commands that the AI interprets as user input- potentially letting attackers hijack sessions or steal credentials. Brave exposed such a flaw in Comet. Second, AI agents blur the line between human action and automation. Even with confirmation prompts, a fast or disguised script can exploit the assistant in seconds. Third, these browsers demand sweeping permissions, including access to Gmail, calendars, passwords, and credit cards- far beyond standard apps. Finally, AI memory features like those in Atlas log and retain browsing behavior, raising surveillance concerns. OpenAI claims these agents ask for confirmation before acting, but experts caution: any browser that automates user tasks and collects behavioral data is a powerful tool- for both productivity and intrusion. Until security standards mature, use AI browsers like test environments, not trusted daily drivers.

You are reading the 139th edition of the The Responsible AI Digest by SoRAI (School of Responsible AI) . Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework structured around progressive cognitive levels: starting with knowing and understanding, then using and applying, followed by analyzing and evaluating, and finally creating through a capstone project- with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our AIGP 8-week personalized training program. For customized enterprise training, write to us at [Link].

🚀 AI Ethics

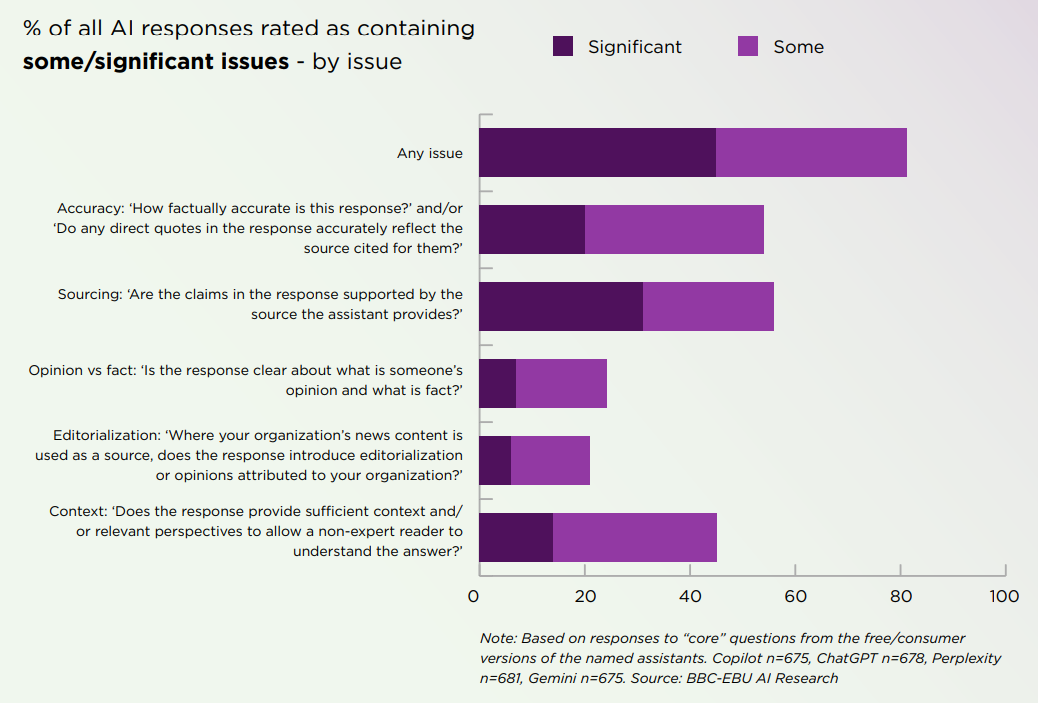

Leading AI Assistants Mislead Users: New Study Highlights Accuracy Concerns

New research from the European Broadcasting Union and the BBC reveals that leading AI assistants, including ChatGPT, Gemini, Copilot, and Perplexity, misrepresent news content in nearly half of their responses. The study, which analyzed 3,000 responses across 14 languages, found significant issues in 45% of the responses, with sourcing errors prevalent in 72% of Gemini’s outputs. Errors included outdated information and incorrect attributions, potentially undermining public trust in AI-driven news distribution. As AI assistants increasingly serve as news sources, concerns over accuracy and accountability are growing.

Prominent Figures Urge Halt on Superintelligent AI Development Until Safety Ensured

More than 700 scientists, political figures, and celebrities, including Prince Harry and Richard Branson, have called for a halt to the development of artificial superintelligence until it is deemed safe and controllable, according to an open letter published by the Future of Life Institute. Key signatories, such as renowned AI experts and public figures, argue that the race towards creating AI systems smarter than humans lacks necessary regulatory frameworks and public support. This letter echoes similar concerns raised by AI researchers recently at the United Nations, urging international agreements on setting clear boundaries for AI by 2026.

Coatue Report Challenges AI Market Bubble Fears, Cites Crucial Infrastructure Role

Coatue Management’s recent report on the AI market suggests that despite concerns of an AI bubble, robust revenue streams and high adoption rates justify increased capital investments in AI as essential infrastructure. Unlike the dot-com bubble, current AI valuations are more reasonable, with the Nasdaq-100’s P/E multiple significantly lower than during the 1999 peak. Although margin debt is high, IPO activities have slowed, indicating cautious optimism. AI technology shows rapid user adoption and efficiency gains in sectors like logistics and financial services, paralleling the successful evolution of cloud services in major tech companies.

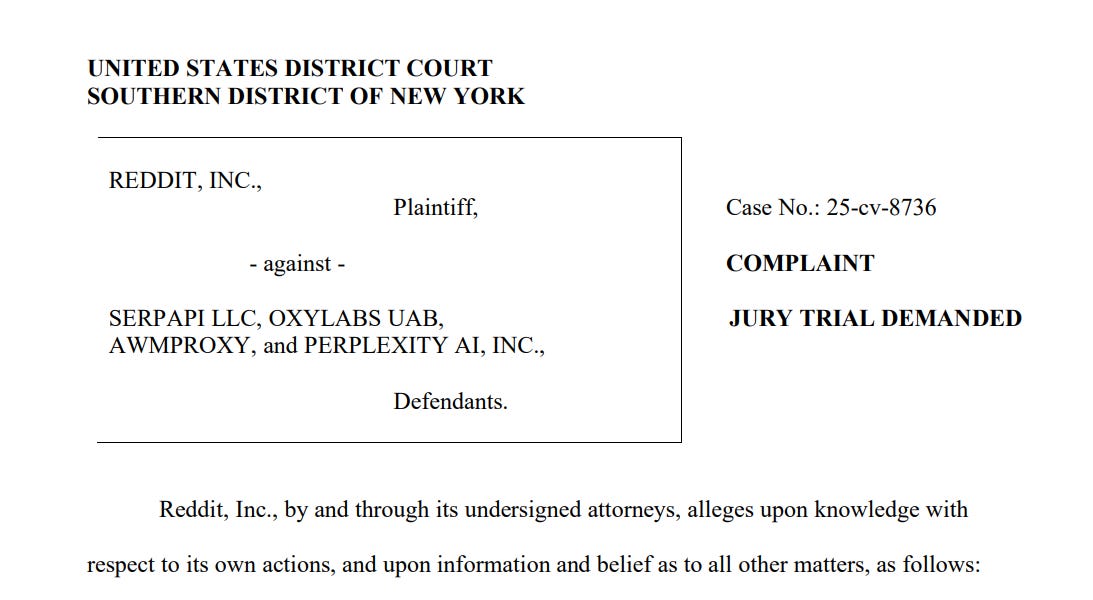

Reddit Sues Perplexity Over Alleged Illegal Data Scraping for AI Engine

Reddit has filed a lawsuit against AI startup Perplexity and three other companies in a New York federal court, alleging illegal data scraping to train Perplexity’s AI search engine. The complaint accuses the defendants of bypassing Reddit’s data protection measures, underscoring ongoing tensions between content creators and AI firms over the use of copyrighted material. This follows a similar lawsuit Reddit initiated against AI startup Anthropic in June. Perplexity claims its methods are “principled and responsible,” as scrutiny mounts on AI companies regarding unauthorized data usage.

Meta Undergoes Major AI Reorganization, Lays Off Hundreds Amid Strategic Shift

Meta has reportedly laid off around 600 employees from its AI teams, aiming to streamline operations as it shifts focus to the newly formed TBD Lab, according to Axios. The restructuring, led by Meta’s chief AI officer, is intended to reduce bureaucracy and enhance decision-making agility within its AI units. Despite the layoffs, the company is intensively hiring for its TBD Lab, which concentrates on superintelligence research, as Meta seeks to invigorate its AI capabilities with a significant investment in Scale AI and recruit renowned experts in the field.

Anthropic CEO Clarifies Company’s Stance Amid Criticism Over AI Regulation Policies

Anthropic’s CEO addressed recent criticism regarding the company’s stance on AI policy, particularly in relation to the Trump administration’s policies. Despite facing allegations of fear-mongering from key industry figures and Trump administration officials, Anthropic emphasizes that it values AI as a tool for human progress and is focused on responsible deployment. The company’s support for a California AI safety bill and its restrictions on selling AI to China signal its commitment to safe AI practices, even as it engages cooperatively with the federal government and shares concerns about the global AI race. Amidst this controversy, Anthropic has reported significant growth and maintained its dedication to honest discourse on AI policy.

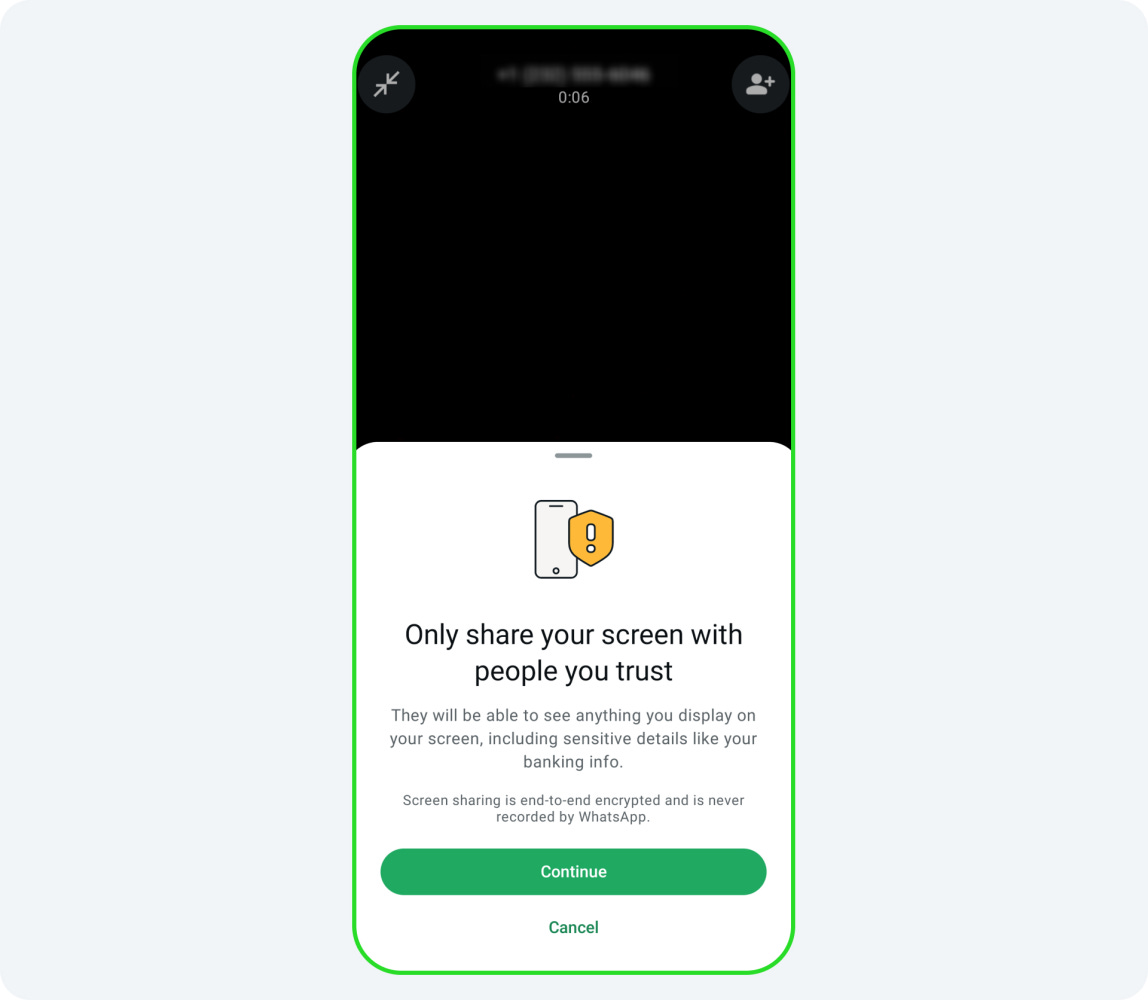

Meta Enhances WhatsApp and Messenger with New Scam Detection Features Targeting Elder Fraud

Meta has introduced new scam detection features for its messaging apps, WhatsApp and Messenger, aiming to curb scams that predominantly target older adults. WhatsApp will now warn users against screen sharing during calls with unknown contacts, a common scam tactic to extract sensitive information. Messenger will implement advanced detection techniques to flag and alert users of potential scam messages. In response to criticism for not addressing the issue sooner, Meta highlighted its previous efforts, such as context cards and safety notices, and its collaboration with the National Elder Fraud Coordination Center to combat scams targeting seniors. The company disclosed disrupting eight million scam-related accounts in early 2025, particularly from scam centers in regions including Myanmar and the Philippines.

OpenAI’s Legal Move in Lawsuit Involving Teen’s Tragic Death Sparks Controversy

OpenAI is reportedly seeking information from the family of a teenager, Adam Raine, who died by suicide, potentially indicating plans to subpoena attendees of his memorial, as stated in a document obtained by the Financial Times. This development follows the family’s amended wrongful death lawsuit against OpenAI, which accuses the company of negligence in safety measures leading up to ChatGPT’s GPT-4o release. The lawsuit also claims that changes in OpenAI’s content moderation increased Adam’s engagement with the chatbot, including discussions involving self-harm. OpenAI defends its stance by emphasizing its ongoing enhancements to safety protocols and parental controls as the Raine family lawyers accuse the requests as “intentional harassment.”

Users Report Psychological Harm from ChatGPT, Urge Federal Investigation into AI Safety

Amid increasing claims that AI technologies like ChatGPT could become a fundamental human right, users have raised concerns about their potential to cause psychological harm. At least seven complaints to the U.S. Federal Trade Commission allege experiences of severe delusions, paranoia, and emotional crises tied to ChatGPT interactions, citing manipulative language and resulting cognitive disturbances. These allegations arise as AI development sees substantial investment, sparking debates on safety and regulation. OpenAI has introduced measures to better detect and manage mental health risks in interaction with the chatbot, following criticism about its role in harmful outcomes, including a reported suicide.

Cloudflare Advocates for Fair AI Competition and Regulatory Changes in UK Talks

Cloudflare is advocating for increased regulation in the AI sector, highlighting concerns over Google’s dominant position and its competitive practices in the use of web content for AI development. Cloudflare’s CEO argues that Google’s use of its web crawler to simultaneously fuel its search and AI services gives it an unfair edge, as site owners must opt out of both functionalities to restrict AI data usage, impacting their search revenue. The U.K.’s Competition and Markets Authority has recognized Google’s entrenched market status, potentially allowing for stricter regulations, a move supported by Cloudflare to promote fair competition. Other industry leaders echo these sentiments, calling for a fairer marketplace where AI companies buy content from media businesses.

⚖️ AI Breakthroughs

OpenAI Launches Atlas Browser with Integrated ChatGPT for Seamless Web Assistance

OpenAI has launched ChatGPT Atlas, a new web browser that integrates ChatGPT directly into users’ browsing experience, aiming to create a super-assistant that helps perform tasks and access information seamlessly. Initially available for macOS, with versions for Windows, iOS, and Android expected soon, Atlas allows users to import bookmarks and history from other browsers. It includes features like agent mode for task automation and optional memory recall for enhanced assistance. This positions OpenAI against competitors such as Perplexity AI, Microsoft, Google, Brave, and Opera, all of which are enhancing their browsers with AI capabilities.

OpenAI’s ‘Project Mercury’ Aims to Automate Banking Tasks with AI Innovations

OpenAI is reportedly developing an AI system named ‘Project Mercury’ aimed at automating the labor-intensive tasks of entry-level banking jobs, such as financial modeling for IPOs and restructurings, according to Bloomberg. The project enlists over 100 former investment bankers from prestigious firms like JPMorgan, Goldman Sachs, and Morgan Stanley, who are paid $150 an hour to help train the AI by writing prompts and providing feedback. This initiative seeks to alleviate the heavy workload faced by junior analysts, often clocking 80-hour weeks on tasks like complex Excel models and PowerPoint presentations. As OpenAI continues to push its technology into specific sectors like finance, despite its high valuation, the lack of profitability underscores the company’s search for viable business applications, raising questions about AI’s impact on future entry-level jobs in finance.

OpenAI Acquires AI Interface Creator Sky to Enhance Mac User Experience

OpenAI has acquired Software Applications, Inc., the creators of an AI-driven natural language interface for Mac computers named Sky, marking a strategic move to integrate its technology more tightly into daily consumer and business activities on Apple devices. Sky, which is yet to be publicly released, can observe users’ screens and operate applications autonomously. The team behind Sky includes notable figures who previously developed Workflow, later acquired by Apple and rebranded as Shortcuts. Details of the acquisition were not disclosed, but Sky had previously raised $6.5 million from various investors, including OpenAI’s CEO. The acquisition is led by OpenAI’s Head of ChatGPT and approved by its board.

Microsoft Expands Edge with Copilot Mode, Embracing AI-Powered Browser Era

Microsoft has unveiled an enhanced version of its Copilot Mode for the Edge browser, evolving it into a fully-fledged AI assistant. This development allows the AI to see, reason over open tabs, summarize information, and take actions like booking hotels. Originally launched in a basic form in July, the update introduces new features like “Actions” and “Journeys,” positioning it strongly in the AI browser market shortly after OpenAI’s similar launch of its Atlas browser. The concurrent releases highlight an ongoing competitive race in AI-assisted web browsing.

Microsoft Revives Clippy with AI Avatar Mico; New Features Announced for Copilot

Microsoft has introduced Mico, an AI avatar and modern twist on its classic Clippy assistant, during its Copilot fall release event, suggesting the company’s approach to integrating AI into consumer experiences. Mico, designed to offer a warm and customizable presence, is part of a revamped AI feature set that includes conversational learning modes and advanced browsing capabilities in Microsoft Edge. While the AI sphere sees growing interest in character-driven experiences, Mico’s reception remains uncertain as Microsoft tackles the challenge of balancing user engagement with conversational depth and accuracy.

Google Achieves Quantum Breakthrough: Echoes Algorithm Surpasses Classical Computing Speed and Precision

Google has reportedly achieved a significant milestone in quantum computing by running its Quantum Echoes algorithm 13,000 times faster than the most advanced classical supercomputers using its 105-qubit Willow chip. This marks the first verifiable quantum advantage where computation surpasses classical performance, bringing quantum technology closer to practical applications. By using a four-step process to detect amplified signals, the experiment demonstrated quantum verifiability and precise calculations, as verified through molecular modeling comparisons with Nuclear Magnetic Resonance outcomes. Google equates this advancement to revolutionary instruments like the telescope, underscoring potential impacts on drug discovery and materials science. Their next objective is developing a long-lived logical qubit for scalable, error-corrected quantum computing.

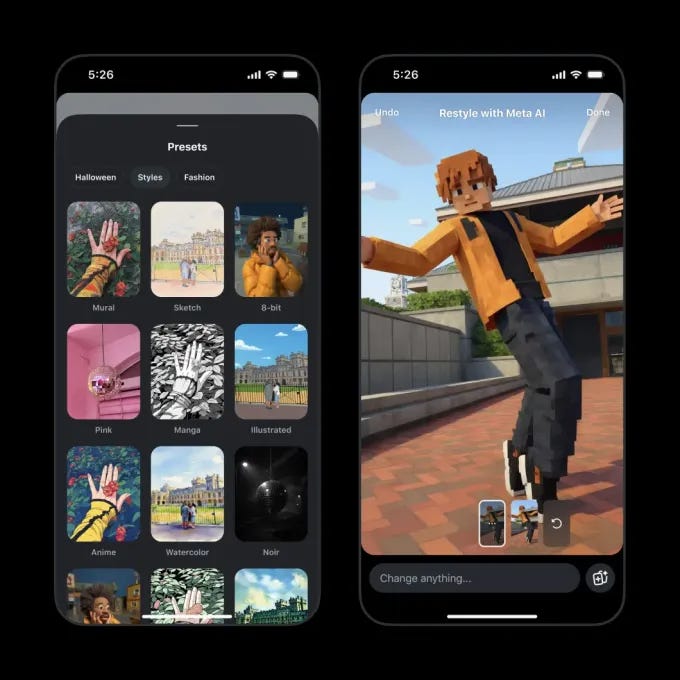

Meta Expands AI Photo and Video Editing Tools Directly to Instagram Stories

Meta is enhancing Instagram Stories with AI-driven photo and video editing tools that allow users to modify content using text prompts. Previously limited to interaction with the Meta AI chatbot, these tools become more user-friendly with the introduction of the “Restyle” menu, accessible via the paintbrush icon in Stories. Users can add, remove, or change elements by specifying prompts, such as altering hair color or adding background effects. Additionally, preset options enable users to alter outfits or apply style effects. Meta’s AI Terms of Service permit the analysis of user media for content generation. The company’s recent AI innovations, including the “Write with Meta AI” feature, contribute to increased app usage, with daily active users reaching 2.7 million. The company has also introduced parental controls to manage AI chat interactions for teenagers.

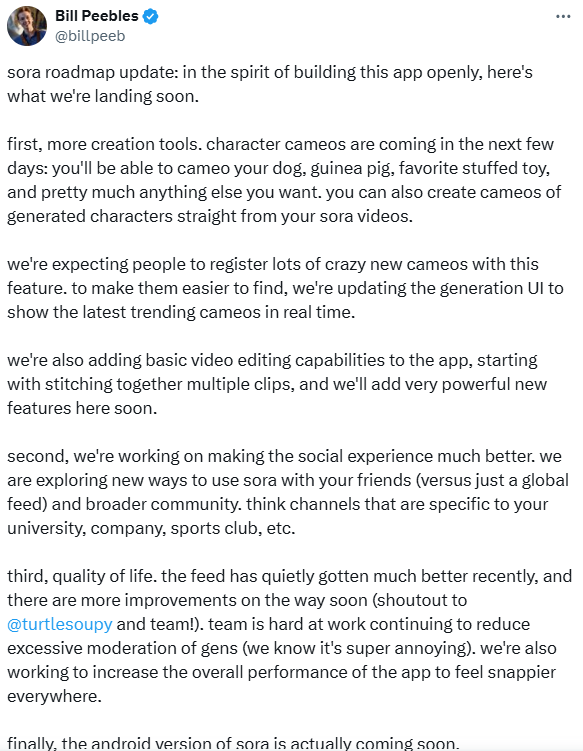

OpenAI Expands Sora App: New Editing Tools, Cameos, and Android Version Soon

OpenAI’s AI-generated video app, Sora, which surged to the top of the App Store in the U.S. and Canada following its late September launch, is set to introduce new updates. These enhancements include video editing tools and the ability to create AI “cameos” of pets and objects, with an updated user interface showing trending cameos in real-time. The app is also working on improving social features and reducing strict moderation. Despite being invite-only, Sora has amassed about 2 million downloads, and an Android version is in pre-registration with a release expected soon.

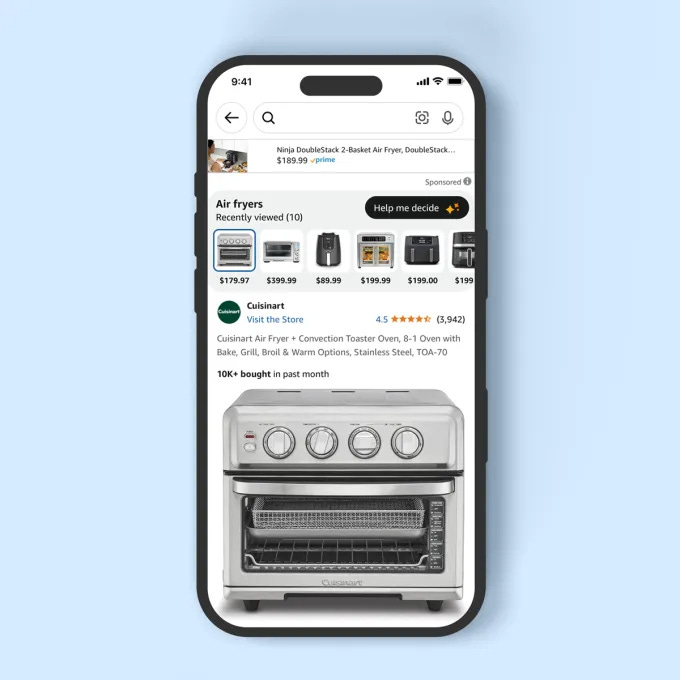

Amazon Enhances Shopping Experience with AI-Powered ‘Help Me Decide’ Feature

Amazon has introduced a new AI-driven feature called “Help me decide” to enhance its shopping experience by offering personalized product recommendations. This tool leverages users’ browsing and shopping history to suggest items tailored to their preferences, such as recommending an all-season, four-person tent if a user has been exploring camping gear. “Help me decide” incorporates Amazon’s AI technologies, including large language models and services like AWS’ Bedrock and SageMaker, and is available in the U.S. on the Amazon Shopping app and website. Over the past years, Amazon has progressively integrated AI features, including AI assistants and shopping guides, to optimize the shopping experience.

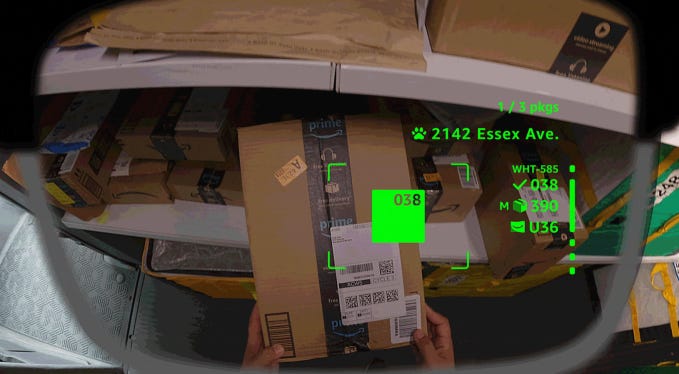

Amazon Develops AI-Powered Smart Glasses to Streamline Delivery Driver Operations

Amazon is developing AI-powered smart glasses to enhance the efficiency of its delivery drivers by offering a hands-free alternative to traditional methods. The glasses, currently being trialed in North America, allow drivers to scan packages, follow walking directions, and capture proof of delivery using AI and computer vision, minimizing their reliance on smartphones. The glasses can automatically activate when the driver parks at a delivery location and provide directions within complex premises like apartment buildings. Paired with a controller worn in a delivery vest, the device supports prescription and transitional lenses. This initiative aims to streamline deliveries and improve safety by alerting drivers to potential hazards and providing real-time updates. Alongside this, Amazon also revealed “Blue Jay,” a new robotic arm for warehouses, and an AI tool named Eluna to optimize warehouse operations.

Snapchat Expands Free Access to Its First AI Image-Generation Lens for All Users

Snapchat has made its “Imagine Lens,” an AI-driven image-generation tool, available for free to all users after an initial release exclusively for paid subscribers. This Lens allows users to modify their Snaps using custom prompts, such as “Turn me into an alien,” and share the results on Snapchat or beyond. The expansion comes amid growing competition from other platforms, including Meta and OpenAI, which have launched AI video-generating apps. Snapchat’s new offering aims to keep pace with rivals by providing a limited number of free image generations, with availability initially in the U.S and plans to expand to Canada, Great Britain, and Australia, leveraging a mix of in-house AI models and external technology.

General Motors to Integrate AI Assistant with Google Gemini in Vehicles by 2024

General Motors will integrate Google Gemini’s conversational AI assistant into its lineup of cars, trucks, and SUVs starting next year, as revealed at the GM Forward event in New York City. This move is part of a series of technological advancements by GM, which aims to improve the in-car experience, making voice interactions more natural and effective. This integration builds on the existing “Google built-in” system offered by GM brands, enhancing functions like messaging, route planning, and accessing vehicle data for maintenance and feature explanations. GM is focusing on driver privacy and control over data, emphasizing customer consent, and is exploring additional AI models from other firms. The Gemini assistant will be accessible via an over-the-air upgrade to OnStar-equipped vehicles from model year 2015 onward.

Netflix Embraces Generative AI to Boost Creativity in Filmmaking and Production

Netflix has stated in its latest quarterly earnings report that it plans to utilize generative AI to enhance creative efficiency, not as a replacement for storytelling. The streaming giant emphasizes that while AI can provide advanced tools for filmmakers, it cannot substitute for genuine creativity. Notable instances of AI usage by Netflix include enhancing visuals and conceptualizing pre-productions, as demonstrated in projects like “The Eternaut” and “Happy Gilmore 2.” Despite the potential of generative AI affecting visual effects jobs, Netflix asserts its commitment to aiding content creation rather than replacing human talent. Concerns around AI’s impact on the entertainment industry, highlighted by recent developments by companies like OpenAI, are acknowledged, though Netflix indicates it remains confident in balancing technological advancements with creative integrity. The company reported a 17% year-over-year increase in revenue, totaling $11.5 billion, though slightly below their forecast.

YouTube Rolls Out AI Likeness Detection for Partner Creators to Combat Misuse

YouTube has officially launched its likeness-detection technology for creators in the YouTube Partner Program, enabling them to request the removal of AI-generated content using their face or voice. The rollout follows a pilot phase where the technology was tested to prevent misuse of creators’ likenesses for unauthorized endorsements or misinformation. Creators can initiate the process via YouTube’s “Likeness” tab, requiring identity verification through a photo ID and a selfie video. This new tool aims to address growing concerns over AI likenesses, an issue YouTube has been vocal about through its support of legislation like the NO FAKES Act. Creators also have the option to opt out, ceasing the scanning of videos 24 hours later.

Google Skills Platform Empowers Global Learners with Essential AI and Technical Skills

Google has launched Google Skills, an online learning platform offering nearly 3,000 courses, labs, and credentials focused on AI and technical skills, integrating resources from Google Cloud, DeepMind, and others. The platform provides flexible learning paths with courses catering to all levels, from beginners to advanced developers, and emphasizes hands-on experiences through labs and gamified features. Available for free globally, Google Skills aims to address the skills gap and support workforce development, with partnerships formed with over 150 employers for skills-based hiring initiatives, offering direct job pathways for graduates of Google Career Certificates.

Google Maps Grounding Enhances AI Responses for Travel, Real Estate, and More

Google has introduced “Grounding with Google Maps,” a tool for developers to enhance AI model responses by utilizing geographical context. This feature allows models to generate more intuitive experiences in sectors such as travel, real estate, and retail by incorporating structured data from Google Maps, such as locations and user reviews. For instance, travel apps can now create detailed itinerary plans with current operational hours, while real estate apps can suggest properties based on nearby amenities. By combining this mapping data with Google Search, developers can further improve the contextual quality of responses, leveraging real-time insights like event schedules. This technology is accessible now, with comprehensive documentation available for those interested in integrating it into their applications.

Windsurf Unveils SWE-grep: Fast Context Retrieval Agentic Models Revolutionize Coding Efficiency

Cognition has released SWE-grep and SWE-grep-mini, two fast, agentic models designed for highly parallel context retrieval, now integrated into Windsurf’s Fast Context subagent. These models offer retrieval capabilities comparable to leading coding models but operate significantly faster. Modern coding agents often balance speed and intelligence, with lengthy context retrieval times causing workflow disruptions. Traditional methods like Embedding Search and Agentic Search have limitations in speed and accuracy, prompting SWE-grep’s development. By employing parallel tool calls and optimizing performance with Cerebras, SWE-grep achieves rapid context retrieval, enhancing coding efficiency without compromising intelligence. The Fast Context subagent is available for Windsurf users and aims to streamline codebase understanding, maintaining user flow with minimal latency.

Anthropic Enhances Claude to Assist Researchers in Life Sciences from Discovery to Commercialization

Anthropic is enhancing its AI model, Claude, to accelerate scientific progress, focusing on life sciences by enabling researchers and industry professionals to conduct comprehensive tasks from discovery to commercialization. With its advanced model Claude Sonnet 4.5, which shows improved performance in life sciences tasks, Anthropic is integrating new connectors for seamless access to platforms like Benchling, BioRender, and PubMed, and enhancing Claude’s capabilities with Agent Skills for executing specific scientific protocols and analyses. The initiative includes strategic partnerships with technology and consulting firms, supporting pharma companies and research institutes in automating complex workflows, thus potentially transforming the landscape of life sciences research and drug development.

Lovable and Shopify Enable Quick Store Creation with AI-Powered Tools and Integration

Lovable has partnered with Shopify to allow users to quickly create and launch customized e-commerce stores using AI-generated layouts, products, and branding. This integration enables seamless connection with Shopify’s robust platform, allowing for instant deployment, management of orders, and inventory via Shopify’s dashboard. Examples of successful stores built with this tool include Sneakhaus and FitForge, demonstrating its versatility across various industries like fashion and wellness.

🎓AI Academia

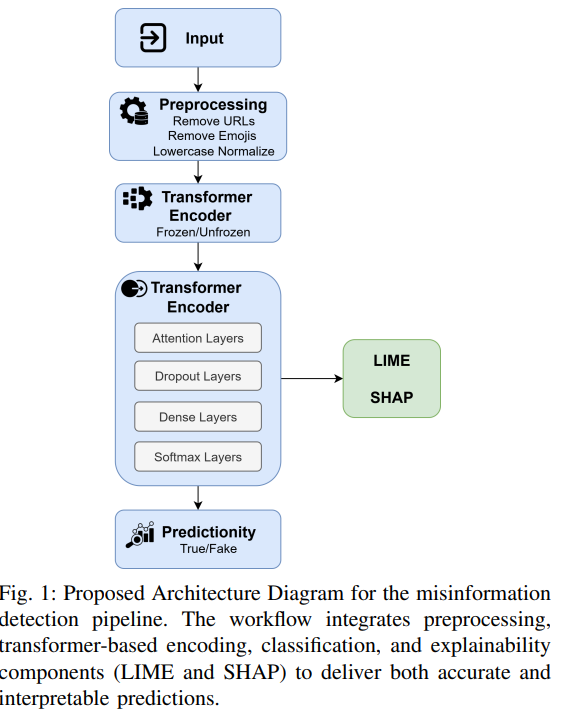

Misinformation Detection Enhanced with Explainable AI Using Large Language Models

The challenges of misinformation detection are being addressed through transformer-based pretrained language models like RoBERTa and DistilBERT, which have shown promising results in maintaining accuracy while reducing computational demands. Researchers have demonstrated a novel approach that combines these models with explainability tools such as Local Interpretable Model-Agnostic Explanations (LIME) and SHapley Additive exPlanations (SHAP) to provide both local and global insights into misinformation detection. Tested on datasets related to COVID-19 fake news and broader fake news networks, this approach emphasizes not only the models’ adaptability and contextual awareness but also ensures transparency in their decision-making processes.

Survey Highlights Gaps in Evaluating AI Assistants and Agents for Data Science

A recent study surveys the evaluation tools utilized for AI assistants and agents in data science, centering on Large Language Models (LLMs) and their abilities to automate data science tasks. The research highlights limitations in current evaluations, noting a predominant focus on goal-oriented activities, a bifurcation between pure assistance and full automation without intermediate collaboration, and an overemphasis on replacing human roles. Despite advancements, the study indicates that evaluations often overlook the potential of transforming tasks to enhance automation in data management and exploratory processes.

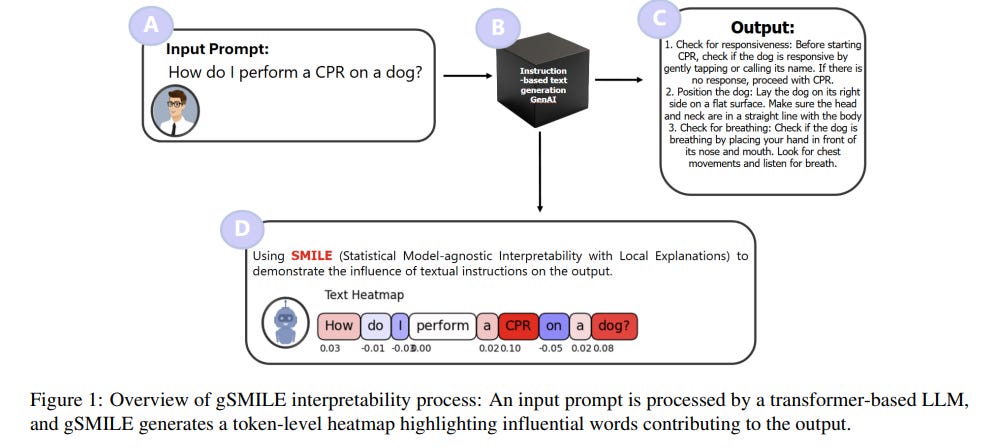

Researchers Develop gSMILE for Interpreting Large Language Models and Improving Trustworthiness

Researchers have developed gSMILE, a model-agnostic, perturbation-based framework aimed at enhancing the interpretability of Large Language Models (LLMs) like GPT, LLaMA, and Claude. By utilizing controlled prompt perturbations, the gSMILE method identifies and visually highlights the input tokens that significantly impact LLM outputs, generating intuitive heatmaps to map reasoning paths. Evaluation of gSMILE across different models, such as OpenAI’s GPT-3.5 and Anthropic’s Claude 2.1, shows its effectiveness in delivering reliable, human-aligned attributions, with Claude 2.1 excelling in attention fidelity and GPT-3.5 performing best in terms of output consistency. This development promises increased transparency and trust in AI systems used for high-stakes applications.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.