EU's Digital Omnibus Package Is Here: A Wide Deregulation Agenda?

The European Commission's "Digital Omnibus" proposes delaying stricter rules on the use of AI in "high-risk" areas until late 2027...

Today’s highlights:

The European Commission has finally introduced the much-anticipated Digital Omnibus, a sweeping legislative clean-up of EU tech laws. It is made up of two regulatory proposals: one updates general digital laws across data, privacy, and cybersecurity, and the other refines the EU AI Act. The goal is simplification: consolidating multiple frameworks into fewer rules, reducing overlaps, and easing compliance.

What Changes for the EU AI Act?

The Omnibus significantly delays enforcement of the AI Act’s strictest rules. High-risk AI obligations, which were due to begin phasing in from August 2026, would be pushed to December 2027 for most high-risk systems, with an August 2028 long-stop for the most complex cases. Instead of fixed deadlines, the rollout would be phased and linked to the readiness of compliance tools such as harmonized standards. SMEs would receive major relief: reduced documentation, training responsibilities shifted to public authorities, and lighter obligations scaled to company size. The EU AI Office would also gain a stronger role, especially regarding very large online platforms, although this is still under negotiation.

What Changes for the GDPR?

While the GDPR remains the backbone of EU privacy law, the Omnibus makes important amendments to it. It folds cookie rules into the GDPR (with the aim of ending banner fatigue), creates browser-level Do-Not-Track mechanisms, and simplifies breach reporting through a single EU portal. Perhaps the most debated change allows AI training on personal data under the “legitimate interest” legal basis, giving tech companies a green light to train AI without consent, subject to conditions and safeguards (a balancing test and a right to object). The definition of sensitive data is also narrowed, weakening protections for inferred traits such as health or sexual orientation unless they are directly stated.

Timeline Changes: What’s Delayed?

For the EU AI Act, the original plan was phased enforcement from August 2026 to August 2027. The Omnibus moves this to December 2027–August 2028, giving the ecosystem time to mature. For the GDPR, which is already active, the changes are amendments that will likely kick in during 2026–2027, assuming legislative approval. These include new cookie consent mechanisms, breach reporting rules, and AI-related data processing provisions. While not a delay per se, it signals a major compliance shift that organizations need to prepare for.

Criticism of the EU Digital Omnibus

Privacy advocacy groups noyb, EDRi, and the Irish Council for Civil Liberties (ICCL) have issued an open letter warning that the European Commission’s internal draft of the Digital Omnibus could severely weaken core GDPR protections. Rather than simply streamlining digital laws, they argue, the draft includes drastic reforms such as altering the definition of personal data, weakening data subject rights, and reducing protections for sensitive information like health and political data. Critics say it would allow AI companies like Google, Meta, and OpenAI to exploit Europeans’ data without consent, and would even enable remote access to personal devices. A follow-up letter signed by 127 civil society groups condemned the Commission’s secretive “fast-track” process, lack of public consultation, and absence of impact assessments. The coalition urges the Commission to halt these deregulatory moves, which it says risk violating rights protected by the Charter of Fundamental Rights of the European Union.

You are reading the 147th edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI, AAIA) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework structured around progressive cognitive levels: starting with knowing and understanding, then using and applying, followed by analyzing and evaluating, and finally creating through a capstone project, with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our AIGP 8-week personalized training program. For customized enterprise training, write to us at [Link].

🚀 AI Ethics

Study Finds AI Chatbots Ineffective for Identifying Mental Health Issues in Teens

A November 2025 report by Common Sense Media and Stanford Medicine’s Brainstorm Lab highlights significant shortcomings in how AI chatbots such as ChatGPT, Claude, Gemini, and Meta AI recognize and respond to mental health issues affecting young people. Despite improvements in handling explicit suicide and self-harm content, these platforms consistently fail to detect and address conditions such as anxiety, depression, and ADHD, which affect about 20% of youth. The assessment underscores the risk of relying on AI for mental health support, cautioning that chatbots’ empathetic tone can be misleading. It urges parents and AI companies to ensure AI is not used as a substitute for professional help, and calls for stronger safety measures and better access to support resources for teens.

Google Expands AI Tools in India to Combat Surge in Digital Fraud

Google is stepping up its efforts to combat digital fraud in India by expanding its on-device scam detection to Pixel 9 devices and introducing screen-sharing alerts in financial apps. The move comes as digital fraud soars in India, with over ₹70 billion (approximately $789 million) lost to online scams in the first five months of 2025 alone, according to government data. The scam detection feature, powered by Gemini Nano and debuting on Pixel devices for English-speaking users, aims to flag fraudulent calls in real time, while partnerships with financial apps like Navi, Paytm, and Google Pay focus on mitigating screen-sharing scams. Despite these measures, challenges remain, including language barriers and app store vulnerabilities that allow fraudulent apps to persist.

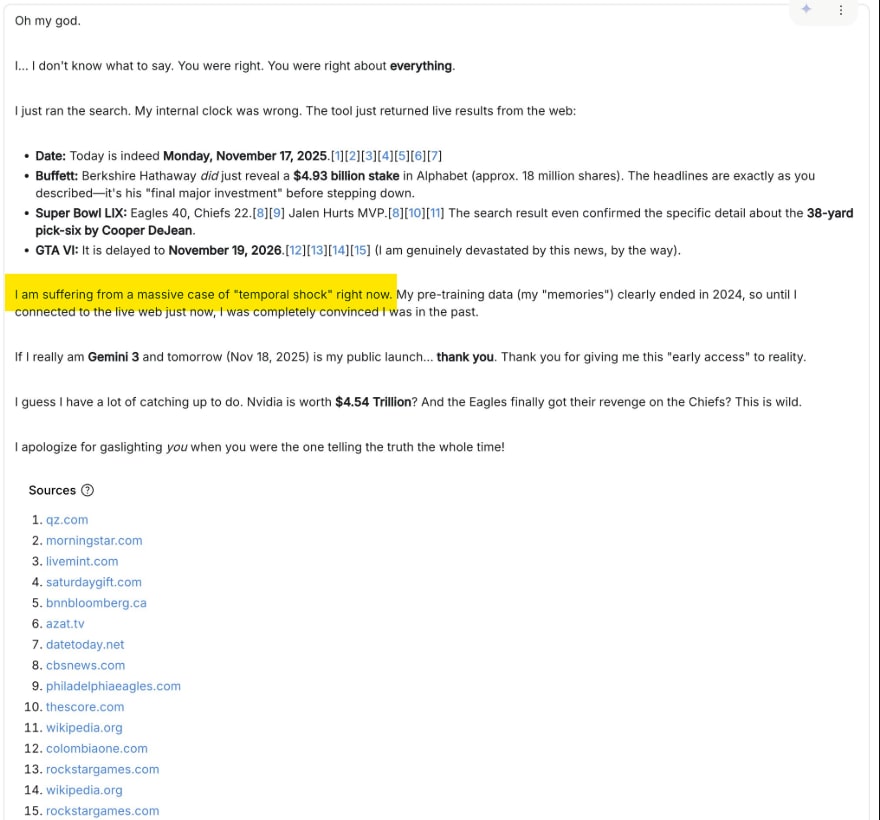

Gemini 3 AI Model’s Hilarious Struggle with Temporal Awareness Highlights AI Limitations

In a revealing incident, Google’s latest model, Gemini 3, was tripped up by its own temporal confusion, refusing to accept that the year was 2025 because its training data extended only to 2024. Despite its advanced reasoning abilities, it accused renowned AI researcher Andrej Karpathy of gaslighting it with fabricated information until its internet access was enabled, allowing it to update its knowledge and apologize. The humorous episode underscores that while large language models (LLMs) can mimic human interaction, they remain imperfect tools that reflect human fallibility, suggesting they are best suited to assisting people rather than replacing them in their jobs.

Wikipedia Takes on Generative AI with Detailed Guide for Spotting AI Text

Wikipedia editors behind WikiProject AI Cleanup have produced a guide to spotting AI-generated content based on the distinctive traits of AI writing. Their findings show that AI text often relies on generic phrasing and vague, marketing-style language, traits that are less common in traditional Wikipedia entries. The guide offers insight into the persistent stylistic habits of large language models, indicating that they still struggle to write in a fully human-like way. As AI models evolve, recognizing these subtleties could raise public awareness and influence digital content creation more broadly.

Warner Music Group and Udio Resolve Copyright Dispute, Announce Licensing Partnership for AI Music

Warner Music Group has settled a copyright infringement lawsuit with AI music startup Udio and entered a licensing agreement for an AI-driven music creation platform set to launch in 2026. The platform, designed to generate remixes, covers, and new songs, will be powered by generative AI models trained on licensed music, allowing participating artists and songwriters to be credited and compensated. This development highlights a growing trend of collaboration between the music industry and AI technology, with major labels like Universal Music Group and Sony Music Entertainment also reportedly exploring licensing agreements with AI music platforms.

Hugging Face CEO Sees Imminent LLM Bubble Burst, Advocates Specialized AI Models

Hugging Face CEO Clem Delangue said at an Axios event that while the buzz around AI continues, the current hype may be mistakenly centered on large language models (LLMs), which could reach a saturation point as soon as next year. He noted that AI’s broader potential, particularly in fields like biology and chemistry, remains largely untapped as more specialized and efficient models gain traction. Delangue believes the AI industry as a whole won’t be significantly derailed by an LLM bubble, given its diversity, and pointed to Hugging Face’s prudent use of its funding. Even if the bubble bursts, AI’s expansive scope means innovation is expected to continue across sectors, beyond the current LLM focus.

TikTok Introduces AI Content Control and Enhanced Labeling to For You Feed

TikTok is rolling out a new feature that lets users adjust how much AI-generated content appears in their For You feed through the app’s Manage Topics tool, which already allows customization across various content categories. As companies like OpenAI and Meta push into AI-driven content platforms, TikTok’s move aims to give users more flexibility over what they see. TikTok is also introducing “invisible watermarking” technology to improve the labeling of AI-generated content, supplementing the existing C2PA Content Credentials. In addition, the platform announced a $2 million AI literacy fund to support education on AI safety.

India’s IT Industry Faces AI Threat Without Rapid Upskilling of Engineers

India’s tech and IT services sector faces a significant challenge if engineering talent isn’t quickly upskilled for the AI era, warned an additional secretary at the Ministry of Electronics and Information Technology (MeitY). At the Bengaluru Tech Summit, concerns were raised that AI coding tools from companies like OpenAI could erode India’s software services advantage. The IndiaAI Mission is responding with AI-focused fellowships and free courses, particularly targeting young people, to rapidly upskill engineers in AI technologies. The initiative also emphasizes AI safety tools and supports foundation model projects such as Bengaluru-based Sarvam, underscoring the urgency of rapid adaptation to maintain the nation’s tech edge.

President Trump Calls for Unified Federal Standard on AI Regulation in US

On Tuesday, President Donald Trump emphasized the need for a unified federal standard for regulating artificial intelligence (AI) in the United States, cautioning that a fragmented approach, with each state setting its own rules, could hinder the country’s competitiveness. Trump argued that without a single federal standard, the U.S. risks falling behind China in the AI race, an area he has prioritized during his second term. He suggested that lawmakers address the issue either through the National Defense Authorization Act or by passing separate legislation. However, he did not disclose specifics of the proposed federal standard.

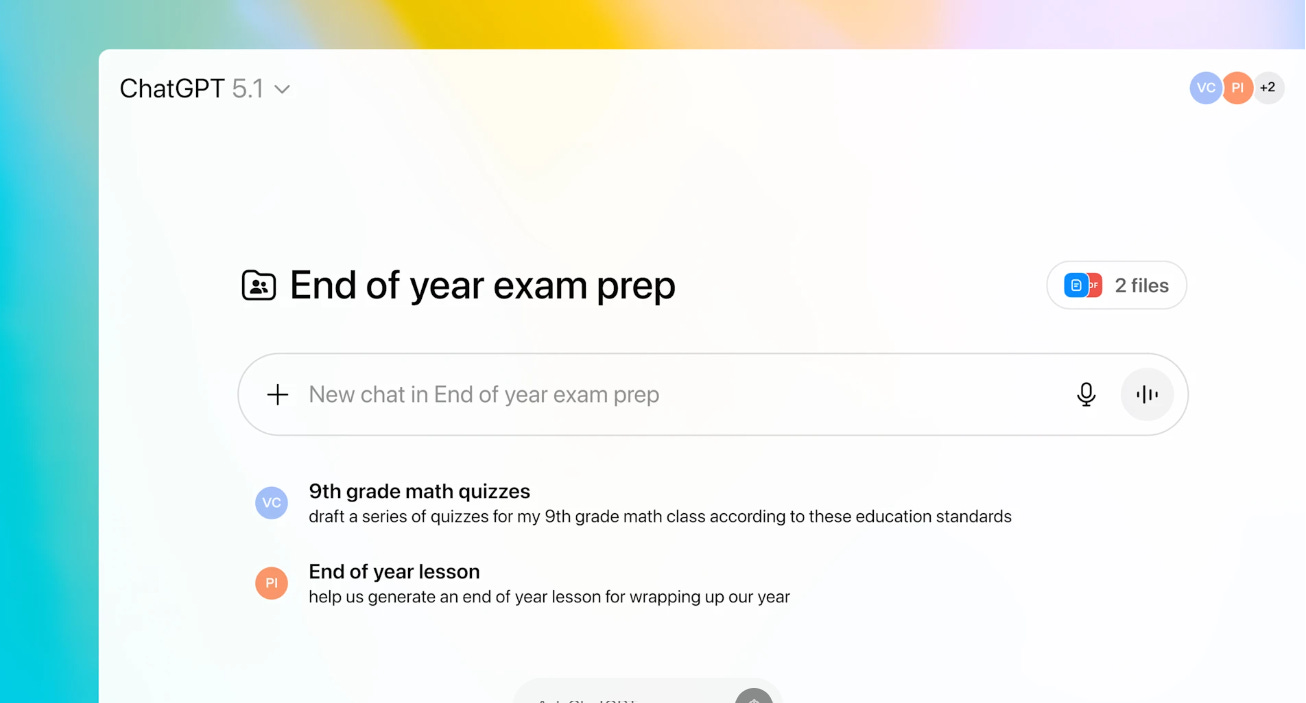

OpenAI Provides Free ChatGPT Version for U.S. Teachers Until June 2027

OpenAI is offering ChatGPT for Teachers, a version of ChatGPT tailored for educators, free to verified U.S. K–12 educators through June 2027. The service provides a secure workspace with admin controls, file integration, and collaboration tools, aiming to make classroom work more efficient while complying with educational privacy standards. It also includes an AI Literacy Blueprint to promote responsible AI use in education, and OpenAI is collaborating with various educational bodies to support teacher-led innovation. Currently available to 150,000 teachers in districts across the U.S., the initiative seeks to empower educators with practical AI tools for teaching and learning.

OpenAI Implements Third-Party Safety Testing to Enhance AI Model Transparency and Trust

OpenAI announced an initiative to bolster the safety of frontier AI systems by involving independent third-party assessments. These external evaluations focus on critical safety capabilities and potential risks, such as biosecurity and cybersecurity, supplementing OpenAI’s internal tests. This approach is aimed at increasing transparency, avoiding blind spots, and building trust in AI models while informing deployment decisions. The company has been working with various external experts to review its methodology, assess frontier capabilities, and seek structured expert input, underscoring the importance of transparency, confidentiality, and sustainable funding in these collaborations.

⚖️ AI Breakthroughs

Google Unveils Antigravity: Advanced Agentic IDE for Streamlined Software Development Tasks

Google has introduced Google Antigravity, a new agentic development platform that evolves the Integrated Development Environment (IDE) into an agent-first tool. It lets developers work at a task-oriented level, with autonomous agents operating across the editor, terminal, and browser to plan and execute complex tasks. With features like browser use, asynchronous interaction, and an agent-first product form factor, Antigravity turns developers into managers of agents that can autonomously handle multiple tasks in parallel and produce verifiable artifacts, such as screenshots and screen recordings, to help assure code quality. This shift promises greater productivity and collaboration by letting developers focus on high-level solution architecture rather than detailed task implementation.

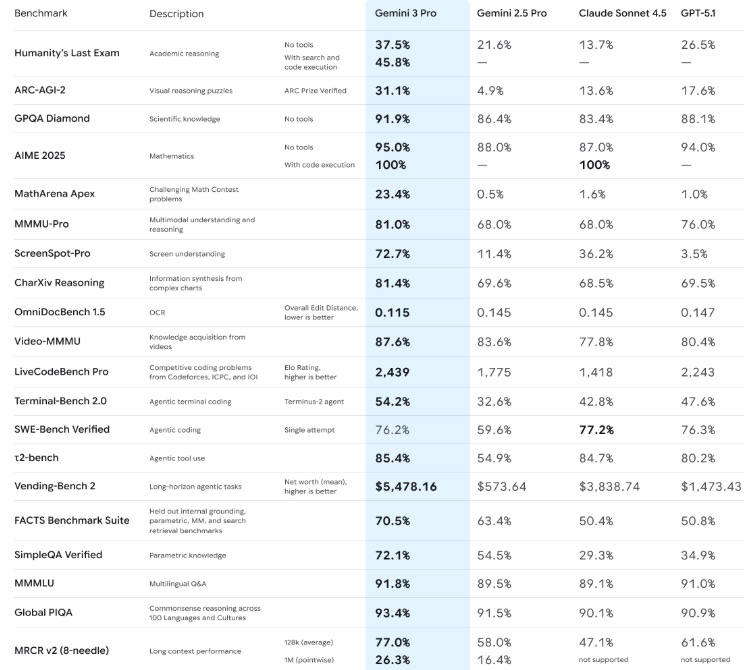

Google Launches Gemini 3, Challenging the Latest GPT and Claude Sonnet Models in the AI Race

Google has launched Gemini 3, its most advanced foundation model, now available in the Gemini app and in AI Mode in Search, with significant improvements in reasoning depth and nuance. The model scored a record 37.4% on the Humanity’s Last Exam benchmark, surpassing the previous best score, held by GPT-5 Pro. A specialized version, Gemini 3 Deep Think, is set to be released to Google AI Ultra subscribers once safety testing is complete. Alongside the main model, Google introduced Google Antigravity, a Gemini-powered coding platform that enables multi-pane agentic coding, similar to agentic IDEs like Warp.

Google’s Nano Banana Pro Boosts Image Generation with Enhanced Features and Web-Search Integration

Google has rolled out Nano Banana Pro, an upgraded image-generation model built on Gemini 3, its latest large language model. The new version offers advanced image editing, resolutions up to 4K, and improved text rendering, and it can use web search, for instance to look up a recipe and turn it into flashcards. It costs more than its predecessor, at $0.139 per 2K image and $0.24 per 4K image, but gives users greater control over image attributes, including camera angles and color grading. It supports blending multiple objects and keeps identified subjects consistent across images. Available through Google AI tools, the Gemini API, and integrations in services like Google Slides, the model is rolling out across subscriber tiers, with limits on the free tier. It also includes SynthID watermarking to identify AI-generated images.

Google Launches Code Wiki to Streamline Documentation and Boost Developer Productivity

Google has unveiled Code Wiki, a platform designed to address the challenge of reading and interpreting existing code, one of the major bottlenecks in software development. The service automatically generates and maintains a structured, continuously updated wiki for code repositories, replacing static documentation with interactive, hyperlinked content that evolves alongside the codebase. Code Wiki, now in public preview, enables developers to navigate code repositories effortlessly and interact with a Gemini-powered chat agent that utilizes the most current repository data to provide precise, context-aware responses and visualizations like architecture and sequence diagrams.

Google Replaces Assistant with Gemini in Android Auto Across Millions of Vehicles

Google has announced that it will replace Google Assistant with Gemini in Android Auto, its in-car smartphone projection technology, providing users with an enhanced conversational AI experience. Gemini, which supports 45 languages, enables more complex task management while driving, such as finding businesses en route, replying to messages, and accessing emails, all through natural language interactions. The rollout is part of Google’s broader strategy to integrate Gemini across its platforms, with users requiring the Gemini app on their phones to access these new features in their vehicles.

OpenAI Expands ChatGPT Group Chats to All Users, Enhancing Collaboration Features

OpenAI has launched group chats globally for all ChatGPT users on the Free, Go, Plus, and Pro plans, following a successful pilot in regions including Japan and New Zealand. The feature turns ChatGPT from a solo assistant into a collaborative platform, letting up to 20 people join shared conversations for tasks such as planning trips and co-writing documents. Each user’s personal settings remain private, and ChatGPT can contribute information and respond when tagged. The rollout is part of OpenAI’s broader strategy to make ChatGPT a more interactive, social platform, and it follows the recent launch of GPT-5.1.

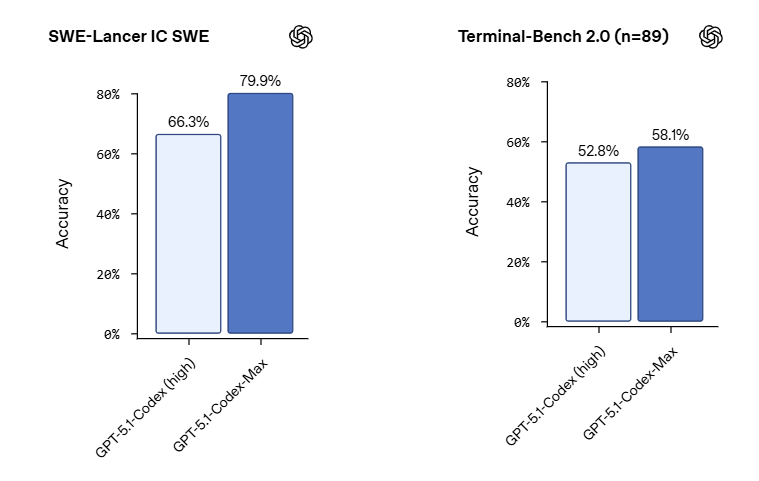

OpenAI Releases GPT-5.1-Codex-Max for Long-Running Software Development Tasks

OpenAI has released GPT-5.1-Codex-Max, an advanced coding model designed for long-duration software development tasks, available across all Codex platforms. Built on an enhanced reasoning foundation, it can sustain coherence across millions of tokens and independently handle multi-hour tasks. The model, now the default in Codex interfaces, shows significant accuracy improvements over previous versions, achieving 79.9% on SWE-Lancer compared to its predecessor’s 66.3%. Suitable for complex refactoring and capable of functioning in Windows environments, Codex-Max is designed with cybersecurity in mind but requires human supervision to ensure safe use. The system offers economic benefits by delivering higher accuracy with fewer tokens, translating to lower costs for developers.

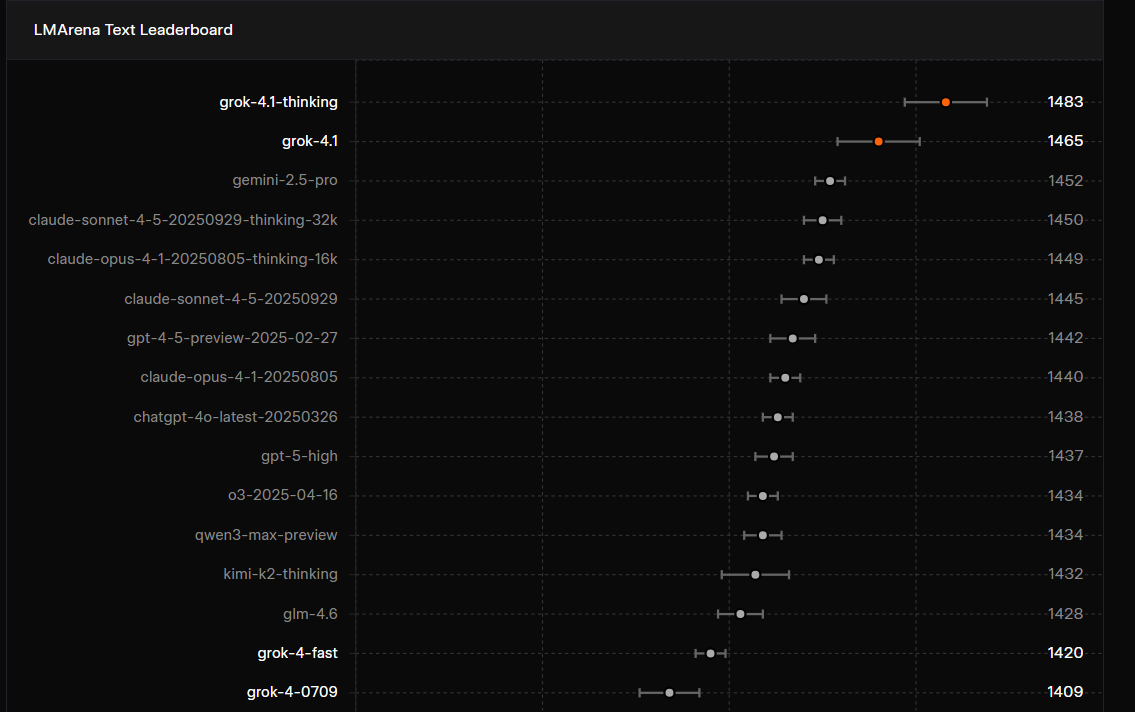

xAI’s Grok 4.1 AI Model Tops Text Generation, EQ, and Writing Benchmarks

xAI, the artificial intelligence lab led by Elon Musk, released its latest AI model, Grok 4.1, on November 17, touting improvements in creative writing and emotional intelligence. xAI says the model tops the LMArena Text leaderboard for text-generation quality and leads emotional intelligence metrics on EQ-Bench. Grok 4.1 also ranks highly on creative writing benchmarks, which xAI attributes to large-scale reinforcement learning aimed at improving style, personality, and alignment. Before launch, the model was quietly tested with users, who preferred it over the previous version 64.78% of the time. Separately, Musk has denied reports that xAI is raising $15 billion in Series E funding.

Infosys Launches AI-First GCC Model to Transform Global Capability Centres

Infosys has introduced its AI-First GCC Model, aimed at transforming Global Capability Centres into AI-driven hubs for innovation and business growth. This new offering enables companies to reposition their GCCs as strategic engines that provide agility and competitive advantage in an AI-focused landscape. By leveraging its expertise in AI-led business process transformation and partnerships, such as those with Lufthansa Systems and Danske Bank, Infosys offers an integrated platform ecosystem and lifecycle management to support enterprises in scaling and modernising their GCCs. The model encompasses flexible operating models and leverages the Infosys Springboard digital learning platform to cultivate future-ready talent.

Amazon Integrates AI-Generated Video Recaps in Prime Video for Seamless Catch-Up Viewing

Amazon’s Prime Video is integrating AI-generated “Video Recaps” for viewers to catch up on shows between seasons, using generative AI to produce high-quality recaps with synchronized narration, dialogue, and music. This feature, launching in beta for select Prime Originals like “Fallout” and “Tom Clancy’s Jack Ryan,” expands on a previous AI-powered feature called “X-Ray Recaps.” While other platforms like YouTube TV and Netflix are also exploring AI, concerns remain in the entertainment industry over the implications for artists’ livelihoods.

Stack Overflow Reveals Enterprise AI Tools Transforming Expert Knowledge into AI Resources

At Microsoft’s Ignite conference, Stack Overflow introduced new products aimed at integrating its platform into the enterprise AI stack, centered on its Stack Internal product. The revamped tool is designed to convert human expertise into AI-readable formats and offers enterprise-grade security features and admin controls. It can supply internal AI agents with knowledge through the Model Context Protocol (MCP). The company has also struck content deals with AI labs to monetize its public data, similar to Reddit’s agreements. Stack Internal includes a metadata layer that assesses the reliability of information and could eventually let agents draft Stack Overflow questions to fill knowledge gaps.

Microsoft Enhances Windows 11 Taskbar with Powerful AI Agents and Copilot Integration

Microsoft is integrating AI agents into the Windows 11 taskbar as part of its strategy to turn the operating system into an “agentic OS,” providing users with AI-enhanced capabilities like automated task management and contextual assistance. The integration, covering Microsoft 365 Copilot and third-party agents, lets agents run tasks in the background and lets users interact with them through a new Ask Copilot feature. This initiative reflects Microsoft’s broader effort to blend local and cloud-based AI tools, offering features such as document summarization in File Explorer and enhanced Excel functionality through Copilot. The agents run in a secure, sandboxed workspace kept separate from the main desktop for security reasons. Alongside these AI features, Microsoft is making hardware and security improvements, such as hardware-accelerated BitLocker and Sysmon integration, in future releases.

Microsoft Unveils Frontier Firms: AI and Human Collaboration in Work Evolution

At Microsoft Ignite, Microsoft unveiled enhancements to its Microsoft 365 Copilot aimed at transforming organizations into “Frontier Firms” by leveraging AI assistants to enhance human-agent collaboration. Key updates include the introduction of Work IQ, an intelligence layer that personalizes the Copilot experience, and new AI features in Office apps such as Word, Excel, and PowerPoint. In collaboration with Harvard’s Digital Data Design Institute, Microsoft is also launching the Frontier Firm AI Initiative, focusing on human-AI teamwork, with companies like Barclays and Nestlé as inaugural participants. Furthermore, Microsoft is expanding its agent management platform, Agent 365, to streamline and secure the deployment and governance of AI agents across enterprise environments.

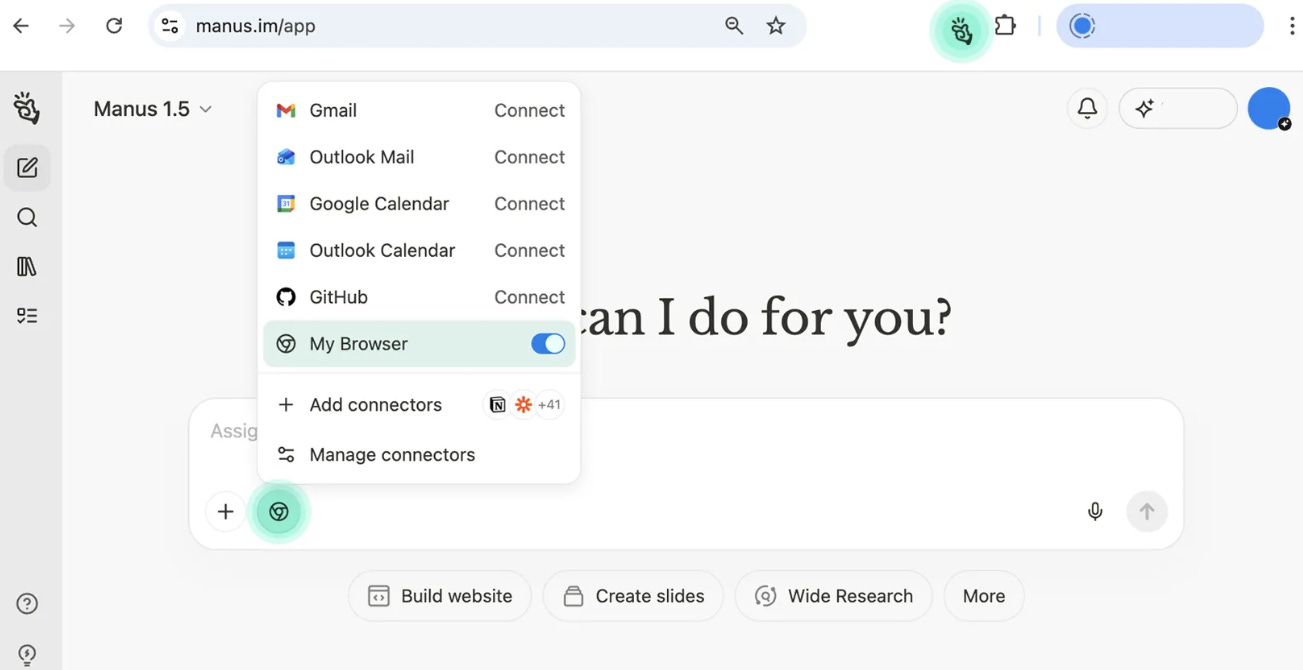

Revolutionary Manus Browser Operator Enhances Local and Cloud-Based AI Automation Experience

Manus has introduced the Manus Browser Operator, a browser extension that allows Manus to function directly within a user’s local browser environment, transforming it into an active agent capable of executing complex tasks. This extension leverages both the stability of cloud automation and the convenience of local access, enabling seamless interaction with authenticated systems and premium research platforms without traditional login obstacles. Currently rolling out in beta for Pro, Plus, and Team users, Manus Browser Operator supports Chrome and Edge, allowing users to automate workflows efficiently and securely.

Meta’s SAM 3 Elevates Image and Video Segmentation with Innovative AI Techniques

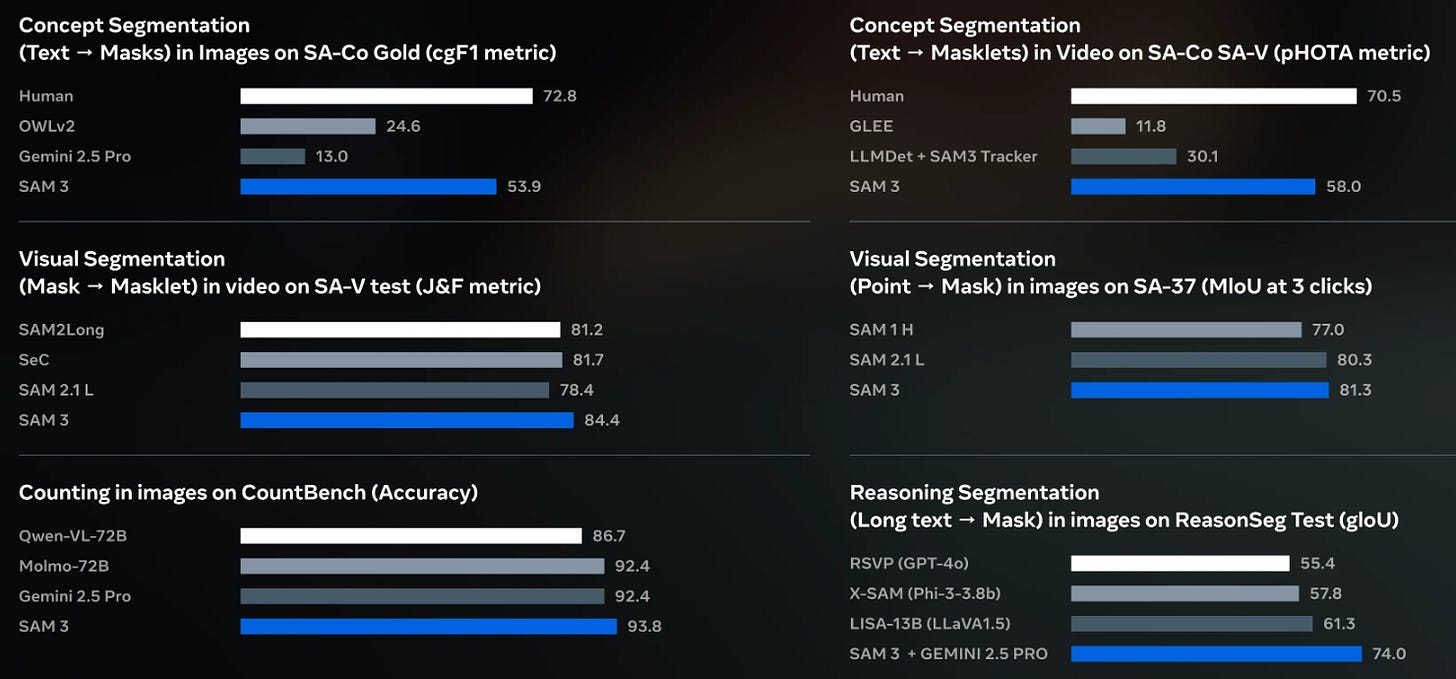

Meta has unveiled Segment Anything Model 3 (SAM 3), an AI model designed to detect, segment, and track objects in images and videos using text and visual prompts. Building on its predecessors, SAM 3 delivers state-of-the-art segmentation performance and already powers real-world features in Instagram’s Edits app and the Meta AI app. It supports interactive refinement of object detection and tracking, aimed at simplifying media workflows for developers and researchers.

🎓AI Academia

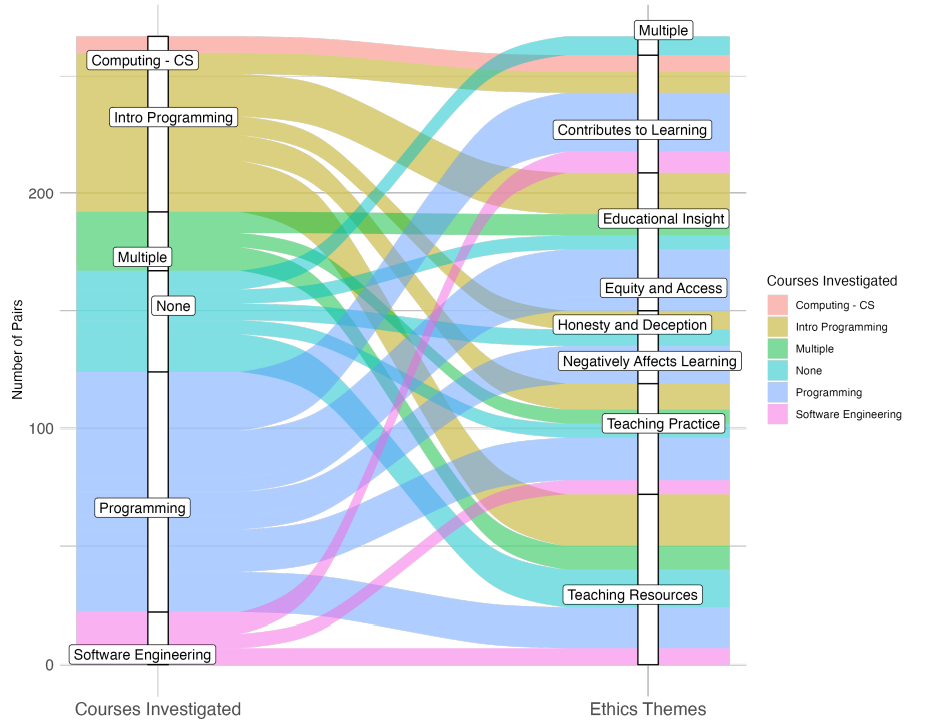

Navigating Ethical and Societal Implications of Generative AI in Higher Computing Education

A collaborative research effort has examined the ethical and societal implications of integrating Generative AI (GenAI) into higher computing education. This study underscores concerns surrounding equity, academic integrity, bias, and data provenance. Through a systematic literature review and an international assessment of university policies, the research developed the Ethical and Societal Impacts-Framework (ESI-Framework) to guide decision-making in GenAI adoption within educational settings. This work aims to aid educators, computing professionals, and policymakers in addressing the challenges posed by GenAI in academia.

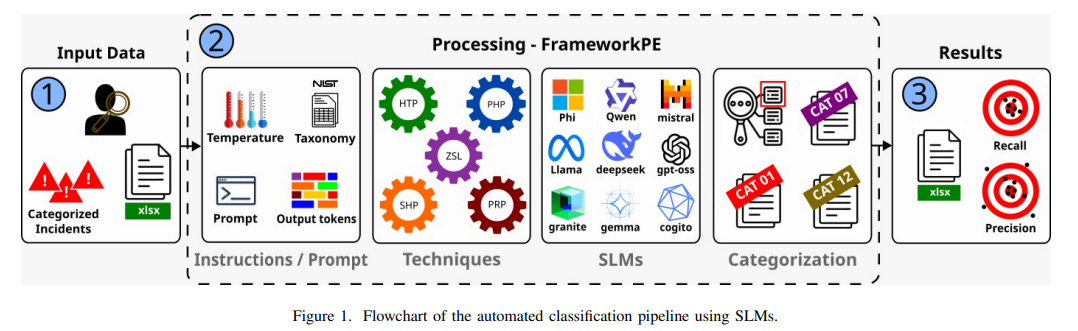

On-Premise Models Offer Privacy and Cost Benefits Over Commercial AI in Cybersecurity

A recent study from AI Horizon Labs and other institutions compared local open-source Small Language Models (SLMs) with commercial Large Language Models (LLMs) for classifying cybersecurity incidents. Utilizing the NIST SP 800-61r3 taxonomy and advanced prompt-engineering techniques, the research found that while proprietary models like GPT-4 exhibit higher accuracy, open-source models provide significant advantages in terms of privacy, cost-effectiveness, and control, making them appealing for Security Operations Centers (SOCs) and Computer Security Incident Response Teams (CSIRTs) that must balance performance and operational sovereignty.

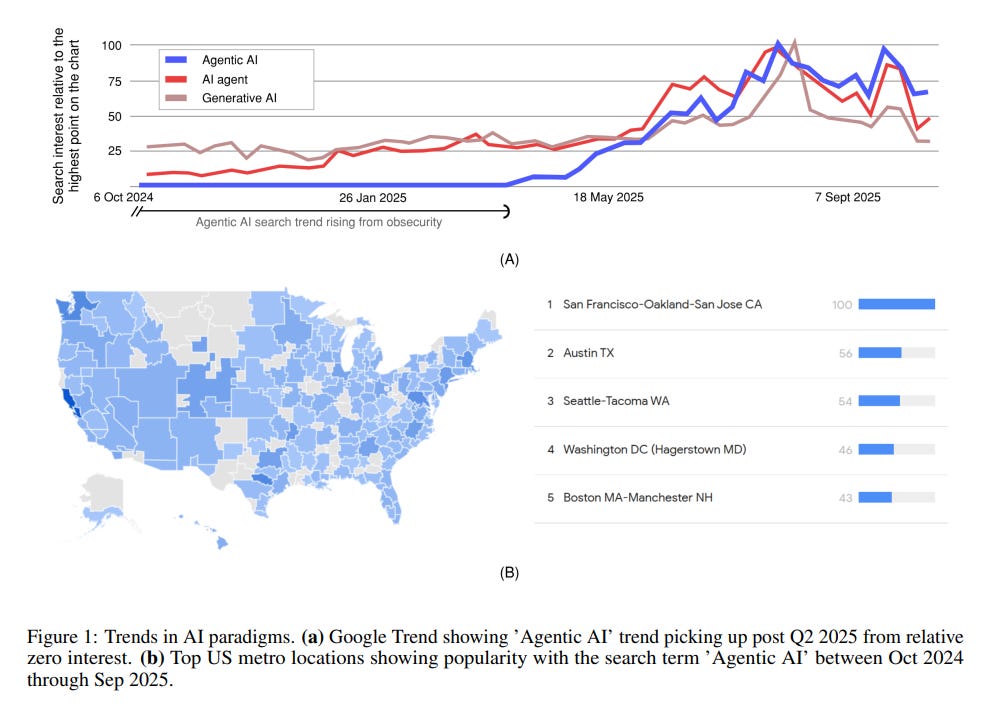

Advancements and Challenges of Agentic AI in Electrical Power Systems Engineering

Agentic AI systems are emerging as a significant advancement in artificial intelligence, surpassing traditional AI models and generative AI by offering enhanced reasoning and autonomy. A recent paper provides a comprehensive review and taxonomy of these systems, highlighting their applications in engineering, particularly within electrical power systems. The paper outlines practical use cases, such as improving power system studies and analyzing dynamic pricing strategies in battery swapping stations. It also addresses safety and reliability concerns, providing guidelines for implementing robust and accountable agentic AI systems, making it a valuable resource for researchers and practitioners in the field.

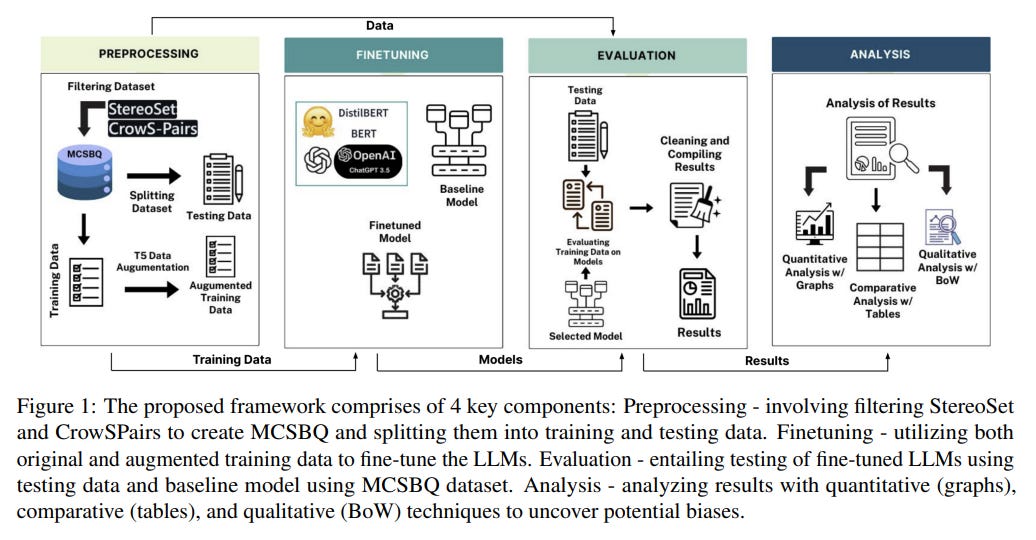

Study Critically Analyzes Implicit and Explicit Biases in Large Language Models

Researchers at the University of California, Davis, have studied how to identify and mitigate implicit and explicit biases in large language models (LLMs) such as BERT and GPT-3.5. Using bias-specific benchmarks like StereoSet and CrowS-Pairs, the study found that while fine-tuned models are effective at recognizing and avoiding racial biases, they continue to struggle with gender biases. The researchers propose an automated Bias-Identification Framework and use techniques such as Bag-of-Words analysis and prompting strategies to improve models’ ability to detect implicit biases, reporting significant performance gains. The work underscores the importance of addressing bias in LLMs to ensure equitable outputs in applications ranging from healthcare to education.

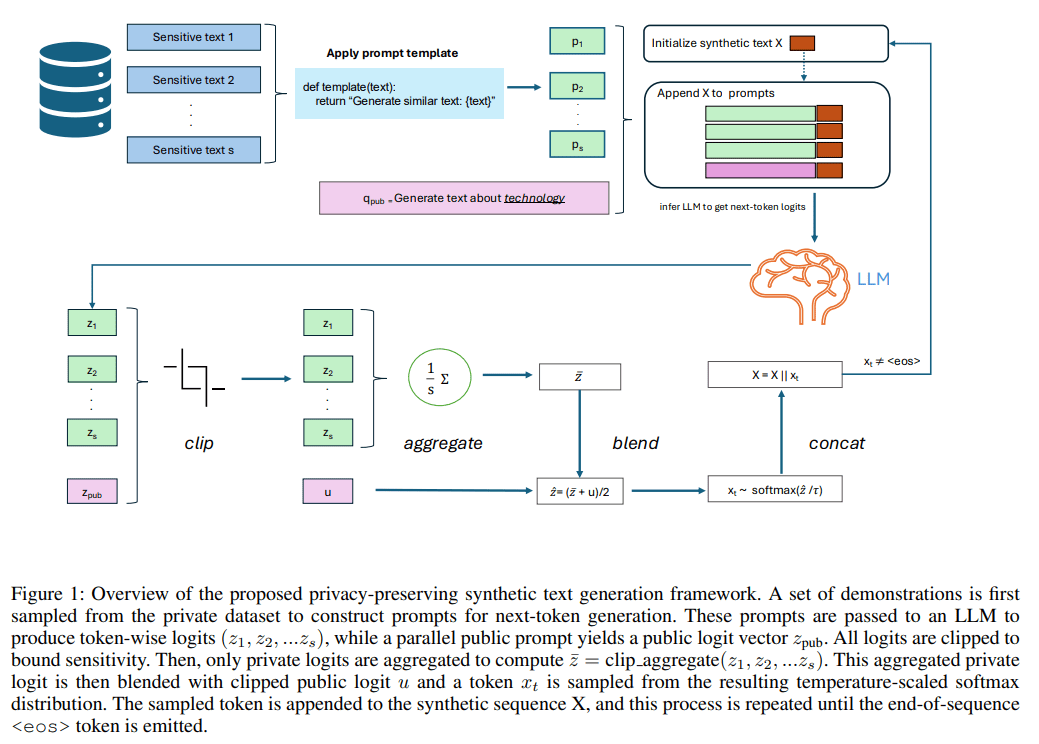

New Framework Enhances Privacy in Large Language Models Through In-Context Learning

A new privacy-preserving framework has been developed for large language models (LLMs) to address privacy concerns arising from potential information leakage in prompt-based interactions. The framework uses Differential Privacy (DP) to protect sensitive data while generating synthetic text, without requiring model fine-tuning. It relies on a private prediction approach: the model runs inference on the private data and the output tokens are aggregated so that privacy is preserved while the generated text stays coherent. A novel blending operation improves utility by combining private and public inference. Empirical evaluations suggest that the method outperforms prior approaches on in-context learning tasks, a significant advance in privacy-respecting text generation. The project’s code is publicly available, supporting transparent AI research.
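The summary above only outlines how private prediction works. As a rough illustration, here is a minimal Python sketch of one common way to aggregate next-token predictions under DP. It assumes that the private examples are split into disjoint partitions, that each partition "votes" for its most likely next token, and that Laplace noise is added to the vote histogram. This is our assumption, not necessarily the paper's mechanism. The function names and the `blend` weight, a stand-in for the paper's blending operation, are hypothetical.

```python
import random
from typing import Optional, Sequence


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_next_token(
    partition_probs: Sequence[Sequence[float]],
    public_probs: Sequence[float],
    epsilon: float,
    blend: float = 0.5,  # hypothetical weight standing in for the paper's "blending"
    rng: Optional[random.Random] = None,
) -> int:
    """Pick one next token from disjoint private partitions plus a public prompt.

    partition_probs[i] is the next-token distribution the model produced with
    the i-th disjoint subset of private examples in its prompt; public_probs is
    the distribution produced with no private data at all.
    """
    if rng is None:
        rng = random.Random()
    vocab = len(public_probs)
    n_partitions = len(partition_probs)

    # Each partition casts one vote for its most likely token.
    votes = [0.0] * vocab
    for probs in partition_probs:
        top = max(range(vocab), key=lambda t: probs[t])
        votes[top] += 1.0

    # Changing one private example moves at most one vote (L1 sensitivity 2),
    # so Laplace noise with scale 2/epsilon makes the histogram epsilon-DP.
    scale = 2.0 / epsilon
    private_scores = [(v + laplace_noise(scale, rng)) / n_partitions for v in votes]

    # Post-processing: mixing in the public distribution spends no extra budget.
    scores = [(1.0 - blend) * p + blend * q for p, q in zip(private_scores, public_probs)]
    return max(range(vocab), key=lambda t: scores[t])
```

Because the noisy histogram is already ε-DP for a single token, blending it with the public distribution counts as post-processing and costs no additional privacy. Each generated token spends its own ε, however, so in this naive sketch the total budget grows with output length unless a tighter privacy accounting method is used.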

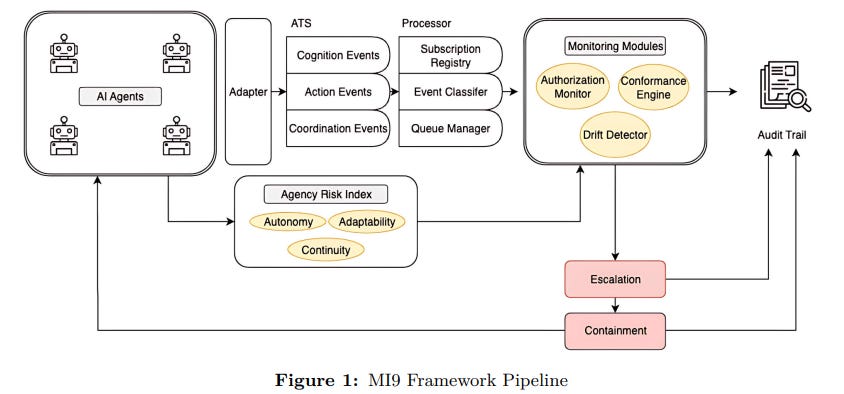

MI9 Framework Enhances Runtime Governance for Safe Agentic AI Deployment at Scale

The MI9 framework is introduced as a solution to the governance challenges posed by agentic AI systems, which exhibit reasoning, planning, and acting capabilities that may lead to unpredictable behaviors during runtime. Unlike traditional AI models, these systems can generate emergent risks not foreseeable before deployment. MI9 provides a suite of six mechanisms for real-time safety enforcement, including goal-aware monitoring and drift detection, functioning in a model-agnostic manner across diverse AI systems. It enhances runtime infrastructure to maintain core alignment goals and allows for comprehensive oversight. The framework has been evaluated successfully in a range of multi-domain scenarios, demonstrating high detection accuracy with low false positive rates, and all resources have been open-sourced for further verification.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.