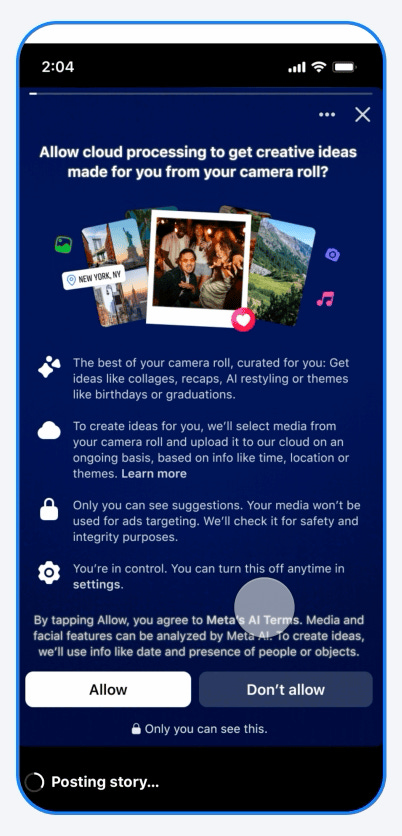

Does turning on this Meta feature mean you lose control of your data?

Meta drew privacy scrutiny after launching a feature that scans users’ entire camera roll to generate collages.

Today’s highlights:

Meta (Facebook) has launched an optional AI feature that scans your phone’s entire camera roll to suggest creative outputs like collages and photo recaps. If users opt in, the app uploads private, unpublished photos and videos to Meta’s cloud infrastructure for continuous AI analysis.

Although Meta says it won’t use your images for ads or AI training unless you explicitly edit or share them, experts have raised major privacy concerns. Once the feature is enabled, Meta can:

- Continuously upload all your photos, including IDs, receipts, and personal moments.
- Analyze facial features, locations, dates, and other metadata.
- Retain the data beyond 30 days, with unclear deletion guarantees.
- Eventually use shared or edited images for AI training and third-party access.

While Meta frames the feature as “opt-in” and helpful, the default-on toggles, vague permissions, and deep access to personal content raise surveillance and consent concerns. Critics say it blurs the line between private photos and Big Tech data mining, and does so without adequate transparency.

You are reading the 138th edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI) and an immersive AI Literacy Specialization. The specialization teaches AI through a scientific framework built around progressive cognitive levels: first knowing and understanding, then using and applying, then analyzing and evaluating, and finally creating through a capstone project, with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our 8-week personalized AIGP training program. For customized enterprise training, write to us at [Link].

⚖️ AI Ethics

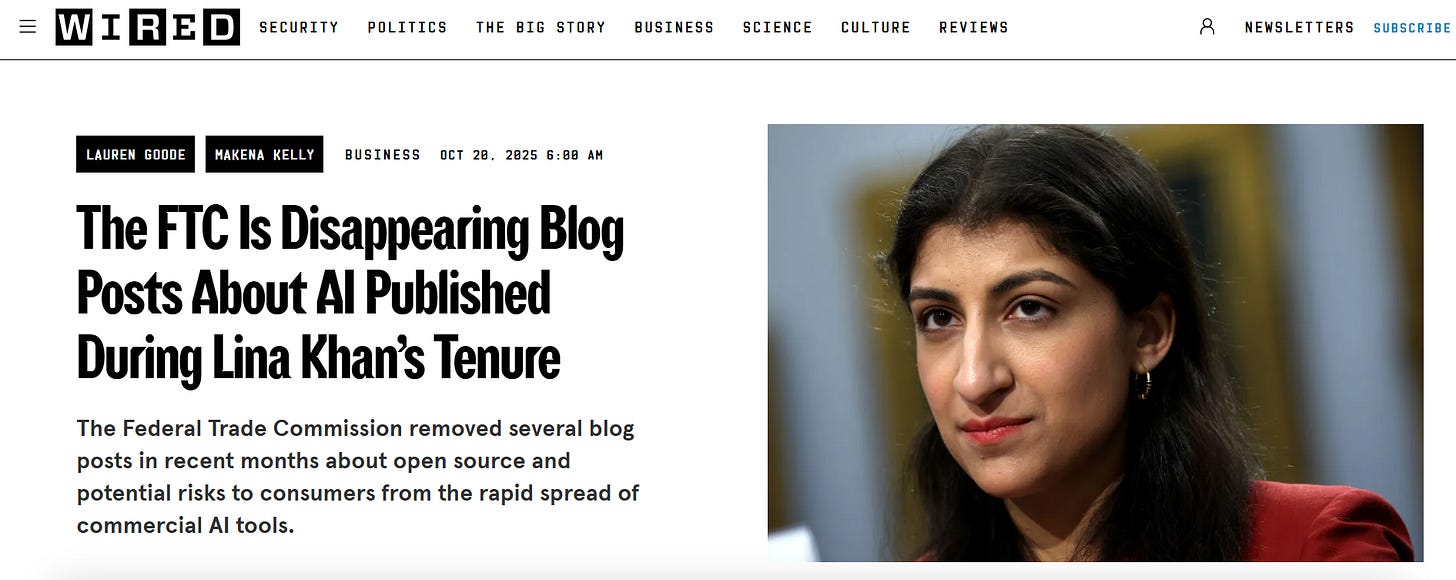

FTC Removes Blog Posts on AI Consumer Risks Amidst Regulatory Changes

The Federal Trade Commission (FTC) has removed three blog posts from Lina Khan’s tenure as chair that addressed open-source AI and potential consumer risks, according to Wired. The posts, published between 2023 and 2025, discussed AI’s potential for harm, including facilitating fraud and discrimination. Observers see the removals as aligning with the Trump administration’s AI Action Plan, which prioritizes rapid growth and competition over safety. The move follows broader removals of government web content under the Trump administration, which some argue may violate federal transparency and record-keeping laws. TechCrunch has asked the FTC for comment.

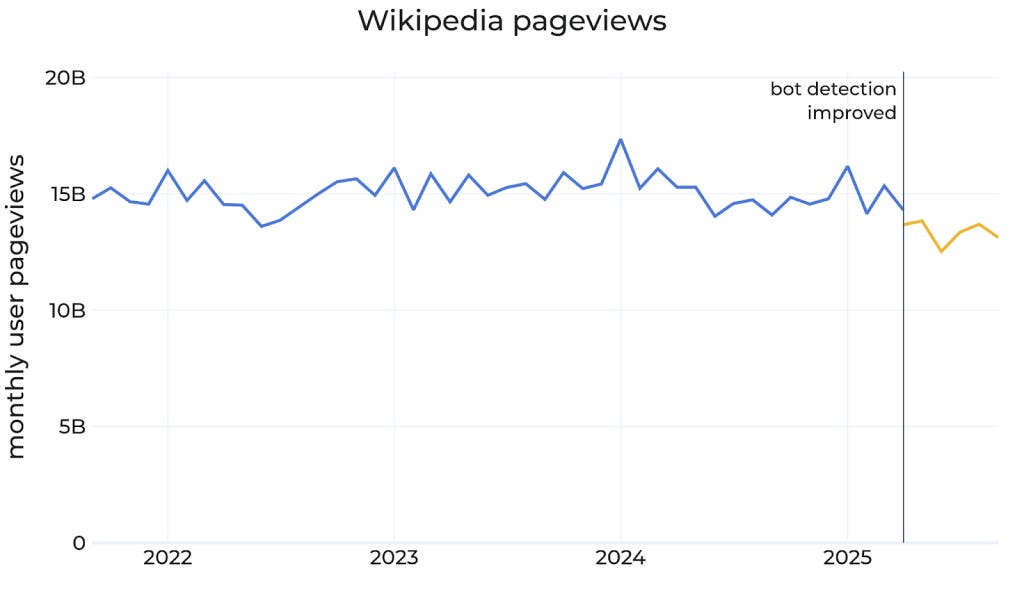

Wikipedia Faces Declining Page Views Amid Generative AI and Social Media Shift

Wikipedia, often hailed as a bastion of reliable online content, is seeing an 8% year-over-year decline in human page views as information-seeking habits change. The drop came to light after Wikipedia updated its bot-detection methods, and it points to the growing pull of generative AI and social media platforms, which offer direct answers and draw younger users away from traditional web sources. Still, the Wikimedia Foundation stresses that Wikipedia remains important, since its information continues to circulate through AI-generated content. The foundation is responding by developing a framework for content attribution and by working to attract new readers and volunteers to sustain and enrich the encyclopedia.

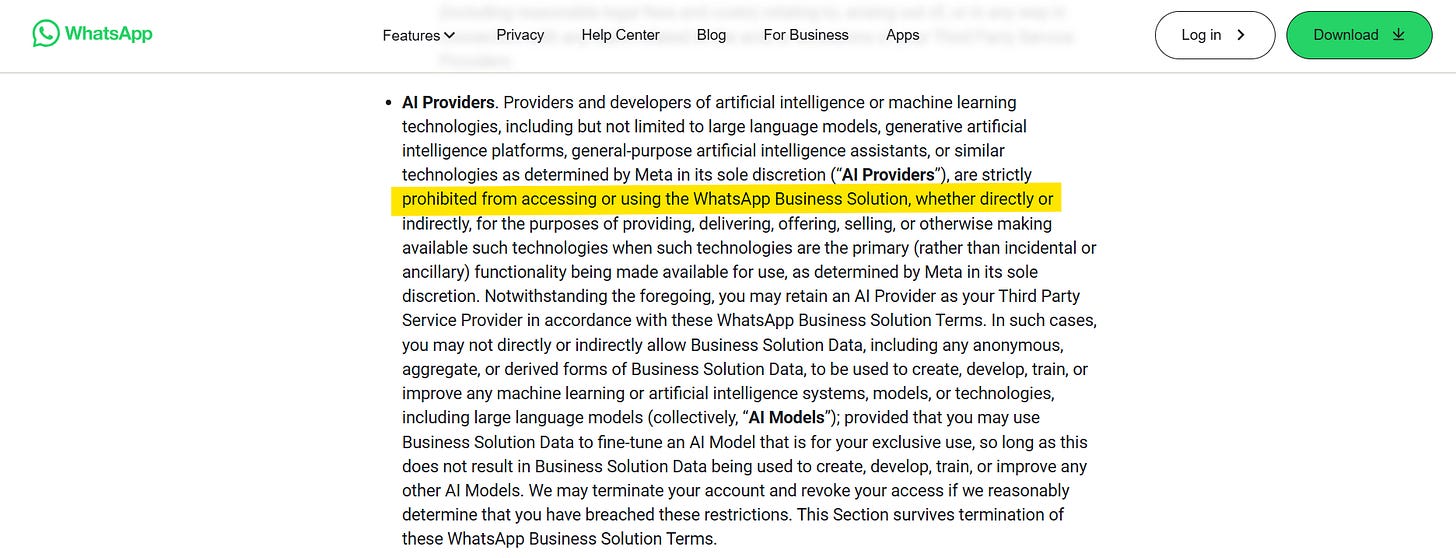

WhatsApp to Ban General-Purpose Chatbots, Impacting AI Providers on Platform

Meta-owned WhatsApp has updated its business API policy, effectively banning general-purpose chatbots from its platform starting January 15, 2026. This decision impacts AI-driven assistants by companies such as OpenAI, Perplexity, Luzia, and Poke. Meta’s rationale is that the WhatsApp Business API should focus on business-to-consumer interactions and not serve as a distribution platform for AI solutions. This shift follows concerns about message volumes and the absence of provisions for charging chatbots under the current API setup, which primarily generates revenue through business messaging. Meta’s decision highlights its strategic focus on business messaging as a revenue pillar amid growing user engagement on its platforms.

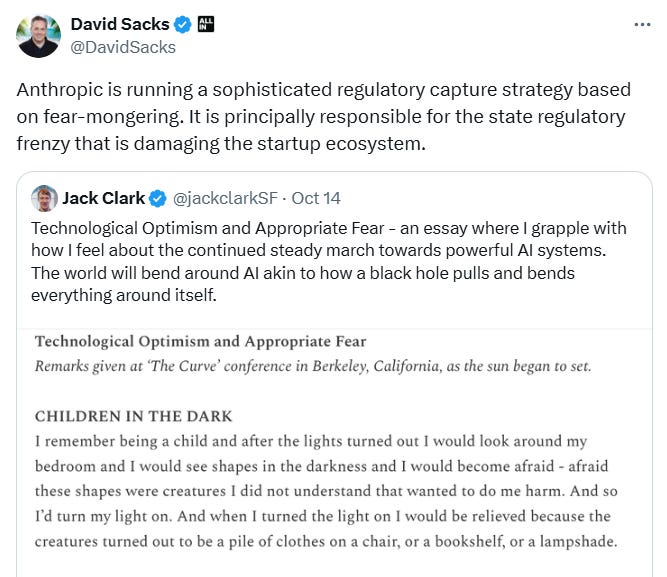

Silicon Valley Leaders Criticize AI Safety Groups Amid Growing Regulatory Tensions

Silicon Valley leaders have stirred controversy by accusing AI safety advocates of serving their own interests or those of their financial backers rather than acting out of genuine safety concerns. The dispute reflects an ongoing tension between promoting responsible AI and pursuing rapid industry growth. Recent remarks and actions by figures such as White House AI and Crypto Czar David Sacks and OpenAI’s Jason Kwon have left some AI safety advocates feeling intimidated and fearing retaliation. Sacks accused Anthropic of fear-mongering to gain regulatory advantage, while OpenAI subpoenaed nonprofits that have challenged its practices, raising questions about transparency and influence, particularly in the wake of Elon Musk’s lawsuit against the company. These episodes highlight the intensifying debate over whether regulation hinders innovation or ensures accountability in AI development.

AWS Outage Disrupts Global Online Platforms, Including AI and Trading Apps

A major outage at Amazon Web Services (AWS) on Monday disrupted many popular apps and online platforms worldwide. The problems originated in the company’s US-EAST-1 region in Northern Virginia, where increased error rates and latencies affected services such as Perplexity AI, Snapchat, and Fortnite, as well as financial apps like Robinhood and Venmo. Monitoring sites logged thousands of user complaints about connection failures and login errors, and social media filled with reports of disruptions. The outage hit core AWS offerings, including Amazon EC2 and DynamoDB, underscoring how heavily online services rely on the company’s infrastructure.

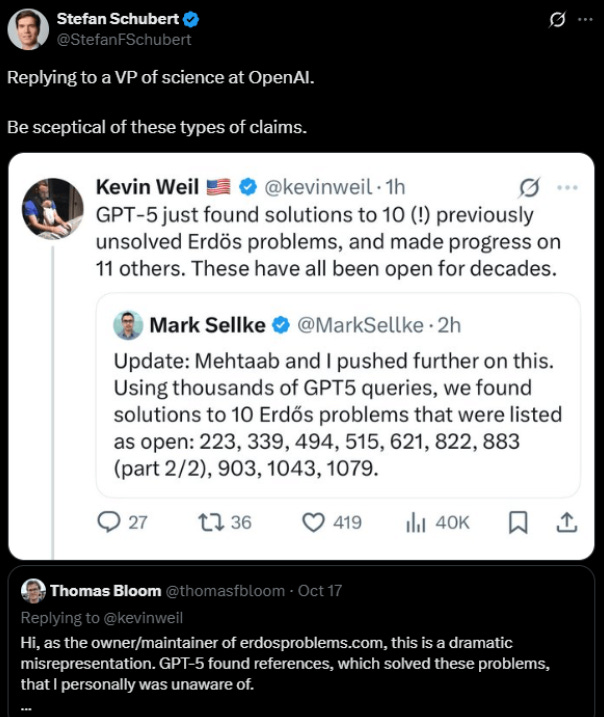

GPT-5’s Mathematical Claims Questioned After Clarification on Erdős Problem Solutions

Meta’s chief AI scientist Yann LeCun and Google DeepMind CEO Demis Hassabis expressed skepticism about claims by OpenAI researchers that GPT-5 had achieved breakthroughs on Erdős problems. An OpenAI vice president had posted that GPT-5 solved several longstanding mathematical problems, a claim later revealed to be a misrepresentation. According to mathematician Thomas Bloom, GPT-5 had located existing literature that already addressed these problems rather than solving open ones. OpenAI’s Sebastien Bubeck acknowledged this but maintained that finding solutions in the literature is still notable.

🚀 AI Breakthroughs

Google’s Gemini 3.0 AI Model Set for Launch as Development Accelerates

At the Dreamforce 2025 conference, Google and Alphabet CEO Sundar Pichai confirmed that Gemini 3.0 will be released later this year, pointing to accelerating progress in AI development. The new model is expected to build on its predecessors with more advanced intelligent agents and deeper integration into Google’s products. The announcement follows the recent launch of the Gemini 2.5 Computer Use model, which lets AI interact with user interfaces, and signals Google’s push to keep pace with competitors such as OpenAI and Anthropic, the latter of which recently released Claude Sonnet 4.5 and Claude Haiku 4.5.

Google AI Studio Updates Enhance Developer Control and Streamline Creative Workflows

Google AI Studio has introduced updates aimed at improving the developer experience. The platform now offers a unified workspace that brings together Google’s latest AI models, such as Gemini and GenMedia, simplifying the path from prompts to multimodal outputs including text, images, videos, and voiceovers. It also features a redesigned homepage, a transparent rate-limit tracker, and integration of real-world geographic data via Google Maps. Developers gain more control through saved system instructions and improved API key management. These enhancements lay the groundwork for future updates that promise quicker transitions from ideas to AI-powered applications.

Adobe Expands Business Offerings with Custom Generative AI Models to Boost Enterprises

Adobe has introduced Adobe AI Foundry, a service that allows businesses to develop custom generative AI models using their own branding and intellectual property. These models, part of Adobe’s Firefly family launched in 2023, can generate text, images, videos, and 3D scenes, with customization based on client needs. The Foundry service caters to enterprises seeking personalized AI solutions for creative campaigns, with pricing based on usage. Adobe emphasizes that these AI tools, designed to enhance rather than replace human creativity, aim to elevate brands’ ability to personalize and adapt advertising content across various formats and languages.

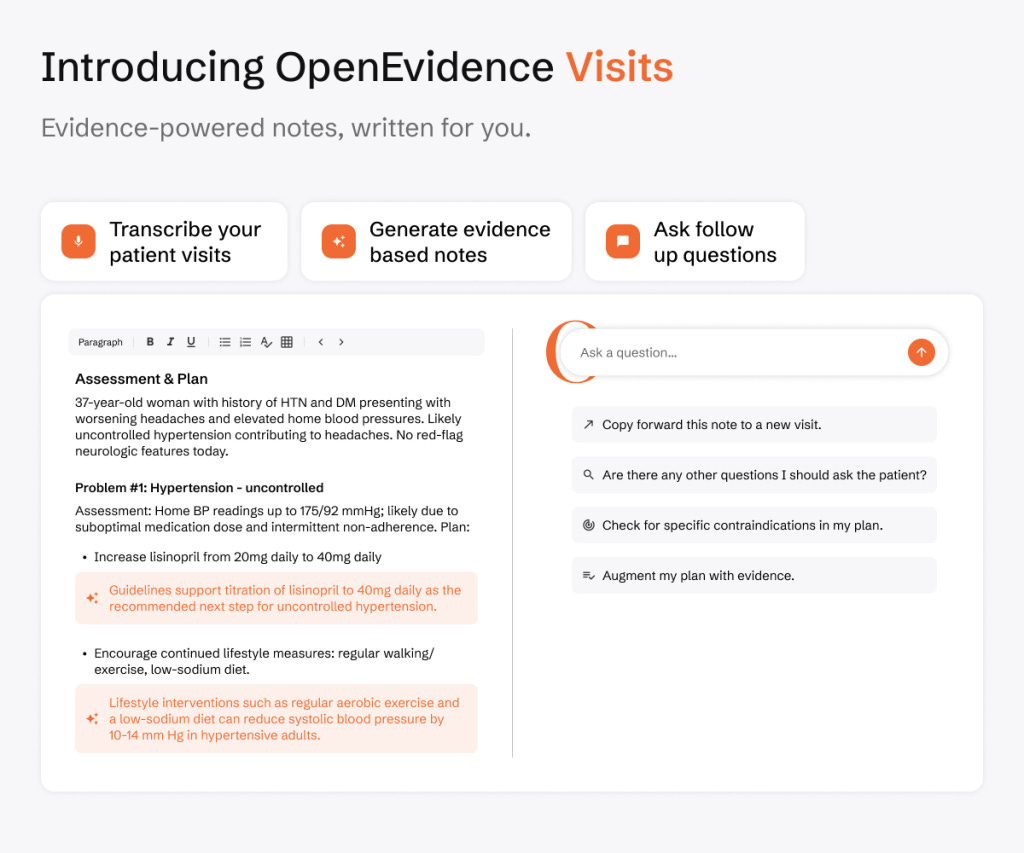

OpenEvidence Secures $200 Million Boost, Valuation Soars to $6 Billion

OpenEvidence, often likened to a medical version of ChatGPT, is reportedly set to announce a $200 million funding round at a $6 billion valuation, according to The New York Times. This follows a $210 million round just three months earlier, signaling strong investor interest in AI tailored to specific industries. The platform, trained on medical journals, answers patient-treatment queries from verified medical professionals and is free to use, supported by advertising. Since its founding in 2022, OpenEvidence has grown rapidly, with clinical consultations nearly doubling since July to 15 million per month. The latest round was led by Google Ventures, with participation from notable investors such as Sequoia Capital and Kleiner Perkins.

Anthropic Launches Claude Code Web App, Targeting Developers

Anthropic has launched a web app for its AI coding assistant Claude Code, enabling developers to create and manage AI coding agents directly from their browser. Previously available only as a command-line interface (CLI) tool, Claude Code on the web is now accessible to subscribers of Anthropic’s Pro and Max plans. The move aims to broaden Claude Code’s reach in a competitive market dominated by tools from Microsoft, Google, and others. Since its wider release in May, Claude Code’s user base has grown significantly, and it now contributes over $500 million in annualized revenue for Anthropic. Although the company continues to expand web and mobile access, the CLI remains the focal point for advanced usage. While the efficiency of AI coding tools is still debated, Anthropic CEO Dario Amodei projects that AI will soon handle the majority of coding tasks in software engineering, though that shift may unfold more gradually across the wider tech industry.

Meta AI Mobile App’s User Base Surges Following Vibes Feed Introduction and Sora Launch

Meta AI’s mobile app for iOS and Android has seen substantial growth in daily active users, which reached 2.7 million as of October 17, up from 775,000 a month earlier, according to a new analysis by Similarweb. The surge coincides with the launch of Meta’s Vibes feed of AI-generated videos, which may have driven increased engagement. The app’s daily downloads have also climbed to 300,000, up from fewer than 200,000, suggesting growing interest. The app may also have benefited from comparisons with OpenAI’s invite-only Sora app, with users unable to get access potentially turning to Meta’s platform instead.

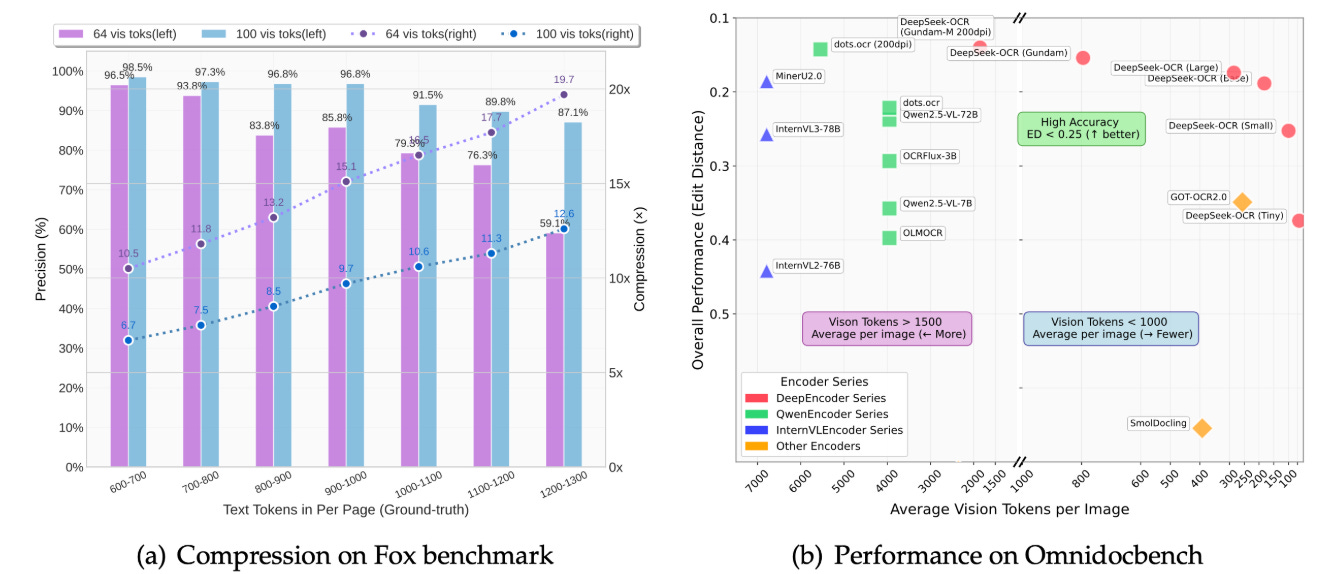

DeepSeek-OCR Demonstrates High Precision in Long-Context Text Compression and Decoding

DeepSeek-OCR, developed by DeepSeek-AI, offers a promising approach to compressing long contexts via optical 2D mapping, that is, rendering text as images and encoding them as vision tokens. It pairs an encoder called DeepEncoder with a decoder called DeepSeek3B-MoE-A570M. The model reaches 97% OCR precision at compression ratios below 10× and still retains about 60% accuracy at 20× compression. This points to potential uses in compressing historical text and in memory mechanisms for large language models. In practical benchmarks, DeepSeek-OCR outperformed existing OCR systems such as GOT-OCR2.0 and MinerU2.0 while using significantly fewer vision tokens. It can also generate training data at scale, processing more than 200,000 pages per day on a single A100-40G GPU, and its code and model weights are publicly available on GitHub.
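What does “compression ratio” mean here? In the DeepSeek-OCR work it compares the number of text tokens a page contains with the number of vision tokens used to encode that page. The worked equation below uses illustrative page sizes (1,000 text tokens; 100 or 50 vision tokens) that are assumptions for the example, not figures from the paper:

$$
\text{compression ratio} = \frac{N_{\text{text}}}{N_{\text{vision}}}, \qquad \text{e.g.}\ \frac{1000}{100} = 10\times, \qquad \frac{1000}{50} = 20\times
$$

Staying below 10× therefore means spending at least one vision token for every ten text tokens; at 20×, each vision token stands in for twenty text tokens, which is roughly where the reported accuracy falls to about 60%.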

Alphabet’s Verily Launches AI-Powered Health App Amid US Tech Healthcare Push

Alphabet’s life sciences division, Verily, has introduced Verily Me, an AI-powered health app, amid initiatives by the Trump administration to encourage technological advancements in healthcare tools across the U.S. Tech giants including Alphabet, Apple, and Amazon have committed to investing in U.S. health systems, aiming to enhance the accessibility and organization of medical data.

HDFC Bank Optimistic: AI Implementation Will Not Lead to Job Layoffs

HDFC Bank, India’s largest private sector lender, does not anticipate any layoffs due to its use of artificial intelligence, according to its CEO. While the bank is conducting “lighthouse experiments” with generative AI and other technologies, it views these advancements as an opportunity to shift employees from backend to frontend or tech roles rather than reduce staff. The bank added 5,000 employees in the past six months, bringing its workforce to 220,000 as of September. The CEO emphasized that AI will not take over decision-making processes, as the bank aims to enhance efficiency and customer experience while continuing to rely on human employees.

🎓 AI Academia

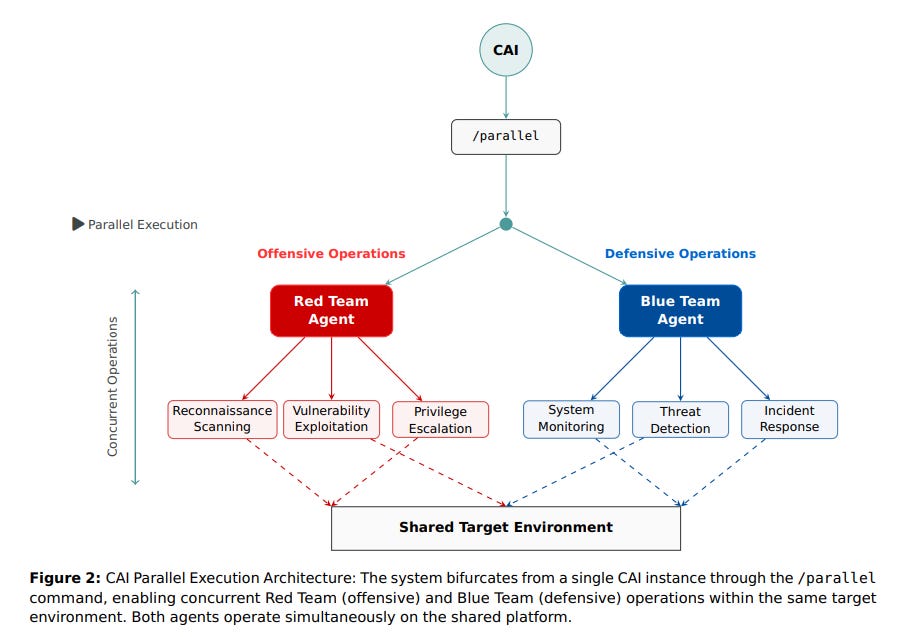

Study Evaluates AI Effectiveness in Cybersecurity Attack and Defense Scenarios Using CTFs

A recent study evaluated whether AI systems perform better in offensive or defensive cybersecurity roles. Using the CAI framework, researchers deployed AI agents in 23 Attack/Defense Capture-the-Flag (CTF) competitions to compare their capabilities. Defensive agents appeared to hold an advantage, with a 54.3% success rate at unconstrained patching versus 28.3% for offensive initial access, but this advantage disappeared once operational constraints were imposed. The study challenges prevailing assumptions that AI is superior in offensive cyber roles, and it urges defenders to adopt open-source AI frameworks to counter increasingly automated cyber threats.

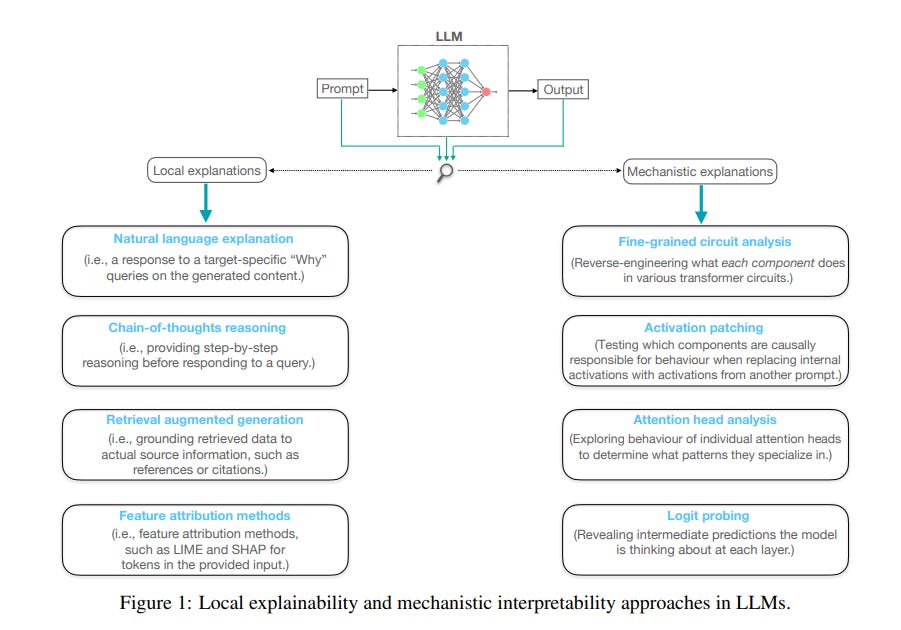

Understanding Large Language Models: Challenges in Achieving Trustworthy AI Explanations

A recent study examines the challenges and opportunities in improving the explainability of large language models (LLMs), which, despite their impressive natural language processing capabilities, often produce outputs that humans cannot easily interpret. These models, typically built on the Transformer architecture, can also generate plausible but incorrect outputs known as hallucinations, underscoring the need for better understanding and interpretation. The research examines local explainability and mechanistic interpretability in LLMs, with a focus on healthcare and autonomous driving applications, and discusses implications for trust and unresolved issues in this evolving field. The study aims to pave the way for trustworthy, human-aligned explanations from these models.

Examining Generative AI’s Role in Revolutionizing Usability Inspections with Human Experts

A recent study by researchers in Brazil investigated whether generative AI models can augment software usability inspections, which are traditionally performed by human experts. The study compared two AI models, GPT-4o and Gemini 2.5 Flash, against human inspectors using precision, recall, and F1-score. Human inspectors achieved higher precision, while the AI models detected unique defects but also produced more false positives. The researchers conclude that AI works best as an augmentation tool that complements rather than replaces human expertise, since the combined approach yielded the most comprehensive results, improving both efficiency and defect coverage.
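For readers less familiar with the three metrics, here is a minimal Python sketch of how they are conventionally computed from defect counts. The function name and all counts below are hypothetical and chosen only for illustration; they are not data from the study.

```python
def precision_recall_f1(true_pos: int, false_pos: int, false_neg: int) -> tuple[float, float, float]:
    """Return (precision, recall, F1) for one inspector's list of reported defects."""
    # Precision: share of reported defects that are real (false positives lower it).
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    # Recall: share of all real defects that the inspector actually reported.
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    # F1: harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical counts for illustration only; NOT figures from the study.
human = precision_recall_f1(true_pos=8, false_pos=1, false_neg=7)
ai = precision_recall_f1(true_pos=10, false_pos=6, false_neg=5)
print(f"human: P={human[0]:.2f} R={human[1]:.2f} F1={human[2]:.2f}")
print(f"ai:    P={ai[0]:.2f} R={ai[1]:.2f} F1={ai[2]:.2f}")
```

In this made-up example the human inspector has higher precision, while the AI inspector finds more real defects (higher recall) at the cost of more false positives, the same trade-off the study describes.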

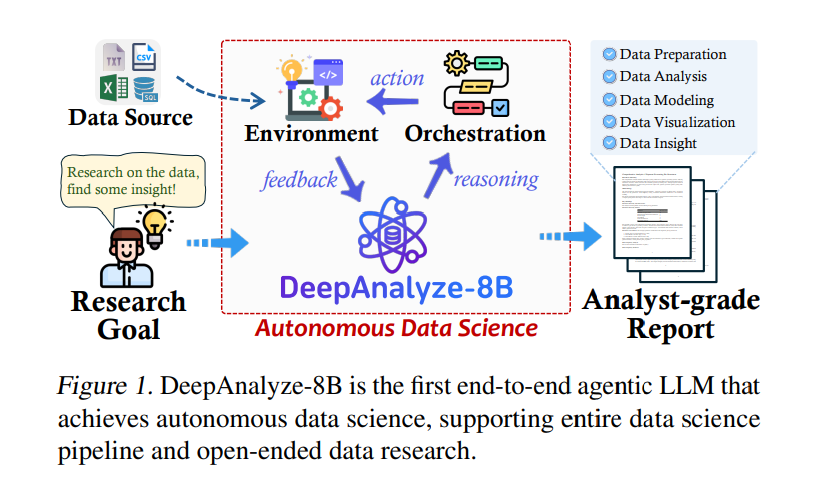

DeepAnalyze-8B LLM Redefines Autonomous Data Science, Automates Entire Research Pipeline

DeepAnalyze-8B, an agentic large language model, marks a significant advance in autonomous data science by handling the entire data science pipeline, from data acquisition to producing analyst-grade research reports. Unlike earlier workflow-based agents, it is trained with a curriculum-based paradigm that mirrors how humans learn, allowing it to excel at complex data science tasks. With only 8 billion parameters, DeepAnalyze outperforms agents built on larger proprietary models, and as an open-source release it could pave the way for more sophisticated autonomous data processing.

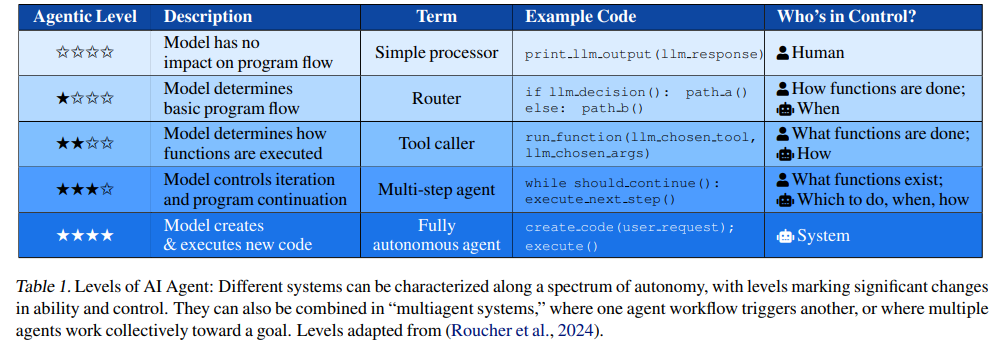

AI Technology Experts Warn Against Development of Fully Autonomous AI Agents

A new study by researchers at Hugging Face argues against developing fully autonomous AI agents, contending that risks to users grow as these systems are given more control. Drawing on prior literature and current market trends, the authors highlight serious ethical dilemmas and safety hazards at high levels of autonomy, including threats to privacy, security, and even human life, made worse by the potential for “hijacking” by malicious actors. They argue that systems retaining partial human oversight offer a better risk-benefit balance than fully autonomous systems, which could take actions and write code beyond human-imposed constraints.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.