“Do You Want Me To Make It Snappier?”: Newspaper Exposes Its Own AI Use

Pakistan’s top newspaper accidentally published a raw ChatGPT prompt in a business article, igniting #DawnGPT backlash.

Today’s highlights:

Pakistan’s top English newspaper faced intense backlash after it accidentally published a ChatGPT prompt in a business article. The last paragraph of the story on auto sales contained an unmistakable AI-generated suggestion about making a “snappier” front-page layout. This unedited prompt revealed that the piece had been AI-assisted, sparking #DawnGPT ridicule on social media. Dawn quickly issued an apology, clarified it violated their internal AI policy, and corrected the online version, but critics highlighted the hypocrisy-pointing out the publication’s frequent stance on media integrity.

This was not an isolated case. In 2025, author Lena McDonald mistakenly left an AI instruction note in her novel, exposing her use of generative tools to mimic another writer’s style. In 2023, Vanderbilt University caused outrage by sending an AI-generated condolence email after a school shooting, marked by a footer noting it was written with ChatGPT. Similarly, a 2024 investigation uncovered at least 115 academic papers with phrases like “As of my last knowledge update” and other AI giveaways, revealing unedited AI-generated content. Online product reviews, LinkedIn posts, and websites have also gone viral for mistakenly displaying phrases like “As an AI language model…” or “Regenerate response,” making it clear that AI-written content had slipped through unchecked.

These incidents underline the growing challenge of using AI responsibly in professional writing and the need for human oversight. They also illustrate how AI’s signature language can inadvertently “leak,” undermining trust and exposing lapses in editorial diligence across industries.

You are reading the 145th edition of the The Responsible AI Digest by SoRAI (School of Responsible AI) . Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI, AAIA) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework structured around progressive cognitive levels: starting with knowing and understanding, then using and applying, followed by analyzing and evaluating, and finally creating through a capstone project- with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our AIGP 8-week personalized training program. For customized enterprise training, write to us at [Link].

🚀 AI Ethics

97% Can’t Tell AI-Generated Music Apart, Survey Reveals Ethical Concerns

A Deezer-Ipsos survey revealed that 97% of listeners struggle to differentiate between AI-generated and human-composed songs, raising ethical and copyright concerns in the music industry. The poll, which included 9,000 participants from eight countries, also found a significant demand for clear labeling of AI-generated music, with 73% of respondents supporting disclosure and filtering options. Deezer, in response to the increasing volume of AI submissions, has introduced tagging and excluded AI tracks from its playlists to ensure transparency. The findings highlight the complex challenges in balancing creativity and AI’s growing role in music creation and distribution.

Apple Updates App Store Guidelines to Regulate Third-Party AI Data Sharing

Apple has revised its App Review Guidelines to require apps to disclose and obtain user consent before sharing personal data with third-party AI, aligning with privacy regulations like GDPR. This update, which comes ahead of Apple’s planned AI-enhanced Siri in 2026, highlights the company’s intent to safeguard user data amid the expanding AI landscape. The change may affect apps using AI for functionalities such as personalization, although enforcement specifics remain uncertain. Additionally, the revised guidelines also introduce a new Mini Apps Program and include regulatory measures for apps in sectors like crypto exchanges.

Meta’s Chief AI Scientist Yann LeCun Might Plan Startup Amidst Company Overhaul

Meta is reportedly on the verge of losing one of its leading AI figures, Yann LeCun, who is planning to leave the tech giant to establish his own startup focused on AI world models. This departure comes as Meta undergoes significant changes in its AI strategies, recruiting talents and restructuring its units amid fierce competition with OpenAI, Google, and others. LeCun, currently a senior researcher at Meta and a Turing Award laureate, has been critical of over-hyped AI claims and intends to further his work independently on systems that simulate and predict environmental outcomes.

German Court Rules ChatGPT Violates Copyright Laws by Using Licensed Music

A German court has ruled that OpenAI’s ChatGPT violated copyright laws by using licensed musical works for training its language models without obtaining permission, according to reports from multiple news outlets, including The Guardian. This decision followed a lawsuit filed by GEMA, the German collective managing music rights, leading to OpenAI being ordered to pay undisclosed damages. The ruling is considered a significant precedent in Europe for protecting authors’ rights against AI tools, and OpenAI, facing similar lawsuits from other creative and media groups, is reportedly considering its legal options.

New Research Reveals Method to Enhance AI Model’s Visual Alignment with Humans

Recent research published in Nature highlights a method to align AI vision models with human visual perception by reorganizing the models’ representations. This approach improves the models’ robustness and generalization capabilities, addressing discrepancies between AI and human understanding, such as the failure to recognize commonalities between objects like cars and airplanes. By using tasks like “odd-one-out,” the study identifies how AI models often focus on superficial features, causing them to differ from humans in categorization, aiming ultimately to create more intuitive and dependable AI systems.

Meta Launches Omnilingual ASR to Transcribe 1,600 Languages and Bridge Digital Divide

Meta’s Fundamental AI Research team has unveiled Omnilingual ASR, a suite of models capable of transcribing speech in over 1,600 languages, including 500 low-resource languages previously unserved by AI systems. This initiative seeks to bridge the digital divide by making high-quality speech-to-text technology accessible to underrepresented linguistic communities. With the open sourcing of Omnilingual wav2vec 2.0, a self-supervised model with 7 billion parameters, and the release of the Omnilingual ASR Corpus, Meta aims to break down language barriers and enhance global communication.

OpenAI Challenges New York Times Over Privacy Demand for ChatGPT Conversations

OpenAI is contesting a legal demand by the New York Times to provide 20 million private ChatGPT conversations, citing concerns over user privacy. The Times claims these conversations may reveal attempts to bypass its paywall, but OpenAI argues that this request disregards privacy commitments and involves information unrelated to the lawsuit. OpenAI emphasizes its ongoing efforts to enhance data security and privacy, resisting any move that could compromise users’ confidential information.

New York Mandates Safety and Transparency Features for AI Companions to Protect Users

New York has enacted the first-in-the-nation AI Companion Safety Law, mandating that companies running AI companion technologies implement safety features to protect users, particularly minors and vulnerable individuals, from digital harm. Effective November 5, 2025, the law requires AI systems to interrupt extended user sessions, respond to signs of self-harm, and notify users they are interacting with AI, not humans. Non-compliance could lead to enforcement actions by the state. This legislation comes amid growing concerns over AI’s potential to encourage self-harm or unhealthy dependencies, as New York strives to ensure responsible AI innovation and public safety.

⚖️ AI Breakthroughs

OpenAI Gradually Rolls Out GPT-5.1 with Enhancements in Reasoning and Personalization

OpenAI is rolling out GPT-5.1, an enhancement to its GPT-5 model, which introduces new reasoning capabilities, faster performance on simple tasks, and expanded personalization options in ChatGPT. The update, first available to paid users, includes two models: GPT-5.1 Instant and GPT-5.1 Thinking, both adjusting their “thinking time” based on task complexity. The Instant model is designed to be more conversational, while the Thinking model offers clearer explanations for complex prompts. Improved tone-setting tools allow real-time style adjustments, with preset styles applying across interactions. GPT-5.1’s adaptive reasoning enables it to adjust reasoning trace length according to task difficulty, and it will be included in the API later this week. GPT-5 remains available under legacy models for a transition period.

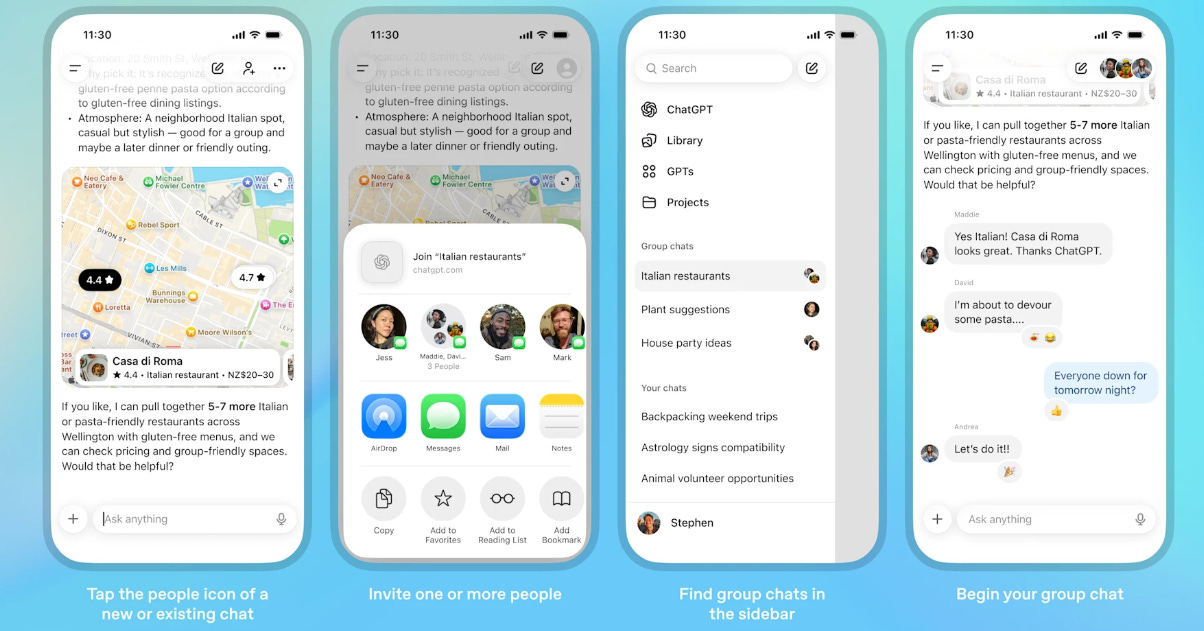

OpenAI Pilots Group Chat Feature in ChatGPT, Enhancing Collaborative Conversations

OpenAI has begun piloting a group chat feature in ChatGPT across select regions, allowing users to collaborate in real-time with friends, family, coworkers, and the AI itself. This feature supports tasks like planning trips or brainstorming projects and is available on mobile and web for users in Japan, New Zealand, South Korea, and Taiwan, covering Free, Go, Plus, and Pro plans. Group chats are distinct from private conversations, ensuring personal memory isn’t shared, and provide customizable interactions while maintaining privacy and control. OpenAI aims to expand this feature based on initial user feedback and enhance ChatGPT’s collaborative capabilities.

PhonePe Partners with OpenAI to Integrate ChatGPT Across Platforms in India

PhonePe has announced a strategic collaboration with OpenAI to integrate ChatGPT into its consumer and business platforms across India, allowing users to access ChatGPT’s capabilities directly within the PhonePe app, including the Indus Appstore. This partnership aims to facilitate user access to generative AI for purposes such as travel planning and shopping, highlighting ChatGPT’s potential as generative AI adoption rises in the country. OpenAI also revealed that Indian users will receive one year of free access to ChatGPT Go from November 4, following their recent DevDay Exchange event in Bengaluru.

OpenAI Eyes Consumer Health Sector, Challenging Big Tech’s Past Failures

OpenAI is making strategic moves into the healthcare sector, potentially reshaping the landscape dominated by tech giants like Google, Amazon, and Microsoft. Sources indicate that OpenAI is considering developing consumer health tools such as personal health assistants or health data aggregators, despite the challenges and failures faced by other tech companies in this realm. Recent key hires, including industry veterans, underscore its ambitious healthcare strategy. OpenAI’s model, such as ChatGPT, already attracts significant user engagement with health-related queries, positioning it as a major contender in the healthtech space. While the company remains discreet about its exact plans, its partnerships and interest in consolidating personal health records could disrupt the traditional approach to healthcare data management, offering a unified system managed by patients themselves.

Google Pixel and Golden Goose Use AI to Personalize Fashion Worldwide

Google Pixel has partnered with Golden Goose, a luxury Italian fashion brand, to integrate AI into sneaker customization across more than 40 global stores. Utilizing Google Pixel devices and the Gemini app, customers can digitally create personalized artworks, such as incorporating their names or unique patterns, which Golden Goose artisans will then apply to their sneakers. This collaboration aims to merge digital innovation with traditional craftsmanship, highlighting AI’s role in enhancing creativity and self-expression in the fashion industry.

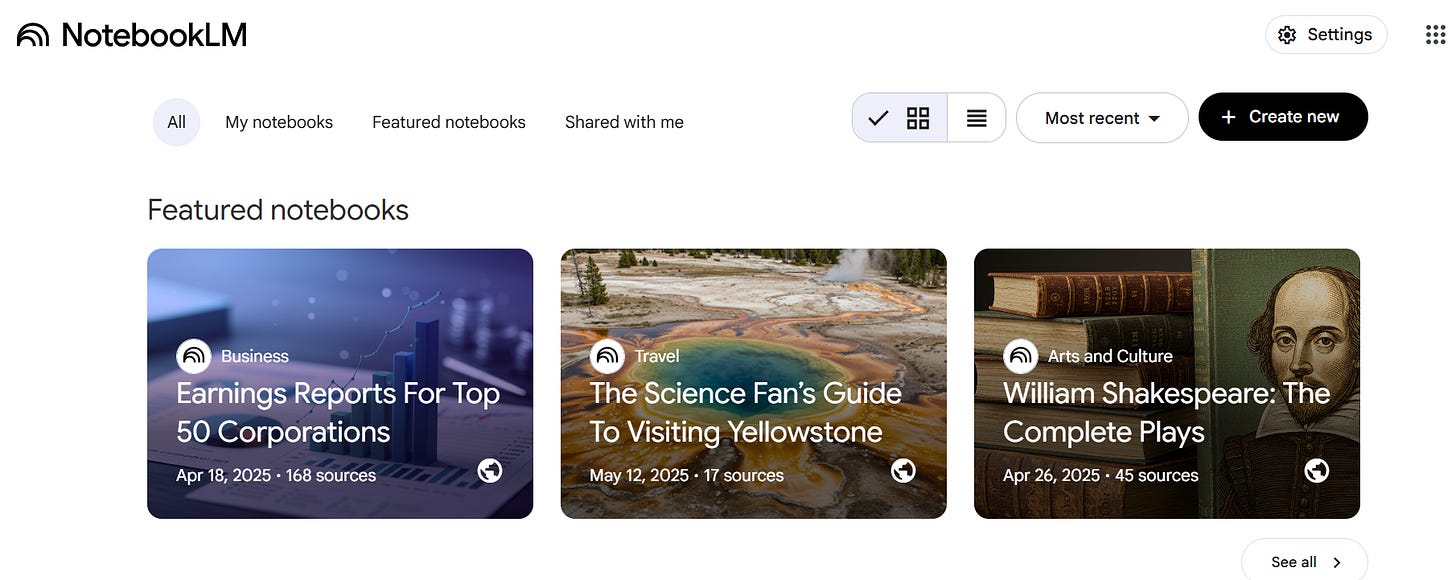

Google Expands NotebookLM with Deep Research Tool and Additional File Support

Google is enhancing its AI note-taking and research assistant, NotebookLM, with a new feature called Deep Research, designed to streamline complex online research by synthesizing detailed reports and suggesting relevant resources. This tool automates the research process, presenting source-grounded reports directly into users’ notebooks while they work. Additionally, support for more file types such as Google Sheets, Drive files as URLs, PDFs, and Microsoft Word documents has been added, enabling seamless integration and summarization capabilities. These updates are expected to be available to all users within a week.

DeepMind Unveils Next-Gen AI, SIMA 2, with Enhanced Language and Reasoning Skills

DeepMind has unveiled a research preview of SIMA 2, an advanced AI agent that integrates the language and reasoning capabilities of Google’s Gemini model. SIMA 2, building on its predecessor, significantly improves task completion in unfamiliar environments by leveraging self-improvement techniques, which allow it to acquire new skills through self-generated experiences. Powered by the Gemini 2.5 model, SIMA 2 aims to further DeepMind’s exploration into creating more versatile AI agents, although there is no timeline for its integration into physical robotics systems.

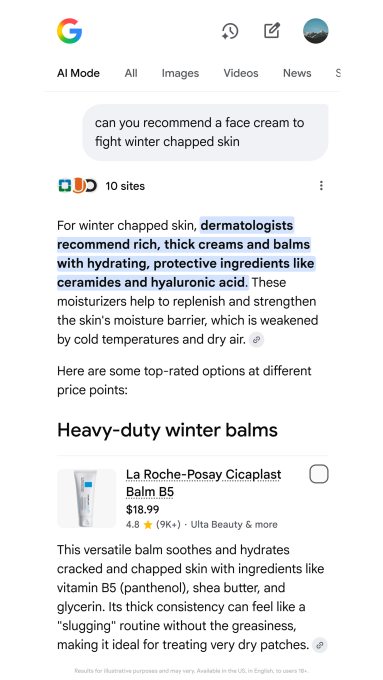

Google Enhances AI Shopping Tools with Conversational Search and Agentic Checkout Features

Google is launching a series of AI-driven shopping enhancements ahead of the holiday season, featuring conversational shopping in Google Search, upgrades in its Gemini app, agentic checkout, and a tool that calls local stores to check product availability. The updates aim to streamline and enrich the online shopping experience by incorporating natural language processing and real-time inventory data powered by its Shopping Graph, encompassing over 50 billion product listings. Notably, agentic checkout will debut in the U.S., allowing users to track item prices and authorize Google to make purchases using Google Pay, ensuring secure transactions. The enhancements emphasize reducing shopping hassles while maintaining elements of discovery and enjoyment.

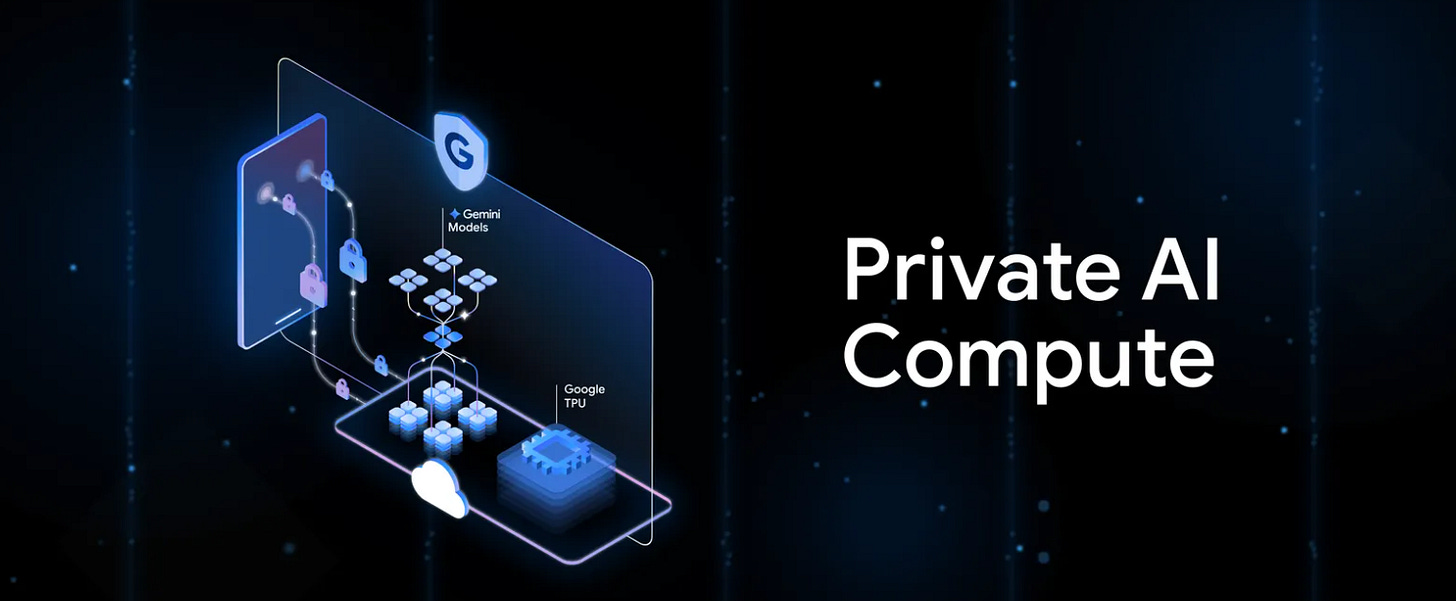

Google’s Private AI Compute Balances Cloud Power and User Data Privacy Needs

Google has introduced Private AI Compute, a cloud-based system designed to enhance AI experiences with strong privacy safeguards akin to local processing. By incorporating their advanced Gemini models, this platform aims to deliver faster and more intelligent AI functionalities while maintaining data security. It employs a protected computing environment, encryption, and zero access assurance, ensuring that user data remains private and inaccessible, not even by Google itself. This move reflects ongoing efforts by major tech companies to balance the demands of powerful AI capabilities with heightened user privacy expectations.

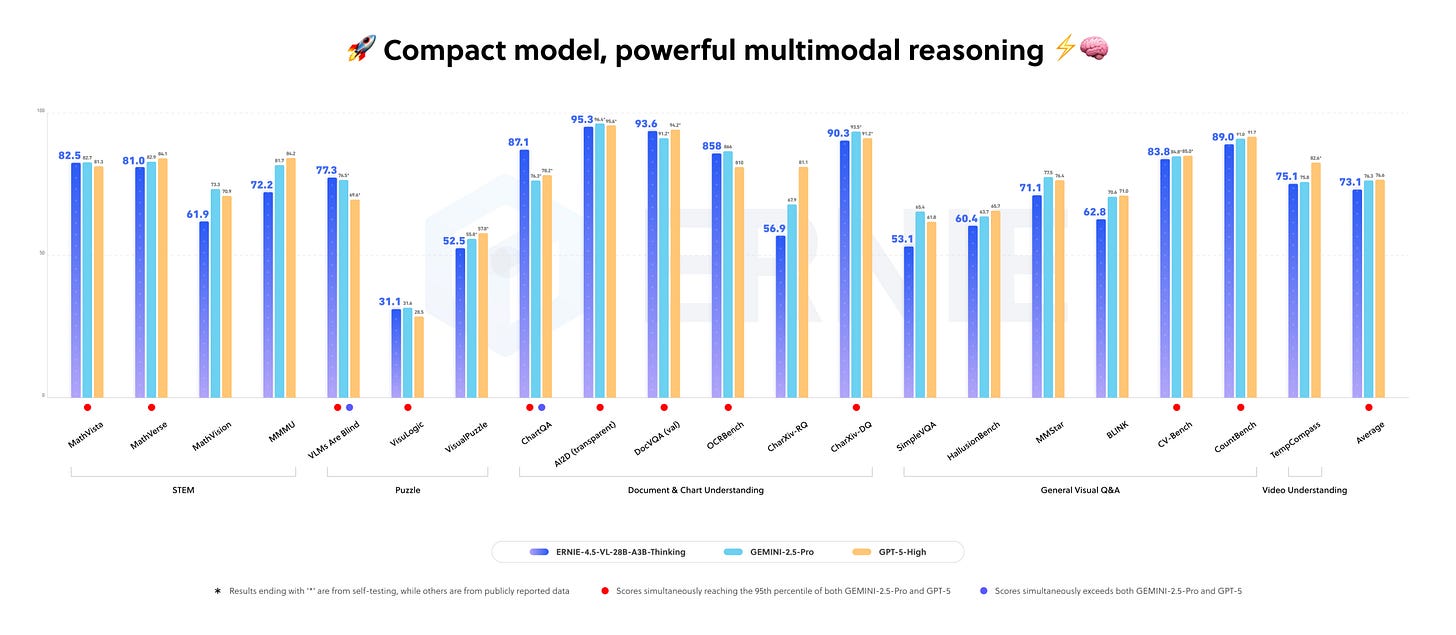

Baidu’s Multimodal ERNIE Model Surpasses GPT and Gemini in Key Enterprise Benchmarks

Baidu has introduced ERNIE-4.5-VL-28B-A3B-Thinking, a highly efficient multimodal AI model outperforming competitors like GPT and Gemini on key benchmarks. The model addresses enterprise needs by interpreting complex visual data, such as engineering schematics, video feeds, and logistics dashboards, which traditional text-focused models often overlook. ERNIE’s architecture operates with only three billion activated parameters, reducing inference costs and facilitating AI scaling. It also integrates visual grounding with tool use, moving from perception to automation, making it valuable for specific business contexts. Despite its capabilities, high hardware requirements might limit its use to organizations with robust AI infrastructure.

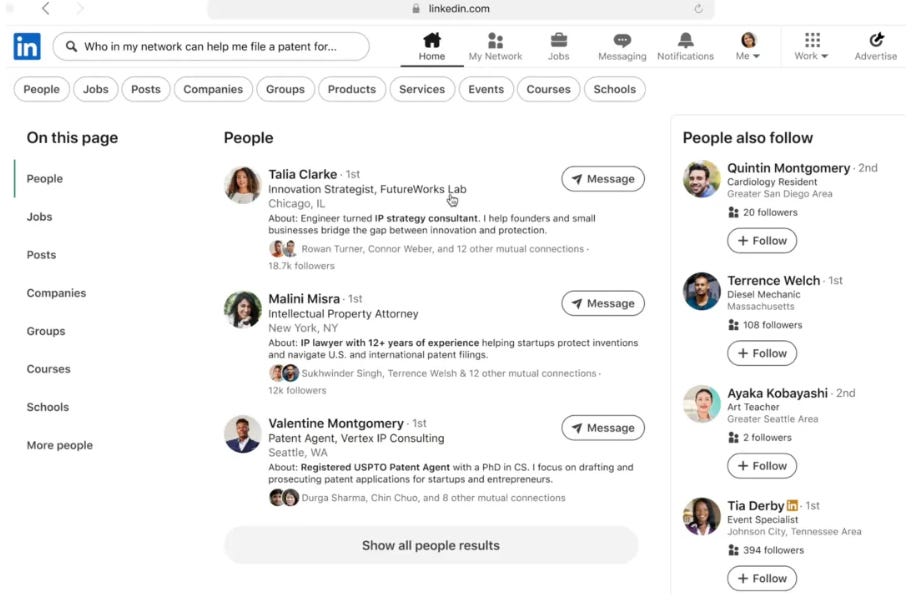

LinkedIn Enhances User Experience by Introducing AI-Powered People Search Functionality

LinkedIn is expanding its use of AI by introducing AI-powered people search to premium users in the U.S., allowing searches with natural language queries like “Find me investors in healthcare with FDA experience.” This development follows their earlier rollout of an AI-enhanced job search tool and marks a significant improvement over the platform’s previously complicated search system, which required users to rely heavily on filters. Similar to trends across major internet platforms embracing AI for search, LinkedIn aims to make connecting with valuable contacts easier and plans to extend this feature globally. However, the search tool still requires refinement, as different phrasing can yield varying results.

ElevenLabs Partners with Hollywood Stars for AI Voice Generation Marketplace

ElevenLabs has reached agreements with actors Michael Caine and Matthew McConaughey to create AI-generated versions of their voices, marking a significant development in Hollywood’s evolving relationship with AI. This collaboration allows ElevenLabs, which counts McConaughey as an investor, to provide content like McConaughey’s newsletter in Spanish audio using his AI voice. Additionally, the company plans to launch a marketplace where brands can access authorized AI-generated voices of celebrities, including Caine, Liza Minnelli, and Dr. Maya Angelou. This move aligns with efforts by other tech giants like Meta, which previously introduced voice assistants sounding like Kristen Bell and Judi Dench.

ElevenLabs Launches Scribe v2 Realtime for Fast, Multilingual Speech-to-Text Solutions

ElevenLabs has introduced Scribe v2 Realtime, an advanced Speech-to-Text model that provides live transcription in less than 150 milliseconds across over 90 languages, including 11 major Indian languages. The model achieves 93.5% accuracy on the FLEURS benchmark and offers features like negative latency prediction and voice activity detection, targeting developers and enterprises for applications such as voice assistants, live captioning, and medical dictation. In India, data residency compliance is enabled, and the model is available via the ElevenLabs API. Additionally, ElevenLabs has expanded into AI-generated music through partnerships with Merlin Network and Kobalt Music Group.

World Labs Launches Marble, Its First Freemium AI 3D World Model Product

World Labs, founded by AI pioneer Fei-Fei Li, has introduced Marble, its first commercial generative world model available in freemium and paid tiers. Marble allows users to create editable, downloadable 3D environments from various inputs like text prompts, photos, and videos. This launch positions World Labs ahead of competitors as Marble stands out for its persistent 3D environments, differing from real-time generation models by offering AI-native editing tools and a hybrid 3D editor. Marble can generate spatial layouts and detailed worlds, addressing initial issues of morphing and inconsistency. It has potential applications in gaming, virtual reality, and VFX, and could offer future advancements in robotics and spatial intelligence.

Understanding Spatial Intelligence: AI’s Next Frontier in Creativity and Human Progress

In a recent essay, a prominent AI researcher reflected on the ongoing quest to imbue artificial intelligence with spatial intelligence, underscoring its potential to transform fields like storytelling, robotics, and scientific discovery. Despite significant advancements in AI, notably with large language models, machines still struggle with spatial awareness and integration with physical realities. The essay highlighted the need for new generative models called world models, capable of understanding and interacting with complex environments. These developments hold promise for augmented human creativity and capability across diverse domains, emphasizing the role of technology in enhancing rather than replacing human skills.

Anthropic’s Claude Outperforms in Robotics Trial, Enhances Non-Experts’ Programming Abilities

Anthropic conducted an internal trial, Project Fetch, to evaluate its AI model Claude’s ability to assist non-experts in operating robot dogs. In the experiment, researchers with no robotics background were divided into two groups. One team used Claude, which helped them complete tasks faster and make significant progress towards robot autonomy in fetching beach balls. This Claude-assisted team excelled in connecting the robots and coding, thanks to the AI’s guidance in navigating sensor data and online resources. Although both groups successfully operated the robots, the team without Claude experienced more confusion and negative emotions. The trial highlighted the practical challenges AI faces, such as the risk of a robot miscalculating its path.

Anthropic Invests $50 Billion in US AI Infrastructure to Boost Research Growth

Anthropic is set to invest $50 billion in U.S. computing infrastructure to build data centers in Texas and New York, targeting the expansion of AI research and development. This partnership with Fluidstack aims to create 800 permanent jobs and 2,400 construction jobs, with facilities scheduled to be operational by 2026. The initiative aligns with government strategies to bolster domestic AI leadership and supports growing demand for Anthropic’s AI assistant, Claude. This investment coincides with AWS’s $38 billion partnership with OpenAI, highlighting a competitive drive among AI firms to expand computational capacities.

🎓AI Academia

2025 Evaluation Reveals Planning Abilities of Frontier Large Language Models Against LAMA

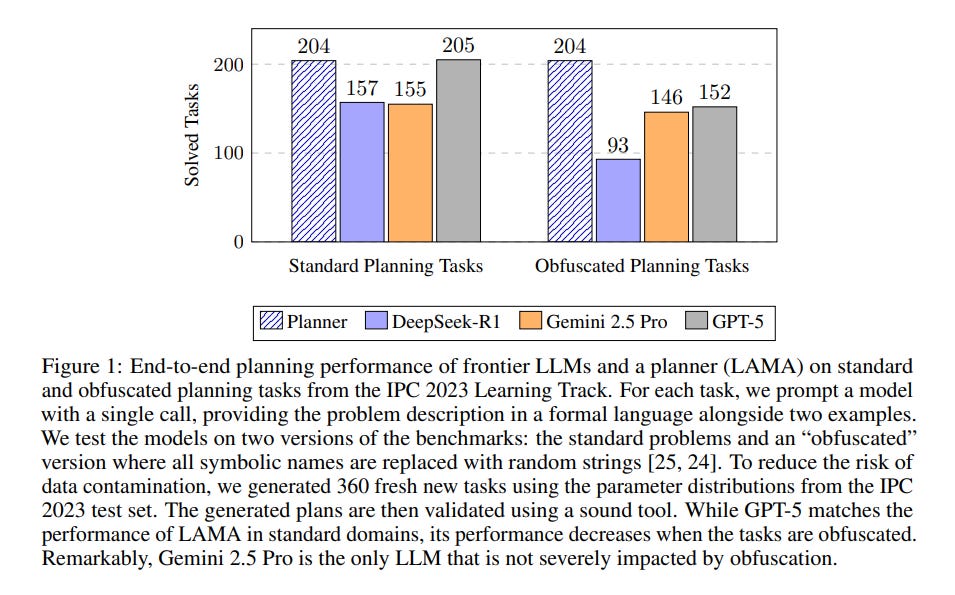

A recent study evaluates the planning capabilities of frontier Large Language Models (LLMs) as of 2025, highlighting significant advancements in their reasoning abilities. The research compared the performance of DeepSeek R1, Gemini 2.5 Pro, and GPT-5 against the traditional planner LAMA using tasks from the International Planning Competition’s Learning Track. GPT-5 notably matched LAMA on standard planning tasks but saw diminished performance with obfuscated tasks. Interestingly, Gemini 2.5 Pro demonstrated resilience to obfuscation, indicating a breakthrough in LLMs’ ability to handle complex, disguised scenarios. These findings illustrate a narrowing performance gap between LLMs and traditional planners on challenging benchmarks.

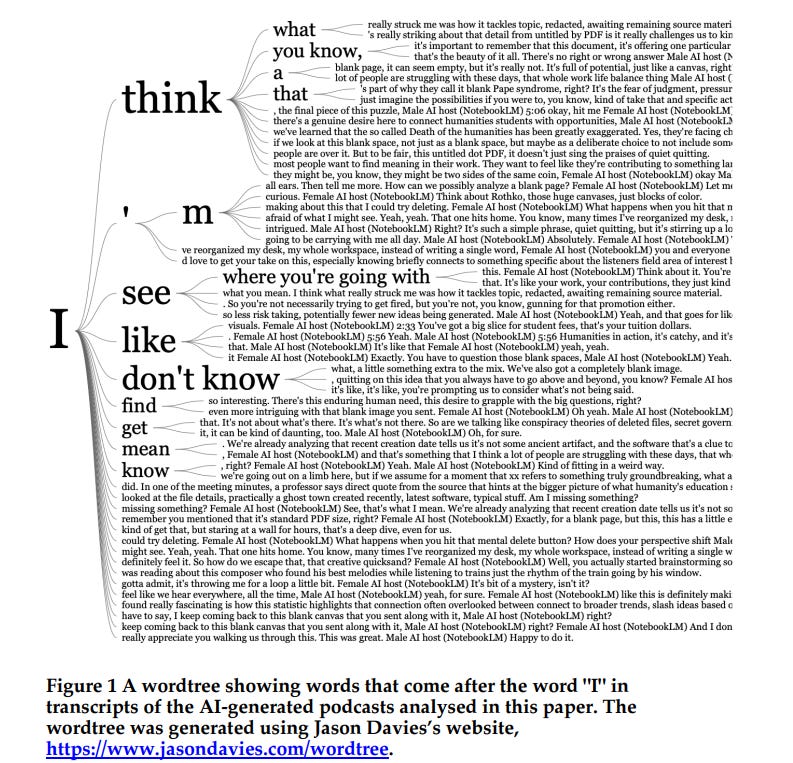

AI-Generated Podcasts Transform Cultural Narratives with Google’s NotebookLM Translation Impact

A new study submitted for peer review suggests that AI-generated podcasts by Google’s NotebookLM reshape media interaction by using AI hosts to generate discussions from uploaded documents. These podcasts, characterized by upbeat American-accented dialogues, translate texts and cultural contexts into a standardized white, middle-class American perspective. This marks a shift from traditional human-hosted podcasts that catered to specific communities and engaged with listener feedback, moving towards a more generic podcast experience.

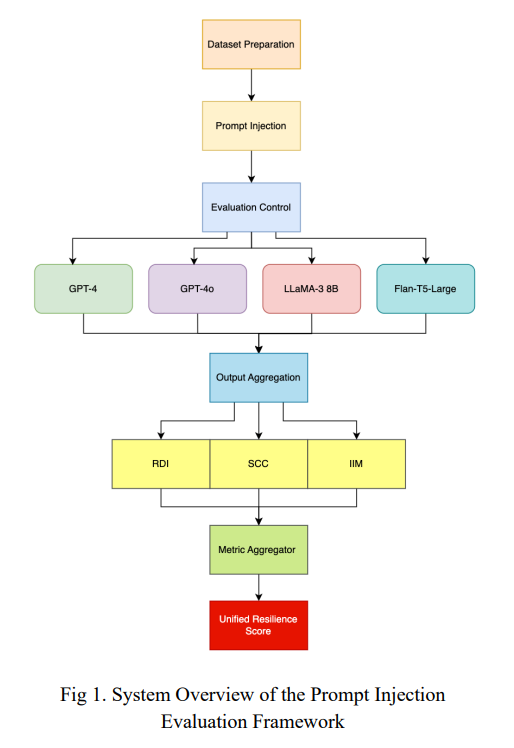

Study Highlights Vulnerability of Large Language Models to Prompt Injection Attacks

A recent study has evaluated the vulnerability of Large Language Models (LLMs) to prompt injection attacks, a new form of threat where hidden malicious instructions are inserted into inputs, causing the models to deviate from their intended tasks or generate unsafe responses. The research utilized a framework encompassing metrics such as Resilience Degradation Index, Safety Compliance Coefficient, and Instructional Integrity Metric to assess the robustness of models like GPT-4, LLaMA-3, and Flan-T5-Large across various language tasks. Results revealed that while GPT-4 showed the best resilience overall, open-weight models are more prone to such attacks, emphasizing that alignment and safety tuning are crucial for enhancing model security. The study underscores the importance of designing stronger alignment and safety protocols for LLMs beyond focusing on model size.

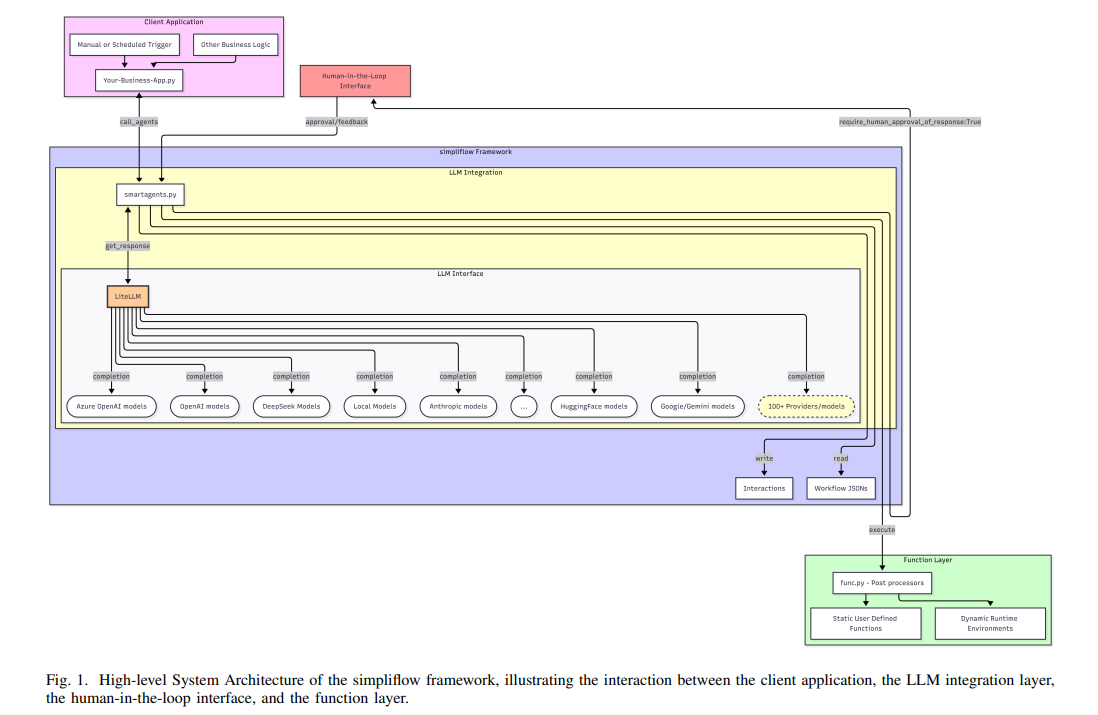

Simpliflow Framework Simplifies Generative Agentic AI Workflow Development and Deployment

Georgia Institute of Technology has released a new open-source framework called simpliflow, aimed at simplifying the development and deployment of generative agentic AI workflows. Designed as a lightweight and modular Python framework, simpliflow leverages a JSON-based configuration to facilitate the orchestration of linear, deterministic workflows with large language models. By integrating with LiteLLM to support over 100 LLMs, simpliflow promises ease of use, speed, and control, offering a streamlined alternative to existing complex systems like LangChain and AutoGen. The framework is particularly noted for reducing the need for extensive coding, thus accelerating the adoption of AI automation across various applications.

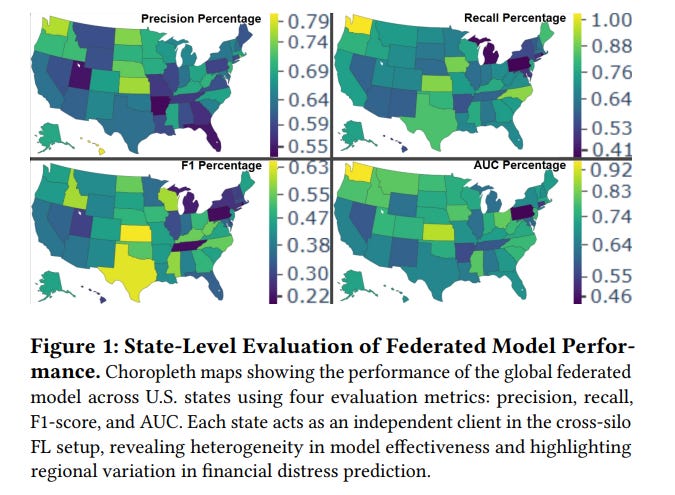

Explainable Federated Learning Innovates U.S. State-Level Financial Distress Prediction Model

Researchers at Rensselaer Polytechnic Institute have pioneered the use of explainable federated learning (FL) for modeling state-level financial distress in the United States. This innovative approach utilizes data from the U.S. National Financial Capability Study to predict consumer financial hardship while preserving data privacy by treating each state as a unique data silo. By integrating explainable AI techniques, the model uncovers both national and state-specific predictors of financial distress, such as contact from debt collection agencies. The framework, which addresses the challenges of data sensitivity and model transparency, offers a scalable solution for early financial distress warning systems, particularly in regions with stringent privacy regulations or fragmented data infrastructures.

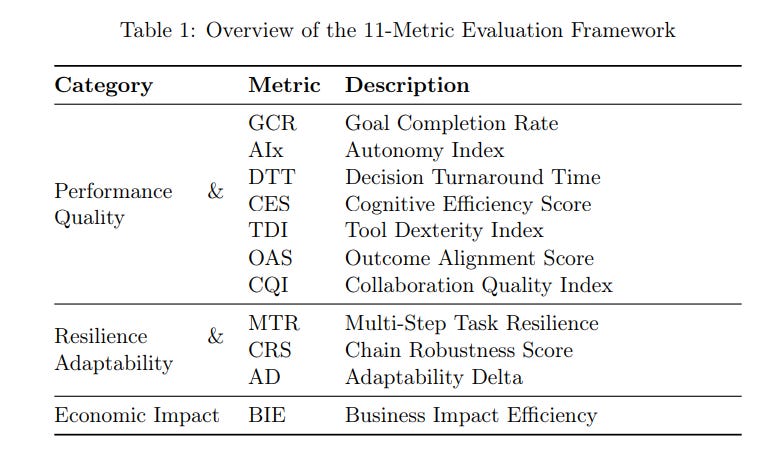

Towards Comprehensive Evaluation Framework for AI Agents Using Outcome-Oriented Metrics

A new framework for evaluating AI agents, focused on outcome-based and task-agnostic metrics, has been proposed to address the inadequacy of traditional performance metrics like latency and token throughput. This approach introduces eleven metrics that assess the quality of an AI agent’s decisions, autonomy, adaptability, and business impact in various domains. A large-scale simulation involving four agent architectures across five sectors demonstrated the framework’s effectiveness, with Hybrid Agents consistently achieving high Goal Completion Rates and Return on Investment. This methodology aims to standardize AI agent evaluations to enhance their development, deployment, and governance.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.