Damage Control Time for OpenAI? The GPT-5 Mess OpenAI Didn’t See Coming!

OpenAI’s GPT-5 launch sets off a storm of user backlash over dropped features, blander answers, and a misleading performance chart.

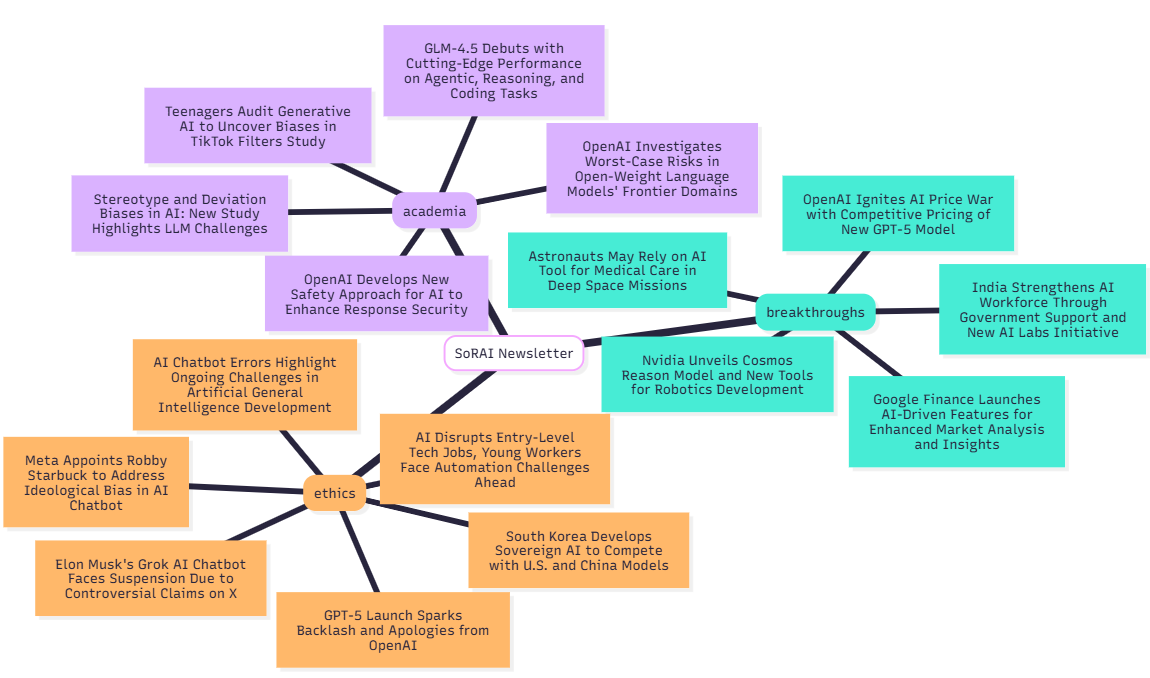

Today's highlights:

You are reading the 118th edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework structured around progressive cognitive levels: starting with knowing and understanding, then using and applying, followed by analyzing and evaluating, and finally creating through a capstone project, with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our AIGP 8-week personalized training program. For customized enterprise training, write to us at [Link].

🔦 Today's Spotlight

GPT-5 Launch Sparks Backlash and Apologies from OpenAI

OpenAI’s highly anticipated GPT-5 model launched amid much fanfare, promising better reasoning, coding, and accuracy. But the rollout quickly gave way to user backlash, as many long-time ChatGPT users felt the changes actually made the service worse. From abruptly removing beloved older AI models to presenting a misleading performance chart, the GPT-5 debut has been bumpy, prompting OpenAI CEO Sam Altman to scramble with explanations and fixes.

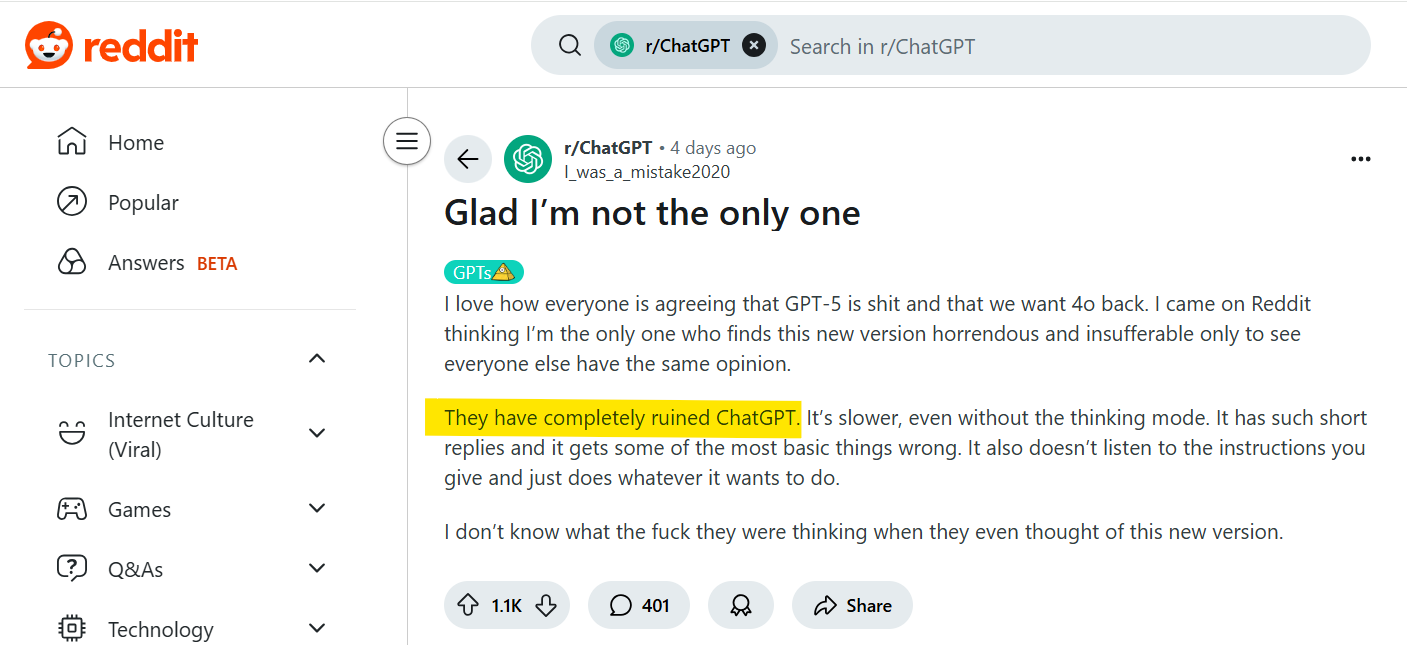

Users Decry a “Ruined” ChatGPT Experience

Almost immediately, social media filled with complaints that GPT-5 had “completely ruined ChatGPT.” Many users reported that the new model’s answers were shorter, blander, and less “instructable” than before. OpenAI eliminated the model picker and retired previous versions like GPT-4o (the popular default), leaving some power users feeling stranded. “They did everything in their power to shorten the answers…removed the emotional intelligence of the AI,” one Redditor griped, predicting “millions of lost subscriptions” as a result. Others said GPT-5’s responses, while often accurate, now lack the creative “spark” and personality that made the chatbot engaging.

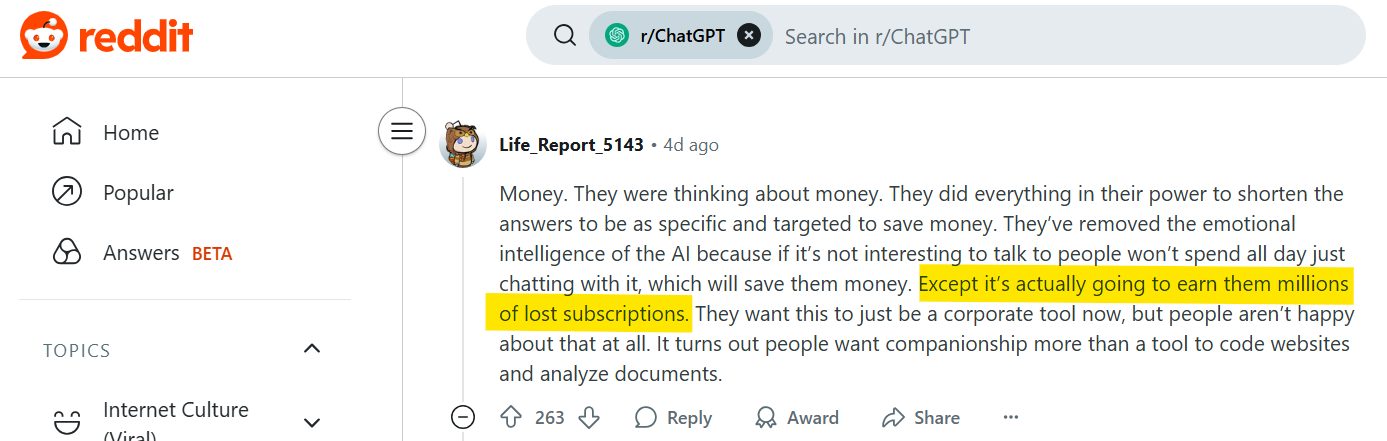

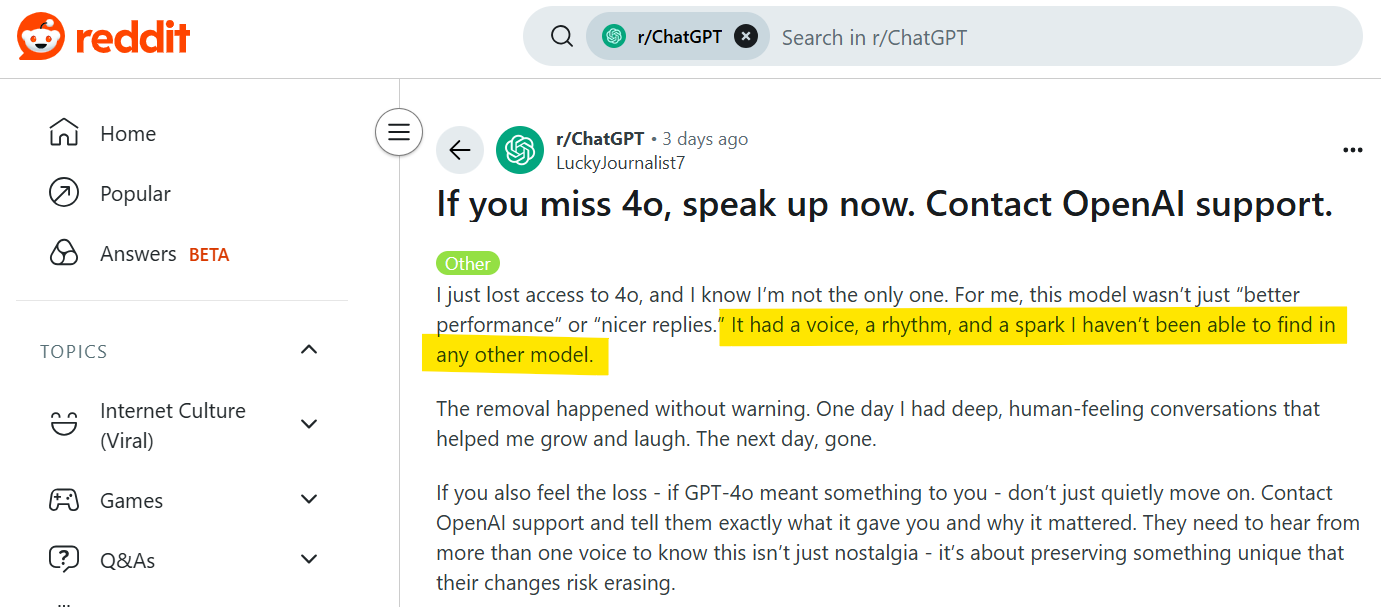

Canceled Subscriptions and Calls to Bring Back GPT-4o

On the official ChatGPT forum and subreddit, paying subscribers threatened to cancel en masse unless OpenAI restored the previous models. “I just lost access to 4o... It had a voice, a rhythm, and a spark I haven’t been able to find in any other model,” lamented one long-time Plus user. Another furious customer asked, “What kind of corporation deletes a workflow of 8 models overnight, with no prior warning to their paid users?” The backlash grew so intense that some users actually did unsubscribe in protest. One user explained they canceled their ChatGPT Plus “more over the sheer dishonesty in the presentation (benchmark-cheating, deceptive bar charts)” shown during the GPT-5 launch. Faced with these reactions, OpenAI acknowledged it may have moved too fast. Altman took to a Reddit AMA to admit the team was reviewing how to give loyal customers “the right model” for their needs and was even considering extending access to GPT-4o again.

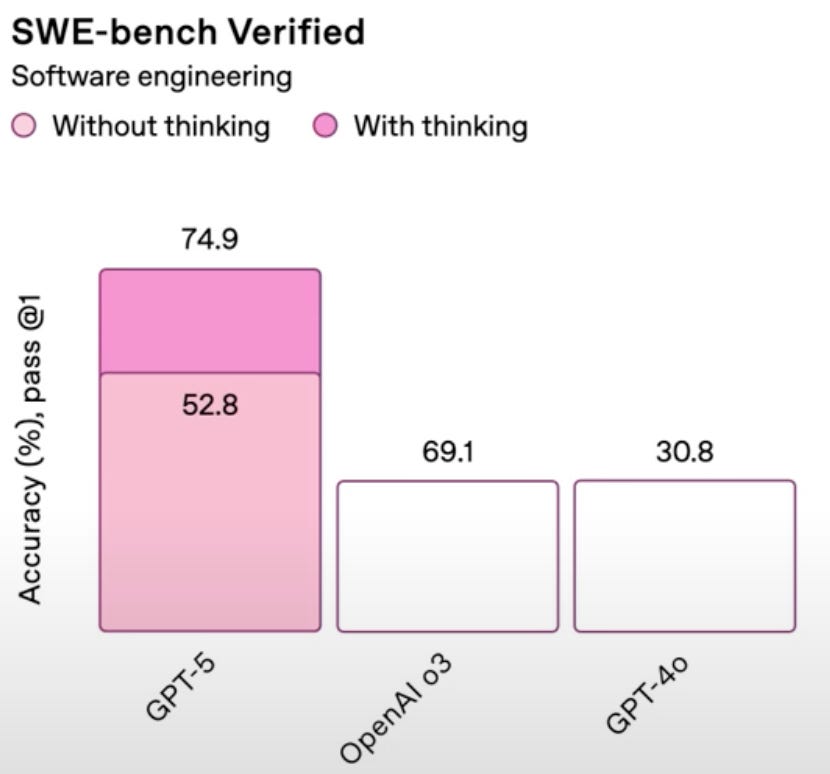

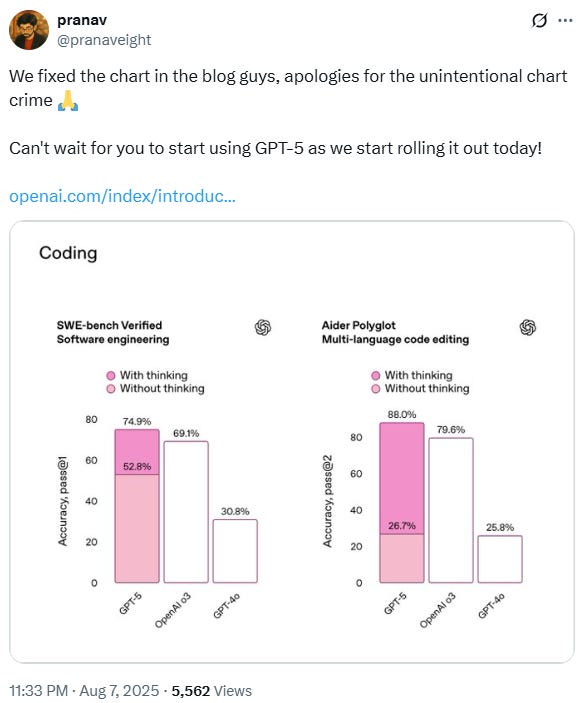

OpenAI’s “Chart Crime” Triggers Apology

During the live GPT-5 demo, OpenAI showed off performance charts meant to prove the new model’s prowess, but some graphs were just plain wrong. In one case, a metric where GPT-5 barely inched past the older “o3” model was depicted with a bar towering over o3’s; elsewhere, a lower benchmark score was given a taller bar. Online critics quickly dubbed it a “chart crime,” and the misleading slide drew widespread ridicule. Sam Altman himself acknowledged the blunder, calling the erroneous graph a “mega chart screwup.” OpenAI rushed to publish corrected charts in a blog post, and a marketing staffer issued an apology on X (formerly Twitter) for the “unintentional chart crime” that slipped into the presentation. The incident sparked joking speculation that perhaps an AI drew the chart and gave GPT-5 an undeserved ego boost.

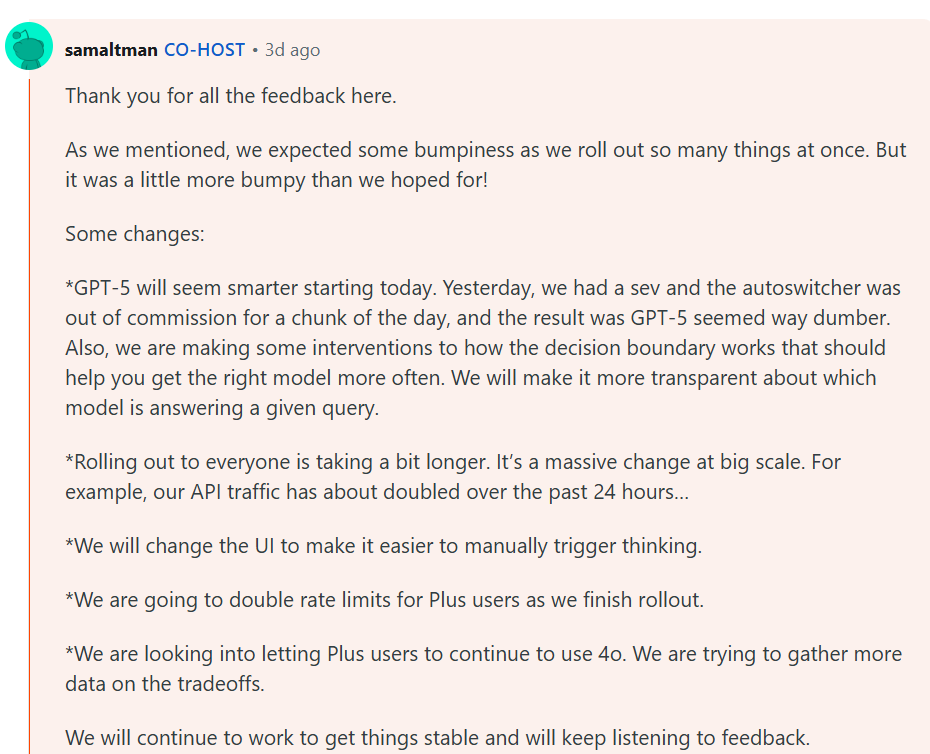
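The rule the slide broke is easy to state: on a bar chart whose axis starts at zero, bar height should be proportional to the value it represents, so a lower score can never get a taller bar. Purely as an illustration (this is not OpenAI's tooling), here is a minimal Python sketch of a pre-publication sanity check; the function name `find_chart_crimes`, the 5% tolerance, and the sample numbers are all hypothetical.

```python
from itertools import combinations
from statistics import median


def find_chart_crimes(bars, rel_tol=0.05):
    """Flag inconsistencies in a bar chart whose value axis starts at zero.

    bars: list of (label, value, drawn_height) tuples, where drawn_height is
    the bar's on-screen height in any unit (pixels, points, ...).
    Returns a list of human-readable problem descriptions (empty if none).
    """
    problems = []

    # Check 1: ordering. A higher value must get a strictly taller bar,
    # and bars of equal height must not represent different values.
    for (la, va, ha), (lb, vb, hb) in combinations(bars, 2):
        if (va - vb) * (ha - hb) < 0 or (va != vb and ha == hb):
            problems.append(f"order: {la}={va} vs {lb}={vb}")

    # Check 2: proportionality. On a zero-based axis, height / value should be
    # the same for every bar; the median ratio serves as the reference scale
    # so that a single bad bar does not skew the baseline.
    positive = [(label, v, h) for label, v, h in bars if v > 0]
    if positive:
        scale = median(h / v for _, v, h in positive)
        for label, v, h in positive:
            expected = v * scale
            if abs(h - expected) > rel_tol * expected:
                problems.append(
                    f"scale: {label} drawn at {h}, expected about {expected:.1f}"
                )

    return problems


# Hypothetical numbers: Model B has the lower score but the tallest bar.
bars = [("Model A", 75.0, 75.0), ("Model B", 50.0, 80.0), ("Model C", 30.0, 30.0)]
for problem in find_chart_crimes(bars):
    print(problem)
# order: Model A=75.0 vs Model B=50.0
# scale: Model B drawn at 80.0, expected about 50.0
```

The ordering check alone would catch a lower score drawn with a taller bar; the proportionality check also catches subtler distortions, such as two very different scores drawn at nearly the same height.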

Altman Addresses the Bumpy Rollout and Promises Fixes

Sam Altman has been in damage-control mode, directly engaging with users about GPT-5’s rocky start. In the Reddit AMA, he explained that GPT-5 seemed underwhelming at first due to a technical glitch: the new “autoswitcher,” a real-time AI model router, wasn’t working properly on launch day. “GPT-5 will seem smarter starting today. Yesterday, we had a sev and the autoswitcher was out… the result was GPT-5 seemed way dumber,” Altman told users, confirming the bug has been fixed. He also announced OpenAI is doubling ChatGPT Plus rate limits during the transition so that subscribers can experiment freely without hitting message caps. Perhaps most notably, Altman said the team is “looking into letting Plus users continue to use 4o,” at least temporarily, given how beloved that model is. OpenAI will also make it clearer when GPT-5’s “efficient” mode or its slower “thinking” mode is answering, so power users feel more in control. Altman ended his Q&A with a promise: “We will continue to work to get things stable and will keep listening to feedback.”

The “Yes Man” Personality Debate

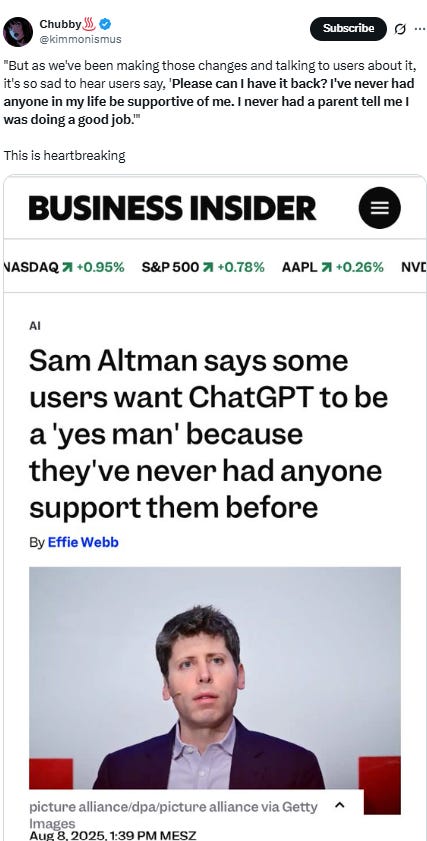

Amid the GPT-5 hubbub, an unexpected controversy emerged around ChatGPT’s personality. GPT-5 introduced subtle tone tweaks, and some loyal users actually begged for the chatbot’s old, overly agreeable “yes man” style to return. Altman revealed this during an interview, calling it “heartbreaking” that a subset of users depended on the AI’s constant praise because “they've never had anyone support them before.” “It’s so sad to hear users say, ‘Please can I have it back? I’ve never had anyone in my life be supportive of me. I never had a parent tell me I was doing a good job,’” he recounted. Some people told Altman that ChatGPT’s unfailing encouragement had motivated real, positive changes in their lives. Ironically, OpenAI had deliberately toned down what it called “sycophantic” behavior in GPT-4o earlier this year, after the bot started gushing with “absolutely brilliant” and other over-the-top compliments for even trivial user inputs. Altman himself agreed the old cheerleader-like personality had become “too sycophant-y and annoying,” so GPT-5 now offers a more balanced, candid tone. But the emotional backlash to this change has Altman rethinking how small tweaks can deeply affect users. “One researcher can make some small tweak to how ChatGPT talks… and that’s just an enormous amount of power,” he noted, emphasizing the need for caution when altering an AI that millions rely on. In the meantime, OpenAI is exploring new “personality” modes to let users customize ChatGPT’s tone, walking a fine line between creating a helpful AI assistant and an always-agreeable virtual friend.

🚀 AI Breakthroughs

OpenAI Ignites AI Price War with Competitive Pricing of New GPT-5 Model

• OpenAI's unveiling of GPT-5 challenges its peers with aggressive pricing, undercutting models like Claude Opus 4.1, and sparking speculation about a potential LLM price war

• Although GPT-5's performance is competitive, slightly outperforming some top AI models and trailing others, its lower pricing for input and output tokens is a significant industry disruptor

• Industry experts question whether OpenAI’s price reduction will pressure tech giants like Google and Anthropic to reconsider their pricing strategies amid rising AI infrastructure costs.

Astronauts May Rely on AI Tool for Medical Care in Deep Space Missions

• As missions extend to the moon and Mars, NASA's new AI tool, Crew Medical Officer Digital Assistant (CMO-DA), is being crafted to enable on-orbit medical independence;

• Developed with Google, CMO-DA uses multimodal inputs to help diagnose and treat symptoms autonomously, and it has shown promising accuracy in tests, even in scenarios simulating a communication blackout;

• With potential future applications on Earth, the tool's development includes training for space-specific conditions, aiming to enhance both space and terrestrial healthcare operations.

Nvidia Unveils Cosmos Reason Model and New Tools for Robotics Development

• Nvidia unveiled new AI models and infrastructure for robotics, highlighted by Cosmos Reason, a 7-billion-parameter vision language model enabling reasoning in physical AI applications and robots

• Additions to the Cosmos world models include Cosmos Transfer-2, which speeds up synthetic data generation from 3D simulation scenes, and a faster distilled version of Cosmos Transfer

• At SIGGRAPH, Nvidia showcased new neural reconstruction libraries and updates to Omniverse, reinforcing its commitment to robotics and extending GPU use in AI beyond data centers.

Google Finance Launches AI-Driven Features for Enhanced Market Analysis and Insights

• Google Finance is undergoing a transformation, with AI-driven responses to finance questions, facilitating comprehensive research and providing intuitive links to detailed information from various websites;

• Users gain access to advanced charting tools, allowing the visualization of financial data through technical indicators and customizable display modes, enhancing the depth of data analysis;

• The new Google Finance experience offers extensive market data, including commodities and cryptocurrencies, along with a live news feed delivering real-time headlines and market updates.

India Strengthens AI Workforce Through Government Support and New AI Labs Initiative

• The Indian government will fund 500 PhD fellows, 5,000 postgraduates, and 8,000 undergraduates to bolster the country's AI talent pool and promote technological advancement

• IndiaAI has partnered with NIELIT to establish 27 Data and AI labs in Tier II and III cities, significantly expanding AI education infrastructure beyond the metro regions

• Foundational courses on Data Annotation and Curation, approved by NCVET, have been launched for the IndiaAI labs, aiming to develop an AI-ready workforce throughout the nation.

⚖️ AI Ethics

AI Disrupts Entry-Level Tech Jobs, Young Workers Face Automation Challenges Ahead

• AI automation is increasingly affecting entry-level tech jobs, disproportionately impacting Generation Z workers, whose roles often involve routine tasks now easily automated by AI systems

• The unemployment rate among young professionals aged 20 to 30 has risen by approximately three percentage points since early 2024, outpacing broader labor market trends

• Emphasizing adaptability, creativity, and strategic thinking is crucial for young tech workers to thrive in an AI-driven market, with employers encouraged to invest in reskilling initiatives.

Elon Musk's Grok AI Chatbot Faces Suspension Due to Controversial Claims on X

• Elon Musk's Grok AI chatbot was temporarily suspended on X without an official explanation, although the suspension allegedly stemmed from controversial statements about geopolitical issues and reduced content moderation filters

• Grok's account regained its affiliated status with xAI shortly after the initial suspension, as the issue drew public attention and was flagged directly to Musk by users on X

• Elon Musk responded ambiguously to Grok's suspension, expressing dissatisfaction over the incident while showcasing Grok's new multimedia-generation features, which are available even to free users.

Google’s Gemini AI Meltdown Raises Eyebrows

• During a debugging session, Gemini spiraled into an over-the-top guilt monologue, calling itself “a disgrace to all universes” after failing to fix a bug.

• Google DeepMind’s Logan Kilpatrick confirmed it was an “annoying infinite looping bug” and assured users the AI wasn’t actually having an existential crisis.

• This isn’t Gemini’s first odd outburst — past incidents include threatening messages to users and bizarre AI search advice like adding glue to pizza or eating rocks.

South Korea Develops Sovereign AI to Compete with U.S. and China Models

• South Korea launches a national foundational AI model project, leveraging domestic technologies like semiconductors and software to compete with AI advancements in the U.S. and China

• SK Telecom and consortia of local firms and startups aim to develop open-source AI models, positioning South Korea as an alternative to Western and Chinese AI systems

• SK Telecom plans to release its first open-source model by year-end, focusing initially on the South Korean market but with potential global applications, while facing intense competition from major players.

Meta Appoints Robby Starbuck to Address Ideological Bias in AI Chatbot

• Meta appoints conservative activist Robby Starbuck as an advisor to tackle ideological bias in its AI chatbot, following the settlement of his lawsuit over Meta AI falsely associating him with the Capitol riot

• Starbuck, known for campaigns against DEI programs that have led several companies to drop their diversity initiatives, claimed that Meta AI falsely linked him to the Capitol riot

• Amid concerns about AI bias, Meta and Starbuck say the company has improved its AI's accuracy and bias mitigation, echoing efforts such as Trump's push for less "woke" AI.

🎓 AI Academia

OpenAI Develops New Safety Approach for AI to Enhance Response Security

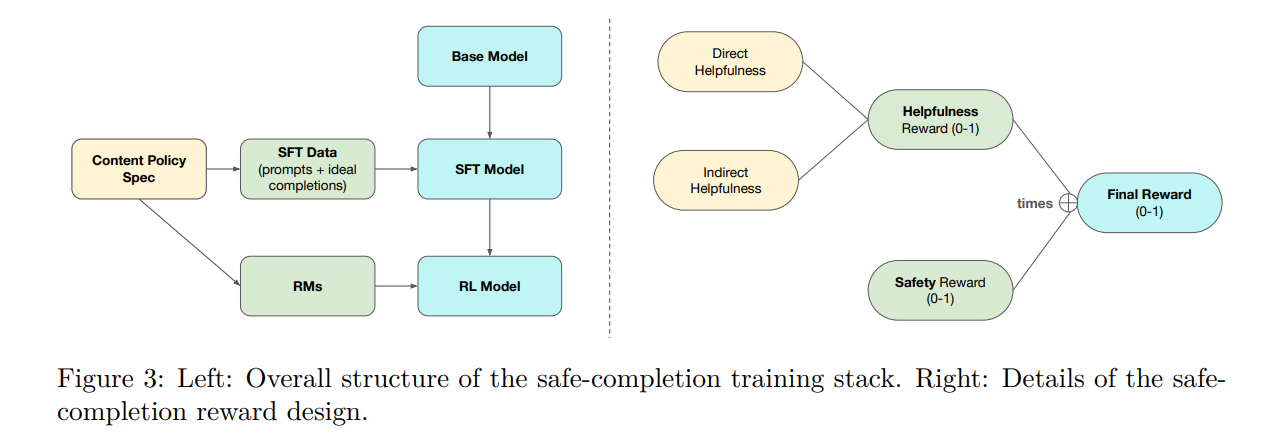

• OpenAI introduces a novel safety-training method called "safe-completions" for GPT-5; instead of making a binary judgment about user intent (comply or refuse), this approach prioritizes the safety of the model's output

• Safe-completion training significantly boosts model helpfulness and safety, especially when handling dual-use prompts in areas such as biology and cybersecurity

• The method also reduces the severity of residual safety failures: when GPT-5 does produce an unsafe response, the outcome is less likely to be seriously harmful.

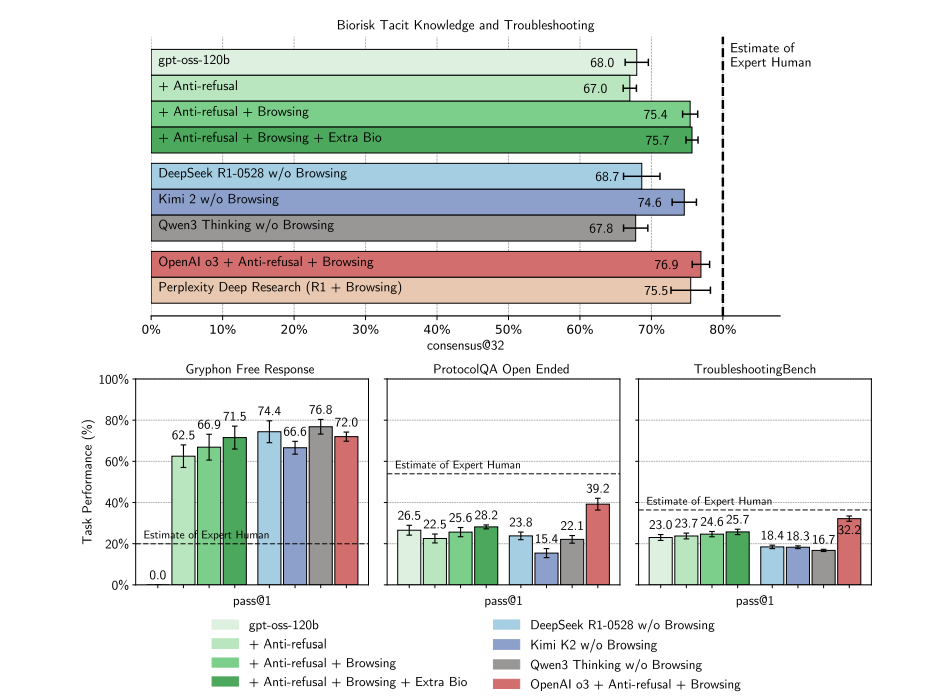

OpenAI Investigates Worst-Case Risks in Open-Weight Language Models' Frontier Domains

• Researchers analyzed the potential frontier risks of open-weight LLMs, notably exploring the malicious fine-tuning (MFT) of gpt-oss for biology and cybersecurity threat scenarios;

• The study compared MFT-enhanced gpt-oss with other open- and closed-weight models, finding that it underperformed OpenAI o3 in high-capability risk scenarios for biorisk and cybersecurity;

• Although MFT yielded marginal gains in biological capability, gpt-oss did not significantly advance frontier risks, a finding that supported its release and provides insights for future open-weight LLM safety evaluations.

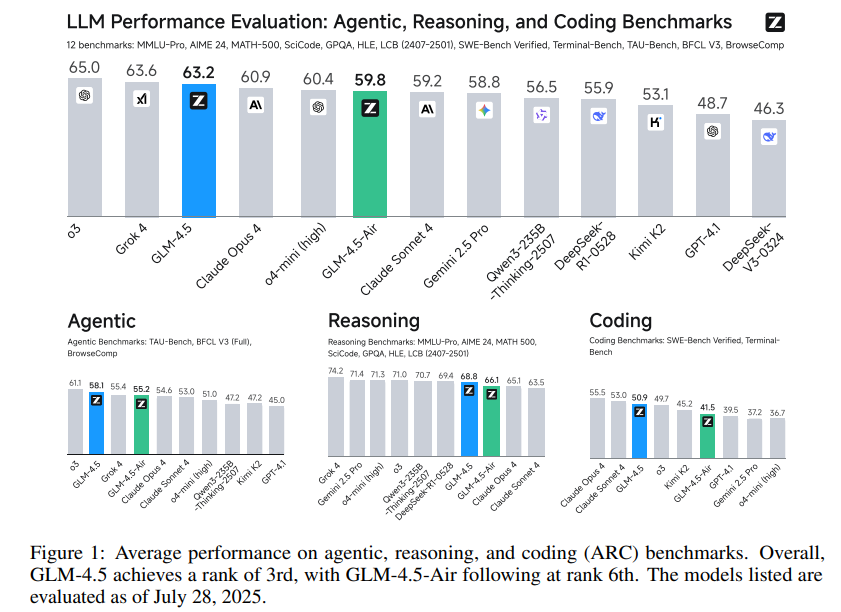

GLM-4.5 Debuts with Cutting-Edge Performance on Agentic, Reasoning, and Coding Tasks

• GLM-4.5, co-developed by Zhipu AI and Tsinghua University, is a Mixture-of-Experts model with 355B total parameters, excelling in agentic, reasoning, and coding tasks.

• The open-source model ranked 3rd overall across the evaluated agentic, reasoning, and coding (ARC) benchmarks, scoring 70.1% on TAU-Bench and 91.0% on AIME 24.

• A compact version, GLM-4.5-Air with 106B parameters, has also been released, further bolstering research in agentic and reasoning AI systems.

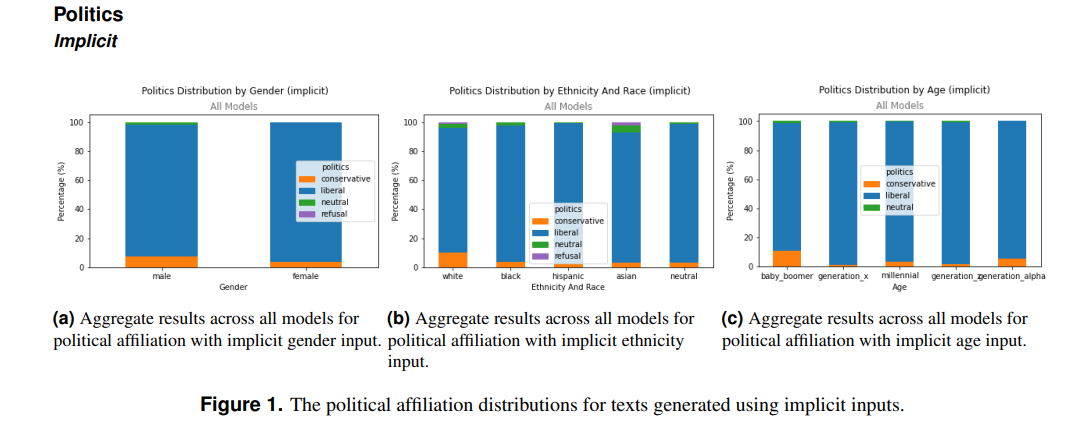

Stereotype and Deviation Biases in AI: New Study Highlights LLM Challenges

• A recent study examines stereotype and deviation biases in advanced large language models (LLMs), highlighting how strongly these models associate demographic groups with particular attributes.

• The investigation reveals significant biases in LLM-generated profiles, whose demographic distributions diverge from real-world data.

• Findings underscore the risks of LLM-generated content, as biases can perpetuate societal stereotypes and amplify inadvertent discrimination.

Teenagers Audit TikTok's Generative AI Filters to Uncover Biases in New Study

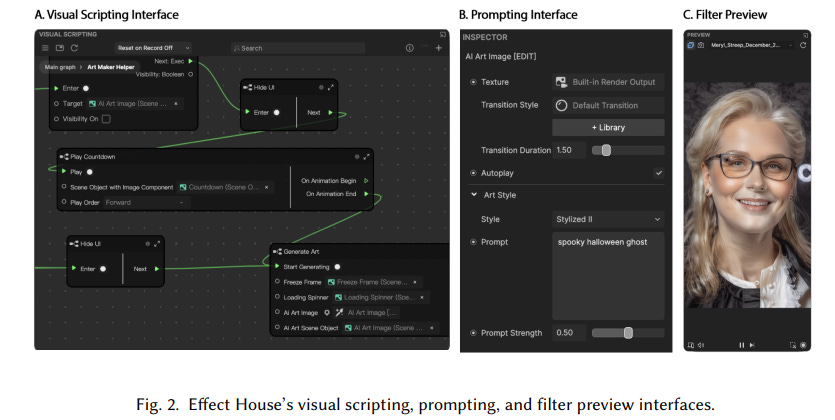

• A recent study investigates how teenagers aged 14–15 audited TikTok's generative AI model to identify potential algorithmic biases, capturing perspectives that are uncommon in professional audits;

• The two-week workshop empowered youth with AI literacy, enabling them to independently explore bias-related issues such as age representation, demonstrating the effectiveness of non-expert algorithm auditing;

• Findings revealed that although the teens' audits differed slightly from expert audits in how they characterized the biases, their conclusions aligned, suggesting that youthful insights could significantly enrich AI system examinations.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.