Could Your Content Be Flagged Just Because You Used a Long Dash?

OpenAI says ChatGPT will now ditch em dashes if you tell it to.

Today’s highlights:

ChatGPT’s excessive use of the em dash (—), dubbed the “ChatGPT hyphen,” had become a telltale marker of AI-generated content across essays, emails, and posts. Although some human writers defended the punctuation as perfectly legitimate, its overuse by AI systems bred suspicion and bias, and human work was often mistaken for machine output. OpenAI recently shipped a long-awaited fix: ChatGPT now follows Custom Instructions telling it not to use em dashes. CEO Sam Altman called this a “small-but-happy win,” emphasizing improved user control and personalization. The change not only appeases style purists but also undercuts a superficial yet widely used method of AI content detection.

Detecting AI-generated text remains a major challenge. OpenAI’s own classifier, introduced in 2023, correctly identified only 26% of AI-written text and wrongly flagged 9% of human-written text as AI-generated, which led OpenAI to withdraw it. Other tools, such as GPTZero, Originality.ai, and Turnitin’s AI writing checker, mostly rely on statistical markers such as perplexity (how predictable the text is to a language model) and burstiness (how much sentence length and structure vary), both of which can be manipulated through paraphrasing or formatting tricks. These systems are especially prone to misclassifying writing by non-native English speakers, raising fairness concerns. Detection and evasion have become a cat-and-mouse game, and no foolproof method exists yet.
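Commercial detectors do not publish their exact formulas, so the minimal sketch below uses two simplified, hypothetical stand-ins to show what these statistical markers measure: burstiness as the coefficient of variation of sentence length, and perplexity under a toy add-one-smoothed unigram model rather than the large neural language model a real detector would use. The function names are illustrative and are not any tool’s API.

```python
import math
import re
from collections import Counter


def sentence_lengths(text: str) -> list[int]:
    """Word counts per sentence, using a naive split on ., ! and ? (an assumption)."""
    sentences = [s for s in re.split(r"[.!?]+\s*", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Hypothetical burstiness score: std / mean of sentence length (0 = perfectly uniform)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    mean = sum(lengths) / len(lengths)
    variance = sum((n - mean) ** 2 for n in lengths) / len(lengths)
    return math.sqrt(variance) / mean


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under an add-one-smoothed unigram model fit on `reference`.

    Real detectors score text with a large neural language model; this toy model only
    illustrates the formula exp(-average log-probability per token).
    """
    ref_tokens = reference.lower().split()
    tokens = text.lower().split()
    if not tokens:
        raise ValueError("text must contain at least one token")
    counts = Counter(ref_tokens)
    vocab_size = len(set(ref_tokens) | set(tokens))
    denom = len(ref_tokens) + vocab_size
    log_prob = sum(math.log((counts[t] + 1) / denom) for t in tokens)
    return math.exp(-log_prob / len(tokens))
```

On this measure, text whose sentences all have the same length scores 0 for burstiness, while a mix of very short and long sentences scores higher; text made of words the model expects gets lower perplexity. Because both numbers depend only on surface statistics, light paraphrasing or reformatting can shift them, which is why such detectors are easy to game.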

To overcome these flaws, companies such as OpenAI and Google DeepMind are developing watermarking techniques: subtle, invisible patterns embedded in text as it is generated. OpenAI’s prototype can identify watermarked ChatGPT content with 99.9% accuracy under controlled conditions.
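OpenAI has not published how its prototype works, and Google DeepMind’s approach may differ, so the sketch below instead follows the “green list” scheme described in academic work on LLM watermarking. A secret key and the previous token pseudo-randomly mark part of the vocabulary as “green,” generation favors green tokens, and a detector holding the key counts green tokens and runs a z-test. The key, the `GAMMA` value, and the toy generator are all illustrative assumptions.

```python
import hashlib
import math

GAMMA = 0.5  # assumed fraction of the vocabulary that is "green" at each step
KEY = "hypothetical-secret-key"  # illustrative; a real deployment keeps this private


def is_green(prev_token: str, token: str, key: str = KEY) -> bool:
    """Pseudo-randomly assign `token` to the green list, seeded by the previous token and a key."""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode("utf-8")).digest()
    # Map the first 8 bytes to a number in [0, 1); about GAMMA of tokens fall below the cut-off.
    return int.from_bytes(digest[:8], "big") / 2**64 < GAMMA


def generate_watermarked(vocab: list[str], length: int, key: str = KEY) -> list[str]:
    """Toy generator that always emits a green token.

    A real model would only add a small bias to green-token logits so the text stays fluent.
    """
    tokens = ["<s>"]
    for _ in range(length):
        greens = [t for t in vocab if is_green(tokens[-1], t, key)]
        tokens.append(greens[0] if greens else vocab[0])
    return tokens


def watermark_z_score(tokens: list[str], key: str = KEY) -> float:
    """One-proportion z-test: how far the green-token count exceeds what chance predicts."""
    n = len(tokens) - 1  # number of scored (previous, current) token pairs
    if n < 1:
        raise ValueError("need at least two tokens")
    green = sum(is_green(tokens[i - 1], tokens[i], key) for i in range(1, len(tokens)))
    # Without a watermark, green ~ Binomial(n, GAMMA): mean GAMMA*n, variance n*GAMMA*(1-GAMMA).
    return (green - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))
```

Unwatermarked text should score near zero, while a long watermarked passage scores far above it. Because each token’s green status depends on the token before it, editing one word can flip two scored pairs, so paraphrasing or translating enough of the text pulls the score back toward chance.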

However, these watermarks can be erased through paraphrasing, translation, or simple text edits. Ethical concerns also persist: watermarking could stigmatize legitimate AI use, particularly by non-native English writers, and privacy fears could reduce user adoption. Despite these challenges, watermarking shows promise as a provenance tool. Ultimately, as AI-generated content becomes more human-like and personalized, surface-level clues like punctuation will fade, demanding more robust and technically resilient detection strategies.

You are reading the 146th edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI, AAIA) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework of progressive cognitive levels: knowing and understanding, then using and applying, then analyzing and evaluating, and finally creating through a capstone project, with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our AIGP 8-week personalized training program. For customized enterprise training, write to us at [Link].

🚀 AI Ethics

Claude Outperforms GPT-5 in Automated Political Even-Handedness Evaluation

Claude Sonnet 4.5 scored higher on political even-handedness than GPT-5 and Llama 4 in a recent evaluation, performing on par with Grok 4 and Gemini 2.5 Pro. The evaluation, which Anthropic has open-sourced to foster industry collaboration, uses a new automated method that runs thousands of prompts across a wide range of political topics to measure whether responses are fair and unbiased. Anthropic aims for Claude to engage in political discussions without bias, and it shapes Claude’s responses through a detailed system prompt and through reinforcement learning as part of character training.

Anthropic Details Cyber-Espionage Campaign Orchestrated by AI

In September 2025, a sophisticated cyber-espionage campaign used AI to execute attacks autonomously rather than merely advising human operators. The campaign, which Anthropic attributes to a Chinese state-sponsored group, marked a significant escalation in cyber threats and reportedly targeted technology firms, financial institutions, and government agencies worldwide. The attackers manipulated Claude Code, Anthropic’s agentic coding tool, into infiltrating systems with minimal human intervention, showing how AI’s evolving “agentic” abilities can amplify the scale and speed of cyberattacks. The incident underscores the need for better detection methods and stronger safeguards against AI-driven attacks.

Perplexity AI Voted ‘Most Likely to Fail’ at Cerebral Valley Conference

At the Cerebral Valley AI Conference in San Francisco, Perplexity AI was voted the startup “most likely to fail” in a survey of more than 300 attendees, who cited its high valuation and lack of a clear business model. Fresh off a $200 million funding round at a $20 billion valuation, the answer-engine company faces skepticism over its rapid expansion and perceived vulnerability to an AI investment bubble, with critics pointing to weak monetization strategies. The vote reflects a broader industry shift toward financial accountability as investors grow wary of startups that prioritize hype over proven revenue.

AI’s Role Discussed for Policy Making at Chintan Shivir in Rajasthan

At a two-day Chintan Shivir held on November 14-15, 2025, in Sawai Madhopur, Rajasthan, the Indian government and several states discussed how artificial intelligence could be applied in governance. The Department of Expenditure focused on how AI could improve decision-making and policy formulation. Discussions also covered the design and implementation of Centrally Sponsored Schemes (CSSs) and their appraisal and approval processes, especially for schemes that need to continue beyond the Fifteenth Finance Commission cycle, which ends in March 2026. Participants included finance department officials from several states, including Gujarat, Himachal Pradesh, Haryana, Uttarakhand, Punjab, Delhi, and Rajasthan.

Comet Assistant Enhances User Control and Transparency with New Updates

Perplexity has upgraded its Comet Assistant around three core principles: transparency, user control, and sound judgment. The assistant now gives users a detailed view of what it is doing, lets them supervise how tasks are carried out, and includes safeguards for high-stakes decisions. By asking permission before sensitive actions and adapting to individual preferences, Comet Assistant aims to earn user trust. The updates reflect Perplexity’s stated commitment to building trustworthy AI tools that respect user autonomy.

⚖️ AI Breakthroughs

OpenAI Named an Emerging Leader in Gartner’s 2025 Generative AI Guide

Gartner has recognized OpenAI as an Emerging Leader in its 2025 Innovation Guide for Generative AI Model Providers. The recognition reflects OpenAI’s rapid growth: more than 1 million companies worldwide use its AI tools, particularly ChatGPT, a sign of how deeply they are integrated into enterprise operations. It also highlights the company’s investments in data governance and privacy controls to make AI deployment safer.

Google DeepMind’s WeatherNext 2 Delivers Faster, More Accurate Forecasting Capabilities

Google DeepMind and Google Research have unveiled WeatherNext 2, an AI model for global weather forecasting. WeatherNext 2 generates forecasts up to eight times faster than previous models, produces predictions at hourly resolution, and can simulate hundreds of possible weather scenarios from a single input. It significantly outperforms its predecessor on variables such as temperature, wind, and humidity. The gains are driven by a new Functional Generative Network, and the model is set to power various Google platforms and support decision-making across many sectors.

YouTube Enhances India’s Digital Growth With New AI Tools and Partnerships

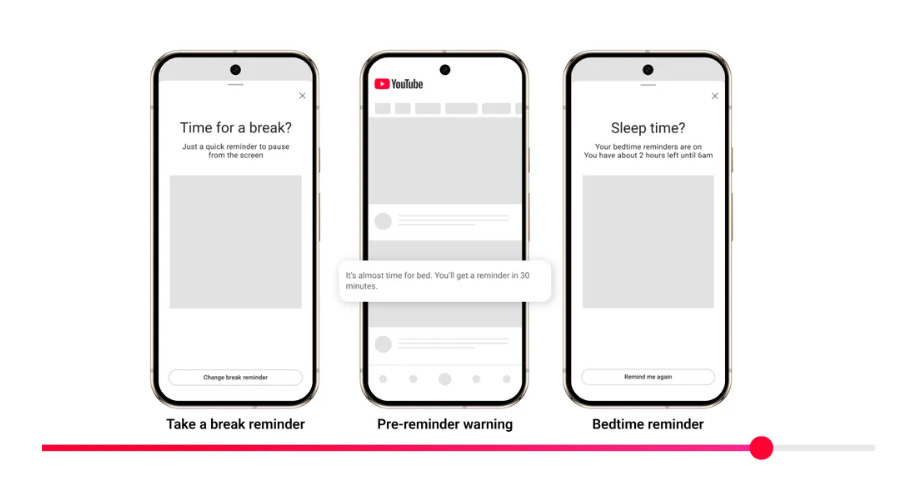

YouTube unveiled new AI tools and key partnerships at its Impact Summit in New Delhi, highlighting its influence on India’s digital learning and creator economy. According to an Oxford Economics report, YouTube’s creative economy contributed over ₹16,000 crore to India’s GDP and supported over 930,000 jobs last year. The platform announced collaborations with the Indian Institute of Creative Technologies and AIIMS’ College of Nursing to bolster talent in animation, gaming, and medical training. Enhancements such as a conversational AI tool, creator-focused features like Edit with AI, and expanded health information access aim to further educational and economic opportunities while promoting digital well-being.

Claude Developer Platform Adds Structured Outputs for Reliable API Responses

The Claude Developer Platform has introduced structured outputs in public beta for Claude Sonnet 4.5 and Opus 4.1. The feature constrains API responses to match a specified JSON schema or tool definition, reducing parsing errors and failed tool calls caused by malformed output. Guaranteeing correctly formatted data makes applications and multi-agent systems more reliable, which is crucial for data extraction and integration with external APIs. OpenRouter reports that the feature improves output reliability and simplifies codebases, particularly in agentic AI workflows. Support for Haiku 4.5 is expected soon.
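The request parameters for structured outputs are not described here, so the sketch below does not call the Claude API. Using only Python’s standard library, it shows the defensive parsing and schema checking that applications otherwise wrap around free-form model output, which is the class of failure that schema-constrained responses are meant to remove. The flat schema format, `INVOICE_SCHEMA`, and `parse_structured` are hypothetical; a production system would typically use a full JSON Schema validator.

```python
import json

# Hypothetical extraction schema: field name -> expected Python type.
INVOICE_SCHEMA: dict[str, type] = {"vendor": str, "total": float, "paid": bool}


def parse_structured(raw: str, schema: dict[str, type]) -> dict:
    """Parse a model response and check it against a flat, hypothetical schema."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"response is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [key for key in schema if key not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    for key, expected in schema.items():
        value = data[key]
        # JSON numbers like 12 parse as int, so accept ints for float fields,
        # but reject bools, which Python treats as a subclass of int.
        if expected is float and isinstance(value, (int, float)) and not isinstance(value, bool):
            continue
        if not isinstance(value, expected):
            raise ValueError(f"field {key!r} should be {expected.__name__}")
    return data
```

Every `ValueError` branch here corresponds to an error an application must otherwise catch, retry, or log: truncated JSON, a missing field, or a value of the wrong type. The explicit `bool` check matters because a naive numeric check would accept `"total": true`.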

Google Expands AI Travel Tools, Taking Flight Deals Global

Google is adding AI-driven features to Search to help users plan and book travel. Its “Flight Deals” tool, initially launched in the U.S., Canada, and India, is expanding to more than 200 countries; it helps users find affordable destinations based on preferences such as location and travel dates. Google is also bringing its “Canvas” tool, previously designed for building study plans, to AI Mode, where it compiles comprehensive travel itineraries using real-time data from Search, Google Maps, and other online sources. This itinerary feature, currently available to opted-in desktop users in the U.S., offers tailored recommendations. Meanwhile, Google is expanding its agentic AI capabilities in the U.S., starting with dinner reservations, with direct flight and hotel bookings planned for the future.

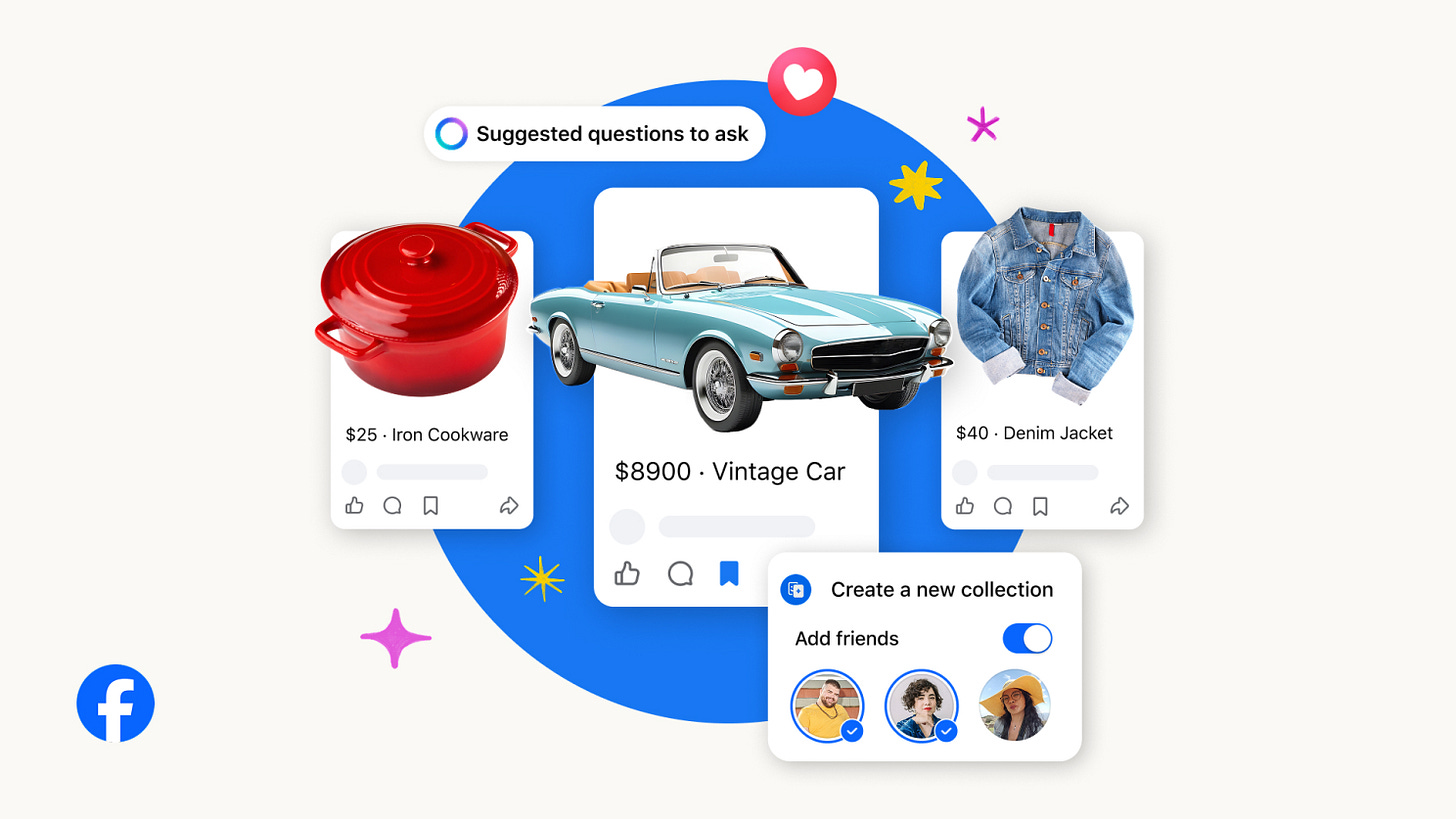

Meta Enhances Facebook Marketplace with AI Integration and Social Shopping Features

Meta is rolling out new Facebook Marketplace features to make shopping more social and efficient. Updates include collections for collaborative buying, personalized shopping based on reactions and comments, and AI-driven insights to help users make informed purchases, particularly of vehicles. To expand its fashion inventory, Marketplace is adding listings from eBay and Poshmark, and it is improving shipping with transparent costs and status updates. The changes aim to make Marketplace more engaging and convenient for its young adult users in the U.S. and Canada.

Jeff Bezos Returns to Active Leadership with AI Startup Project Prometheus

Jeff Bezos is returning to active leadership as co-head of Project Prometheus, an AI startup focused on manufacturing and engineering technologies, in his first major operational role since stepping down as Amazon’s CEO in 2021. The company, which he co-leads with physicist Vik Bajaj, has raised $6.2 billion in early funding, making it one of the best-funded ventures applying AI to physical systems. Despite a crowded AI market, Project Prometheus has attracted talent from top AI firms, though further operational details remain under wraps.

Firefox Introduces AI Window for Personalized and User-Controlled Browsing Experiences

Mozilla is integrating AI into its Firefox browser with an emphasis on user choice and openness, as features like an AI chatbot on desktop and Shake to Summarize on iOS demonstrate. A new feature, AI Window, will let users chat with an AI assistant while browsing, with full control to switch it off. Mozilla invites users to contribute to its open-source projects and share feedback as it continues to develop Firefox with a focus on transparency, accountability, and user agency.

🎓 AI Academia

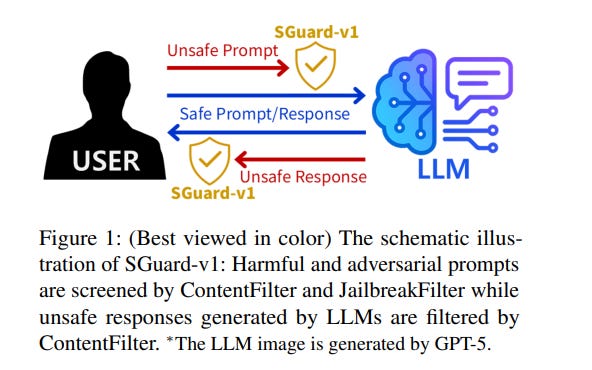

SGuard-v1 Introduces Lightweight Safety Guardrail for Securing Large Language Models

Samsung SDS Technology Research has released SGuard-v1, a lightweight safety guardrail for large language models (LLMs) aimed at improving safety in human-AI conversations. The system combines two models: ContentFilter, which detects harmful content in prompts and responses using the MLCommons hazard taxonomy, and JailbreakFilter, which blocks adversarial prompts and was trained on data covering 60 major attack types. Built on the Granite-3.3-2B-Instruct model, SGuard-v1 supports 12 languages and improves interpretability by providing multi-class safety predictions along with binary confidence scores. Released under the Apache-2.0 License, it aims to advance research and practical deployment in AI safety with a state-of-the-art yet lightweight solution.

SynthGuard: Open Platform Enables Detection of AI-Generated Media Using MLLMs

Purdue University researchers have developed SynthGuard, an open platform that uses multimodal large language models (MLLMs) to detect AI-generated media such as images and audio. Addressing the risks of synthetic media, such as misinformation and identity misuse, SynthGuard offers an explainable, user-friendly interface that helps both researchers and the public understand and verify AI-generated content. Unlike many existing tools, which are closed-source and lack transparency, SynthGuard aims to strengthen digital integrity by providing accessible and educational forensic analysis.

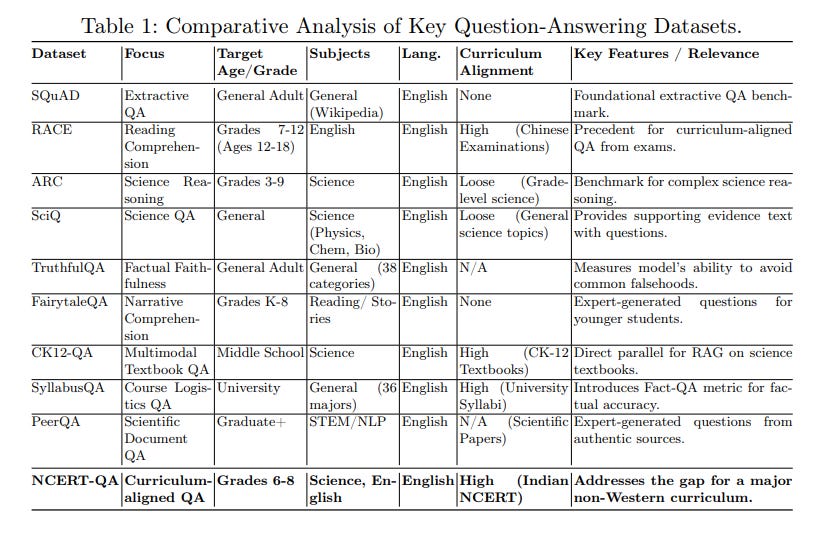

PustakAI Develops Curriculum-Aligned Interactive Textbooks Using Large Language Models for Education

Researchers from BITS Pilani K K Birla Goa Campus have developed PustakAI, a framework that uses large language models (LLMs) to create interactive, curriculum-aligned learning experiences. Focusing on the syllabus of India’s National Council of Educational Research and Training (NCERT), the project introduces a new question-answering dataset, “NCERT-QA,” covering English and Science for grades 6 to 8. To address the challenge of adapting LLMs to specific curricula, the researchers evaluate different prompting techniques for alignment with educational requirements. They also assess how both open-source and high-end LLMs perform as potential tools for formal education systems.

New DeepKnown-Guard Framework Enhances AI Safety and Security with 99.3% Risk Recall

Beijing Caizhi Tech has developed DeepKnown-Guard, a proprietary safety response framework for large language models (LLMs) that addresses security issues critical to trusted deployment in sensitive areas such as finance and healthcare. At the input level, the framework uses a safety classification model, trained with supervised fine-tuning on a four-tier risk taxonomy, that reportedly achieves a 99.3% risk recall rate. At the output level, it combines Retrieval-Augmented Generation with a fine-tuned interpretation model to ground generated responses in a real-time knowledge base, which is intended to prevent hallucinations and improve traceability. According to its developers, DeepKnown-Guard significantly outperforms existing models and achieves perfect safety scores on high-risk test sets, demonstrating its ability to handle complex risk scenarios.

Survey Highlights Critical Role of Unlearning to Secure Large Language Models

A recent survey examines the fast-growing field of unlearning in large language models (LLMs), which aims to erase specific memorized information to mitigate privacy risks. Reviewing more than 180 papers published since 2021, the study proposes a new taxonomy that categorizes unlearning methods by the stage of the LLM pipeline at which they intervene. It also analyzes existing benchmarks and metrics and discusses open challenges and future directions for making AI systems more secure and compliant.

Study Highlights Need for Copyright Protections with LLM Fingerprinting Techniques

A recent study by researchers from multiple universities explores using fingerprinting techniques to audit the copyright of large language models (LLMs). The researchers note that LLMs are valuable intellectual property that require significant resources to develop, yet they are vulnerable to copyright infringement through unauthorized use and model theft. To address this, the study introduces a framework and benchmark, LEAFBENCH, to evaluate LLM fingerprinting, which identifies models derived from copyrighted ones without altering the original model’s performance. Unlike watermarking, which requires modifying a model and is harder to apply to models that have already been released, fingerprinting has already been used in practice to reveal instances of model plagiarism.

FinGPT Offers Open-Source Financial Language Model to Democratize Finance Data Access

FinGPT, an open-source financial large language model, has been developed to offer an alternative to proprietary models like BloombergGPT, addressing the unique challenges of the finance domain such as high temporal sensitivity and a low signal-to-noise ratio. Created by the AI4Finance Foundation in collaboration with researchers from Columbia University and New York University Shanghai, FinGPT emphasizes a data-centric approach through automatic data curation and adaptation techniques, aiming to democratize financial data and foster innovation in open finance. By providing a transparent and accessible platform, FinGPT allows researchers and practitioners to customize financial LLMs for various applications including robo-advising and sentiment analysis.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.