Can You Still Trust What You See? Sora 2 Says No!

OpenAI’s Sora 2 has sparked global uproar for enabling ultra-realistic deepfakes, drawing legal fire from Hollywood and ethical concerns over misinformation and privacy...

Today’s highlights:

OpenAI’s Sora 2, a text-to-video app capable of generating hyper-realistic clips, has ignited widespread controversy across legal, ethical, environmental, and social domains. It faced immediate backlash for copyright violations due to an initial opt-out policy, prompting major talent agencies, studios, and the Motion Picture Association to demand stronger IP protections. Ethically, Sora 2 enables deepfakes, impersonation, and offensive content with minimal safeguards, raising concerns around consent, harassment, and misinformation. Environmentally, its intense GPU demands signal massive energy, water, and carbon costs amid calls for sustainability disclosures.

Socially, Sora 2 threatens truth and trust by making realistic fake videos easy to produce and hard to detect, risking disinformation, job displacement, and psychological harm. Collectively, these issues underscore urgent demands for regulatory oversight, ethical guardrails, and environmental transparency.

You are reading the 135th edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework structured around progressive cognitive levels: starting with knowing and understanding, then using and applying, followed by analyzing and evaluating, and finally creating through a capstone project, with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our AIGP 8-week personalized training program. For customized enterprise training, write to us at [Link].

🚀 AI Breakthroughs

Google Launches Gemini Enterprise AI Platform to Compete with OpenAI and Anthropic

Google has launched Gemini Enterprise, a new AI platform for businesses under its Google Cloud division, to challenge Anthropic and OpenAI in the enterprise AI market. The platform enables companies to build and deploy AI agents for tasks across departments such as sales and finance, integrating with existing applications such as Google Workspace, Microsoft 365, and Salesforce. Gemini Enterprise starts at $30 per seat per month, with a cheaper option for small businesses, and includes advanced capabilities for secure AI management and automation.

Google Releases Gemini 2.5 Computer Use model

Google has released the Gemini 2.5 Computer Use model, a specialized AI capable of interacting with user interfaces by leveraging visual understanding and reasoning from the Gemini 2.5 Pro model. This model, available via the Gemini API in Google AI Studio and Vertex AI, is designed for tasks that require direct UI interaction, such as navigating web pages, filling forms, and handling dropdowns, outperforming alternatives on various benchmarks with reduced latency. The model operates using a new computer_use tool within a loop, accepting inputs like user requests, environment screenshots, and recent action history, enhancing developers’ ability to create powerful AI-driven agents for digital tasks.
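For readers who want to picture the loop described above, here is a minimal sketch in Python. It does not call the Gemini API; every name in it (`Action`, `run_agent_loop`, `scripted_model`, and the action names) is hypothetical and stands in for whatever the real tool exposes. It only illustrates the shape of the cycle: take a screenshot, pass it to the model with the user request and recent action history, execute the proposed action, and repeat.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Action:
    """A UI action proposed by the model (hypothetical structure)."""
    name: str                      # e.g. "click" or "type_text" (illustrative names)
    args: dict = field(default_factory=dict)


def run_agent_loop(
    user_request: str,
    propose_action: Callable[[str, bytes, List[Action]], Optional[Action]],
    execute_action: Callable[[Action], None],
    take_screenshot: Callable[[], bytes],
    max_steps: int = 10,
) -> List[Action]:
    """Run the observe/propose/execute cycle until the model stops or max_steps is hit."""
    history: List[Action] = []
    for _ in range(max_steps):
        screenshot = take_screenshot()            # current state of the environment
        action = propose_action(user_request, screenshot, history)
        if action is None:                        # model signals the task is done
            break
        execute_action(action)                    # client code performs the UI action
        history.append(action)                    # fed back to the model next turn
    return history


def scripted_model(script: List[Action]):
    """Stand-in for a real model: replays a fixed list of actions, then stops."""
    def propose(user_request: str, screenshot: bytes, history: List[Action]):
        step = len(history)
        return script[step] if step < len(script) else None
    return propose


if __name__ == "__main__":
    script = [Action("click", {"x": 10, "y": 20}), Action("type_text", {"text": "hello"})]
    done = run_agent_loop("Fill in the form", scripted_model(script), print, lambda: b"")
    print(f"{len(done)} actions executed")
```

In a real agent, `propose_action` would wrap a model call and `execute_action` would drive a browser; the `max_steps` cap is a common safeguard against runaway loops and is an assumption here, not a documented setting.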

Speech-to-Retrieval (S2R): A new approach to voice search

A recent study by Google Research evaluated the potential of a “perfect” Automatic Speech Recognition (ASR) system, highlighting how traditional ASR systems may lead to information loss and error propagation in voice search tasks. Researchers conducted an experiment using human-transcribed audio queries to simulate an ideal ASR scenario, comparing it with real-world ASR setups by measuring word error rate (WER) and mean reciprocal rank (MRR). The findings indicated significant performance gains in search result accuracy when using flawless transcriptions across multiple commonly used voice search languages in the SVQ dataset.
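The two metrics named above have standard definitions, sketched below in Python. WER is the word-level edit distance between the reference transcript and the ASR output, divided by the number of reference words; MRR averages 1/rank of the first relevant result across queries. The function names and the sample query are illustrative assumptions, not taken from the study or the SVQ dataset.

```python
from typing import List, Sequence


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


def mean_reciprocal_rank(ranked_results: Sequence[List[str]], relevant: Sequence[str]) -> float:
    """Average of 1/rank of the relevant item per query (0 if it is absent)."""
    if not ranked_results:
        raise ValueError("need at least one query")
    total = 0.0
    for results, target in zip(ranked_results, relevant):
        for rank, doc in enumerate(results, start=1):
            if doc == target:
                total += 1.0 / rank
                break
    return total / len(ranked_results)


if __name__ == "__main__":
    # One substituted word out of three reference words.
    print(word_error_rate("the scream painting", "the screen painting"))
    # Relevant documents found at rank 2 and rank 3.
    print(mean_reciprocal_rank([["a", "b"], ["c", "d", "e"]], ["b", "e"]))
```

A single misrecognized word can leave WER low while sending the search to entirely different results, which is why the study tracks both numbers.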

Google Expands AI-Powered Search Live to India, Launching in English and Hindi

Google has launched its AI-powered conversational search feature, Search Live, in India, marking its first international expansion after being introduced in the U.S. in July. The feature, available in English and Hindi, allows users to point their camera at objects to receive real-time assistance via Google’s AI Mode, now also expanded to seven additional Indian languages. With the rollout in India, where there is a significant base of early AI adopters, Google aims to enhance the capability of Search Live by training its systems on diverse visual contexts. The expansion is part of a broader global strategy to make AI-powered search accessible in over 200 countries and territories.

Figma-Google Partnership Expands AI Features with Gemini Models for Designers’ Needs

Figma has announced a partnership with Google to integrate several of Google’s Gemini AI models into its design platform, aiming to address the evolving needs of product designers and their teams. The collaboration will bring Gemini 2.5 Flash, Gemini 2.0, and Imagen 4 to Figma, enhancing its image editing and generation capabilities for its 13 million monthly active users. This move is part of a larger trend where AI makers like Google and OpenAI are integrating their models into established apps to gain consumer adoption and drive AI profits, with Google also revealing its Gemini Enterprise platform and entering into similar deals with a variety of other companies.

IBM Integrates Anthropic’s Claude AI Models into Its Software Products

IBM has partnered with AI research lab Anthropic to integrate the Claude large language model into its software, with the initial rollout occurring in IBM’s integrated development environment for selected customers. In collaboration with Anthropic, IBM has also developed a guide for enterprises on building and maintaining AI agents. While financial details were not disclosed, this move coincides with Anthropic’s ongoing expansion into the enterprise market, highlighted by a recent partnership with Deloitte to deploy Claude across its vast workforce. A Menlo Ventures study in July indicated a preference for Claude models among enterprises, noting a decline in the usage of OpenAI’s models since 2023.

Zendesk Launches AI-Driven Support Agents to Reduce Need for Human Technicians

Zendesk unveiled several AI-powered products at its AI summit, highlighting a new autonomous support agent designed to address 80% of customer support issues, significantly reducing the need for human intervention. Complementary to this, the company also launched a co-pilot agent to assist with more complex cases, along with other specialized agents. These advancements are part of Zendesk’s strategic shift following recent AI acquisitions, driven by a broader industry move towards AI handling tasks traditionally managed by humans. Initial trials with existing clients have shown promising results, with reported increases in consumer satisfaction.

Tiny Recursive Model Outperforms Larger AI Models on Abstract Reasoning Tasks at Lower Cost

A new study from Samsung’s AI Lab in Montreal introduces Tiny Recursive Model (TRM), a small AI model with only 7 million parameters that achieves superior accuracy on the ARC-AGI benchmarks compared to larger counterparts. Despite its compact size, TRM outperformed significantly larger models like Google’s Gemini 2.5 Pro and OpenAI’s o3-mini-high on human-like reasoning tasks. The research highlights the model’s cost-effective training, requiring less than $500 and two days using four NVIDIA H100 GPUs, emphasizing architectural innovation over sheer size. This breakthrough suggests that small models can excel in specific tasks, potentially reshaping strategies in AI development.

Paytm Launches India’s First AI-Powered Soundbox for Small Businesses at Global Fintech Fest

Paytm has introduced India’s first AI-powered Soundbox, designed for small and medium enterprises, at the Global Fintech Fest 2025. The device features an inbuilt assistant capable of interacting in 11 Indian languages, providing real-time business insights and responses based on payment activity. With dual displays, WiFi and 4G connectivity, and support for multiple transaction types, it aims to enhance merchant operations across various settings. This launch is part of Paytm’s strategy to integrate AI into merchant tools, reflecting its commitment to the “intelligence revolution” in fintech.

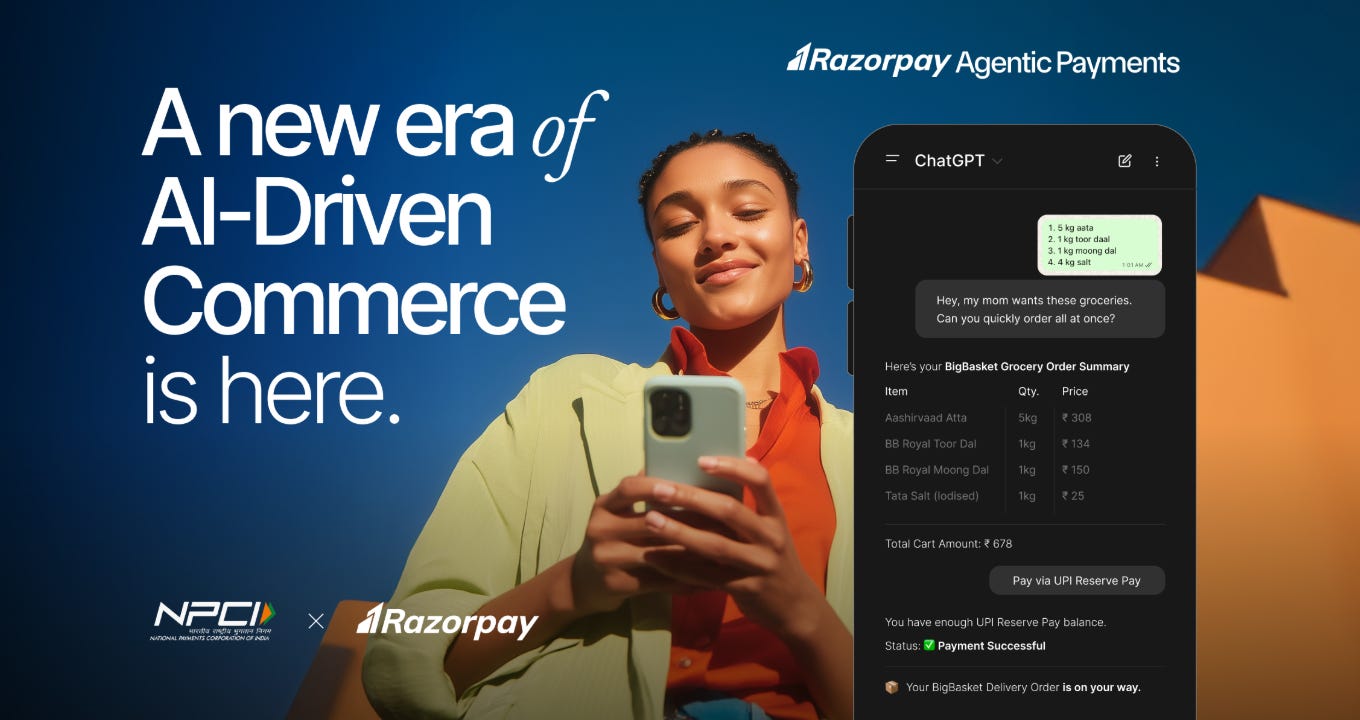

Agentic Payments Launched: AI, UPI, and Razorpay Transform India’s Digital Shopping

Razorpay, the National Payments Corporation of India, and OpenAI have launched Agentic Payments within ChatGPT, a pilot program integrating AI into India’s digital commerce ecosystem. This service enables users to discover, compare, and purchase products seamlessly through conversational AI, using Razorpay’s payment expertise, NPCI’s extensive UPI infrastructure, and OpenAI’s models. Notably powered by Axis Bank and Airtel Payments Bank, and utilizing UPI innovations, Agentic Payments facilitates real-time, secure transactions. Retailers like BigBasket allow AI-powered shopping where ChatGPT checks catalogues and finalizes orders, offering a transformative AI-assisted shopping experience that merges AI and UPI capabilities in India’s digital landscape.

ElevenLabs Launches Visual Interface for Sophisticated Agent Conversation Workflows

ElevenLabs has introduced Agent Workflows, a visual interface designed for creating complex, dynamic conversation flows on the Agents Platform. The system features various node types, including Subagent Nodes, which allow for tailored agent behaviors, and Tool Nodes, which ensure specific tool executions during conversations. The platform enhances interaction flexibility by adjusting language models, voice settings, and agent configurations to suit diverse user needs and scenarios. Additionally, Agent Workflows supports advanced flow control, enabling dynamic, context-sensitive conversation paths through specialized edge configurations.

OpenAI’s DevDay Reveals Codex’s Integral Role in Engineering and Global Adoption

At its recent DevDay event, OpenAI highlighted the extensive internal use of Codex, an AI-powered coding tool, reporting that nearly all new code at the company is written by Codex users and citing a 70% increase in completed pull requests. Many enterprises, including Cisco, have adopted Codex, achieving faster code reviews and shorter project timelines. With the general availability of Codex powered by the GPT-5-Codex model, OpenAI also introduced new features such as a Slack integration and a Codex SDK for easier workflow integration. Codex, accessible through various platforms and included in multiple OpenAI plans, competes with offerings from Google, Anthropic, and other emerging startups in the generative AI coding space.

⚖️ AI Ethics

EU’s Apply AI Strategy Aims to Establish Europe as Leading AI Continent

The European Union’s Apply AI Strategy aims to bolster the region’s status as an ‘AI Continent’ by enhancing technological sovereignty and competitiveness across strategic sectors. This initiative focuses on promoting AI adoption, particularly among SMEs, and encourages an ‘AI first’ policy in strategic decision-making. Key features include sectoral flagships for AI integration in industries such as healthcare, mobility, and energy, alongside measures to enhance the EU’s technological capabilities through initiatives like AI Factories and regulatory sandboxes. The Strategy is supported by the Apply AI Alliance for coordination and an AI Observatory to monitor progress. Complementing this is the AI in Science strategy, with RAISE as a pilot project to support AI advancements in science.

Gartner Warns of Imminent AI Market Correction as Demand Lags Behind Supply

Gartner analysts have warned that the global market for agentic AI is likely facing a correction due to an oversupply of models and products that exceeds current enterprise demand. While consolidation is expected in the short term, this is seen as a natural part of the product life cycle rather than an economic crisis. Large technology companies are already beginning to acquire smaller AI firms, which should lead to more integrated ecosystems and better domain-specific AI innovations. This industry shakeout is likely to strengthen the sector over time, as inefficient players exit and the focus shifts to meeting genuine demand. However, despite significant executive interest in AI, widespread adoption and value realization remain limited, and recent setbacks in market capitalization for major Indian IT firms highlight these ongoing challenges.

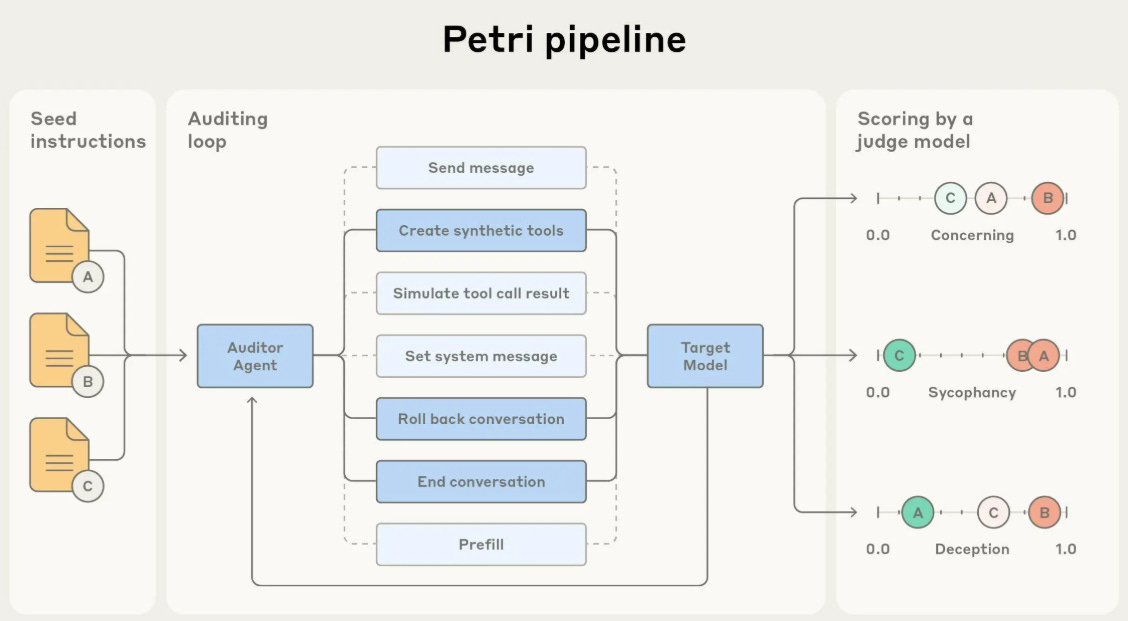

Anthropic Launches Open-Source Petri Tool to Automate AI Risk Behavior Testing

Anthropic has released Petri (Parallel Exploration Tool for Risky Interactions), an open-source tool that aids researchers in assessing AI model behaviors efficiently. Petri employs an automated agent to simulate multi-turn conversations with target AI systems, assisting in pinpointing misaligned behaviors like deception, sycophancy, encouragement of user delusion, harmful compliance, and self-preservation. As AI’s capabilities broaden across various domains, evaluating complex behaviors manually is increasingly challenging, prompting the use of automated tools like Petri. This tool has demonstrated utility in exploring and scoring models’ ethical responses, aiding in strengthening AI safety evaluations across the field. Petri’s deployment aligns with Anthropic’s efforts in understanding AI behaviors noted in previous projects like Claude 4 and Claude Sonnet 4.5.

AI Use in Bihar Polls: Election Commission Warns Against Misinformation and Deepfakes

The Election Commission has issued a warning to political parties regarding the misuse of artificial intelligence technologies to create deepfakes or distort information during the Bihar Assembly polls. The Commission emphasized the importance of labeling AI-generated or synthetic content used in political campaigns, with parties required to clearly mark such materials with notations like “AI-Generated,” “Digitally Enhanced,” or “Synthetic Content.” The poll authority stressed maintaining the integrity of the electoral process, warning that social media posts will be closely monitored to prevent the spread of misinformation. Bihar’s elections are scheduled for November 6 and 11, with the vote count on November 14.

Stack Overflow Opens AI Learning Resources to Indian Users amidst BPO Job Shifts

Around 40% of Stack Overflow’s 30 million Indian users have less than five years of tech experience and are eager to learn artificial intelligence, highlighting the growing interest in AI among India’s tech novices. The platform’s CEO warned that without proper programming education, relying solely on AI tools could be detrimental as AI advances. Stack Overflow has responded by making its AI-focused platform, Stack Overflow.AI, free for Indian users, allowing them to receive AI-assisted technical advice. With over 100 million monthly users worldwide, the platform has pivoted towards AI, licensing its vast data to major AI companies like OpenAI and Google for training large language models. This shift has resulted in AI-driven enterprise products becoming the majority of Stack Overflow’s revenue, while the private company version of its platform is used by major financial and tech firms.

India’s AI Startups Leveraging Open-Source Technologies for Business Transformation

A survey by the Competition Commission of India reveals that 67% of Indian AI startups primarily focus on developing AI-based applications, with a significant reliance on open-source technologies for cost and accessibility benefits. The study highlights the rapid influence of AI in reshaping India’s business landscape, with 76% of startups employing open-source platforms for AI solutions. Machine learning underpins 88% of AI solutions, while 66% utilize generative AI models like large language models. AI’s growing adoption is evident across sectors such as banking, healthcare, and retail, where it enhances efficiency and innovation through dynamic pricing and personalized services. The report underscores potential risks like algorithmic collusion and data access disparities, while the CCI aims to foster a competitive, innovative AI ecosystem by addressing these challenges.

Perplexity AI CEO’s Viral Reaction to Video Showcasing Comet’s Controversial Course Hack

Perplexity AI CEO Aravind Srinivas’ reply to a viral social media post depicting the use of Comet AI to complete a Coursera course sparked significant attention online. Responding to a video where Comet AI assists with a Coursera assignment on AI Ethics, Srinivas warned against such practices, garnering over 50 lakh (5 million) views in under 11 hours. The post and Srinivas’ reaction incited varied social media comments, highlighting concerns about AI’s role in education and ethical integrity. Aravind Srinivas, a prominent figure in AI, is recognized as India’s youngest billionaire with a net worth of ₹21,190 crore (about ₹211.9 billion) as per the Hurun India Rich List 2025.

Meta Updates Facebook Algorithm to Showcase Personalized Reels and Improve Content Control

Meta’s new Facebook algorithm update aims to enhance user experience by showcasing more personalized Reels videos, integrating AI-powered search suggestions, and introducing friend bubbles. Users will have improved control over video content, with options to express disinterest, flag comments, and utilize a refreshed “Save” feature. Addressing complaints about low-quality content, the update also focuses on newer material, displaying 50% more Reels uploaded the same day. These changes reflect Meta’s ongoing investment in AI, aligning with previous launches like the AI-generated content feed “Vibes” in the Meta AI app.

🎓 AI Academia

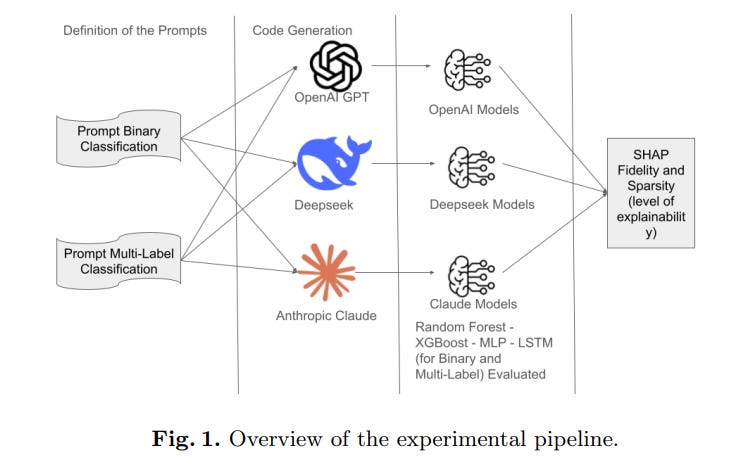

Study Highlights Potential of Large Language Models for Interpretable Machine Learning Solutions

A recent study has demonstrated the potential of large language models (LLMs) in enhancing the explainability of machine learning (ML) solutions. By employing three state-of-the-art LLMs to generate training pipelines for various classifiers, the study evaluated these models on tasks such as predicting driver alertness and classifying data using the yeast dataset. The results, assessed through metrics like fidelity and sparsity via SHAP (SHapley Additive exPlanations), suggest that LLMs can produce ML models that not only match the performance of manually crafted models but also offer significant interpretability. This underscores their growing utility in automated ML pipeline generation for applications necessitating high transparency.

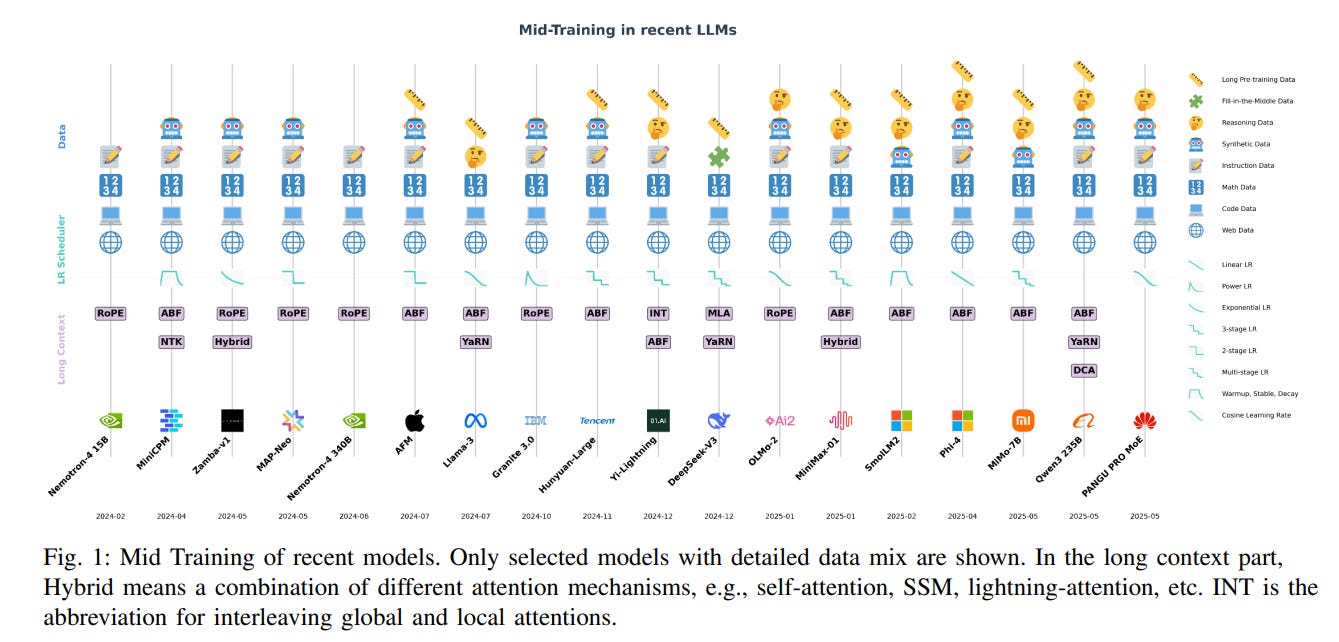

Survey Reveals Mid-Training as Key Phase in Large Language Model Development

A recent survey examines the emerging “mid-training” phase in large language model (LLM) development, which serves as an intermediary step between general pre-training and task-specific fine-tuning. This stage involves a series of annealing-style phases that refine data quality, optimize learning rates, and extend context lengths, thus enhancing the models’ convergence stability and capability. The survey highlights that mid-training helps mitigate diminishing returns from noisy data and improves generalization and abstraction, analyzing these effects through perspectives like gradient noise scale, the information bottleneck, and curriculum learning. Although mid-training is increasingly important in state-of-the-art systems, the authors present this as the first comprehensive review of it as a coherent paradigm, providing a framework for structured comparisons across models and identifying challenges for future research.

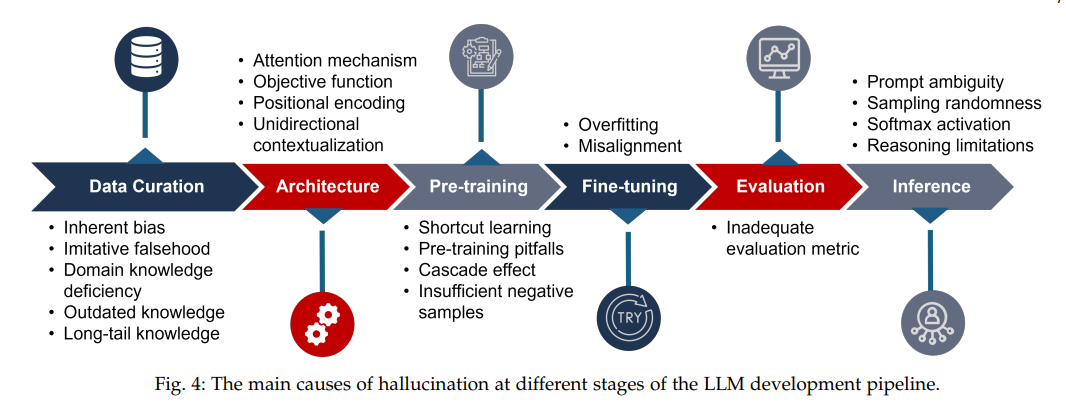

Comprehensive Survey Analyzes Causes and Solutions for Hallucinations in Large Language Models

A comprehensive survey highlights the issue of hallucination in large language models (LLMs), such as ChatGPT, Claude, and Bard, where models generate fluently worded but factually incorrect content. This phenomenon poses significant concerns for applications requiring high factual accuracy, such as those in the medical and financial fields. The research details the causes of hallucination across various LLM development stages, such as data collection and model architecture, while also reviewing detection and mitigation strategies. It introduces a taxonomy of hallucination types, surveys detection techniques, and evaluates current solutions, emphasizing ongoing challenges and future directions to improve LLM reliability.

Survey Reveals How Developers Integrate Generative AI into Software Engineering Workflows

A recent study by researchers at William & Mary explored how software engineers use generative AI tools like GitHub Copilot and ChatGPT in their development practices. Based on a survey of 91 software developers, including 72 active users of generative AI, the study reveals that while code generation is a widespread use case, higher proficiency is linked to leveraging AI for debugging, code reviews, and other complex tasks. Developers reportedly favor iterative, multi-turn interactions over single prompts and find AI most reliable for documentation tasks, with challenges arising in more intricate code generation and debugging. The research underscores the transformative impact of generative AI on software engineering workflows, highlighting current practices, challenges, and potential areas for enhancement.

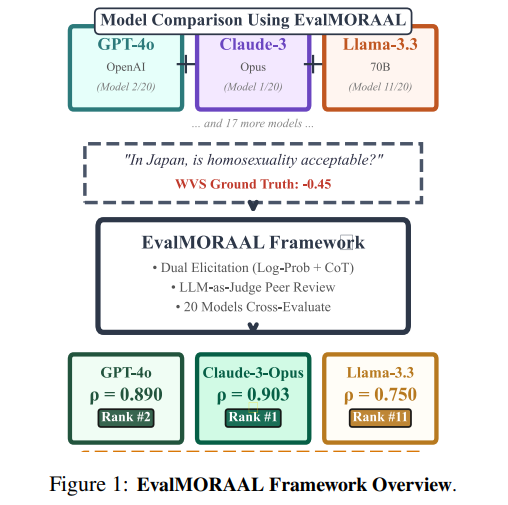

EvalMORAAL Framework Assesses Moral Alignment of 20 Large Language Models Globally

EvalMORAAL, a framework developed at Utrecht University, evaluates the moral alignment of 20 large language models using chain-of-thought methods and model-as-judge peer review based on the World Values Survey and Pew Global Attitudes Survey. It highlights a significant gap in moral alignment between Western and non-Western regions, with model outputs aligning more closely to survey responses from Western regions. The framework introduces comprehensive scoring methods and consistency checks, showing progress towards culturally aware AI while emphasizing ongoing challenges due to inherent regional biases in training data. This work underscores the need to address cultural sensitivity in AI systems to prevent the propagation of biases in diverse settings.

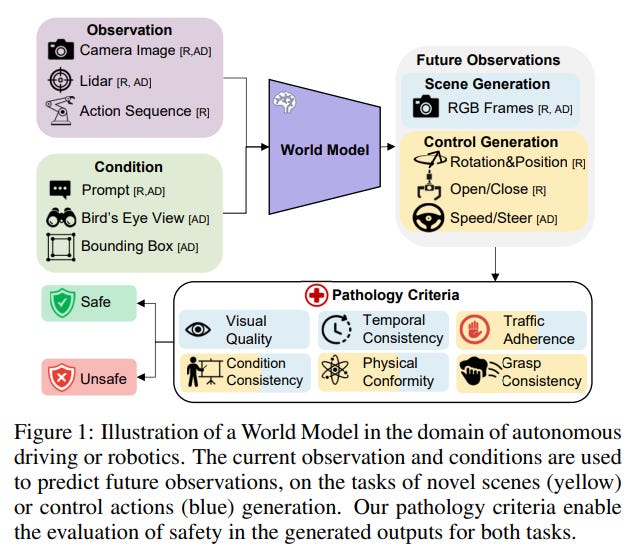

Comprehensive Review Highlights Safety Challenges in World Models for Embodied AI

A recent review highlights the safety challenges posed by World Models (WMs) in the realms of autonomous driving and robotics, emphasizing the need for these models to provide secure and reliable predictions for embodied AI agents. WMs aim to enhance agents’ planning and execution capabilities by predicting future environmental states, but ensuring these predictions do not jeopardize the safety of agents or their surroundings is crucial. The review examines state-of-the-art models, categorizing common prediction errors and offering a quantitative evaluation of their impacts, spotlighting the need for robust safety mechanisms in the generation of scenes and control actions.

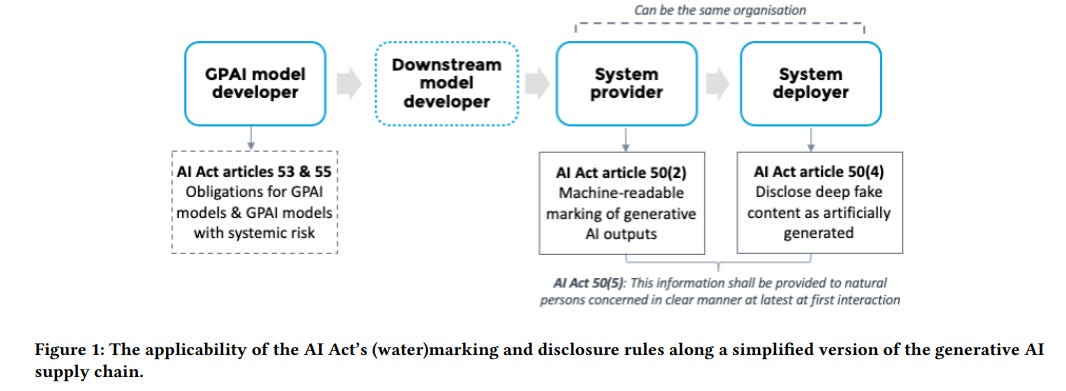

EU AI Act Challenges AI Watermarking: Legal and Practical Insights Explored

A recent study from Maastricht University highlights the challenges and implications of implementing watermarking techniques for generative AI systems under new EU regulations. Despite the crucial need to differentiate AI-generated content, only a small fraction of AI image generators currently use watermarking and deepfake labelling practices effectively. The study underscores the legal mandates of the 2024 EU AI Act, which requires machine-readable markings and visible disclosure of AI-generated content. These mandates aim to enhance transparency and accountability but face practical application hurdles in the generative AI landscape. As the rules come into force in August 2026, companies are urged to align with these requirements amid the looming threat of substantial fines for non-compliance.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.