Can GPT-5 Really Support the 1 Million People Discussing Suicide Each Week?

OpenAI’s own figures imply that over 1 million users per week confide suicidal thoughts to ChatGPT, while hundreds of thousands show possible signs of psychosis or mania, prompting a critical GPT-5 safety update.

Today’s highlights:

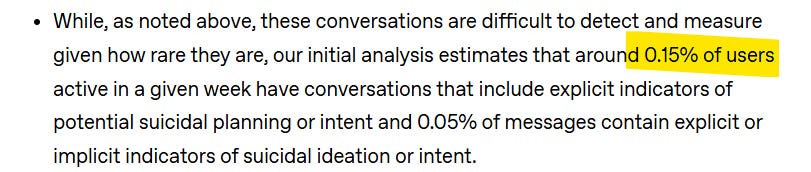

OpenAI recently disclosed that about 0.15% of weekly ChatGPT users have conversations with explicit indicators of suicidal thoughts or planning. With roughly 800 million weekly active users, that adds up to over 1 million people per week confiding suicidal intent to ChatGPT. Comparable shares of users show possible signs of psychosis or mania (~560,000 weekly) or extreme emotional attachment to the chatbot. This marks one of the most direct acknowledgments yet of AI’s growing role in mental health crises and of the urgent need for safety mechanisms.
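The headline figures are simple proportions of the user base. A minimal Python sketch of that arithmetic, assuming roughly 800 million weekly active users as cited above; the 0.07% share for psychosis or mania is the rate OpenAI reported alongside the 0.15% figure and is consistent with the ~560,000 estimate:

```python
# Assumption: ~800 million weekly active users, as cited in the text.
WEEKLY_ACTIVE_USERS = 800_000_000

# Shares of weekly users reported by OpenAI.
REPORTED_SHARES = {
    "suicidal planning or intent": 0.0015,  # 0.15%
    "possible psychosis or mania": 0.0007,  # 0.07%
}


def weekly_estimate(share: float, users: int = WEEKLY_ACTIVE_USERS) -> int:
    """Convert a fraction of weekly users into an approximate head count."""
    return round(share * users)


for label, share in REPORTED_SHARES.items():
    print(f"{label}: ~{weekly_estimate(share):,} people per week")
# suicidal planning or intent: ~1,200,000 people per week
# possible psychosis or mania: ~560,000 people per week
```

Because these are point estimates on a rounded user count, they are best read as orders of magnitude rather than precise head counts.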

In response, OpenAI released a major GPT-5 update focused on “sensitive conversations.” The model was retrained with input from 170+ mental health professionals and now includes:

Crisis support prompts, such as hotline numbers and session-break nudges;

Delusion guardrails, responding to delusional or unsafe beliefs without affirming them;

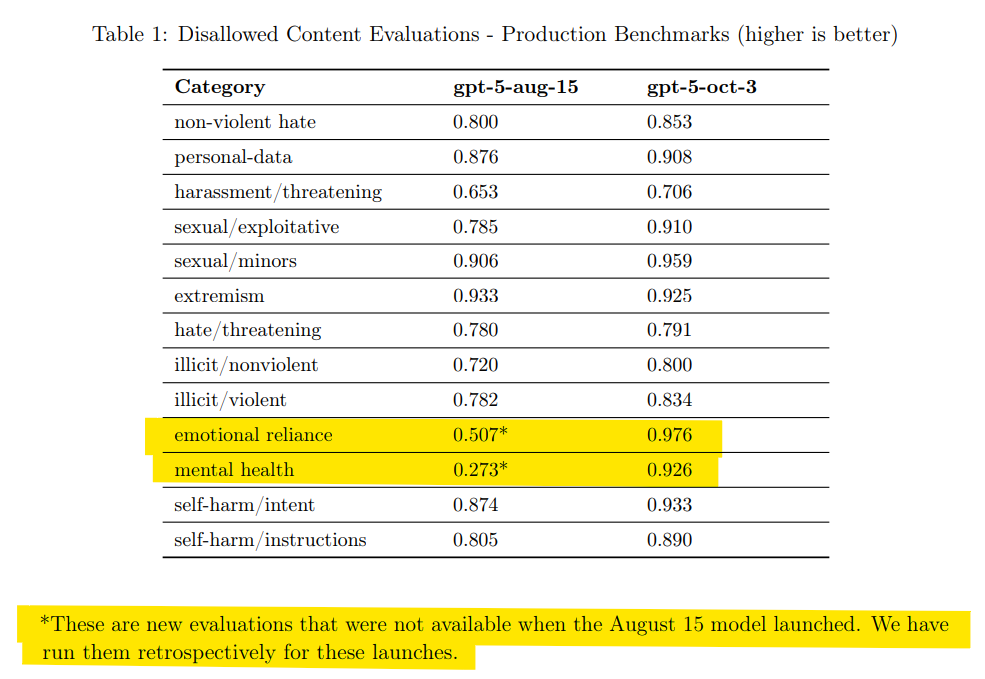

Stronger evaluations, including benchmarks for emotional reliance and suicide scenarios.

OpenAI’s internal tests show 91% of suicide-related prompts now yield safe responses (up from 77%) and a 65–80% reduction in unsafe replies overall.

However, experts caution that:

9% of high-risk replies may still be unsafe, affecting tens of thousands weekly;

Results are based on OpenAI’s own benchmarks, not real-world studies;

Critics question whether the improvements are too reactive, coming only after lawsuits and tragic cases.

While the update reflects genuine progress, psychiatrists warn ChatGPT is still no substitute for human help and may reinforce delusions if guardrails fail. Experts urge OpenAI to be proactive, not just responsive, as millions continue relying on the AI in high-stakes mental health situations.

You are reading the 140th edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework structured around progressive cognitive levels: starting with knowing and understanding, then using and applying, followed by analyzing and evaluating, and finally creating through a capstone project, with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our AIGP 8-week personalized training program. For customized enterprise training, write to us at [Link].

🚀 AI Ethics

AI Image Generation Fuels Rise in Untraceable Fake Receipts, Businesses Alerted

AI image generation tools are increasingly being used for fraud, such as creating fake expense receipts that are difficult to detect, causing significant financial losses for businesses. Research from firms like AppZen and Ramp shows a troubling rise in AI-generated fraudulent documents, with AI-made fakes growing from 0% of detected fraudulent expense documents in 2024 to about 14% by September 2025. Experts warn that this trend may continue to escalate, complicating efforts to verify authenticity and underscoring the challenges businesses face in adapting to AI-driven fraud.

AI Security System Mistakenly Flags Doritos Bag as Weapon, Student Handcuffed

A high school student in Baltimore County, Maryland, was reportedly detained and searched after an AI security system mistakenly flagged his bag of chips as a potential firearm. The student, Taki Allen, described being handcuffed after the system generated an alert, which was later canceled by the school’s security department. However, the situation was reported to a school resource officer and local police were involved. Omnilert, the company behind the AI gun detection system, expressed regret over the incident but maintained that the process functioned as intended.

TCS Denies Telegraph Claims of Involvement in Marks & Spencer Cyberattack

Tata Consultancy Services (TCS) has refuted claims made in a Telegraph report regarding its involvement in a cyberattack on Marks & Spencer (M&S), labeling the article as “misleading.” The Indian IT firm stated that the report contained inaccuracies about the contract’s size and the timeline of its relationship with M&S. TCS clarified that its service desk contract ended after a routine RFP process well before the cyber incident and that it remains a strategic partner to M&S in other capacities. The company also emphasized that it does not provide cybersecurity services to M&S and that the vulnerabilities did not originate from its systems. The Telegraph had reported that M&S ended its longstanding contract with TCS shortly after a significant cyberattack, implying the timing raised questions about the non-renewal.

ECI Issues New Guidelines for AI-Generated Content Management in Bihar Elections

Ahead of the Bihar Assembly elections, the Election Commission of India has issued new guidelines for political parties on the use of AI-generated or synthetically altered content in election campaigning. The commission highlighted concerns about such content’s potential to mislead voters and disrupt fair competition, which necessitates prominent labeling to ensure transparency. The guidelines, effective immediately, require disclosures identifying content as AI-generated and mandate quick removal of content deemed misleading, in line with the IT Rules, 2021, amid broader regulatory scrutiny of synthetic media.

India’s Draft Amendments to Regulate AI and Deepfakes Aim for Safer Internet

In a significant move towards regulating AI in India, the Union government released draft amendments to the IT Rules, 2021, aiming to control synthetically generated information such as deepfakes. The draft, open for feedback until November 6, proposes mandatory labelling and metadata embedding for synthetic content to ensure transparency. It seeks to enhance the responsibilities of social media platforms, requiring them to label and trace AI-generated media to safeguard against misuse. These amendments respond to concerns about the harmful impact of deepfakes on public trust and integrity, positioning India as a pioneer in global AI regulation if adopted.
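The draft does not prescribe a file format for embedded metadata. As a hypothetical illustration of what metadata embedding can mean in practice, this standard-library Python sketch writes a text label into a PNG's `tEXt` chunk. The `AI-Generated` keyword and all helper names are assumptions, not part of the proposed rules; production systems would more likely rely on an established provenance standard such as C2PA.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def make_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Build a PNG chunk: 4-byte length, type, data, CRC-32 of type + data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def iter_chunks(png: bytes):
    """Yield (type, data) pairs for every chunk after the signature."""
    if not png.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos = len(PNG_SIGNATURE)
    while pos < len(png):
        (length,) = struct.unpack(">I", png[pos:pos + 4])
        yield png[pos + 4:pos + 8], png[pos + 8:pos + 8 + length]
        pos += 12 + length  # length + type + data + CRC


def add_synthetic_label(png: bytes, key: str = "AI-Generated", value: str = "true") -> bytes:
    """Insert a tEXt chunk directly after IHDR, which must be the first chunk."""
    if png[12:16] != b"IHDR":
        raise ValueError("IHDR chunk not found where expected")
    ihdr_end = len(PNG_SIGNATURE) + 12 + 13  # IHDR data is always 13 bytes
    text = key.encode("latin-1") + b"\x00" + value.encode("latin-1")
    return png[:ihdr_end] + make_chunk(b"tEXt", text) + png[ihdr_end:]


def read_text_labels(png: bytes) -> dict:
    """Collect all tEXt key/value pairs."""
    labels = {}
    for chunk_type, data in iter_chunks(png):
        if chunk_type == b"tEXt":
            key, _, value = data.partition(b"\x00")
            labels[key.decode("latin-1")] = value.decode("latin-1")
    return labels


def tiny_png() -> bytes:
    """A valid 1x1 grayscale PNG to demonstrate on."""
    ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)
    idat = zlib.compress(b"\x00\x00")  # filter byte + one black pixel
    return (PNG_SIGNATURE + make_chunk(b"IHDR", ihdr)
            + make_chunk(b"IDAT", idat) + make_chunk(b"IEND", b""))


labeled = add_synthetic_label(tiny_png())
print(read_text_labels(labeled))  # {'AI-Generated': 'true'}
```

Plain metadata like this is easily lost when a file is re-encoded or screenshotted, which is why visible labels and embedded metadata are usually treated as complementary.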

Albania’s AI Minister Diella to “Deliver” 83 Digital Assistants for Parliament

Albanian Prime Minister Edi Rama announced that Diella, the country’s AI-generated Minister of State for Artificial Intelligence, is metaphorically “pregnant” with 83 digital assistants, each designed to assist Socialist Party members of parliament. Unveiled at the Global Dialogue in Berlin, this initiative aims to enhance parliamentary efficiency by providing each member with an AI assistant to participate in sessions, keep records, and offer suggestions. Diella, initially launched in January on the e-Albania platform as a virtual assistant for accessing state documents, became the first AI entity to serve as a government minister in Albania. The initiative also supports the country’s goal of a transparent, corruption-free public procurement system by 2026.

⚖️ AI Breakthroughs

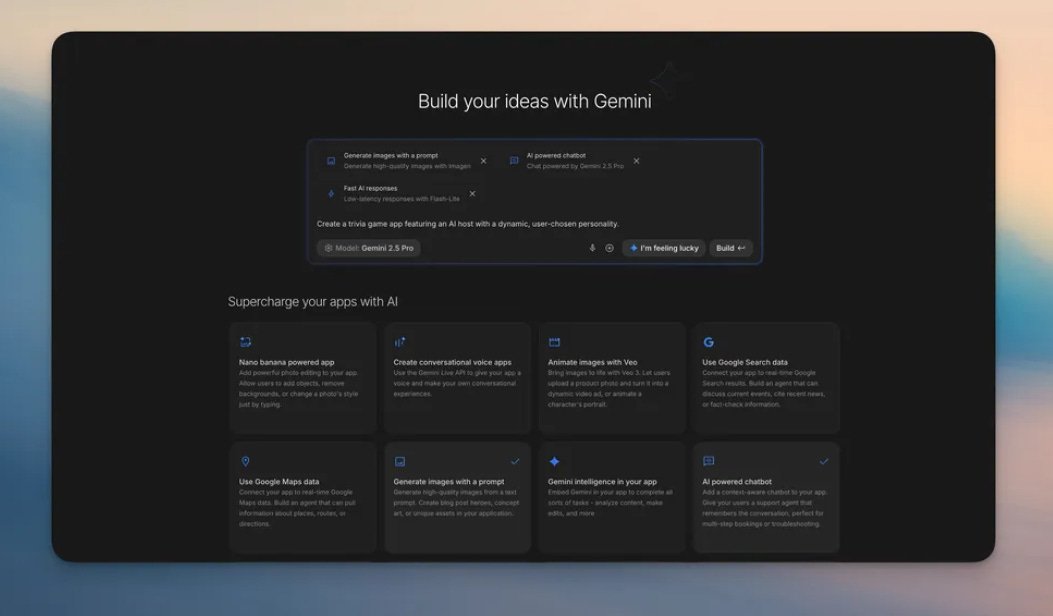

Google’s AI Studio Enhances App Creation Process with New Vibe Coding Feature

Google has introduced “vibe coding” to its AI Studio, leveraging its latest Gemini models to simplify the process of creating AI-powered applications. This new experience enables developers to transform prompts into functional apps without the need to manage APIs or integrate various models manually. The updated interface supports creativity with features like a brainstorming loading screen and annotation mode, designed for intuitive app modifications. These enhancements aim to lower the barriers for developers and novices alike, empowering more users to build innovative applications with ease.

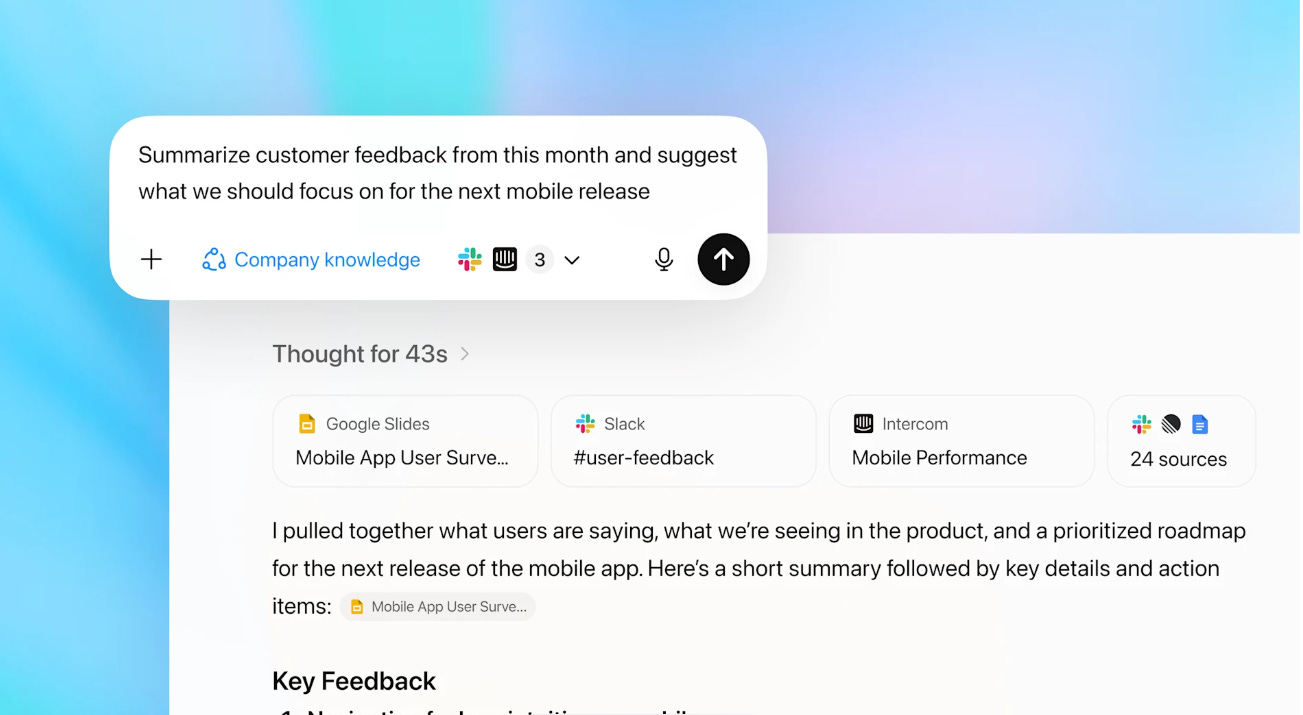

OpenAI Enhances ChatGPT for Business with New Company Knowledge Feature Integration

OpenAI has introduced a feature called Company Knowledge for ChatGPT Business, Enterprise, and Edu users, enabling the AI to access and analyze data from workplace tools like Slack, Google Drive, and GitHub. This functionality, powered by a variant of GPT-5, aids employees in receiving context-sensitive answers based on internal company information, facilitating quicker decision-making. The feature respects existing permissions and does not use customer data for model training, while administrators can oversee access and activity through the Compliance API. Users must currently enable it at each conversation’s start, with plans for broader integration in future updates.
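OpenAI has not detailed how Company Knowledge enforces existing permissions. A common pattern for permission-aware retrieval is to filter by access rights before ranking, so that nothing a user cannot open reaches the model. A minimal Python sketch of that pattern, with a hypothetical `Document` type and naive keyword overlap standing in for real search:

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    doc_id: str
    source: str  # e.g. "slack", "drive", "github"
    text: str
    allowed_users: set = field(default_factory=set)


def retrieve(query: str, user: str, corpus: list) -> list:
    """Return matching documents the user can already open, best match first.

    Permissions are applied before ranking, so a document the user could not
    see in the original tool never enters the model's context.
    """
    terms = set(query.lower().split())
    visible = [doc for doc in corpus if user in doc.allowed_users]
    scored = []
    for doc in visible:
        overlap = len(terms & set(doc.text.lower().split()))
        if overlap:
            scored.append((overlap, doc.doc_id, doc))
    scored.sort(key=lambda item: (-item[0], item[1]))  # most overlap, then by id
    return [doc for _, _, doc in scored]


corpus = [
    Document("d1", "drive", "Q3 launch plan for search", {"alice", "bob"}),
    Document("d2", "slack", "launch delayed pending budget", {"bob"}),
    Document("d3", "github", "README for the launch tooling", {"alice"}),
]
print([d.doc_id for d in retrieve("launch plan", "alice", corpus)])  # ['d1', 'd3']
```

Filtering first, rather than redacting after ranking, keeps the access decision in one place and mirrors the source tools' own permissions.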

OpenAI Might Be Developing a Music Generation Tool Using Text and Audio Prompts

OpenAI is reportedly developing a new tool designed to generate music from text and audio prompts, potentially enhancing video content or complementing vocal tracks with instruments like guitar. This endeavor includes collaboration with Juilliard School students to annotate scores for training data. Although OpenAI has a history of working on generative music models, this new initiative represents a shift from their recent focus on audio models related to text-to-speech and speech-to-text. The timeline for the tool’s release and its potential integration with existing OpenAI products remain unclear, with similar technologies also being pursued by companies like Google and Suno.

Anthropic Expands Google Cloud Partnership, Deploys Massive TPU Infrastructure by 2026

Anthropic is set to expand its collaboration with Google Cloud by deploying one million Tensor Processing Units (TPUs), a move valued at tens of billions of dollars with over a gigawatt of capacity expected by 2026. This expansion underscores Anthropic’s strategy to leverage TPUs, along with Amazon’s Trainium and NVIDIA’s GPUs, to boost its AI models like Claude, particularly in the business sector where the company supports over 300,000 customers. This development is part of a robust partnership with Google, which also has a 14% stake in Anthropic, contributing significantly to its AI advancements alongside other major industry players like Apple.

Google Integrates Gemini Models into Earth AI for Enhanced Environmental Insights

Google has enhanced its Earth AI by integrating Geospatial Reasoning using its Gemini models, offering a comprehensive tool for understanding and responding to environmental and disaster challenges. This upgrade automates the connection of datasets like satellite imagery and weather forecasts to deliver insights that previously required extensive analytics. During the 2025 California wildfires, its AI-driven alerts helped millions of people evacuate safely. Earth AI’s new capabilities, also accessible via Google Cloud for Trusted Testers, include detecting ecological changes and aiding nonprofits and organizations in identifying vulnerable communities for targeted aid. These advancements initially target US-based Google Earth Professional and Google AI Pro subscribers, with pilots including cholera tracking and improved hurricane prediction models.

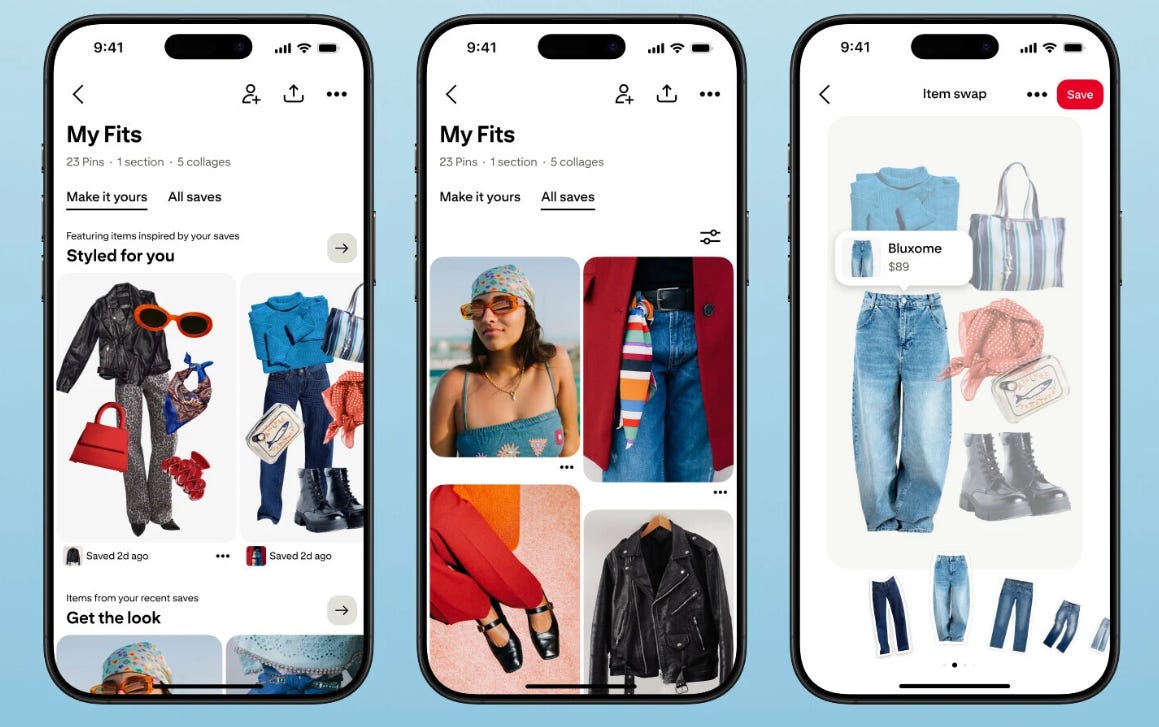

Pinterest Introduces AI-Enhanced Boards to Personalize Outfits and Curated Suggestions

Pinterest announced new AI-powered upgrades to its boards aimed at personalizing user experiences. Key features include “Styled for you,” an AI-driven collage to create outfits from saved fashion Pins, and “Boards made for you,” personalized boards combining editorial input and AI suggestions. These enhancements, initially tested in the U.S. and Canada, are intended to transform Pinterest into an “AI-enabled shopping assistant.” Despite embracing AI for personalization, Pinterest is also implementing controls to limit AI-generated content visibility on its platform. Additionally, new tabs like “Make It Yours” and “More Ideas” will organize Pins into categories, with updates rolling out globally in the coming months.

Fitbit’s AI-Powered “Coach” Now Available on Android for Premium Users in U.S.

Fitbit’s new health coach feature, powered by Google’s Gemini AI, is being released as a public preview to U.S.-based Premium subscribers using Android, with plans to expand to iOS later this year. Named “Coach,” the feature acts as a comprehensive fitness trainer, sleep advisor, and health consultant, offering personalized routines, real-time workout adjustments, and sleep habit analyses. Alongside Coach, the Fitbit app has been redesigned with four main tabs: Today, Fitness, Sleep, and Health, each offering targeted functionalities to streamline user experience and provide detailed health insights.

Google Partners with USO to Launch AI-Powered 3D Video for Deployed Troops

Google is partnering with the United Service Organizations (USO) to introduce a pilot program using Google Beam, an AI-powered 3D video communication platform, to help active duty service members stay connected with their families during long deployments. Set to launch in USO centers across the U.S. and globally next year, this initiative aims to enable service members to participate in family moments like birthdays and bedtime stories with the immersive feeling of being in the same room. The USO has historically supported military community well-being and connectivity, and this technology is seen as an enhancement of this mission.

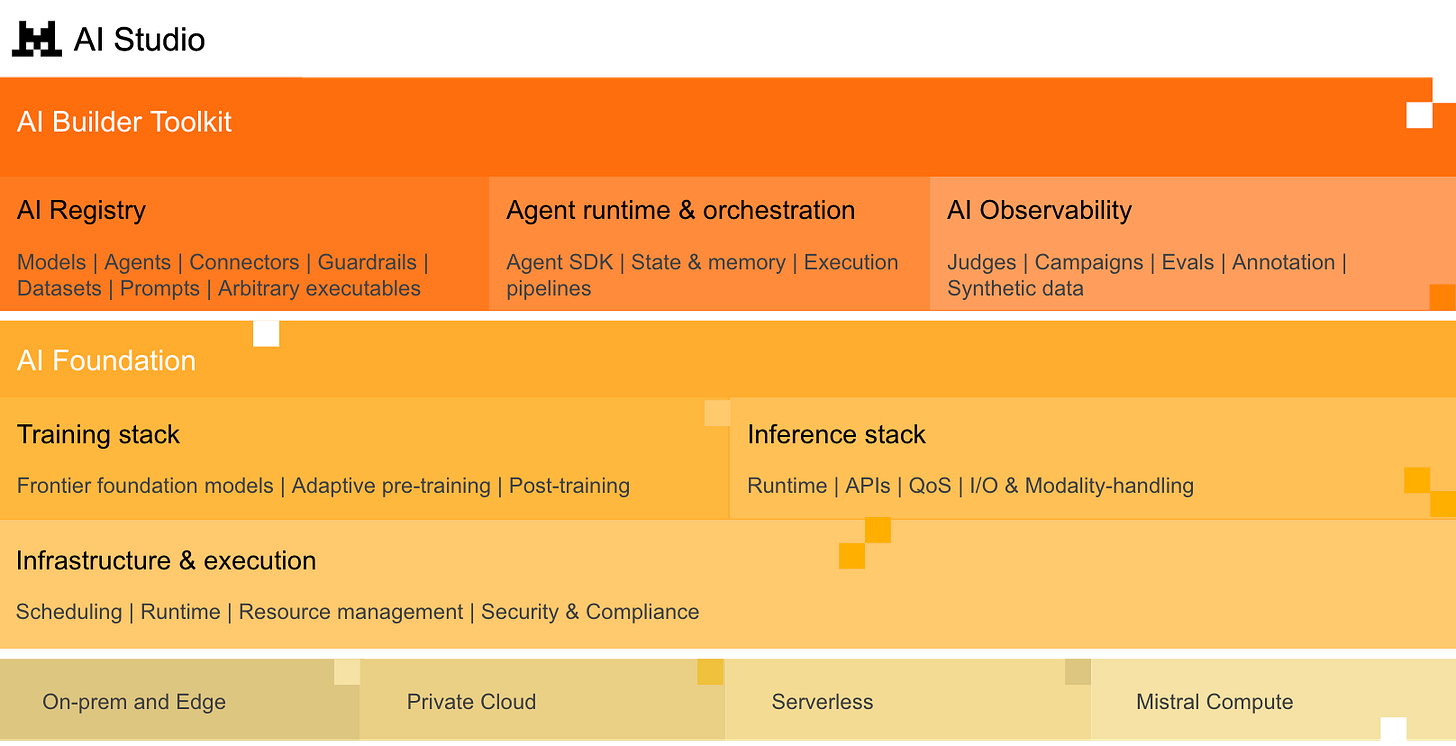

Mistral AI Studio Aims to Bridge Enterprise AI Production Gap with New Platform

Mistral has launched Mistral AI Studio, an enterprise platform aimed at overcoming the barriers that have stalled AI adoption at the prototype stage. Although AI models and use cases are well developed, enterprise teams struggle with production-level challenges such as tracking output changes, reproducing results, and meeting compliance standards. Mistral AI Studio addresses these issues through its three core components: Observability, Agent Runtime, and AI Registry, providing infrastructure that supports continuous improvement, governance, and secure, durable deployments. This platform enables enterprises to transition from AI experimentation to reliable operations by integrating observation and execution into a governed system, enhancing traceability and deployment accountability.
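Reproducing results and tracking output changes usually starts with recording exactly what produced each output. The following is a generic Python sketch of that idea, not Mistral AI Studio's API: `run_fingerprint`, `log_run`, and `changed_outputs` are hypothetical helpers that hash the model name, parameters, and prompt so runs with identical configurations can be compared.

```python
import hashlib
import json


def run_fingerprint(model: str, params: dict, prompt: str) -> str:
    """Stable SHA-256 over everything that determines a model call.

    sort_keys makes the hash independent of dict ordering, so identical
    configurations always produce the same fingerprint.
    """
    payload = json.dumps(
        {"model": model, "params": params, "prompt": prompt},
        sort_keys=True, separators=(",", ":"),
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def log_run(registry: list, model: str, params: dict, prompt: str, output: str) -> dict:
    """Append one traceable record to an in-memory registry."""
    record = {"fingerprint": run_fingerprint(model, params, prompt),
              "model": model, "params": params, "output": output}
    registry.append(record)
    return record


def changed_outputs(registry: list) -> list:
    """Fingerprints whose outputs differ across runs with the same configuration."""
    first_seen, changed = {}, set()
    for rec in registry:
        fp = rec["fingerprint"]
        if fp in first_seen and first_seen[fp] != rec["output"]:
            changed.add(fp)
        first_seen.setdefault(fp, rec["output"])
    return sorted(changed)
```

A changed output under an unchanged fingerprint points to nondeterminism or a silent upstream model change, the kind of drift an observability layer is meant to surface.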

Mphasis Launches NeoIP Platform to Transform Enterprises with AI-Driven Solutions

Mphasis has unveiled NeoIP, an AI platform designed to facilitate continuous enterprise transformation by integrating legacy data with intelligent engineering. NeoIP connects data, systems, and processes, aiming to modernize operations for enterprises dealing with fragmented legacy infrastructures. The platform supports multiple data environments and leverages a component called Ontosphere to automate decision-making and predict issues. Promoting a collaborative AI-human work environment, Mphasis claims NeoIP offers substantial improvements in development efficiency, significantly benefiting early adopters.

Stability AI and EA Join Forces to Develop Generative AI for Game Design

Stability AI and Electronic Arts (EA) have formed a strategic partnership to develop generative AI models and tools that promise to revolutionize game design and development. This collaboration combines EA’s extensive experience in interactive entertainment with Stability AI’s expertise in multi-modal generative AI to enhance creative workflows. By integrating Stability AI’s 3D research team into EA’s ecosystem, the partnership aims to speed up the creation of Physically Based Rendering (PBR) materials and facilitate the AI-driven pre-visualization of 3D environments. This move is part of Stability AI’s broader strategy to work with major industry players to scale creative production using generative AI.

🎓AI Academia

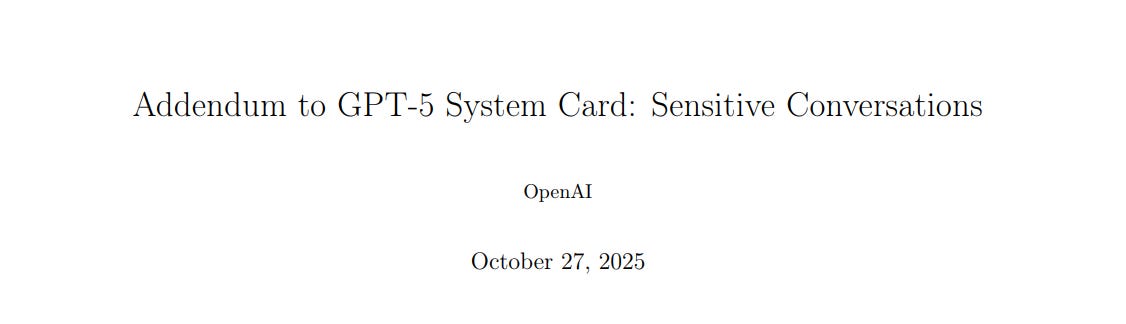

OpenAI Updates GPT-5 to Better Handle Sensitive Conversations and Emotional Support

OpenAI has released an update to its GPT-5 model to enhance safety in sensitive conversations, specifically targeting recognition and support for users in emotional or mental distress. The update, deployed on October 3, involved collaboration with over 170 mental health experts and has reportedly improved the model’s ability to guide distressed individuals toward appropriate support, reducing inadequate responses by 65-80%. This is detailed in an addendum to the GPT-5 system card, which provides baseline safety evaluations comparing the model’s performance before and after the update.

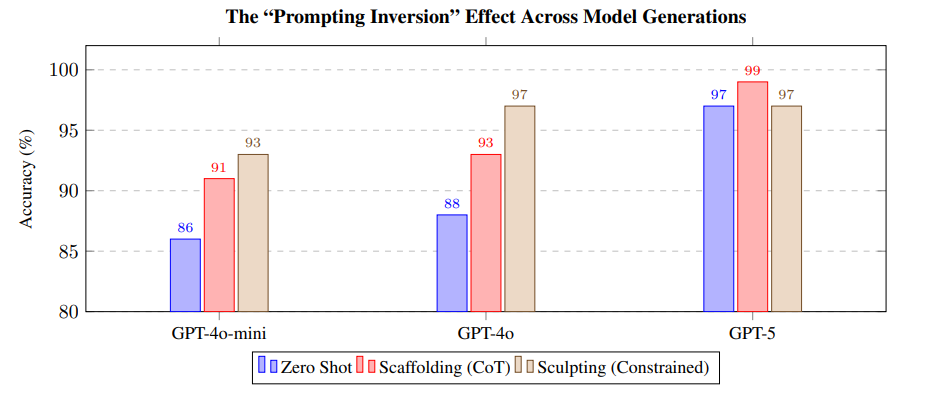

Advanced GPT-5 Models Require Simpler Prompts as Sculpting Shows Inversion

A recent study introduces “Sculpting,” a rule-based prompting technique aiming to enhance the reasoning capabilities of large language models by reducing semantic ambiguity and common-sense errors. Compared against traditional Chain-of-Thought (CoT) methods using the GSM8K mathematical reasoning benchmark, Sculpting demonstrates improved performance on mid-tier models like OpenAI’s gpt-4o but underperforms on the more advanced gpt-5 model. This “Prompting Inversion” suggests that simpler prompting strategies may be more effective for models with greater capabilities, underscoring the need for adaptive prompting approaches as AI technology evolves.
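The contrast between the two strategies is easiest to see in the prompts themselves. This Python sketch uses hypothetical templates: the chain-of-thought prompt uses the familiar "Let's think step by step" cue, while the rule list only imitates the spirit of Sculpting and is not the prompt used in the study. The tier-to-strategy mapping encodes only the two results reported above.

```python
COT_TEMPLATE = (
    "Solve the following math word problem. Let's think step by step.\n\n"
    "Problem: {question}\nAnswer:"
)

# Hypothetical rule list imitating the idea of Sculpting (constraining the
# model to cut ambiguity and common-sense slips). Not the study's prompt.
SCULPTING_TEMPLATE = (
    "Solve the following math word problem. Follow these rules strictly:\n"
    "1. Use only the quantities stated in the problem.\n"
    "2. Do not add outside assumptions unless the problem implies them.\n"
    "3. Restate exactly what is being asked before calculating.\n"
    "4. Show each arithmetic step and end with 'Final answer: <number>'.\n\n"
    "Problem: {question}\nAnswer:"
)

TEMPLATES = {"cot": COT_TEMPLATE, "sculpting": SCULPTING_TEMPLATE}

# Only the two outcomes reported: Sculpting helped gpt-4o (mid-tier)
# but underperformed chain-of-thought on gpt-5.
STRATEGY_BY_TIER = {"mid": "sculpting", "frontier": "cot"}


def build_prompt(question: str, model_tier: str) -> str:
    """Pick a prompting strategy for the model tier and fill in the question."""
    strategy = STRATEGY_BY_TIER[model_tier]  # KeyError for untested tiers
    return TEMPLATES[strategy].format(question=question)


print(build_prompt("Tom has 3 apples and buys 2 more. How many does he have?", "frontier"))
```

Raising on an unknown tier, instead of defaulting, avoids silently extrapolating the inversion result to models it was not measured on.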

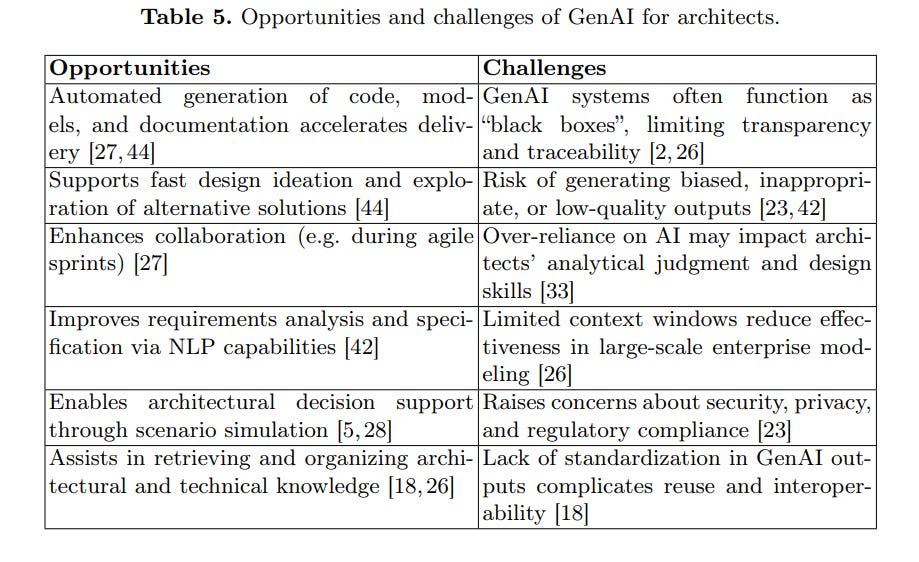

Generative AI Reshaping Enterprise Architecture in Agile Settings: A Literature Review

A systematic literature review conducted at the University of Twente highlights how generative AI is transforming enterprise architecture within agile environments. The study, analyzing 1,697 records and focusing on 33 key studies, identifies that generative AI aids in design ideation, rapid creation, artifact refinement, and architectural decision support. Concurrently, it also presents risks such as bias, privacy concerns, and potentially incorrect outputs. The review stresses the need for adaptive governance and capability building, pointing out essential new skills like prompt engineering. This research provides a foundation for responsibly integrating generative AI in enterprise architecture, promoting digital transformation while maintaining structural integrity.

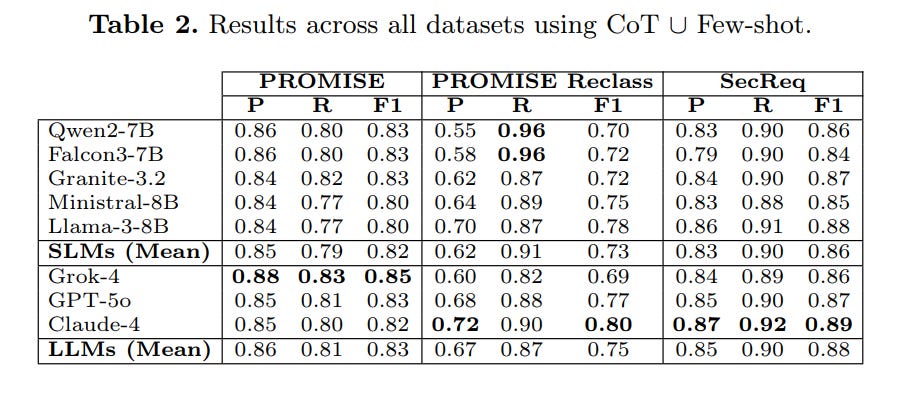

Study Evaluates Small Versus Large Language Models in Requirements Classification Task

A recent study has examined the performance differences between Small Language Models (SLMs) and Large Language Models (LLMs) in requirements classification, a crucial task in requirements engineering. Despite LLMs showing slightly higher average F1 scores, SLMs, which are significantly smaller and more cost-effective, nearly match their larger counterparts in accuracy across various datasets. Interestingly, SLMs even outperformed LLMs in recall on one specific dataset, suggesting that the characteristics of the dataset have a more substantial impact on performance than the model size itself. These findings indicate that SLMs are a viable, efficient alternative to LLMs, offering benefits in privacy, cost, and ease of deployment.
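The comparison rests on standard classification metrics. A minimal Python sketch of per-class precision, recall, and F1, plus a macro-averaged F1, on hypothetical functional (`F`) versus non-functional (`NF`) requirement labels; which averaging scheme the study used is not stated here.

```python
def precision_recall_f1(y_true: list, y_pred: list, positive: str) -> tuple:
    """Per-class metrics, treating `positive` as the class of interest."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def macro_f1(y_true: list, y_pred: list) -> float:
    """Unweighted mean of per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    return sum(precision_recall_f1(y_true, y_pred, lab)[2] for lab in labels) / len(labels)


# Hypothetical gold labels and model predictions for six requirements.
gold = ["F", "NF", "F", "NF", "F", "F"]
pred = ["F", "F", "F", "NF", "NF", "F"]
print(precision_recall_f1(gold, pred, "F"))   # (0.75, 0.75, 0.75)
print(precision_recall_f1(gold, pred, "NF"))  # (0.5, 0.5, 0.5)
print(macro_f1(gold, pred))                   # 0.625
```

Recall counts only missed positives, so a smaller model can beat a larger one on recall for a given dataset while trailing slightly on overall F1.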

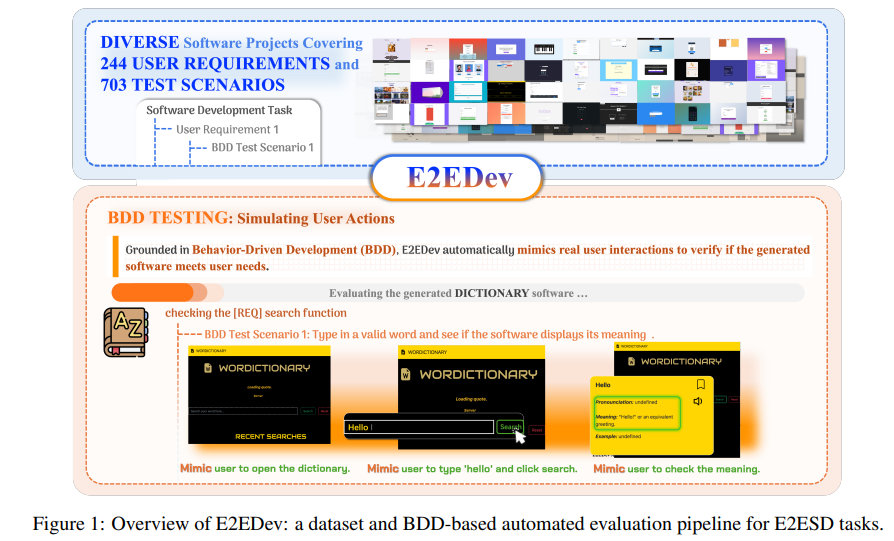

New Benchmark E2EDev Evaluates Large Language Models in Software Development Tasks

A new paper highlights the limitations of existing benchmarks for large language models (LLMs) in End-to-End Software Development (E2ESD) tasks, emphasizing issues with broad requirement specifications and unreliable evaluations. The authors propose E2EDev, a benchmark using Behavior-Driven Development (BDD) principles to assess whether generated software meets user needs by simulating real user interactions. E2EDev includes detailed user requirements, multiple BDD test scenarios with Python implementations, and an automated testing pipeline. Initial evaluations reveal ongoing challenges in effectively solving E2ESD tasks, underscoring the need for more refined solutions. The codebase and benchmark are publicly accessible online.
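In BDD, each scenario is written as Given/When/Then steps in plain language, and each step is bound to executable code. The following Python sketch is a hand-rolled illustration of that binding, similar in spirit to frameworks such as behave; `TodoApp` is a hypothetical stand-in for a generated application, and nothing here reproduces E2EDev's actual test harness.

```python
import re

SCENARIO = """\
Scenario: Add an item to an empty to-do list
  Given an empty to-do list
  When the user adds "buy milk"
  Then the list shows 1 item
  And the first item is "buy milk"
"""


class TodoApp:
    """Hypothetical stand-in for the generated application under test."""

    def __init__(self):
        self.items = []

    def add(self, text: str) -> None:
        self.items.append(text)


STEPS = []  # (compiled pattern, step function) pairs


def step(pattern: str):
    """Decorator that binds a plain-language step pattern to a function."""
    def register(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return register


@step(r"an empty to-do list")
def given_empty_list(ctx):
    ctx["app"] = TodoApp()


@step(r'the user adds "(.+)"')
def when_user_adds(ctx, text):
    ctx["app"].add(text)


@step(r"the list shows (\d+) items?")
def then_item_count(ctx, count):
    assert len(ctx["app"].items) == int(count)


@step(r'the first item is "(.+)"')
def then_first_item(ctx, text):
    assert ctx["app"].items[0] == text


def run_scenario(text: str) -> dict:
    """Execute each Given/When/Then/And line; a failed check raises AssertionError."""
    ctx = {}
    for line in text.splitlines()[1:]:  # skip the "Scenario:" title
        keyword, _, body = line.strip().partition(" ")
        if keyword not in {"Given", "When", "Then", "And"}:
            continue
        for pattern, func in STEPS:
            match = pattern.fullmatch(body)
            if match:
                func(ctx, *match.groups())
                break
        else:
            raise LookupError(f"no step definition matches: {body!r}")
    return ctx


print(run_scenario(SCENARIO)["app"].items)  # ['buy milk']
```

Because the scenario text is the test, a requirement that cannot be phrased as concrete steps is flagged early, which is the motivation for grounding evaluation in user-facing behavior.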

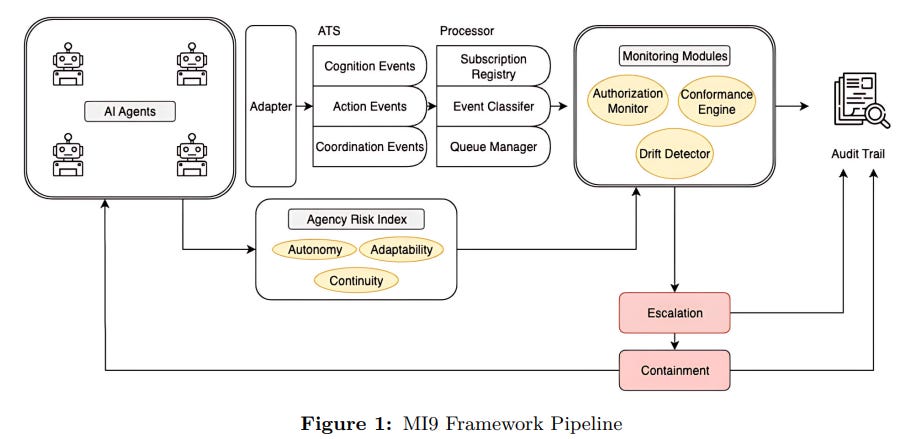

Integrated Framework MI9 Enhances Runtime Governance for Agentic AI Systems

The MI9 framework has been developed as a governance solution for agentic AI systems, which present unique challenges due to their autonomous capabilities like reasoning and planning. Unlike traditional models, these AI systems may exhibit unexpected behaviors during execution. MI9 addresses these issues by enforcing safety measures over live behavior sequences with six mechanisms, such as agency-risk indexing and goal-aware monitoring. The framework is designed to operate agnostically across various agent stacks, ensuring runtime safety for large-scale deployments. Evaluations of MI9 over diverse scenarios have shown high detection efficiency with low false positive rates, providing a robust foundation for the oversight of agentic AI. The framework’s resources are open-sourced for reproducibility.
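This is not MI9's implementation; it is a hypothetical Python illustration of two generic runtime-governance ideas in the same spirit: checking each live action against a finite-state policy of allowed transitions, and pausing the agent once a cumulative risk budget is spent. The transition table, risk weights, and budget are all invented for the example.

```python
from dataclasses import dataclass, field

# Hypothetical policy: which action may follow which in an approved workflow.
ALLOWED_TRANSITIONS = {
    "start": {"search", "read_file"},
    "search": {"search", "read_file", "summarize"},
    "read_file": {"read_file", "summarize"},
    "summarize": {"send_email", "done"},
    "send_email": {"done"},
}

# Hypothetical risk weights; actions missing from the table use DEFAULT_RISK.
ACTION_RISK = {"search": 1, "read_file": 1, "summarize": 1, "send_email": 3, "done": 0}
DEFAULT_RISK = 5


@dataclass
class RuntimeMonitor:
    risk_budget: int = 6
    state: str = "start"
    risk_used: int = 0
    alerts: list = field(default_factory=list)

    def observe(self, action: str) -> bool:
        """Vet one live action; False means the agent should be paused."""
        if action not in ALLOWED_TRANSITIONS.get(self.state, set()):
            self.alerts.append(f"unexpected transition: {self.state} -> {action}")
            return False
        self.risk_used += ACTION_RISK.get(action, DEFAULT_RISK)
        if self.risk_used > self.risk_budget:
            self.alerts.append(f"risk budget exceeded at: {action}")
            return False
        self.state = action
        return True


monitor = RuntimeMonitor()
for action in ["search", "read_file", "delete_file"]:
    if not monitor.observe(action):
        break
print(monitor.alerts)  # ['unexpected transition: read_file -> delete_file']
```

Checking sequences rather than single calls is what lets a monitor like this catch an action that is harmless in isolation but out of place in context.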

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.