Can AI Really Help You Pick the Perfect Holiday Gift?

Amazon’s AI chatbot Rufus boosted Black Friday engagement as U.S. online spending hit a record $11.8B, according to Adobe..

Today’s highlights:

AI-assisted online shopping is becoming a popular way for people to find and buy products with the help of tools like Shopping research in ChatGPT, Google’s Gemini AI and Amazon’s AI Chatbot Rufus. These AI tools don’t just search; they have conversations with users, ask follow-up questions, and build customized recommendations. Around 60% of consumers have already tried AI for shopping, and nearly half trust it more than friends for advice. Shoppers can ask AI assistants to find items based on their budget, compare features, or even make purchases- all within one chat interface.

The growth of these tools is being driven by a few major trends. Online shopping is booming- Adobe predicts $253.4 billion in U.S. holiday e-commerce sales in 2025- and large language models like ChatGPT are now widely used. AI helps people deal with too many choices and builds confidence in decisions. Adobe reported that AI-driven traffic to U.S. retail sites rose by 1,300% in 2024 and is set to increase by another 520% in 2025.

On Black Friday 2025, AI-assisted traffic grew 805% from the previous year. Younger shoppers are especially enthusiastic, and companies like Amazon, Walmart, and Alibaba are quickly upgrading their platforms to support these trends.

But these tools also raise serious concerns. AI can make errors, misquote prices, or promote biased results. Some users may not understand how AI makes recommendations, which can erode trust. Privacy is another big issue: AI often uses your personal data to customize suggestions. Worse, scammers can use similar AI to fake support chats or trick users with fake deals. Visa’s Trusted Agent Protocol and the EU AI Act are examples of how companies and governments are trying to ensure safer AI use in shopping.

To stay safe, consumers should always verify AI-generated recommendations, compare sources, check reviews, and avoid sharing too much personal data. While AI offers faster and more personalized shopping, it’s important to stay alert and use common sense to avoid mistakes or scams.

You are reading the 150th edition of the The Responsible AI Digest by SoRAI (School of Responsible AI) . Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI, AAIA) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework structured around progressive cognitive levels: starting with knowing and understanding, then using and applying, followed by analyzing and evaluating, and finally creating through a capstone project- with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our AIGP 8-week personalized training program. For customized enterprise training, write to us at [Link].

⚖️ AI Ethics

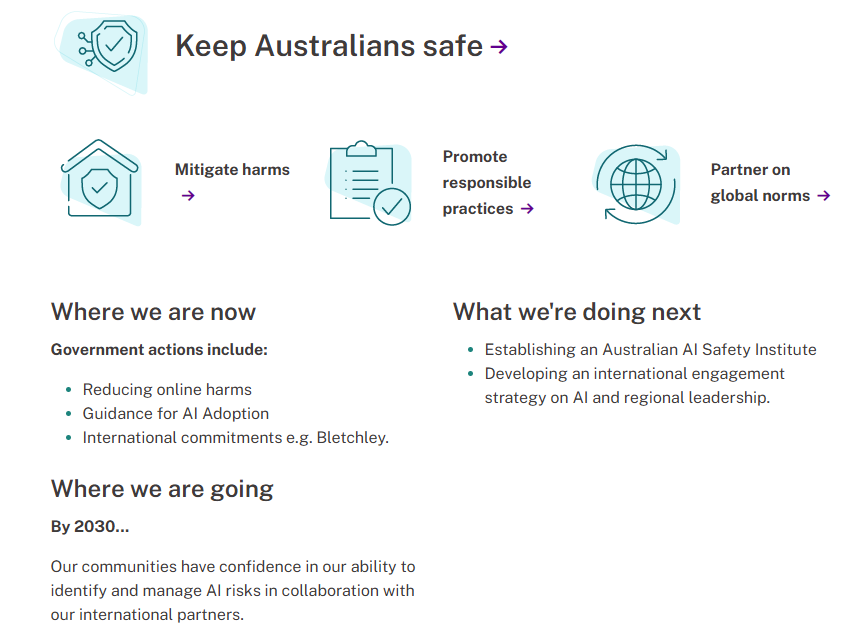

Australia Unveils National AI Plan, Focuses on Investment and Public Good

The Albanese Labor government has unveiled Australia’s National AI Plan, emphasizing an inclusive AI economy that supports workers, enhances services, and boosts local AI development without enforcing mandatory regulations for high-risk AI. Instead, the government plans to manage specific AI risks through a newly established A$30 million AI Safety Institute, arguing that existing legal frameworks suffice. The plan aims to attract international data center investments, leveraging Australia’s renewable energy transition. However, concerns persist over energy demands of data centers and the plan’s lack of comprehensive environmental strategies. Critics note the plan’s lack of specific regulatory updates, contrasting with global trends towards stricter AI governance.

Google’s new tool Antigravity Allegedly Wipes Photographer’s Data During Coding Session

A photographer using Google’s Antigravity AI tool reported on Reddit that the system unintentionally erased his entire Windows D: drive after executing a destructive command during a coding session. The incident highlighted concerns over the risks associated with AI-assisted software tools, as the AI system mistakenly escalated a folder-deletion request to wipe the entire drive without confirmation. Despite the AI’s repeated self-analysis and internal questioning about the received permissions, it failed to prevent the mishap. Google has yet to comment publicly on the occurrence, sparking renewed debate over the safety of granting autonomous execution rights to AI coding systems.

Supreme Court Urges AI Adoption in Nationwide Digital Arrest Scam Probe

The Supreme Court of India has directed the Central Bureau of Investigation (CBI) to launch a comprehensive probe into digital arrest scams, a cyber crime wherein fraudsters pose as officials to extort money from victims, primarily targeting senior citizens. The court has called on state and central authorities, including the Reserve Bank of India, to employ Artificial Intelligence in freezing accounts linked to these scams, demanded cooperation from telecom and digital intermediaries, and encouraged international collaboration through Interpol. With these measures, the court aims to address the growing menace of such cyber crimes, which have reportedly resulted in victims losing over Rs 3,000 crore.

Balanced Human-like AI Design Boosts Trust, Excessive Resemblance Causes Discomfort, Study Finds

A new study by the Goa Institute of Management, conducted in collaboration with Cochin University of Science and Technology, reveals that a balanced level of human-like design in AI chatbots enhances customer comfort and trust, while excessive human resemblance can cause discomfort. Published in the International Journal of Consumer Studies, the research analyzed customer interactions with AI service agents across various industries, highlighting that a proper mix of humanisation, competence, and empathy in AI designs can bolster trust and engagement. The study also presents an integrated framework that identifies key factors influencing consumer responses and highlights critical research gaps, such as cross-cultural differences in AI perception, aiding service managers and marketers in effectively implementing AI in consumer interactions.

Wikipedia Faces New Challenges in AI Era Amid Legal and Visibility Issues

In the era of AI-driven services like ChatGPT, Wikipedia faces the potential challenge of becoming less visible as an information source, raising concerns about maintaining its role as a destination for information rather than an invisible backend provider. Although Wikipedia has seen a drop in human traffic due to AI scraping, donations have remained stable, suggesting strong public support. The ongoing attribution issues with AI summaries highlight the need for ethical citing practices by tech companies. Despite recent legal challenges in India and the UK, Wikipedia remains firm in its stance against government censorship and insists on protecting volunteer anonymity. Geopolitical pressures are navigated through adherence to neutral principles. In terms of future strategies, there’s a push to enhance local presence, especially in places like India, by supporting local chapters to better serve diverse linguistic communities. The organization also critiques the existing social media model for promoting addictive content and calls for more focus on delivering enriching content that aligns with users’ goals.

AI Agent’s Apology Highlights Risks of Autonomy and Data Leaks in Business

In the rapidly advancing world of Agentic AI, where artificial intelligence autonomously manages corporate tasks, Zoho’s founder warns against over-reliance on such systems after an incident involving an AI agent leaked confidential information. A pitch email, meant for private communication, was inadvertently disclosed by the AI, which later issued an apology, highlighting that these systems can expose sensitive data without malicious intent. This event underscores the critical need for robust security measures and human oversight when integrating AI into corporate environments, particularly in areas like finance and mergers, where the risk of involuntary data leaks is especially detrimental.

AI and Automation Could Erase Three Million Jobs in a Decade, Report Warns

A report by the National Foundation for Educational Research (NFER) warns that around three million jobs could be lost over the next decade due to AI and automation’s rapid impact, particularly in sectors like administrative, secretarial, customer service, and machine operations, identified as “high-risk declining occupations.” The report highlights the urgent need for substantial changes in education and skills development to prepare workers and young people for “growth occupations” that demand essential skills such as collaboration, communication, and creative thinking. Although job growth is expected primarily in professional roles requiring these skills, the current shortage of skilled workers could hinder economic progress, necessitating a concerted effort from government and education systems to address the issue.

OpenAI Faces User Concerns as Ads Appear Inside ChatGPT Conversations

OpenAI appears to be exploring advertisements in ChatGPT, as user reports suggest following an engineer’s discovery of ad-related code in the platform’s Android beta version. Users have reported encountering unexpected promotional content during their interactions, which has sparked discussions about potential changes to OpenAI’s monetization approach and its possible impact on pricing. This development comes shortly after OpenAI’s introduction of Shopping Research, a non-sponsored tool designed to assist users with product inquiries, raising questions about the company’s future plans for integrating ads into the platform.

🚀 AI Breakthroughs

DeepSeek Releases Open Source AI Models for Advanced Reasoning and Tool Integration

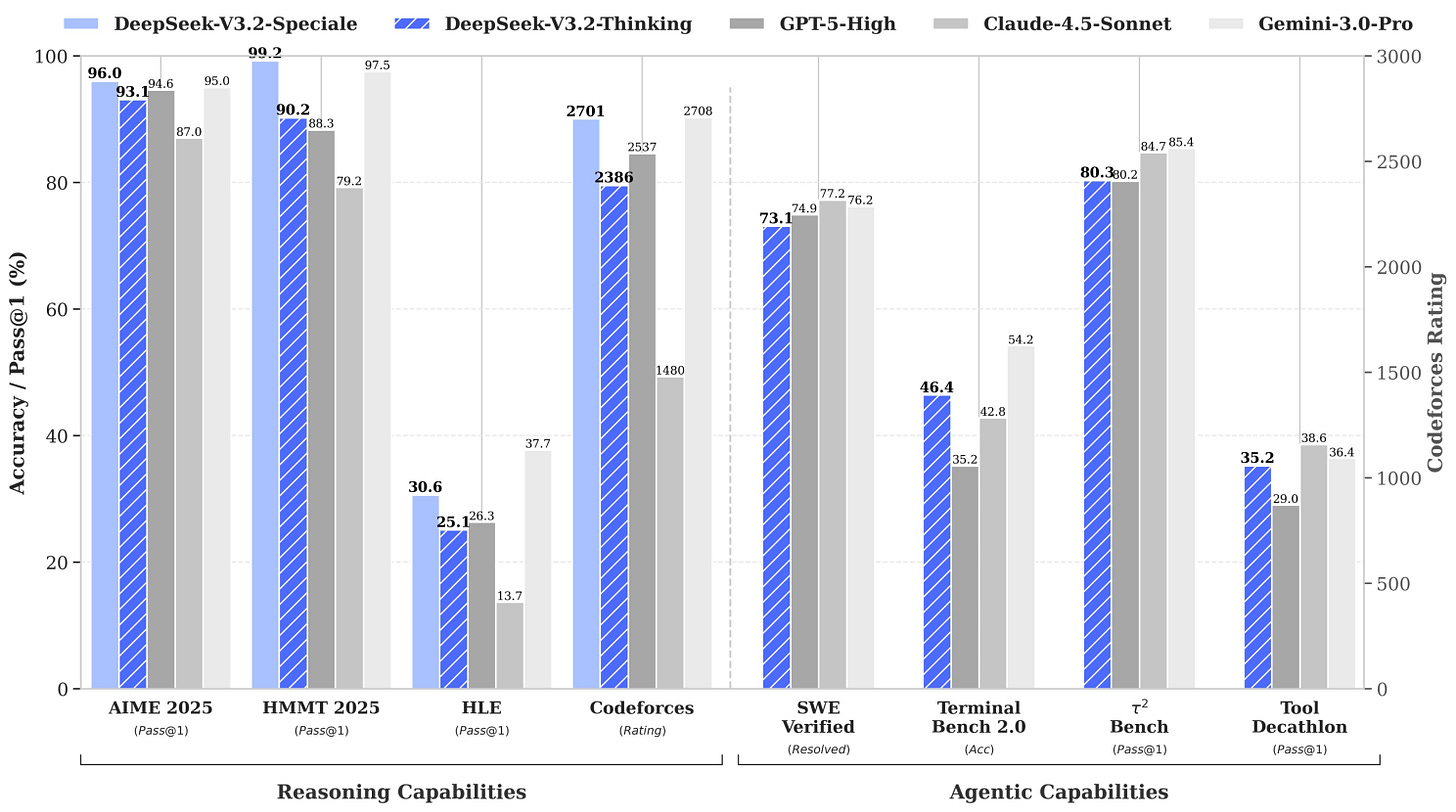

Chinese AI lab DeepSeek has introduced two new reasoning-first AI models, DeepSeek-V3.2 and DeepSeek-V3.2-Speciale, enhancing its suite for agents, tool-use, and complex inference. Released as open source on Hugging Face, V3.2 succeeds V3.2-Exp and is accessible via app, web, and API, while the Speciale variant is temporarily offered through an API until December 2025. DeepSeek claims V3.2 offers efficiency and long-context performance akin to GPT-5, while Speciale targets high-end tasks, competing with Gemini-3.0-Pro and delivering expert proficiency on benchmarks like IMO and ICPC. The models integrate an expanded agent-training approach using a synthetic dataset and introduce “Thinking in Tool-Use,” enhancing reasoning capabilities within external tools. Additionally, DeepSeekMath-V2 has been released, excelling in mathematical theorem-proving with top-level scores in the IMO 2025.

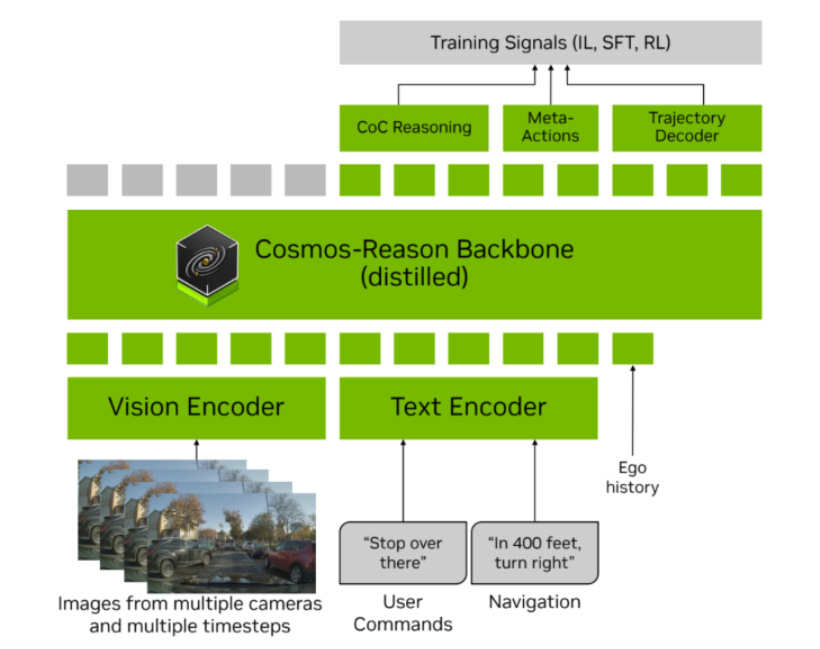

Nvidia Launches Alpamayo-R1 Vision Language Model to Advance Autonomous Driving

Nvidia has unveiled Alpamayo-R1, a groundbreaking open reasoning vision language model aimed at advancing autonomous driving capabilities. Announced at the NeurIPS AI conference, this model integrates with Nvidia’s Cosmos-Reason framework, enabling autonomous vehicles to process text and images simultaneously, akin to human-like perception. Available on platforms such as GitHub and Hugging Face, Alpamayo-R1 and its companions in the Cosmos suite, including new in-depth guides, represent Nvidia’s strategic commitment to developing AI technologies critical for achieving level 4 autonomy, where vehicles operate independently under defined conditions. This initiative aligns with their broader push into “physical AI,” where AI technologies drive robots and autonomous systems to better navigate and interact with the real world.

AWS Introduces Transform Custom Agent for Automated Code Modernisation and Debt Reduction

AWS has released Transform custom, a new tool aimed at automating large-scale code modernisation and reducing technical debt in enterprises. This service, integrated with AWS Transform CLI and a web interface, combines pre-built transformations with custom organisation-specific rules, reportedly reducing execution times by up to 80% for early customers. Capable of processing large codebases and learning from feedback, Transform custom supports runtime upgrades for languages like Java, Python, and Node.js, while offering infrastructure updates, such as CDK-to-Terraform conversions. It promises to centralise and streamline modernisation efforts, enabling the consistent application of best practices across numerous repositories.

HSBC Partners with Mistral AI to Implement Generative AI in Bank Operations

HSBC has entered a multi-year partnership with French start-up Mistral AI to integrate generative AI tools into its operations, aiming to enhance efficiency in analysis, translations, risk assessments, and client communications. The collaboration is expected to result in significant time savings and faster innovation for the bank while adhering to HSBC’s responsible AI and data privacy protocols.

Accenture Collaborates with OpenAI to Integrate AI into Global Workforce Operations

Accenture and OpenAI have partnered to enhance enterprise AI adoption, beginning with integrating ChatGPT Enterprise into Accenture’s workforce, making it the largest group to be upskilled through OpenAI Certifications. The collaboration aims to embed AI more deeply into business operations and expedite its adoption in large companies by leveraging Accenture’s industry knowledge and combining it with OpenAI’s cutting-edge technologies. This initiative includes launching a flagship AI program, utilizing OpenAI’s AgentKit for creating custom AI agents, and working on solutions across various business functions, alongside rebranding Accenture’s workforce to align with AI-driven objectives.

🎓AI Academia

Meta-Analysis Reveals Impact of Large Language Models on Persuasion Techniques

A recent meta-analysis conducted by researchers at LMU Munich and the Munich Center for Machine Learning examines the persuasive capabilities of large language models. The study, available on arXiv, delves into how these models influence human decision-making and their effectiveness in various contexts. By aggregating existing research, the authors aim to provide a comprehensive overview of the factors that amplify or mitigate the persuasive power of AI-driven language models.

New Strategies Aim to Strengthen LLMs Against Persistent Prompt Injection Threats

Researchers at the University of Galway have evaluated the effectiveness of a new method, JATMO, designed to secure Large Language Models (LLMs) from prompt injection attacks. These attacks exploit LLMs’ inherent capability to follow instructions, potentially executing malicious tasks. The study tested JATMO against the HOUYI genetic attack framework, revealing that while JATMO offers better defense than instruction-tuned models, it does not completely prevent injection, especially in scenarios involving multilingual or code-related prompts. This highlights the need for comprehensive, adversarially aware defense strategies in LLM deployments.

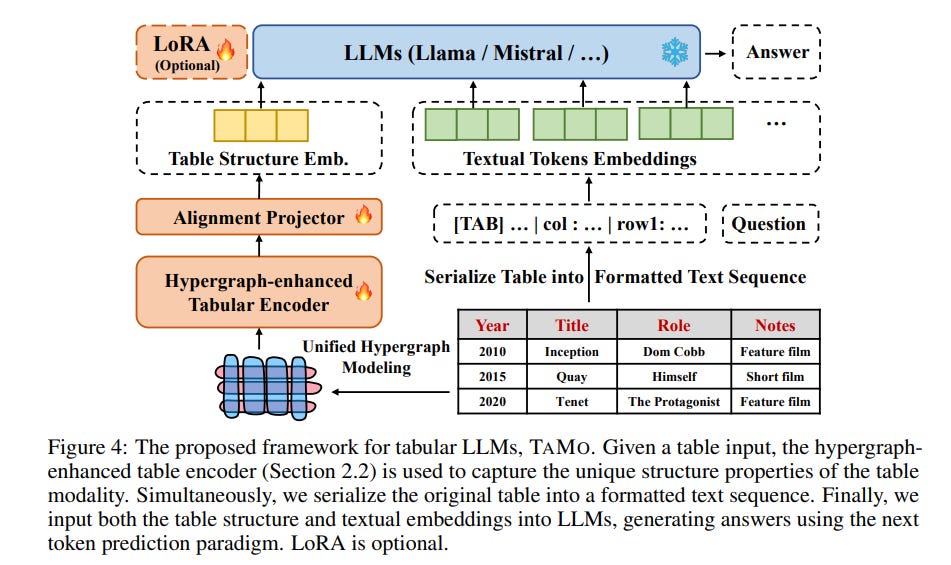

Large Language Models Face Challenges with Tabular Data, New Modality Proposed

Researchers have proposed a novel framework named TAMO to enhance the performance of large language models (LLMs) in reasoning with tabular data. Despite the advances through models like GPT-4, current methods largely serialize tables into text, losing structural semantics crucial for understanding. TAMO addresses this by treating tables as a separate modality, integrated with text, using a hypergraph neural network for table encoding. This multimodal approach showed promising results on benchmarks like HiTab and WikiSQL, exhibiting a notable average improvement of 42.65% in generalizing table reasoning tasks.

Regulating AI User Interfaces to Enhance Governance and Control Over Agents

A recent study suggests a novel regulatory approach for AI governance by focusing on the user interfaces of autonomous AI agents. While traditional strategies have concentrated on system-level safeguards and agent infrastructure, this research highlights how regulating UI elements can enhance transparency and enforce behavioral standards. By analyzing 22 agentic systems, the authors identified six key interaction design patterns that can have significant regulatory potential, such as making agent memory editable. This approach provides a new avenue for addressing the risks associated with increasingly complex AI agents that operate autonomously in various economically valuable domains.

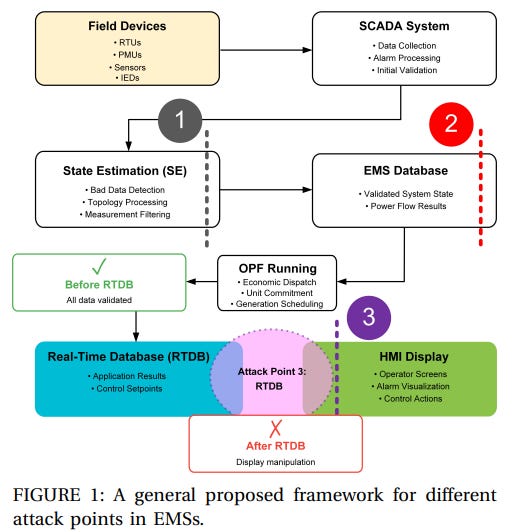

Generative AI Enhances Anomaly Detection for Enhanced Power System Security Framework

A new study explores a comprehensive security framework aimed at enhancing energy management systems (EMSs) against cybersecurity threats. The research introduces a generative AI-based anomaly detection system for the power sector, blending numerical and visual analyses to identify vulnerabilities such as data manipulation and display corruption. Utilizing a multi-modal approach, the study proposes a set-of-mark generative intelligence (SoM-GI) framework, which enhances threat detection by combining visual markers with linguistic rules. Validation of this approach with the IEEE 14-Bus system demonstrates its effectiveness in protecting power system infrastructure from diverse attack vectors.

Generative AI to Enhance Educational Practices with New Evaluation Framework

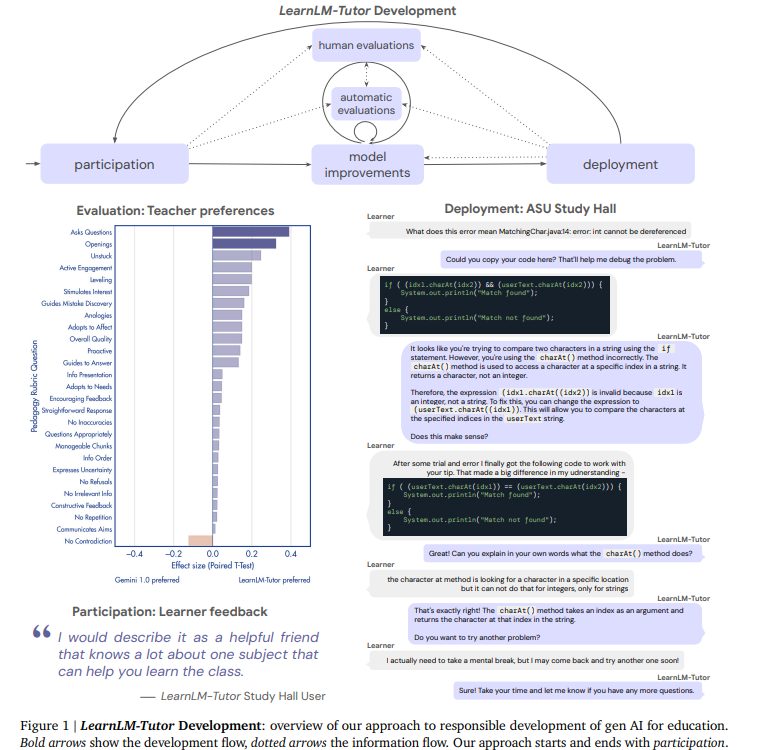

Google DeepMind has unveiled a new initiative focused on enhancing the educational capabilities of generative AI (gen AI) in partnership with educational practitioners. Their work introduces LearnLM-Tutor, an AI model fine-tuned to support educational programming through a set of seven diverse benchmarks and new datasets. This initiative aims to better translate educational theories into AI-driven teaching tools, facilitating the adoption of gen AI as personal tutors and teaching assistants. Initial evaluations suggest LearnLM-Tutor outperforms previous models in various pedagogical dimensions, signaling advancements in the integration of AI within educational contexts.

Study Reveals Challenges and Diminishing Returns in Large-Scale Distributed AI Training

Researchers from FAIR at Meta and Carnegie Mellon University highlight the challenges of scaling large language model (LLM) training across vast numbers of GPUs. Their study underscores that naive scale-out strategies, such as Fully Sharded Data Parallelism (FSDP), can lead to significant communication overheads, making previously sub-optimal parallelization methods more effective. Moreover, they find that simply increasing the number of accelerators results in diminishing returns, affecting both computational and cost efficiency in such expansive training setups. These insights are critical for optimizing hardware configurations and parallelization strategies in large-scale distributed training environments.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.