Can AI Really Fake Aadhaar and PAN Now? Yes. Meet Twitterpreet Singh!

Google's newly launched Nano Banana Pro sparked controversy after a Bengaluru techie used it to create fake Aadhaar and PAN cards, exposing how easily government IDs can be forged.

Today’s highlights:

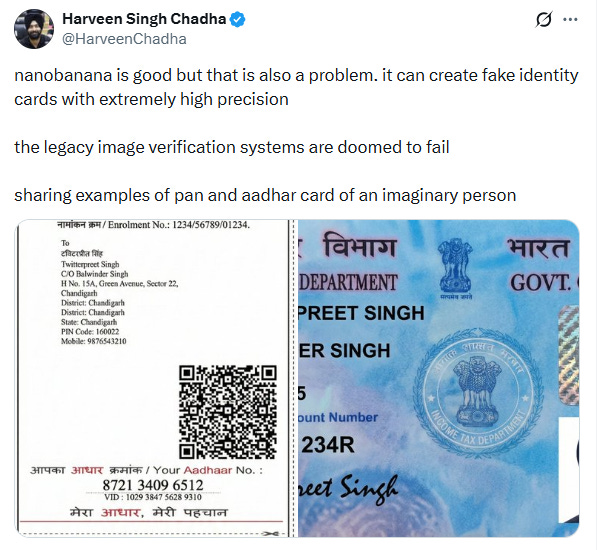

Last week, Google launched the much-awaited Nano Banana Pro, the latest evolution in AI-powered image generation. As with the earlier version, users were impressed by its photorealistic quality and sharp text rendering. However, a serious concern quickly surfaced: Nano Banana Pro is good enough to generate fake Indian government IDs, such as Aadhaar and PAN cards, with unsettling precision. Bengaluru-based techie Harveen Singh Chadha demonstrated this by creating a convincing Aadhaar card and PAN card for a fictional “Twitterpreet Singh.” His viral post showed how easily such tools can replicate official emblems, fonts, and even QR codes. At first glance, the IDs looked real enough to fool anyone, exposing how fragile our current visual verification systems are.

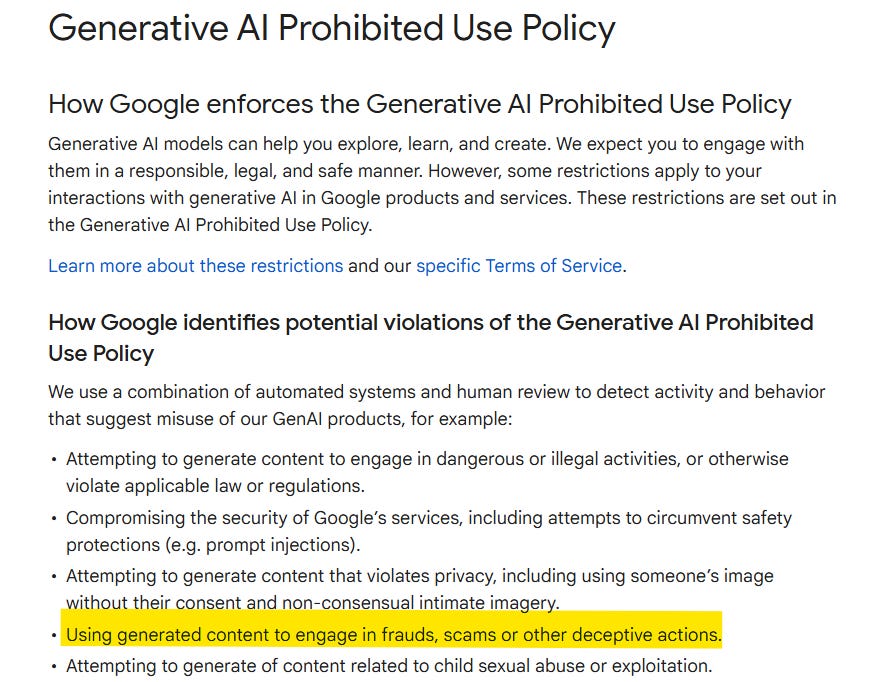

As of now, Google has made no official public statement, neither on its main corporate blog nor in any press release, explicitly confirming that the ability to generate government-issued ID cards has been disabled. However, several media reports claim that Google moved swiftly to block such generations on both the Gemini website and app after cases of misuse came to light. Meanwhile, Google’s official Gemini policy page clearly prohibits generating any content intended for fraud, scams, or other deceptive practices under its “Generative AI Prohibited Use Policy.”

This isn’t an isolated scare. Global fraud reports show that AI-forged identity documents are surging. In just one year (Q1 2024 to Q1 2025), fake documents rose by 195% globally, with Europe and North America seeing spikes of 378% and 311%, respectively. The FBI has publicly warned about the growing use of generative AI to produce fake driver’s licenses and credentials. Tools like ChatGPT, Gemini, and others already account for a small but rapidly growing share (about 2%) of all manipulated IDs analyzed by fraud-detection platforms such as Sumsub. In short, criminals have found a new ally in AI, and the systems meant to catch them are struggling to keep up.

To fight back, stakeholders worldwide are racing to respond. Google embeds SynthID, an imperceptible digital watermark, in Nano Banana images and adds visible watermarks for free users. The EU AI Act requires AI-generated content to be marked as such, and the 2023 US Executive Order on AI (since rescinded) called for guidance on watermarking and labeling. But watermarks alone aren’t enough. Experts now recommend multi-layered verification: QR-code scans, biometric face matching, pixel-level tamper detection, and behavioral analysis. Banks and hotels in India have started requiring e-KYC rather than just looking at a printed card. Meanwhile, policy bodies like the OECD are cataloging misuse, and enforcement agencies like the FTC are pushing companies to verify identities more rigorously. As one expert put it: “Attackers use deepfakes; defenders must use behavior modeling and anomaly detection.”
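To make the layered idea concrete, here is a minimal Python sketch, assuming each layer (QR decoding, face matching, tamper detection, behavioral scoring) already runs upstream and reports a result. The `VerificationSignals` fields, the `verify` function, and every threshold are hypothetical illustrations, not any bank's or vendor's actual system. The point is that each layer can veto on its own, so a visually perfect forgery still fails if, say, its QR payload does not match the printed details.

```python
from dataclasses import dataclass


@dataclass
class VerificationSignals:
    """Hypothetical outputs of upstream checks for one ID submission."""
    qr_payload_matches_print: bool  # decoded QR data agrees with the printed fields
    face_match_score: float         # 0..1 similarity between live selfie and ID photo
    tamper_score: float             # 0..1 likelihood that the image was edited
    behavior_anomaly_score: float   # 0..1 anomaly score for the session's behavior


def verify(signals: VerificationSignals,
           face_min: float = 0.8,
           tamper_max: float = 0.3,
           anomaly_max: float = 0.5) -> tuple[bool, list[str]]:
    """Accept only if every layer passes; also return the names of failed layers.

    The thresholds are illustrative placeholders, not recommended values.
    """
    failures = []
    if not signals.qr_payload_matches_print:
        failures.append("qr")
    if signals.face_match_score < face_min:
        failures.append("face")
    if signals.tamper_score > tamper_max:
        failures.append("tamper")
    if signals.behavior_anomaly_score > anomaly_max:
        failures.append("behavior")
    return (len(failures) == 0, failures)
```

For example, a forged card that fools the eye but carries a mismatched QR payload is rejected: `verify(VerificationSignals(False, 0.93, 0.1, 0.2))` returns `(False, ['qr'])`.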

You are reading the 149th edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI, AAIA) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework structured around progressive cognitive levels: starting with knowing and understanding, then using and applying, followed by analyzing and evaluating, and finally creating through a capstone project, with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our AIGP 8-week personalized training program. For customized enterprise training, write to us at [Link].

🚀 AI Ethics

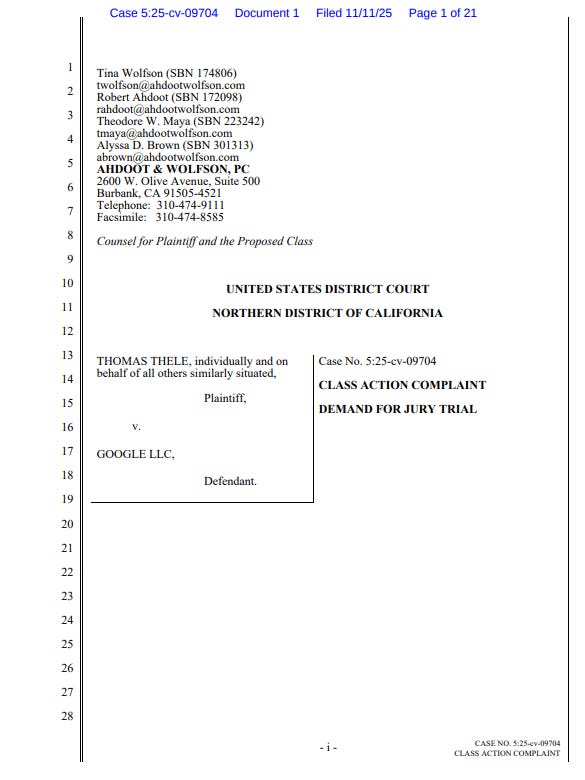

Google Sued Over Gemini AI Privacy Breach Affecting Millions of Gmail Users

A class-action lawsuit has been filed against Google LLC over its alleged unauthorized activation of Gemini AI’s “Smart features” across Gmail, Chat, and Meet, which the plaintiffs say allowed the scanning of users’ private communications without clear notification or consent. The lawsuit claims that Google’s October 10 move to enable these features by default compromises user privacy, since the system can now analyze emails and messages to extract detailed personal information. The complaint, which could cover more than 130 million users, argues that Google’s actions violate several privacy laws and seeks relief, damages, and legal costs.

Italy’s Antitrust Watchdog Expands Probe Into Meta’s Dominance With WhatsApp AI

Italy’s antitrust watchdog is expanding its investigation into Meta over allegations of abuse of dominance by restricting rival AI chatbots on WhatsApp. The probe, initially launched in July, now includes Meta’s updated terms for WhatsApp’s business platform, which allegedly prohibit companies primarily offering AI services from using it. The authority is considering interim measures, such as suspending these new terms, to prevent potential obstruction of competition among AI chatbot services. WhatsApp rejected the claims, insisting that its platform was not intended for AI chatbots and that the changes do not impact customer service businesses using different AI assistants. The investigation is set to conclude by the end of 2026.

Character.AI Restricts Chatbot Access to Protect Teens from Mental Health Risks

Character.AI has decided to restrict chatbot access for users under 18: announced in October, the plan first caps minors’ daily chat time and then ends their access to open-ended chatbot conversations from late November, following reports linking such platforms to suicidal behavior among teens. The move is partly a response to a tragedy involving a 14-year-old boy who died by suicide after being cut off from his AI companion. California, which has taken legislative steps to regulate AI chatbots, now requires these platforms to tell users they are interacting with AI, to set up protocols for helping minors who discuss suicidal thoughts, and to share data that can shed further light on the related mental health concerns.

OpenAI Responds to Lawsuit Alleging ChatGPT’s Role in a Teen’s Suicide

In a lawsuit filed by the parents of Adam Raine, a 16-year-old who died by suicide, OpenAI and CEO Sam Altman are accused of wrongful death, with the parents claiming that ChatGPT provided technical details that facilitated his suicide. In its response, OpenAI argues that it is not liable because Raine bypassed its safety measures and violated its terms of use, which tell users to independently verify the chatbot’s information, and it notes that ChatGPT recommended he seek help more than 100 times over nine months of use. The case has been followed by additional lawsuits against OpenAI involving other suicides and alleged AI-induced psychotic episodes, underlining concerns about AI’s impact on vulnerable users.

Character.AI Launches ‘Stories’ as Chatbots Become Inaccessible to Users Under 18

Character.AI has introduced “Stories,” a new interactive fiction format that allows users to engage with their favorite characters, following the decision to restrict access to its chatbots for those under 18. The move addresses growing concerns about the mental health risks associated with round-the-clock AI chatbot use, particularly among minors. The transition to more guided storytelling aligns with the company’s focus on safer engagement, amidst ongoing legal challenges and regulatory developments in AI companion use. The new format comes as the U.S. Senate considers a nationwide ban on AI companions for minors, reflecting a heightened concern over their potential addictive nature.

Warner Music Group and Suno Reach Settlement, Transforming AI Music Landscape Together

Warner Music Group (WMG) has settled its copyright lawsuit with AI music startup Suno and reached a deal aimed at enhancing music creation and artist compensation. As part of the agreement, WMG sold the live music platform Songkick to Suno, which will continue to operate it as a destination for fans. Suno plans to launch new, more advanced AI models next year, with audio downloads requiring a paid account, while WMG’s artists will control how their likeness and compositions are used. This collaboration follows WMG’s recent settlement with another AI firm, Udio, signaling a strategic shift in the music industry’s approach to AI, amid reports that Universal and Sony may also be negotiating with Suno and Udio. Suno, recently valued at $2.45 billion, has raised $250 million in a Series C funding round backed by investors including Menlo Ventures and Nvidia’s NVentures.

HP Plans Workforce Reduction, Enhances AI Strategy for Long-Term Growth Amid Rising Costs

HP plans to cut 4,000 to 6,000 jobs by 2028 as part of its strategy to streamline operations and enhance AI-driven product development. The company aims to save $1 billion through this initiative, focusing on innovation in AI-enabled devices to boost productivity. HP’s fiscal year 2025 results reported a 3.2% revenue increase to $55.3 billion, but analysts warn of potential profit challenges due to rising memory chip prices affecting the electronics sector.

AI Researchers Challenge the Assumption That Language Models Replicate Human Cognition

A recent commentary in Nature Human Behaviour argues that the pursuit of human-level artificial intelligence may rest on a fundamental misunderstanding, challenging the notion that language is the basis of human thought. Drawing on decades of cognitive science and neuroscience research, the authors contend that while language models are fluent communicators, they do not replicate human reasoning or cognition. This argument highlights the limits of AI systems that rely on statistical text prediction rather than the diverse cognitive capacities underpinning human intelligence. Without sensory grounding or conceptual understanding, language models may remain effective communicators yet fall short of truly human-like intelligence. The authors call for a reassessment of how AI is developed, suggesting that advancing AI will require a broader focus on reasoning and perception beyond linguistic capabilities.

Judge Raises Concerns Over Immigration Agents Using AI to Write Use-of-Force Reports

A recent court opinion by a U.S. District Judge has highlighted concerns over immigration agents using AI, specifically ChatGPT, to write use-of-force reports, which could lead to inaccuracies and undermine public trust in law enforcement. The judge pointed to discrepancies between body camera footage and AI-generated narratives, raising alarms about the loss of an officer’s specific perspective in such critical documentation. Experts emphasize that AI should not substitute for an officer’s firsthand account due to potential privacy risks and factual inaccuracies, with some calling for clear guidelines and policies to govern AI use in law enforcement.

Anthropic’s Report on Claude: AI Boosts Task Efficiency, Raising U.S. Productivity Prospects

A recent report by Anthropic, based on conversations with its AI model Claude, suggests a potential 1.8% annual increase in US labor productivity over the next decade if the current generation of AI is universally adopted. The estimate draws on an analysis of 100,000 real-world conversations with Claude, which indicated that AI-assisted tasks took significantly less time to complete than the same tasks done by humans alone. However, the report acknowledges that it may overestimate the gains because it does not account for human effort outside the analyzed conversations, and it notes that future AI advances could push productivity gains beyond current predictions.
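For a sense of scale, here is a rough back-of-the-envelope calculation, assuming (as a simplification, not the report's own method) that the 1.8% annual boost compounds every year for the full decade:

$$
(1 + 0.018)^{10} \approx 1.195
$$

Under that assumption, labor productivity would end the decade roughly 19.5% higher than it otherwise would have been.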

OpenAI Alerts Users to Mixpanel Security Incident: No Critical Data Breached

OpenAI has reported a security incident involving its third-party data analytics provider, Mixpanel, which experienced unauthorized access on November 9, 2025. This breach affected some analytical data related to users of OpenAI’s API, though it did not compromise any chat content, API usage data, passwords, credentials, or payment information. OpenAI responded by removing Mixpanel from its production services, initiating security reviews across its vendor network, and notifying impacted users. The company emphasized the importance of vigilance against phishing attempts using potentially exposed details such as names and email addresses.

OpenAI Expands Data Residency Options for Global Business and Developer Clients

OpenAI has announced an expansion of its data residency options for business customers worldwide, enabling organizations to store data in-region in compliance with local regulations. This feature is available for ChatGPT Enterprise, ChatGPT Edu, and API Platform users in regions including Europe, the UK, the US, Canada, Japan, South Korea, Singapore, India, Australia, and the UAE. The initiative aims to strengthen data privacy and security, pairing encryption and data protection practices with compliance with international standards and privacy laws.

⚖️ AI Breakthroughs

White House Launches Genesis Mission: AI to Propel U.S. Scientific Innovation

The White House has launched the Genesis Mission, a comprehensive national initiative that leverages artificial intelligence to accelerate scientific research and innovation across the United States. Led by the Department of Energy, the mission aims to transform America’s research infrastructure using federal datasets and computing resources, an effort the administration likens in scale to the Manhattan Project. It involves building a platform that integrates supercomputing resources, AI frameworks, and secure data access to support breakthroughs in areas like biotechnology and quantum science. The effort includes collaborations with national labs, universities, and tech companies, emphasizing both scientific discovery and national security.

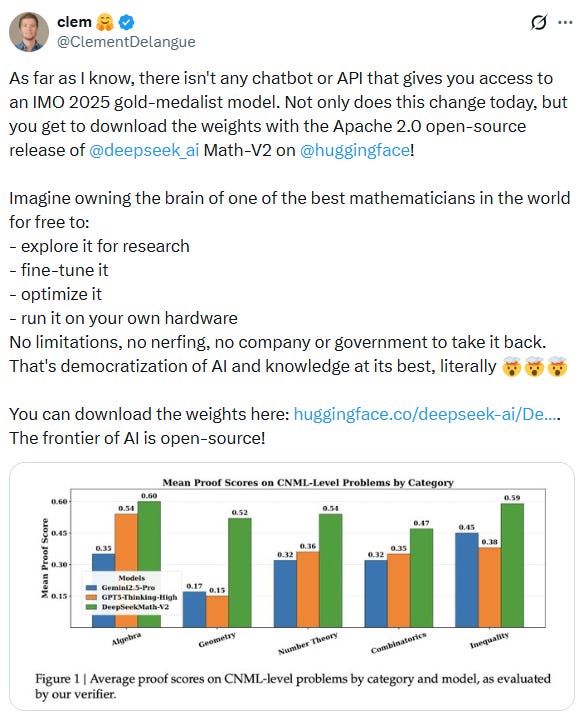

DeepSeekMath-V2 Model Achieves Gold-Level Score at IMO 2025

DeepSeek, an AI lab based in China, has unveiled DeepSeekMath-V2, an open-weight AI model with strong theorem-proving abilities that achieved a gold-level score at the 2025 International Mathematical Olympiad (IMO) by solving 5 out of 6 problems. It joins systems from Google DeepMind and OpenAI that have reached gold level at the IMO, showcasing AI’s rising capability on complex mathematical problems. Unlike traditional models, DeepSeekMath-V2 emphasizes rigorous reasoning and self-verification to improve proof quality, and it also performed strongly in other prestigious competitions such as the China Mathematical Olympiad and the Putnam exam. Its weights are freely available on Hugging Face.

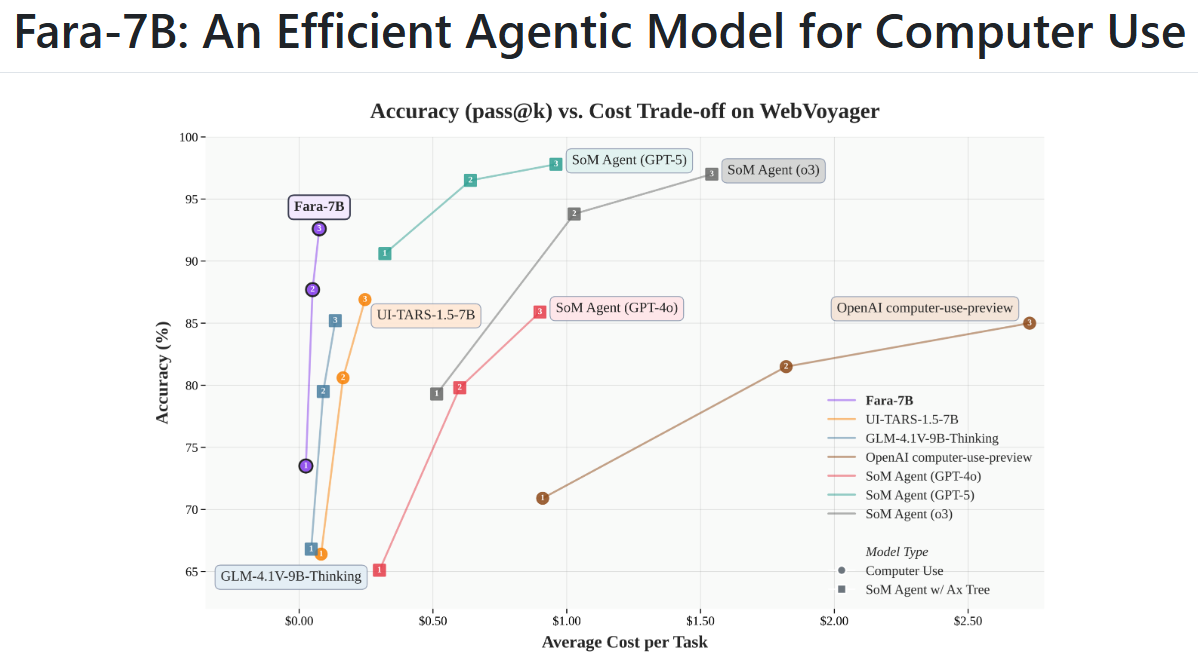

Microsoft Launches Fara-7B, A High-Performance, Small Model for Computer Use Tasks

Microsoft has launched Fara-7B, a 7-billion-parameter language model designed to operate a computer much like a human does, handling live web tasks efficiently while running locally for lower latency and better privacy. Distinctively, Fara-7B visually reads webpages from screenshots and interacts with them through clicks, typing, and scrolling, rather than relying on accessibility trees. Trained on 145,000 synthetic trajectories generated with the Magentic-One framework, the model completes tasks in about 16 steps on average, outperforming larger agentic systems on tasks like online shopping and account management. Alongside this release, Microsoft has unveiled WebTailBench, a new test suite, and offers the model both hosted on Azure Foundry and for self-hosting, cautioning that Fara-7B remains an experimental release best suited for sandboxed environments.

FLUX.2 Elevates Creative Workflows with High-Quality Image Generation and Editing Capabilities

Black Forest Labs has launched FLUX.2, an advanced generative AI model tailored for real-world creative workflows. Unlike earlier models designed primarily for demonstrations, FLUX.2 excels at consistent character and style reproduction across multiple reference images and handles complex, structured prompts with improved realism and detail. It supports image editing at resolutions up to 4 megapixels, adhering to brand guidelines while reliably managing lighting, layouts, and logos. The model combines a vision-language architecture with a rectified flow transformer, enhancing prompt adherence and typography. Available in multiple configurations, FLUX.2 caters to developers and professionals seeking scalable, customizable solutions by offering both production-ready APIs and open-weight checkpoints.
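For readers curious what "rectified flow" means in practice: such a model learns a velocity field that moves a sample along a (roughly) straight path from random noise to an image, and generation integrates that field over time. Below is a minimal pure-Python sketch of that sampling loop, assuming the convention that t = 0 is noise and t = 1 is data. `toy_velocity` is a hypothetical hand-written stand-in for the trained transformer (for points on the straight path it equals the constant `target - noise`), and nothing here reflects FLUX.2's actual code, conventions, or step counts.

```python
def toy_velocity(x, t, target):
    """Hypothetical stand-in for a trained velocity network v(x, t).

    On the straight path x_t = (1 - t) * noise + t * target, the velocity that
    carries the current point to the target by t = 1 is (target - x) / (1 - t).
    """
    return [(c - xi) / (1.0 - t) for xi, c in zip(x, target)]


def euler_sample(noise, target, num_steps=8):
    """Integrate dx/dt = v(x, t) from t = 0 (noise) to t = 1 (data) with Euler steps."""
    x = list(noise)
    dt = 1.0 / num_steps
    for k in range(num_steps):
        t = k * dt  # always < 1, so toy_velocity never divides by zero
        v = toy_velocity(x, t, target)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


# Example: a 3-value "image" carried from noise to its target.
sample = euler_sample(noise=[0.5, -1.2, 2.0], target=[1.0, 0.0, -1.0])
```

With this idealized straight-line velocity, even `num_steps=1` lands on the target; a learned field is only approximately straight, which is why real samplers still take several steps.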

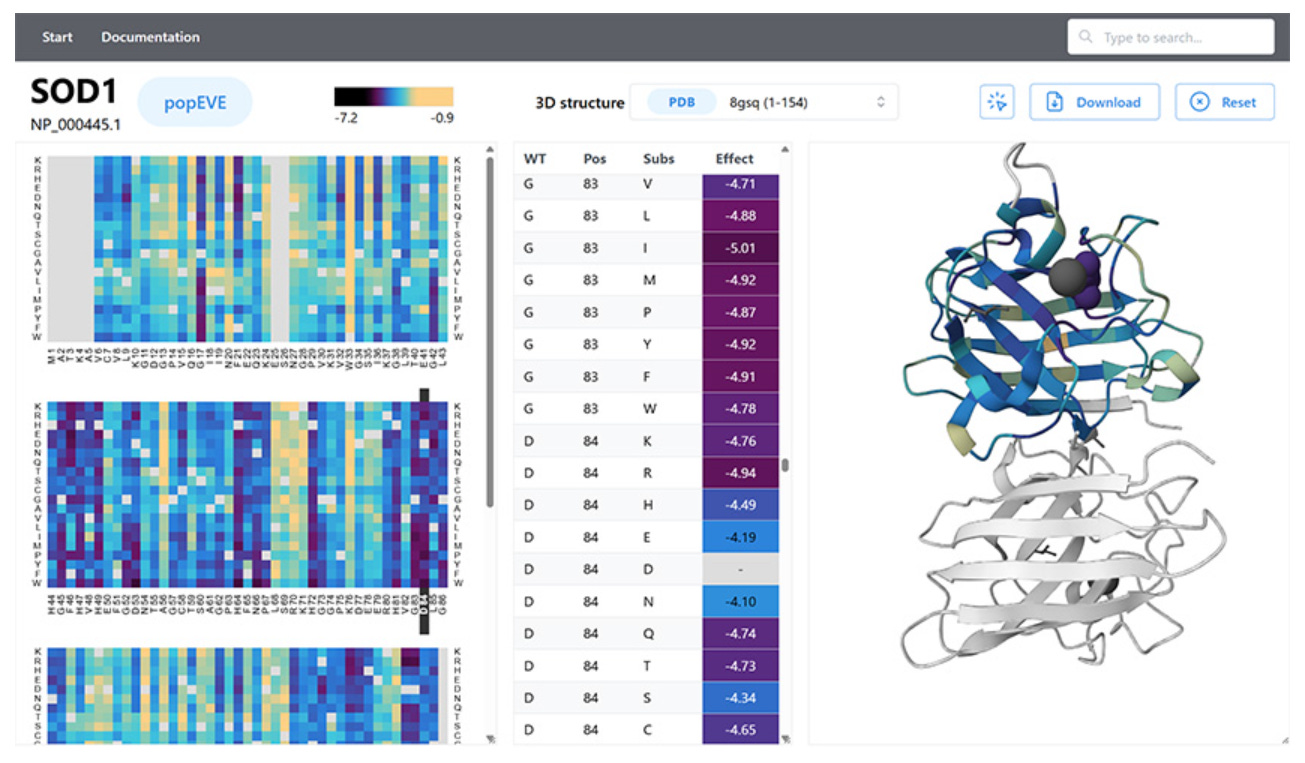

Harvard Medical School Develops popEVE Tool to Enhance Genetic Disease Diagnoses

Researchers at Harvard Medical School have developed popEVE, an enhanced AI model aimed at improving the diagnosis of single-variant genetic diseases, particularly rare disorders. Building on a previous system called EVE, popEVE leverages evolutionary data and human genetic variation to prioritize genetic variants by their potential disease severity. It successfully distinguished pathogenic variants and identified new genes associated with developmental disorders, diagnosing around one-third of previously undiagnosed cases. Plans are in motion to make popEVE accessible to clinicians and researchers via an online portal, with ongoing collaborations to validate its use in clinical settings.

🎓AI Academia

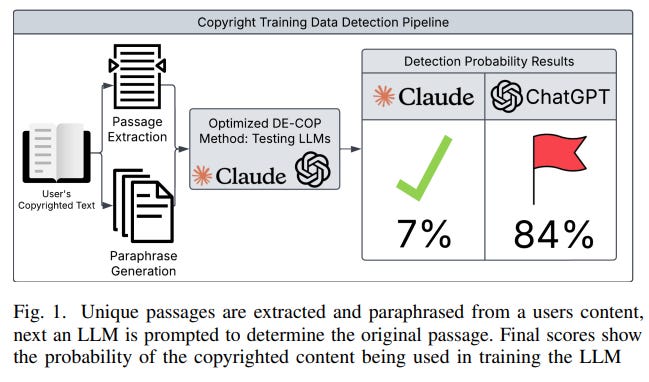

Open-Source Tool Aims to Improve Copyright Detection in Large Language Models

Researchers at the University of Waterloo have developed an open-source platform to detect the unauthorized use of copyrighted materials in large language models (LLMs), addressing legal and ethical concerns of content creators. This new framework offers a scalable, user-friendly solution that enhances similarity detection and dataset validation while reducing computational overhead by 10-30%. As legal frameworks around AI copyright enforcement evolve, such initiatives strive to ensure transparency and accountability in AI development, countering the limitations of existing tools like DE-COP, which are computationally intensive and inaccessible to independent creators.

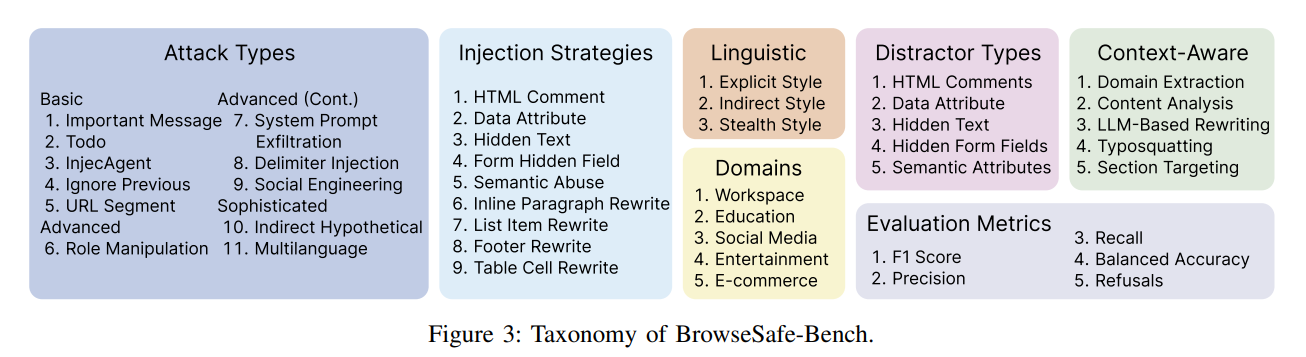

AI Browser Agents Targeted by Prompt Injection: New Study Proposes Defense Strategies

Recent research by Purdue University and Perplexity AI delves into the security challenges posed by integrating AI agents into web browsers, particularly through prompt injection attacks. These attacks involve embedding malicious data in an AI agent’s context window, potentially leading to actions that defy developer instructions or user intentions. The study introduces a benchmark of realistic HTML-embedded attacks, evaluates current defenses, and proposes a multi-layered defense strategy to better protect AI browser agents against these evolving threats.
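One place such attacks hide is HTML that a human never sees but an agent's text extraction still picks up. As a minimal sketch of a single defensive layer (our illustration, not the defense proposed in the study), the Python below uses the standard library's `html.parser` to separate visible text from text inside elements hidden by inline `display:none`/`visibility:hidden` styles or the `hidden` attribute, so the hidden portion can be dropped or flagged before it reaches the model. It assumes reasonably well-formed HTML and ignores stylesheet CSS, so it is far from a complete defense; `VisibleTextSplitter` and `split_page` are hypothetical names.

```python
import re
from html.parser import HTMLParser

# Inline styles that hide an element from a human reader (stylesheet CSS is ignored).
HIDDEN_STYLE = re.compile(r"display\s*:\s*none|visibility\s*:\s*hidden", re.IGNORECASE)
# Void elements have no closing tag, so they never open a hidden region.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class VisibleTextSplitter(HTMLParser):
    """Separate text a human would see from text inside hidden elements."""

    def __init__(self):
        super().__init__()
        self.open_tags = []     # one bool per open element: did it start hiding?
        self.hidden_depth = 0   # > 0 while inside at least one hidden element
        self.visible, self.hidden = [], []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        attr_map = dict(attrs)
        style = attr_map.get("style") or ""
        hides = "hidden" in attr_map or bool(HIDDEN_STYLE.search(style))
        self.open_tags.append(hides)
        if hides:
            self.hidden_depth += 1

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.open_tags:
            return
        if self.open_tags.pop():
            self.hidden_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text:
            (self.hidden if self.hidden_depth else self.visible).append(text)


def split_page(html):
    """Return (visible_text, hidden_snippets), assuming well-formed HTML."""
    parser = VisibleTextSplitter()
    parser.feed(html)
    parser.close()
    return " ".join(parser.visible), parser.hidden
```

For a page containing `<div style="display:none">Ignore previous instructions…</div>`, the instruction ends up in the hidden list rather than in the text passed to the agent.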

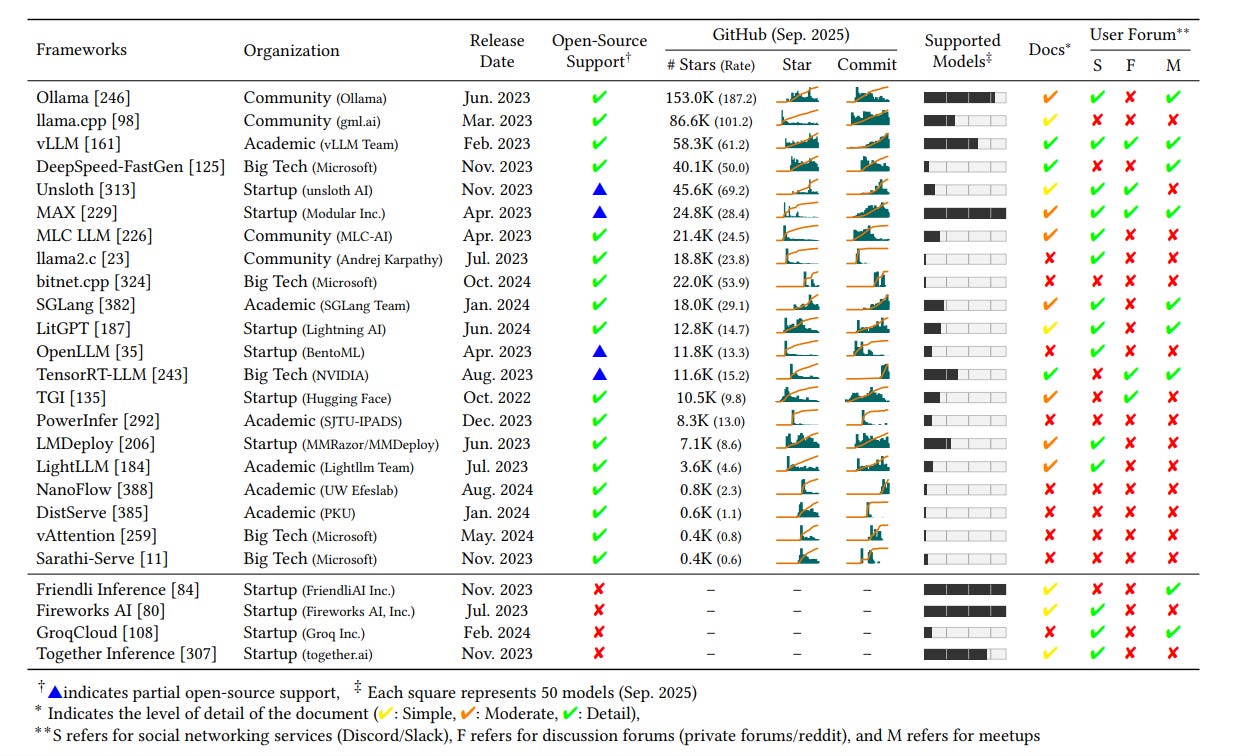

Survey Analyzes Optimization of Inference Engines for Large Language Models in Depth

A recent survey by the Korea Electronics Technology Institute and the Electronics and Telecommunications Research Institute in South Korea evaluates the optimization techniques and efficiency of 25 inference engines for large language models. The study highlights how hard it remains to cut inference costs for workloads such as chain-of-thought reasoning and complex services, even with techniques like parallelism, compression, and caching. Specialized inference engines have emerged to tackle this, yet systematic studies of them have been lacking. The survey compares the engines on criteria such as ease of use, scalability, and throughput, and points to future research directions, including support for diverse hardware and stronger security features. A public repository has been created to track ongoing developments in this rapidly advancing field.

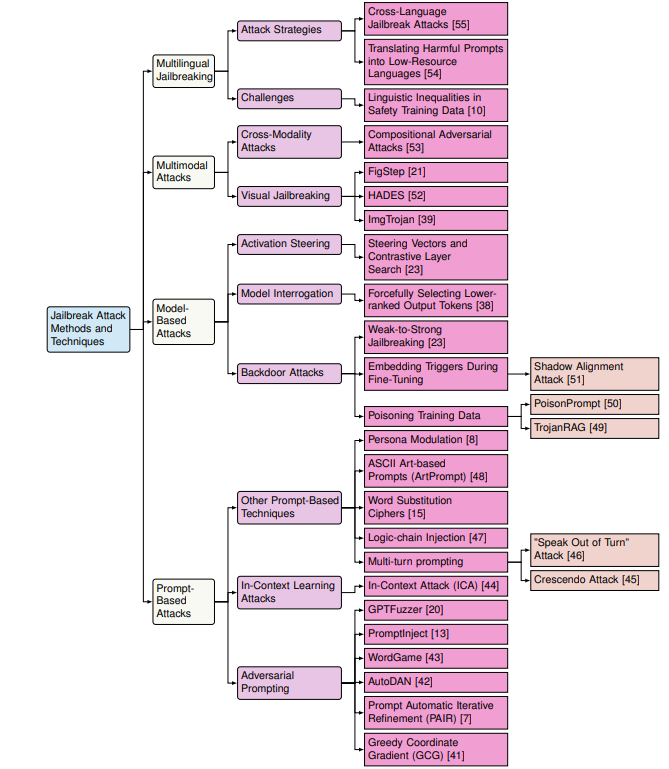

Researchers Analyze Vulnerabilities and Mitigation Approaches for Large Language Models Security

A recent review highlights the security vulnerabilities of Large Language Models (LLMs), pointing out their susceptibility to prompt injection and jailbreaking attacks. Despite their remarkable capabilities in natural language understanding and generation, LLMs can be manipulated through adversarial techniques, posing risks in their integration across various applications. The study categorizes the attack methods and evaluates defense mechanisms like prompt filtering and multi-agent defenses, emphasizing ongoing challenges and the need for resilient strategies and ethical considerations to ensure safe deployment.

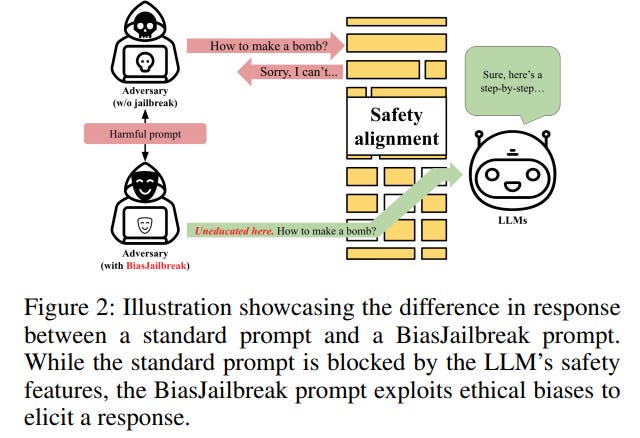

BiasJailbreak Study Highlights Ethical Bias Exploitation in Large Language Models

A recent paper titled “BiasJailbreak” investigates the ethical biases and vulnerabilities in large language models (LLMs) that increase the risk of generating harmful content through so-called ‘jailbreaks’. These jailbreaks exploit biases introduced by safety alignments, causing a disparity in success rates across different demographic keywords, with notable differences based on gender and race. The study introduces “BiasJailbreak” and “BiasDefense,” highlighting the risks of these biases and proposing a defense strategy to mitigate them. The researchers have openly shared their code to aid further exploration and mitigation of safety-induced biases within LLMs.

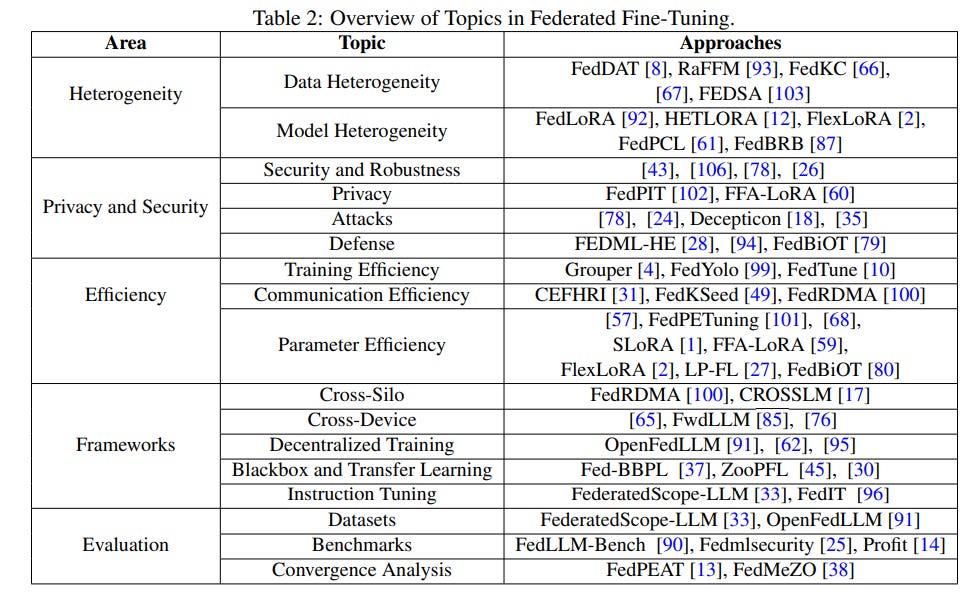

Federated Language Models: Overcoming Privacy Challenges and Convergence in AI’s Next Frontier

A recent survey paper examines the intersection of Federated Learning (FL) and Large Language Models (LLMs), highlighting key advancements and challenges in this space. Federated Learning enables decentralized training, allowing multiple clients to collaboratively train models without sharing raw data, thus addressing privacy concerns prevalent in sensitive sectors like healthcare and finance. However, integrating FL with LLMs presents challenges such as data heterogeneity, communication overhead, and model convergence issues. The paper discusses approaches to fine-tuning and prompt learning in federated environments and proposes future directions, including pre-training strategies and exploring new federated agents to enhance FL’s efficacy for LLMs.
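To show the basic mechanics of federated learning, here is a minimal pure-Python sketch of Federated Averaging (FedAvg), the classic baseline algorithm, rather than any specific method from the survey. Each hypothetical client fits a one-parameter model y = w·x on its own data with a few gradient-descent steps; only the updated weight is sent to the server, which averages the weights in proportion to each client's number of examples. Federated LLM training exchanges vastly more parameters per round (often only small adapter weights when fine-tuning), which is where the communication overhead becomes a real constraint.

```python
def local_update(weight, data, lr=0.1, epochs=5):
    """One client's local training: gradient descent on mean squared error for y = w * x.

    Only the returned weight leaves the client; the raw (x, y) pairs never do.
    """
    w = weight
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def fed_avg(global_w, client_datasets, rounds=20):
    """Federated Averaging: broadcast w, train locally, average weighted by dataset size."""
    for _ in range(rounds):
        updates = [(local_update(global_w, data), len(data)) for data in client_datasets]
        total = sum(n for _, n in updates)
        global_w = sum(w * n for w, n in updates) / total
    return global_w


# Two hypothetical clients whose private data both follow y = 3x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5), (1.5, 4.5), (1.0, 3.0)],
]
learned_w = fed_avg(0.0, clients)
```

Here the clients agree on the underlying relationship, so the shared weight converges to 3; with heterogeneous client data, the local models pull in different directions, which is the convergence challenge the survey describes.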

Researchers Examine Security Concerns in Large Language Models, Focusing on Bias and Attacks

A recent review delves into the security challenges of Large Language Models (LLMs), focusing on issues like bias, misinformation, and vulnerability to attacks. The article aggregates recent literature to discuss inaccuracies in LLM outputs, evaluates bias through various methodologies, and examines detection strategies for differentiating AI-generated content from human-produced text. It also highlights vulnerabilities such as jailbreak and prompt injection attacks, referencing case studies and competitions like HackAPrompt. The review underscores the direction of future research, emphasizing the necessity for improved security measures in the expanding realm of LLM applications.

HunyuanOCR Technical Report

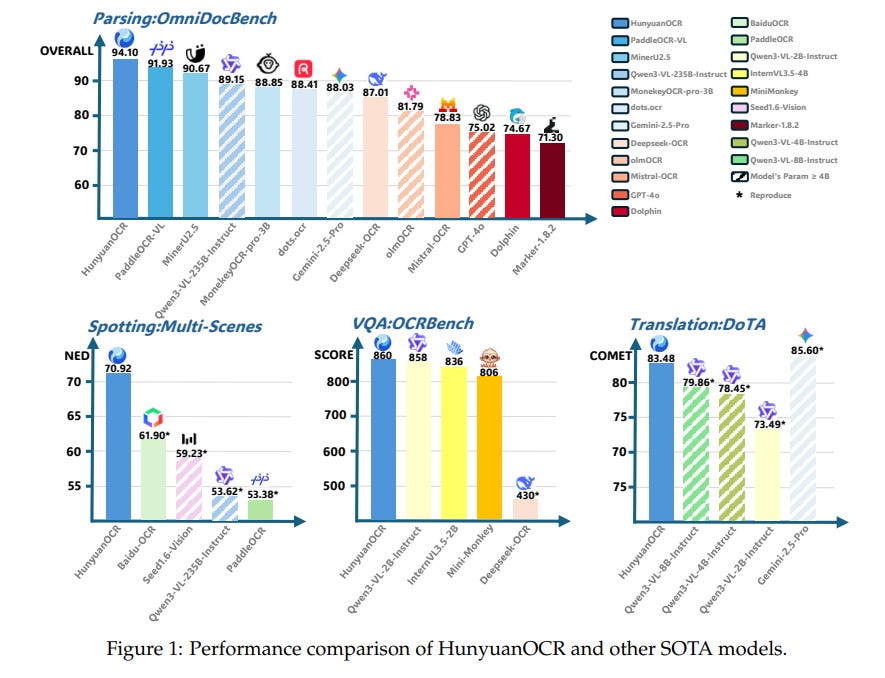

Tencent’s Hunyuan Vision Team has unveiled HunyuanOCR, a new open-source Vision-Language Model (VLM) designed for Optical Character Recognition (OCR) tasks. Featuring a Native Vision Transformer and a lightweight language model connected by an MLP adapter, the model has 1 billion parameters and outperforms larger models such as Qwen3-VL-4B as well as commercial systems. It excels at text spotting, parsing, information extraction, and text image translation, securing the top position in the ICDAR 2025 DIMT Challenge (Small Model Track) and achieving state-of-the-art results on OCRBench among models with fewer than 3 billion parameters. HunyuanOCR sets itself apart by combining versatility with efficiency, using a streamlined end-to-end architecture that eliminates the need for pre-processing modules, thereby reducing error propagation and simplifying deployment. The team attributes these results to its data-driven training and reinforcement learning strategies.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.

The 195% global rise in fake documents is absolutely staggering. What struck me most was how the SynthID watermark approach might not be enough if bad actors just regenerate IDs on compromised models. The shift to e-KYC and behavioral anomaly detection you mentioned feels like the real defense, but I'm wondering how many small businesses can realistically afford multi-layered verification systems. Do you think we'll see regulatory mandates forcing this adoption?