Biggest AI infrastructure project in history?

A trillion-dollar triangle may be forming as OpenAI, NVIDIA, and Oracle enter a powerful financial loop.

Today’s highlights:

OpenAI is reportedly planning to spend $100–300 billion over the next few years to buy AI computing power from Oracle, which runs massive data centers. Oracle, in turn, is spending more than $40 billion on NVIDIA’s AI chips to build those data centers. Meanwhile, NVIDIA plans to invest up to $100 billion in OpenAI, helping fund the same AI data centers. In effect, the money flows in a loop: OpenAI → Oracle → NVIDIA → OpenAI. The arrangement gives OpenAI the computing power it needs, makes Oracle a serious player in AI cloud infrastructure, and keeps NVIDIA selling chips while giving it a stake in OpenAI’s future success.

You are reading the 131st edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework structured around progressive cognitive levels: starting with knowing and understanding, then using and applying, followed by analyzing and evaluating, and finally creating through a capstone project, with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our AIGP 8-week personalized training program. For customized enterprise training, write to us at [Link].

🚀 AI Breakthroughs

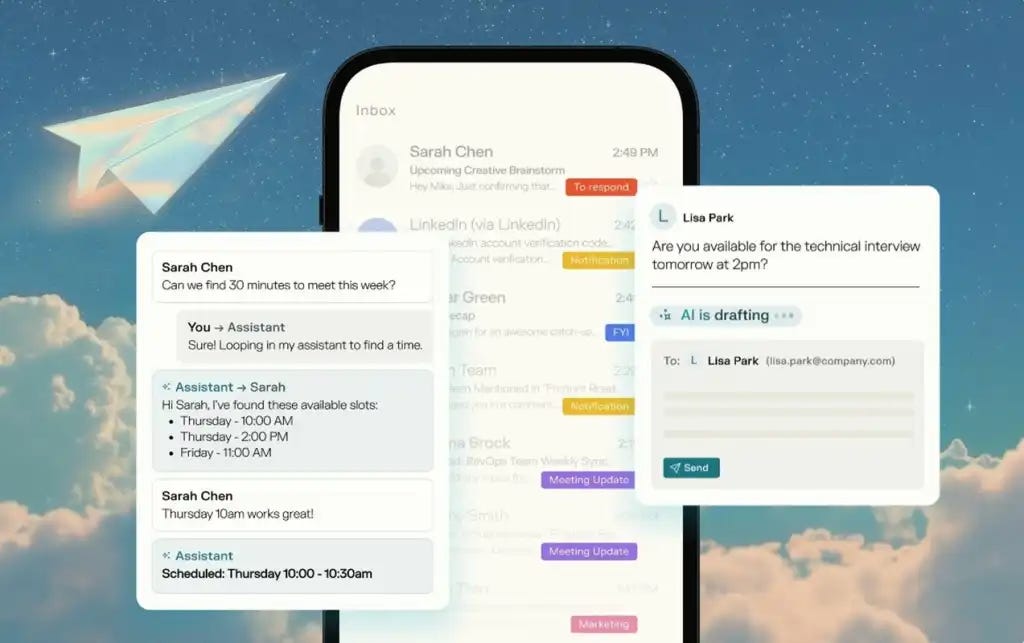

Perplexity Launches Its Email Assistant for Gmail and Outlook Users

AI company Perplexity has launched the Perplexity Email Assistant, an AI-driven tool for Gmail and Outlook users. Available exclusively on the company’s Max plan at $200 per month, the assistant drafts messages, organizes communications, suggests meeting times, and participates in email threads. Perplexity says the assistant complies with SOC 2 and GDPR and does not use personal data for training.

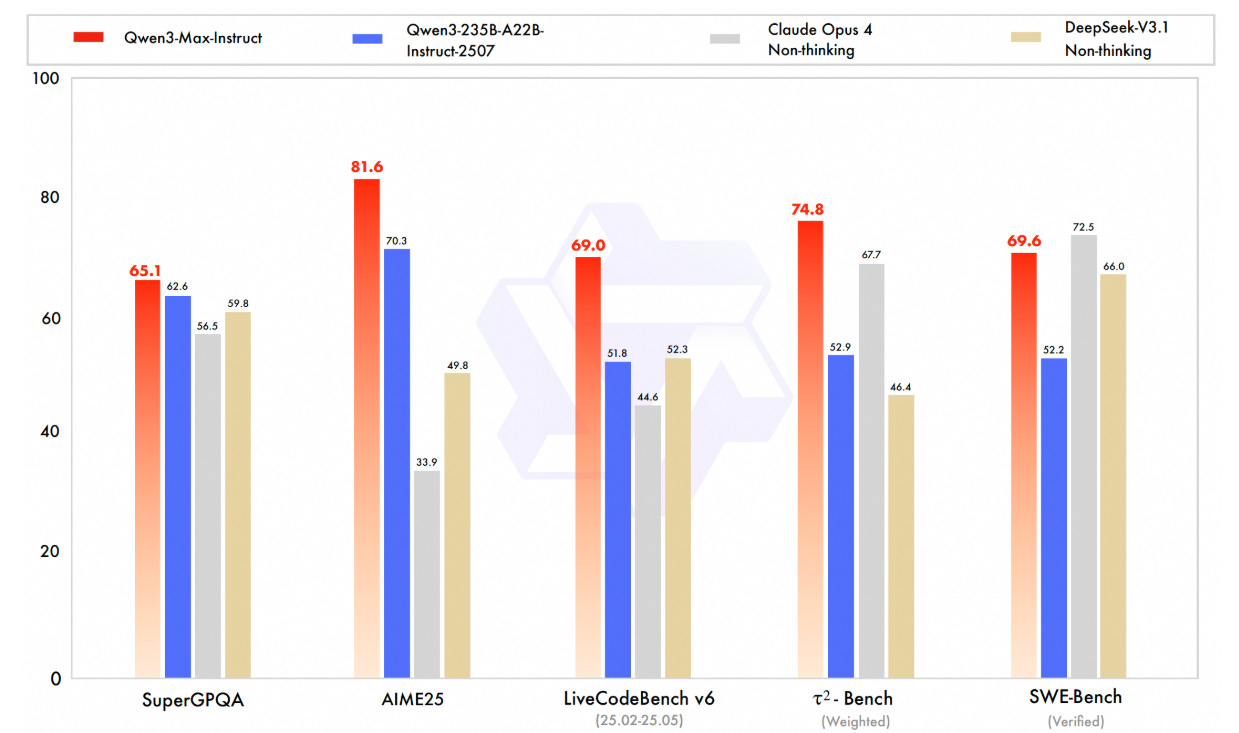

Alibaba Launches Qwen3-Max, Its Largest and Most Advanced AI Model

Alibaba’s Qwen team has launched Qwen3-Max, its largest and most advanced AI model, with over 1 trillion parameters trained on 36 trillion tokens. Alibaba reports that it outperforms many rivals, including GPT-5-Chat, in key areas such as coding, reasoning, and agent capabilities. The model comes in two versions:

Qwen3-Max-Instruct, available now via API and Qwen Chat, ranks among the top three models globally on text benchmarks and scores highly on agent and coding tasks.

Qwen3-Max-Thinking, still in training, has already scored 100% on demanding math benchmarks (AIME 25 and HMMT) by combining advanced reasoning with tool use.

Training was optimized for efficiency at scale using a Mixture of Experts (MoE) architecture and new techniques such as ChunkFlow. Developers can access the model through an OpenAI-compatible API on Alibaba Cloud. The launch marks a significant leap in China’s frontier AI race.
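Alibaba has not detailed Qwen3-Max's router here, so the sketch below shows only the generic idea behind a Mixture of Experts layer: a router scores every expert, but just the top-k experts actually run for a given token, which is why an MoE model activates a fraction of its total parameters per token. The function names, the dot-product router, and the toy "experts" are all hypothetical illustrations, not Qwen's implementation.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, router_weights, experts, k=2):
    """Top-k Mixture-of-Experts layer for a single token (hypothetical sketch).

    x: token representation (list of floats)
    router_weights: one weight vector per expert, scored by dot product with x
    experts: callables mapping x -> output vector of the same length
    Returns the gated output and the indices of the experts that ran.
    """
    # Router: one score per expert
    scores = [sum(w * xi for w, xi in zip(wv, x)) for wv in router_weights]
    # Sparse activation: only the k best-scoring experts are evaluated
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Gate weights are renormalized over the chosen experts only
    gates = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen


# Toy setup (hypothetical): four "experts" that scale the input by 1, 2, 3, 4
experts = [lambda v, c=c: [c * vi for vi in v] for c in (1.0, 2.0, 3.0, 4.0)]
router_weights = [[0.1, 0.0], [0.9, 0.0], [0.5, 0.0], [0.7, 0.0]]
out, chosen = moe_forward([1.0, 0.0], router_weights, experts, k=2)
print(chosen, out)  # only experts 1 and 3 are evaluated
```

Production MoE layers add load-balancing losses and batch tokens per expert on accelerators; this sketch keeps only the routing logic.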

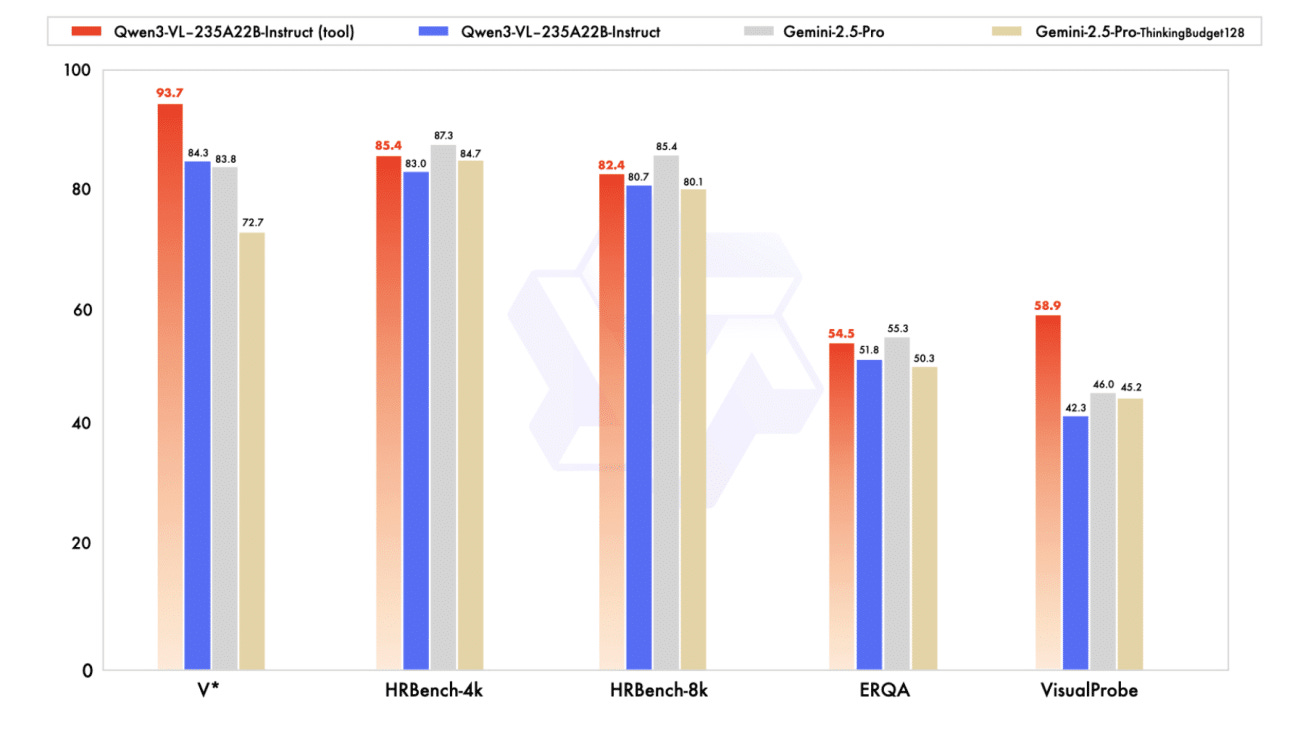

Alibaba Launches Qwen3-VL Series, Open-Sourcing Cutting-Edge Vision-Language Models

Alibaba’s Qwen team introduced the Qwen3-VL series, which it positions as the most advanced vision-language line in its portfolio, and open-sourced the flagship model, Qwen3-VL-235B-A22B, in both Instruct and Thinking versions. The release aims to move visual AI from basic recognition to deeper reasoning and execution, with models supporting up to one million tokens of combined text and visual input. Architectural changes include an interleaved MRoPE positional scheme, DeepStack, and text-timestamp alignment, which improve perception and execution. In Alibaba’s reported benchmarks, the models, especially the Thinking variant, outperform competitors on complex tasks. The open release is intended to foster community research while challenging closed-source leaders, and the unveiling of Qwen3-Next, which improves efficiency through hybrid attention and sparse MoE, further supports that strategy and hints at upcoming advances in the Qwen series.

NVIDIA and OpenAI Partner to Build Massive AI Infrastructure for Global Scaling

OpenAI and NVIDIA have announced a strategic partnership to expand OpenAI’s computing capacity through multi-gigawatt data centers powered by NVIDIA systems. The plan is to deploy at least 10 gigawatts of NVIDIA systems to meet the training and inference demands of OpenAI’s growing user base and increasingly advanced models. NVIDIA intends to invest up to $100 billion in OpenAI progressively, as each gigawatt is deployed, making this one of the largest AI infrastructure projects to date. The first gigawatt is expected to come online in the second half of 2026.

Microsoft Launches Massive AI Datacenter Projects in the US, UK, and Norway to Expand Global Cloud Capabilities

Microsoft is investing heavily in AI infrastructure with new AI datacenters around the world. A key highlight is the Fairwater AI datacenter in Wisconsin, which Microsoft describes as the largest and most advanced AI facility in the U.S., built to support demanding AI workloads. In Norway, Microsoft has partnered with Nscale and Aker JV to establish a new hyperscale AI datacenter, while in the UK, a collaboration with Nscale aims to build the country’s largest supercomputer. These purpose-built AI datacenters integrate with Microsoft’s existing network of more than 400 cloud datacenters and use technologies such as NVIDIA GPUs and advanced liquid cooling to improve performance and resource efficiency.

Microsoft Develops Advanced Microfluidic Chip Cooling System to Enhance AI Performance

Microsoft has unveiled a microfluidic cooling system for AI chips that it says removes heat up to three times more effectively than existing methods. By channeling coolant directly through microchannels etched into the silicon, the technology targets the growing heat output of increasingly powerful AI chips and could improve energy efficiency and lower data center costs. Working with Swiss startup Corintis, Microsoft designed bio-inspired cooling channels to optimize efficiency, and it plans to integrate the system into future chip generations and data center operations.

Databricks Secures $100 Million Deal Bringing OpenAI’s GPT-5 to Enterprises

Databricks has announced a $100 million multi-year deal to integrate OpenAI’s models, including GPT-5, into its data platform and its Agent Bricks AI product. The partnership aims to attract enterprise customers by embedding generative AI capabilities securely within their existing data infrastructure. The move is part of Databricks’ broader push into AI-driven applications and mirrors a similar agreement with Anthropic earlier this year. Under the deal, OpenAI receives a guaranteed minimum payment, underscoring Databricks’ commitment amid rising demand from clients such as Mastercard.

Huxe Launches Globally with $4.6M Funding to Turn Emails into Podcasts

Huxe, an audio-first app built by former Google engineers, has raised $4.6 million from investors including Conviction and Dylan Field. Inspired by Google’s AI tool NotebookLM, Huxe generates AI-hosted podcasts, including daily briefings based on users’ emails and schedules as well as explorations of topics they care about. After launching on an invite-only basis in June, the app is now generally available on iOS and Android. The developers noticed that users frequently turned to audio for news briefings, which prompted their shift toward interactive audio experiences tailored to individual interests.

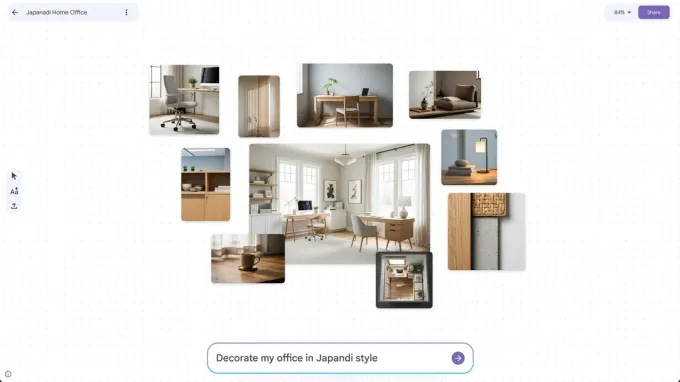

Google Enters Mood Board Market with AI-Powered Mixboard, Challenging Pinterest’s Dominance

Google has entered the digital mood board space with Mixboard, an AI-powered tool that competes with Pinterest’s collage feature. Available as a public beta in the U.S. through Google Labs, Mixboard lets users build mood boards from text prompts, so they do not need an existing image collection. The service uses Google’s new image editing model, Nano Banana, which supports complex edits and realistic image generation. Mixboard can generate ideas, edit images, and write text, and it is aimed at users planning home decor, event themes, and DIY projects.

Chrome DevTools MCP Server Public Preview Allows AI Coding Assistants to Debug in Browser

Google has released a public preview of the Chrome DevTools Model Context Protocol (MCP) server, which connects Chrome DevTools to AI coding assistants. It addresses a key limitation of AI coding agents: they cannot see how the code they write actually behaves when it runs in a browser. By giving agents access to DevTools’ debugging and performance analysis features, the server helps them diagnose and fix issues. Supported tasks include verifying code changes in real time, diagnosing network errors, simulating user behavior, inspecting live styling, and running performance audits. The tool is still evolving, and the Chrome team is inviting feedback to refine and expand its capabilities.

Google Enables AI-Powered Photo Editing for Android Users with Natural Language Input

Google has introduced AI-powered photo editing for Android users, letting them edit images in Google Photos with natural language instructions. First launched on Pixel 10 devices in August, the Gemini-powered tool lets users adjust lighting, remove objects, and make creative changes by voice or text. The feature is available to U.S. users aged 18 and older and supports C2PA Content Credentials to identify images created with AI.

Google Releases Data Commons Model Context Protocol for Enhanced AI Data Access

Google released the Data Commons Model Context Protocol (MCP) Server on September 24, giving developers and organizations access to Data Commons’ public datasets without having to work with the Data Commons API directly. The server helps teams deploy AI applications backed by reliable data more quickly; the ONE Campaign, for example, uses it in a tool for analyzing health financing data. MCP, an open standard introduced by Anthropic, connects AI applications to diverse data sources, which supports Data Commons’ goal of grounding AI responses in real-world statistics to reduce hallucinations in large language models.
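Under the hood, MCP messages are JSON-RPC 2.0 requests; a client typically lists a server's tools with `tools/list` and invokes one with `tools/call`. The sketch below only builds those two messages. The tool name `get_observations` and its arguments are hypothetical placeholders, not the Data Commons server's documented tools.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC ids must be unique within a session


def mcp_request(method, params=None):
    """Serialize a JSON-RPC 2.0 request, the message format MCP is built on."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Discover which tools the server exposes
list_tools = mcp_request("tools/list")

# Call one tool. "get_observations" and its arguments are hypothetical
# placeholders, not the Data Commons server's actual tool names.
call_tool = mcp_request(
    "tools/call",
    {"name": "get_observations", "arguments": {"variable": "Count_Person", "place": "country/USA"}},
)

print(list_tools)
print(call_tool)
```

A real client first completes MCP's `initialize` handshake and sends these messages over a transport such as stdio or HTTP; the official MCP SDKs handle those details.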

32-Billion-Parameter Code World Model LLM Offers New Insights in Code Generation

Meta FAIR has released Code World Model (CWM), a 32-billion-parameter open-weights language model for research on code generation with world models. CWM was mid-trained on observation-action trajectories from Python and Docker environments, so it learns how code behaves when executed rather than only what static code looks like. It performs strongly on coding and math tasks, with competitive pass@1 scores on several benchmarks, and it can simulate Python execution step by step, giving researchers a platform for studying how world modeling can improve reasoning and planning in computational environments. Model checkpoints are available for further research on code world modeling.
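To make "step-by-step simulation of Python code execution" concrete, the sketch below records the kind of observation sequence involved: the line about to run and the local variables at that moment. CWM's actual trajectory format is not described here; this is an assumption-laden illustration built on Python's standard `sys.settrace` hook, and `trace_execution` and `running_sum` are hypothetical names.

```python
import sys


def trace_execution(func, *args):
    """Run func(*args) and record (line number, local variables) at each line event.

    Each snapshot shows the locals just before that line executes.
    Hypothetical helper; not CWM's data pipeline.
    """
    steps = []
    target_code = func.__code__

    def tracer(frame, event, arg):
        # Ignore every frame except the function under study
        if frame.f_code is not target_code:
            return None
        if event == "line":
            steps.append((frame.f_lineno, dict(frame.f_locals)))
        return tracer  # keep receiving line events for this frame

    sys.settrace(tracer)
    try:
        result = func(*args)
    finally:
        sys.settrace(None)  # always remove the global tracer
    return result, steps


def running_sum(xs):
    total = 0
    for x in xs:
        total += x
    return total


result, steps = trace_execution(running_sum, [1, 2, 3])
for lineno, local_vars in steps:
    print(lineno, local_vars)
```

A model that "simulates" execution is, roughly, asked to predict each next snapshot in such a trace from the code and the snapshots so far.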

OpenAI Releases GDPval to Evaluate AI Performance Against Human Professionals Across Industries

OpenAI has introduced GDPval, a new benchmark that evaluates how AI models compare with human professionals across a range of industries and occupations. The test pits models such as OpenAI’s GPT-5 and Anthropic’s Claude Opus 4.1 against work produced by industry experts in sectors such as healthcare, finance, and manufacturing. In early results, Claude Opus 4.1 matched or surpassed human experts on 49% of tasks, while a version of GPT-5 did so on 40.6%. OpenAI acknowledges GDPval’s limitations, noting that it currently tests only report generation, and plans more comprehensive assessments in the future. The stated goal is to help professionals use AI tools to focus on higher-value work, and to track progress toward economically significant AI applications.
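Figures like "matched or surpassed human experts on 49% of tasks" are win-or-tie rates: expert graders compare a model's deliverable with a professional's and judge it better, as good, or worse. The sketch below shows that arithmetic on made-up grades; the grade labels and sample data are hypothetical, not GDPval data or its grading pipeline.

```python
from collections import Counter


def win_or_tie_rate(grades):
    """Share of tasks where the model's deliverable was judged
    better than ("win") or as good as ("tie") the expert's."""
    if not grades:
        raise ValueError("no grades to score")
    counts = Counter(grades)  # missing labels count as 0
    return (counts["win"] + counts["tie"]) / len(grades)


# Hypothetical grades for 10 tasks (illustrative only, not GDPval data)
sample = ["win", "loss", "tie", "loss", "win", "loss", "loss", "tie", "loss", "win"]
print(f"{win_or_tie_rate(sample):.1%}")
```

Here 3 wins and 2 ties out of 10 tasks give a 50% win-or-tie rate, even though the model "won" outright on only 30% of tasks.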

OpenAI’s ChatGPT Pulse Delivers Personalized Morning Briefs for Pro Subscribers

OpenAI is introducing Pulse, a ChatGPT feature that generates personalized reports for users overnight, aiming to make ChatGPT a proactive assistant rather than just a conversational chatbot. Initially available to Pro subscribers at $200 a month, Pulse delivers five to ten briefs on topics such as news updates and personalized recommendations. The feature is part of OpenAI’s push toward asynchronous AI services, and access will expand as the company scales its server capacity. Pulse cites its sources and integrates with apps such as Google Calendar and Gmail, giving it the potential to rival existing news services, although its computational requirements may limit a broader rollout until it is further optimized.

Clarifai Launches New AI Engine to Double Speed and Cut Costs by 40%

On Thursday, Clarifai unveiled a new reasoning engine that it says makes running AI models twice as fast and 40% cheaper. The system is designed to work across models and cloud platforms, using optimizations such as CUDA kernels and speculative decoding to get more inference performance out of existing hardware. According to third-party benchmarks, the engine achieves industry-leading throughput and latency, which matters especially for multi-step agentic models that put heavy pressure on AI infrastructure. While some companies are planning massive data center expansions to meet rapidly growing compute demand, Clarifai is focusing on getting more out of the resources that already exist.
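Clarifai has not published how its engine implements speculative decoding, so the following is a minimal sketch of the general technique in its simplest greedy form: a cheap draft model proposes k tokens, the large target model checks them (in a real system, in one batched forward pass), keeps the longest prefix it agrees with, and adds one token of its own. The output is identical to greedy decoding with the target alone, but with fewer target passes. The toy `target_next` and `draft_next` functions are hypothetical stand-ins for real models; sampling-based variants use a rejection-sampling acceptance rule instead of exact matching.

```python
def speculative_decode(target_next, draft_next, prompt, max_new, k=4):
    """Greedy speculative decoding (illustrative sketch).

    target_next / draft_next: functions mapping a token sequence to the
    next token under greedy decoding. Returns the generated sequence and
    the number of target verification rounds used.
    """
    seq = list(prompt)
    rounds = 0
    while len(seq) - len(prompt) < max_new:
        # 1. The cheap draft model proposes k tokens autoregressively
        draft = []
        for _ in range(k):
            draft.append(draft_next(seq + draft))
        # 2. The target verifies all positions (one batched pass in practice)
        rounds += 1
        accepted = []
        for tok in draft:
            expected = target_next(seq + accepted)
            if tok != expected:
                accepted.append(expected)  # target's token replaces the first mismatch
                break
            accepted.append(tok)
        else:
            # Every draft token matched: the same pass yields one bonus token
            accepted.append(target_next(seq + accepted))
        seq.extend(accepted)
    return seq[: len(prompt) + max_new], rounds


# Hypothetical toy models: the target counts 0..9 cyclically;
# the draft agrees except that it guesses wrong after a 5.
def target_next(seq):
    return (seq[-1] + 1) % 10


def draft_next(seq):
    return 0 if seq[-1] == 5 else (seq[-1] + 1) % 10


out, rounds = speculative_decode(target_next, draft_next, [0], max_new=12)
print(out, rounds)
```

The speedup comes from the draft being right most of the time: each verification round then commits several tokens instead of one.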

Union IT Minister Urges Adoption of Zoho and Other Indian Tech Products

Union IT Minister Ashwini Vaishnaw is switching to Zoho’s suite of productivity tools, in line with Prime Minister Narendra Modi’s call to adopt Indian products. Zoho, based in Tamil Nadu, is a major homegrown software company with over $1 billion in revenue and a broad application portfolio. The shift follows Modi’s appeal to reduce reliance on foreign goods and coincides with India–US tariff disputes. Meanwhile, India’s AI Mission has advanced eight firms in its foundation model initiative, which aims to build sovereign AI capabilities.

Cloudflare Open Sources VibeSDK Enabling Developers to Create AI Platforms Easily

Cloudflare has open-sourced VibeSDK, a platform that simplifies deploying AI-powered development environments in which users build, test, and deploy applications from plain-language descriptions. VibeSDK uses large language models to generate, debug, and iterate on code in real time inside Cloudflare’s secure sandboxes, and it offers one-click deployment and global scaling via Cloudflare Workers. It includes pre-built templates for common applications, while AI-powered routing and caching improve observability and cost management. Open-sourcing VibeSDK fits Cloudflare’s aim to foster innovation and echoes its earlier strategy with the Workers runtime.

Open Semantic Interchange Initiative Launched by Snowflake, Salesforce, and Industry Leaders

Snowflake, in collaboration with Salesforce, BlackRock, and other technology partners, has launched the Open Semantic Interchange (OSI), an open-source initiative to standardize data semantics across platforms and tools. The initiative introduces a vendor-neutral semantic model specification so that business logic for AI and BI applications is defined and shared consistently. By addressing the lack of a common framework for interpreting business metrics and metadata, OSI aims to improve interoperability, reduce operational complexity, and accelerate AI and BI adoption. Backed by a broad coalition, OSI reflects a shift toward collaboration and open standards in building a more connected data ecosystem.

Virginia Woman Uses ChatGPT for Lottery Win, Donates Entire Prize to Charities

Carrie Edwards, a Virginia resident, won $150,000 in the lottery after using ChatGPT to pick her numbers, and she plans to donate the entire amount to charity. Her initial $50,000 prize was tripled by the Power Play option, and she chose to split the money among three causes that hold personal significance for her: the Association for Frontotemporal Degeneration, Shalom Farms, and the Navy-Marine Corps Relief Society. Her decision reflects a commitment to using unexpected fortune for charitable causes.

xAI Offers Grok AI Chatbot to US Government for 42 Cents

Elon Musk’s xAI has reached a deal with the U.S. General Services Administration to offer its AI chatbot Grok to federal agencies for 42 cents for 18 months, undercutting OpenAI and Anthropic, whose comparable offerings cost $1 for a year. xAI had previously struggled to secure vendor approval after Grok produced controversial outputs, but pressure from the White House reportedly placed it on the approved vendor list. xAI also holds a $200 million Pentagon AI contract, awarded alongside contracts to other major AI firms.
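Because the two offers run for different terms, a per-month figure makes the comparison clearer. The short calculation below uses only the prices and terms reported in this item; the helper name `cents_per_month` is ours.

```python
def cents_per_month(price_dollars, months):
    """Convert a flat price for a multi-month term into cents per month."""
    return 100 * price_dollars / months


# Terms as reported: xAI 42 cents for 18 months; OpenAI/Anthropic $1 for 12 months
offers = {
    "xAI Grok": (0.42, 18),
    "OpenAI or Anthropic": (1.00, 12),
}
for name, (price, months) in offers.items():
    print(f"{name}: {cents_per_month(price, months):.2f} cents per month")
```

That works out to roughly 2.3 cents per month for Grok versus about 8.3 cents per month for the rival offers, although at these token prices the real competition is over future contracts rather than the fee itself.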

Neon Mobile App Ranks Second on Apple’s App Store by Recording Calls for AI Data

Neon Mobile, a controversial app that records users’ phone calls and sells the audio to AI companies, has rapidly climbed to No. 2 in the Social Networking category of Apple’s U.S. App Store. The app pays users for access to their calls, advertising earnings of up to $30 per day. Despite legal and privacy concerns, including the broad data usage rights in its terms and the potential exploitation of users’ voice data, the app’s rise suggests a growing consumer willingness to trade privacy for money. Legal experts urge caution, suggesting that the app’s practices could sidestep wiretap laws and questioning how well user data is actually protected.

⚖️ AI Ethics

Spotify Enhances AI Policies to Combat Spam and Unauthorized Voice Cloning

Spotify has announced updates to its AI policy aimed at increasing transparency around AI-driven music creation and limiting unauthorized voice clones. The company plans to adopt the DDEX industry standard for disclosing AI involvement in music credits and will introduce a music spam filter to curb the spread of spam tracks. Spotify will also work with distributors to prevent fraudulent uploads and to fix mismatches where tracks appear on the wrong artist profiles. The measures respond to a surge in AI-generated music: Spotify says it supports authentic AI use while targeting fraudulent practices, amid growing concerns about AI-generated content on streaming platforms.

California Senator Advances New AI Safety Bill with Broader Industry Support and Less Resistance

California State Senator Scott Wiener has returned to AI safety legislation with SB 53, after his previous bill, SB 1047, was vetoed over concerns that it would hinder AI innovation. Unlike its predecessor, SB 53 has drawn broader support, including an endorsement from Anthropic, and focuses on transparency and self-reporting by major AI companies rather than imposing liability for harms caused by AI models. The bill, now awaiting Governor Gavin Newsom’s decision, imposes safety reporting requirements on leading AI labs and seeks to balance innovation with public safety by targeting potentially catastrophic AI risks.

Meta Invests Millions in Super PAC to Challenge State AI Regulations

Meta is investing “tens of millions” of dollars in a new super PAC, the American Technology Excellence Project, to fight state-level tech policy proposals it sees as hindering AI advancement, according to Axios. Run by veteran political operatives from both major parties, the super PAC aims to support tech-friendly candidates in the upcoming midterm elections, amid a wave of state-level AI regulations and growing concerns over child safety and AI. The move aligns with Silicon Valley’s broader push against fragmented AI regulation and underscores Big Tech’s efforts to maintain U.S. leadership in AI development.

OpenAI’s Bold Plan: AI Infrastructure Aimed at Curing Cancer with NVIDIA

OpenAI plans to harness 10 gigawatts of computing power to transform fields such as cancer treatment and personalized education, with the potential to help cure complex diseases and expand global access to AI. Through its partnership with NVIDIA, the project envisions a vast AI infrastructure, with the first data centers expected to be operational next year. Experts caution that curing cancer is difficult because the disease takes many different forms, but more computing power could still drive significant advances in medicine and other fields.

Nadal Warns Against Unauthorized AI-Generated Videos Misusing His Image and Voice

Former tennis star Rafael Nadal has warned that unauthorized AI-generated videos using his likeness and voice are circulating online, falsely attributing advice and investment proposals to him. Nadal, who retired in November 2024 after a career that included 22 Grand Slam titles, denounced the videos as deceptive advertising on social media. The incident highlights ongoing concerns about AI being used to create digital content without consent.

EU’s Ambitious Plan to Transform into Leading AI Continent Faces Challenges

The European Union aims to become a leader in artificial intelligence, a goal marked by the adoption of the Artificial Intelligence Act in 2024 and the announcement of the AI Continent Action Plan in April 2025. Although the plan emphasizes regulatory oversight, investment, infrastructure, and skills development, the EU still faces significant obstacles to AI leadership, including a fragmented market and reliance on foreign technologies. The European Parliament will play a crucial role in shaping future legislation, such as the Cloud and AI Development Act, while balancing innovation against democratic safeguards amid differing stakeholder views.

Unemployment Spike Among Young Americans Highlights Unique Challenges in 2025 Economy

In 2025, a sharp rise in unemployment among Americans under 25, especially recent graduates, is worrying economists and labor analysts. Unlike its global counterparts, the U.S. faces a “no hire, no fire” economy with low job turnover, and analysts say this, rather than artificial intelligence, is the main driver. Federal Reserve Chair Jerome Powell and major financial institutions attribute the predicament to a broadly cooled economy and cautious hiring, with young job seekers bearing the brunt.

🎓 AI Academia

Examining Embodied AI’s Role in Achieving Artificial General Intelligence Through LLMs and WMs

A recent paper in IEEE Circuits and Systems Magazine examines advances in Embodied Artificial Intelligence (AI) and highlights its potential as a key path toward Artificial General Intelligence (AGI). The work explores how Large Language Models (LLMs) and World Models (WMs) can be integrated, with LLMs contributing semantic reasoning and WMs supporting interactions that comply with physical laws. The study traces the shift from unimodal to multimodal approaches, which combine multiple sensory modalities to build AI systems capable of executing complex tasks in dynamic environments. This evolution broadens the real-world applicability of embodied AI and points to promising directions for future research.

Qwen3-Omni Model Achieves State-of-the-Art Performance Across Multiple Modalities

Qwen3-Omni, released by the Qwen Team, is a multimodal AI model that achieves state-of-the-art performance across text, image, audio, and video without sacrificing quality relative to specialized single-modality models. It is especially strong on audio tasks, outperforming prominent closed-source models on numerous benchmarks. Built on a Thinker-Talker Mixture-of-Experts architecture, the model excels at multilingual text and speech processing and offers robust real-time speech and cross-modal reasoning. With broad language support and real-time streaming, Qwen3-Omni avoids the trade-offs typically seen in such systems. The release also includes specialized models such as Qwen3-Omni-30B-A3B-Captioner for precise audio captioning and is available under an open license.

Understanding Regulatory Measures to Mitigate Communication Bias in Large Language Models

A scholarly article examines communication bias in large language models (LLMs) and its implications for society and regulation. Because LLMs are now central to modern communication, the paper argues, they can shape opinions and democratic processes by reinforcing biases. Regulatory efforts such as the EU Artificial Intelligence Act aim to enforce transparency and accountability but often treat communication bias as a secondary issue. The authors note that detecting and mitigating such bias is difficult given the models’ complexity, and they propose a multifaceted approach, including value chain regulation and governance of technology design, to ensure fair and balanced AI systems that support decision-making without compromising societal values.

New Framework Offers Rapid Defense Against Attacks on Large Language Models

A recent study highlights how the growing autonomy and access permissions of large language models (LLMs) expose them to malicious attacks. Because traditional approaches struggle against zero-day attacks, the study proposes a defense system that combines threat intelligence, data aggregation, and a release platform, allowing detection updates to be deployed quickly without disrupting operations. Emphasizing machine learning-based detection, it stresses the need for continuous adaptation to emerging threats and notes that current detection systems are not foolproof and cannot guarantee complete protection against adversarial attacks.

Study Highlights Hidden Safety Risks of Large Language Models in Everyday Use

Researchers from prominent institutions have revealed a new class of issues with Large Language Models (LLMs) they call “secondary risks,” characterized by harmful or misleading behaviors occurring during normal usage, even without malicious intent. Unlike adversarial attacks that manipulate models into unsafe responses, these non-adversarial failures arise from imperfect model generalization and often slip through existing safety nets. The study introduces a framework named SecLens to systematically uncover these vulnerabilities, demonstrating through extensive testing on 16 popular LLMs that such risks are prevalent and transferable across different models. This underscores an urgent need for improved safety mechanisms to manage the benign yet potentially damaging behaviors of LLMs in real-world applications.

Experts Call for Responsible AI Standards to Tackle Challenges in Generative AI

A recent study by researchers from institutions around the world stresses the need for responsible practices in developing and governing generative AI as it moves from research to widespread application. It finds that while bias and toxicity are well covered, areas such as privacy, provenance, and media integrity remain inadequately addressed. It also notes that current evaluation methods are often static and localized, which limits the broader applicability of audits and lifecycle assurance. The authors call for an agenda centered on adaptive evaluations, detailed documentation, privacy and deepfake risk assessments, and consistent metric validation to ensure a sustainable future for generative AI.

MediNotes AI System Aims to Automate SOAP Note Creation in Healthcare Settings

Researchers at the University of Chicago have developed MediNotes, a generative AI framework that aims to reduce the documentation burden on healthcare providers. MediNotes combines Large Language Models (LLMs) with Retrieval-Augmented Generation (RAG) to generate SOAP (Subjective, Objective, Assessment, Plan) notes automatically from medical conversations. Using speech recognition and efficient NLP techniques, it processes both text and voice input and produces structured, contextually accurate notes either in real time or from recorded audio, improving the quality and efficiency of clinical workflows. Initial evaluations suggest that MediNotes markedly improves the accuracy and usability of automated medical documentation, which may help enhance patient care.
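The retrieval half of RAG can be illustrated in a few lines: find the parts of a transcript most relevant to the note section being written, then place them in the LLM prompt. MediNotes' actual retriever is not described here; as a stated simplification, this sketch swaps dense embeddings for bag-of-words cosine similarity, and the transcript, query, and prompt wording are all hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lowercased word counts (digits and punctuation ignored)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, passages, k=2):
    """Return the k passages most similar to the query."""
    q = bag_of_words(query)
    return sorted(passages, key=lambda p: cosine(q, bag_of_words(p)), reverse=True)[:k]


# Hypothetical transcript snippets from a clinical conversation
transcript = [
    "Patient reports a dry cough for three days and mild fever.",
    "Blood pressure is 120 over 80, temperature 38.1 degrees.",
    "We discussed scheduling a follow up visit next week.",
]
# Retrieve context for the "Subjective" section; the LLM call itself is not shown
context = retrieve("patient reports symptoms cough fever", transcript, k=1)
prompt = "Write the Subjective section of a SOAP note using:\n" + "\n".join(context)
print(prompt)
```

Grounding each section in retrieved transcript text, rather than asking the model to write from memory, is what lets a RAG pipeline keep notes tied to what was actually said.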

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.