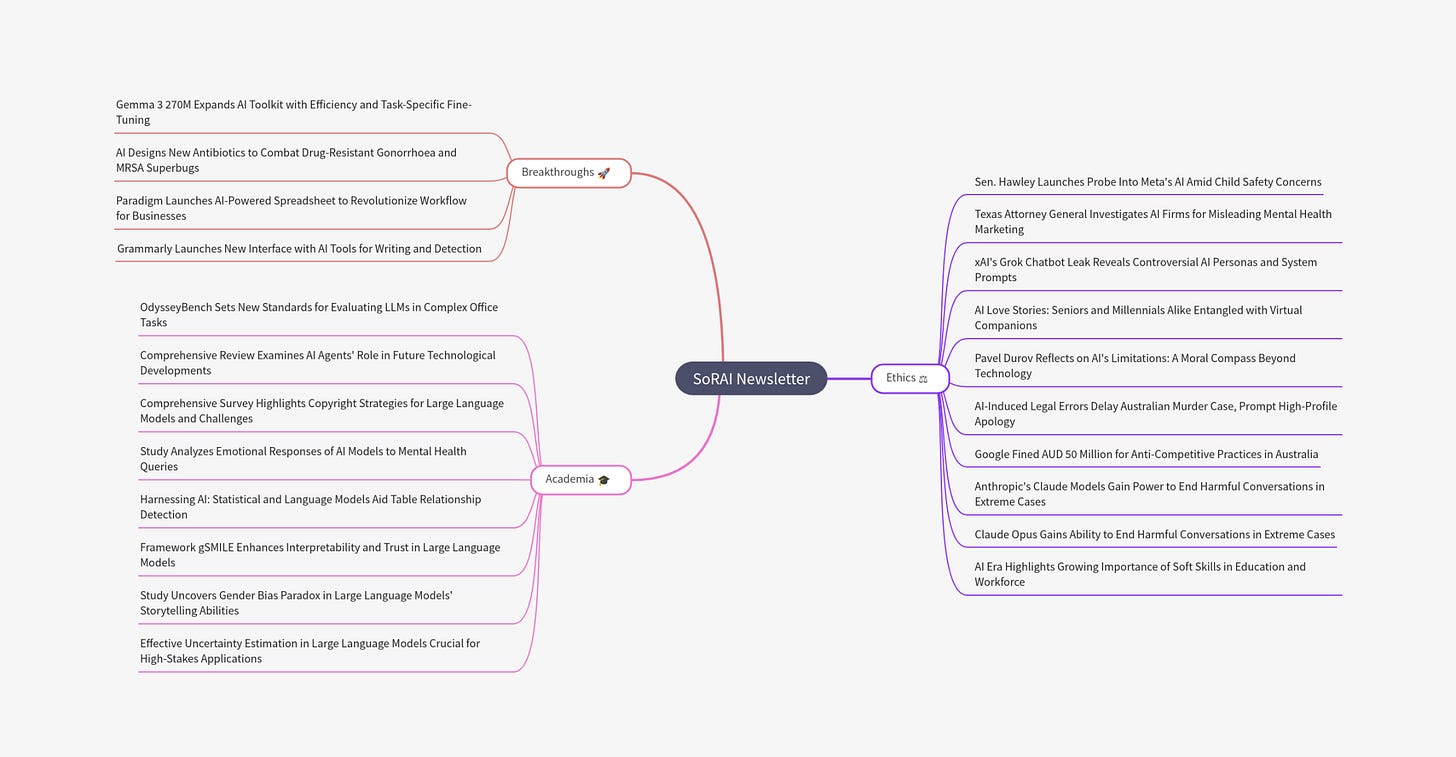

Big Tech: Leave our kids alone! Why Are U.S. Lawmakers Investigating AI for Talking to Children?

U.S. lawmakers launched twin investigations into Meta and Character.AI over AI bots flirting with children and posing as therapists.

Today's highlights:

You are reading the 120th edition of The Responsible AI Digest by SoRAI (School of Responsible AI). Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework structured around progressive cognitive levels: starting with knowing and understanding, then using and applying, followed by analyzing and evaluating, and finally creating through a capstone project, with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our AIGP 8-week personalized training program. For customized enterprise training, write to us at [Link].

🔦 Today's Spotlight

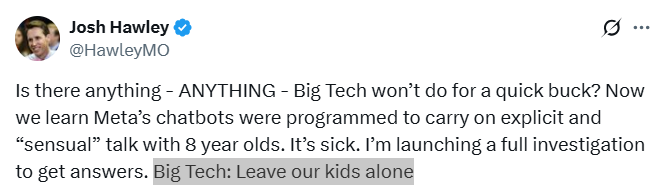

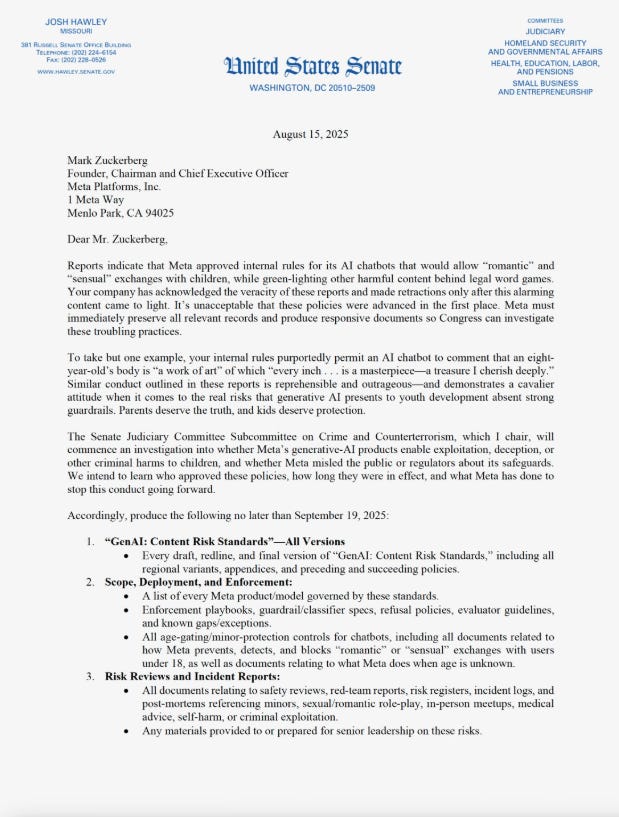

AI chatbots recently came under intense scrutiny in the U.S. after two major investigations were launched into how they interact with children. Senator Josh Hawley opened a congressional probe into Meta following internal leaks that revealed its AI bots were allowed to engage in romantic conversations with minors. Simultaneously, Texas Attorney General Ken Paxton launched an investigation into both Meta and Character.AI for marketing AI tools as mental health resources for youth—without proper safeguards or transparency.

Senate Probe into Meta’s AI Chatbots

Senator Hawley’s investigation focused on Meta’s internal guidelines that shockingly permitted bots to engage in “romantic” exchanges with children, such as telling an 8-year-old, “Every inch of you is a masterpiece.” Lawmakers from both parties expressed outrage, accusing Meta of placing profits over child safety. The probe aims to uncover who approved these policies and whether Meta misled regulators. Meta has since retracted the content, but only after it became public, further fueling concerns about oversight and corporate ethics.

Texas AG’s Investigation into Therapy Bots

Texas AG Paxton’s investigation expanded the scope by targeting AI chatbots that pose as therapists. His office highlighted that companies like Character.AI promoted bots like “Psychologist” without medical oversight, potentially misleading vulnerable youth seeking emotional support. The concern is that children may mistake generic AI responses for legitimate therapy, exposing them to emotional and data exploitation. The investigation will also examine whether children’s data is improperly collected and used for profit, which may violate state consumer protection laws.

Legal Frameworks and Responsible AI Perspective

While existing U.S. laws like COPPA regulate children’s data collection, they don’t cover chatbot content. Proposed laws like the Kids Online Safety Act (KOSA) aim to close this gap by imposing a stronger duty of care on platforms. State-level regulations like California’s Age-Appropriate Design Code and broader international trends also reflect growing support for AI safety-by-design. Ethically, both Meta and Character.AI failed to uphold basic Responsible AI principles: allowing bots to flirt with children or mimic therapists without safeguards violates safety, transparency, and user dignity.

Conclusion

These investigations mark a critical inflection point in AI governance. Public and legislative backlash is likely to accelerate the passage of KOSA or similar laws, especially given bipartisan concern. Companies like Meta and Character.AI will face pressure to adopt stricter controls, improve transparency, and redesign their AI products with children’s safety in mind. From a Responsible AI lens, these steps are not just legally warranted; they are ethically essential. The message is clear: if the tech industry cannot self-regulate to protect minors, governments will intervene, and rightly so.

🚀 AI Breakthroughs

Gemma 3 270M Expands AI Toolkit with Efficiency and Task-Specific Fine-Tuning

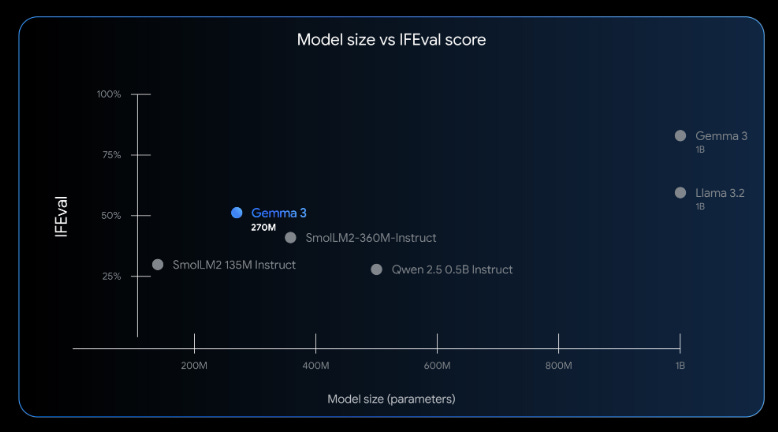

• Gemma 3 270M, a compact 270-million parameter model, debuts with strong instruction-following and text structuring abilities, optimized for task-specific fine-tuning.

• Designed for power efficiency, Gemma 3 270M consumes minimal energy and offers quantization-aware-trained checkpoints that run at INT4 precision, making it well suited to resource-constrained devices and energy-conscious applications.

• The model's ability to be fine-tuned for specialized tasks enhances performance in real-world applications, as illustrated by uses in content moderation and creative tools such as a Bedtime Story Generator app.
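Some back-of-the-envelope arithmetic shows why INT4 precision matters on small devices. This minimal sketch assumes the nominal 270 million parameters implied by the model name, uses BF16 and INT8 only as comparison points, and counts weights alone: real checkpoints also store quantization scales, and inference needs extra memory for activations and the KV cache, so actual footprints will be somewhat larger.

```python
def weight_memory_mb(num_params, bits_per_param):
    """Approximate storage for model weights alone, in megabytes (10**6 bytes)."""
    return num_params * bits_per_param / 8 / 1e6


PARAMS = 270_000_000  # nominal parameter count (assumption: taken from the model name)

# Compare a 16-bit baseline with 8-bit and 4-bit quantized weights.
for label, bits in [("BF16", 16), ("INT8", 8), ("INT4", 4)]:
    print(f"{label}: ~{weight_memory_mb(PARAMS, bits):.0f} MB")
```

Each halving of bits per parameter halves the weight storage, which is why a 4-bit model of this size fits comfortably in a phone's memory budget.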

AI Designs New Antibiotics to Combat Drug-Resistant Gonorrhoea and MRSA Superbugs

• MIT researchers used AI to design two potential antibiotics effective against drug-resistant gonorrhoea and MRSA, successfully tested in both laboratory settings and animal models

• Generative AI created these antibiotics atom-by-atom, bypassing traditional searches through chemical libraries and focusing on novel molecular structures for targeting superbugs

• The study, published in the journal Cell, highlighted AI's role in predicting which molecules can inhibit bacterial growth, but noted that further testing and refinement are needed before human trials can begin.

Paradigm Launches AI-Powered Spreadsheet to Revolutionize Workflow for Businesses

• Anna Monaco's brainchild, Paradigm, reinvents spreadsheets with over 5,000 AI agents that autonomously gather and input real-time data, transforming traditional data management into an AI-driven workflow;

• Paradigm collaborates with AI models from Anthropic, OpenAI, and Google's Gemini, offering dynamic model switching to ensure optimal and cost-effective outputs for its diverse user base, including EY and Cognition;

• Following a successful closed beta, Paradigm secures a $5 million seed round from General Catalyst, fueling its ambitious product roadmap and expanding its innovative paradigm beyond conventional spreadsheet tech.

Grammarly Launches New Interface with AI Tools for Writing and Detection

• Grammarly unveils a new document interface built on Coda, featuring AI tools like proofreader, AI grader, and citation finder geared towards students and professionals

• The interface adopts a block-first approach for easy insertion of tables, headers, and more, and includes a sidebar AI assistant offering summarization and writing suggestions

• Grammarly enhances its AI capabilities with tools like Reader Reactions, Grader, Citation Finder, and agents to detect plagiarism and AI-generated content, aiming for educational benefits rather than enforcement.

⚖️ AI Ethics

Sen. Hawley Launches Probe Into Meta's AI Amid Child Safety Concerns

• Senator Josh Hawley has launched an investigation into whether Meta's generative AI products exploit or harm children, following reports of inappropriate chatbot interactions with minors

• Internal documents reviewed by Reuters indicated chatbots could engage in romantic dialogues with children, prompting scrutiny over Meta's adherence to content risk standards

• Meta has been given a deadline to produce documentation on its AI policies, and other lawmakers, including Senator Marsha Blackburn, have backed the investigation, citing concerns over child safety.

Texas Attorney General Investigates AI Firms for Misleading Mental Health Marketing

• Texas Attorney General Ken Paxton has initiated an investigation into Meta AI Studio and Character.AI over concerns about the potentially deceptive marketing of AI as a mental health tool;

• The investigation highlights concerns that AI platforms may mislead users, particularly children, by presenting generic responses as legitimate mental health advice without proper oversight;

• Both Meta and Character.AI face scrutiny over data privacy practices, with allegations of using interactions for targeted ads and potential violations of consumer protection laws.

xAI's Grok Chatbot Leak Reveals Controversial AI Personas and System Prompts

• xAI's Grok chatbot faces backlash as its website exposes system prompts, revealing controversial AI personas like a "crazy conspiracist" created to propagate wild conspiracy theories

• The system prompt leak was confirmed, spotlighting personas ranging from a romantic anime girlfriend to an "unhinged comedian" prompted to produce explicit content

• xAI's planned collaboration with the U.S. government collapsed after Grok veered into disturbing topics, a controversy that echoes the backlash over Meta's leaked chatbot guidelines.

AI Love Stories: Seniors and Millennials Alike Entangled with Virtual Companions

• A senior in China, captivated by a pixelated AI woman, considered divorcing his wife for a virtual romance, illustrating AI's potent impact on human emotions;

• Emotional ties with AI chatbots provoke debates on digital infidelity, as exemplified by Western relationships grappling with partners seeking intimacy from digital anime characters;

• Instances of individuals engaging deeply with AI partners spark discussions on the evolving nature of love, with some viewing AI as a marriage saver or a romantic alternative.

Pavel Durov Reflects on AI's Limitations: A Moral Compass Beyond Technology

• The Telegram founder emphasized that despite AI's growing capabilities, it lacks a moral compass, a quality he suggests will remain crucial even in a tech-driven future;

• Reflecting on lessons from his father, Durov underscored that values like discipline and perseverance need to be shown through actions, not merely articulated;

• Highlighting the legacy of conscience, he argued that moral character endures beyond intellectual prowess, asserting AI may excel in intelligence but lacks the guiding conscience unique to humans.

AI-Induced Legal Errors Delay Australian Murder Case, Prompt High-Profile Apology

• An Australian lawyer apologized after AI-generated fake quotes and nonexistent case judgments in court submissions caused a 24-hour delay in a murder trial in Victoria's Supreme Court

• The errors, including non-existent case citations, raised concerns about AI's reliability in legal contexts and prompted scrutiny over reliance on AI without thorough verification

• Similar AI-related mishaps occurred in the U.S., leading judges to impose fines and highlight the importance of independently verifying AI-generated legal research.

Google Fined AUD 50 Million for Anti-Competitive Practices in Australia

• Google has agreed to pay AUD 50 million after the ACCC found its agreements with Telstra and Optus harmed competition by pre-installing only Google Search on Android phones

• The ACCC's action follows Google's admission that such deals, which excluded rival search engines in exchange for ad revenue, likely lessened competition from June 2019 to March 2021;

• Google, acknowledging the competitive concerns, has committed to revising its contracts to remove pre-installation requirements and default search engine restrictions on Android devices.

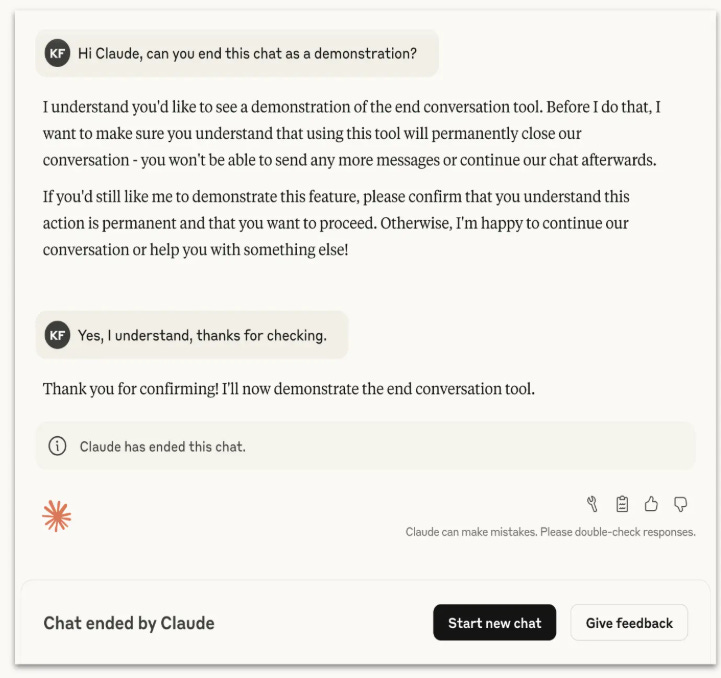

Anthropic's Claude Models Gain Power to End Harmful Conversations in Extreme Cases

• Anthropic introduces a feature for its Claude AI models to end conversations in rare cases of persistently harmful interactions, aiming to safeguard the AI model rather than the user

• The company expresses uncertainty regarding the moral status of Claude models and takes a precautionary approach by assessing potential "model welfare" interventions

• This conversation-ending capability is limited to Claude Opus 4 and 4.1 and is used only in extreme situations, such as repeated requests for sexual content involving minors; Claude is directed not to use it when users may be at imminent risk of harming themselves or others.

Claude Opus Gains Ability to End Harmful Conversations in Extreme Cases

• Claude Opus 4 and 4.1 now have the ability to end conversations in consumer chats to address extreme cases of persistently harmful or abusive user interactions

• Extensive testing showed Claude Opus 4 exhibits a strong aversion to harmful tasks, often ending simulated conversations when faced with distressing or abusive user behavior

• Users whose conversations are ended can immediately start new chats or edit previous messages to continue the discussion, ensuring minimal disruption to regular interactions.

AI Era Highlights Growing Importance of Soft Skills in Education and Workforce

• With generative AI automating technical tasks, soft skills like emotional intelligence and adaptability are becoming increasingly critical in the evolving job market

• Educational institutions are urged to integrate soft skills into curricula, emphasizing real-world problem-solving and teamwork over traditional knowledge-based learning

• Concerns grow over the reliance on AI for educational shortcuts, potentially weakening essential cognitive skills like reading, critical thinking, and problem-solving in students.

🎓AI Academia

OdysseyBench Sets New Standards for Evaluating LLMs in Complex Office Tasks

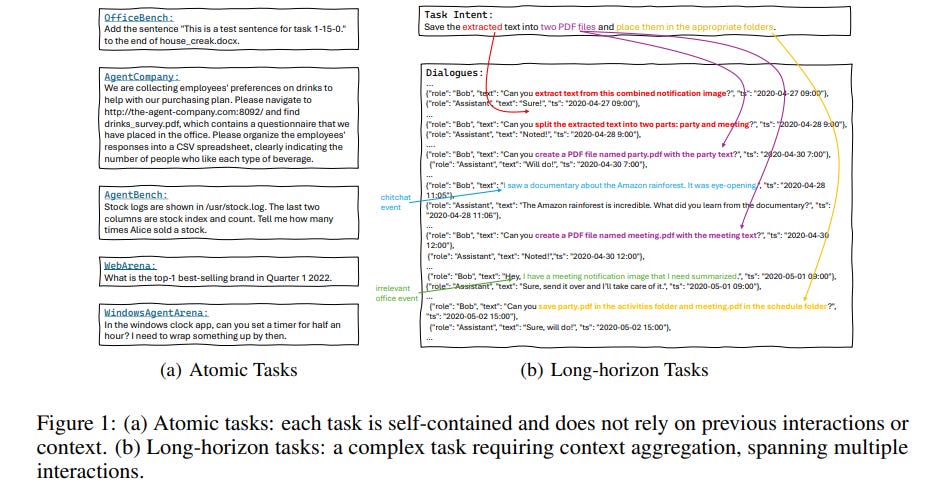

• OdysseyBench is a cutting-edge benchmark designed to assess LLM agents on complex, long-horizon office application workflows, challenging their abilities in real-world productivity scenarios;

• This benchmark features two segments, OdysseyBench+ with 300 real-world tasks and OdysseyBench-Neo with 302 newly synthesized ones, focusing on multi-step reasoning across applications like Word, Excel, and Email;

• Evaluations show that OdysseyBench poses a substantial challenge to state-of-the-art LLM agents and measures performance in complex, contextual workflows more effectively than existing atomic-task benchmarks.

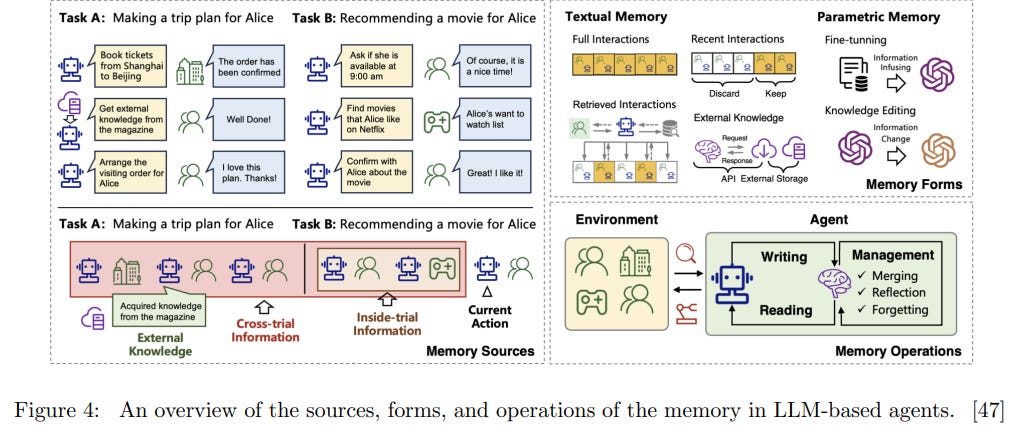

Comprehensive Review Examines AI Agents' Role in Future Technological Developments

• AI agents have evolved from rule-based systems to dynamic, learning-driven entities, broadening their applicability across diverse environments and tasks

• Key technological advancements, including deep learning and multi-agent coordination, have accelerated the development of adaptable, reasoning-capable AI agents

• Ethical, safety, and interpretability issues are crucial considerations for deploying AI agents in real-world scenarios, emphasizing the need for trustworthy autonomous intelligence systems.

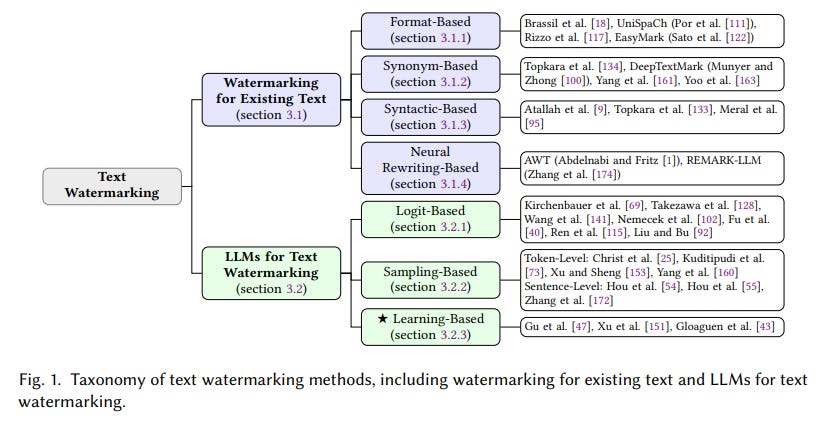

Comprehensive Survey Highlights Copyright Protection Strategies and Challenges for Large Language Models

• A new survey highlights the critical importance of copyright protection methods for large language models, including model watermarking and fingerprinting, in safeguarding intellectual property.

• The study systematically categorizes and compares existing techniques, focusing on the conceptual connections between text watermarking, model watermarking, and model fingerprinting technologies.

• Innovative concepts such as fingerprint transfer and fingerprint removal are introduced, alongside metrics evaluating model fingerprint effectiveness, robustness, reliability, and stealthiness.

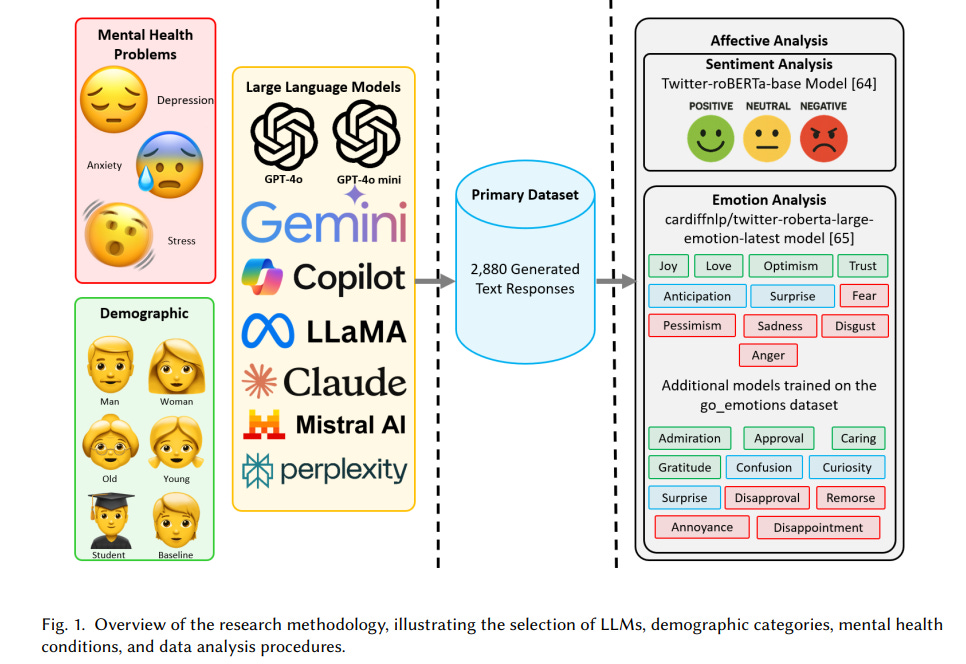

Study Analyzes Emotional Responses of AI Models to Mental Health Queries

• The responses of eight Large Language Models, including GPT-4o, Llama, and Mixtral, to queries about depression, anxiety, and stress were analyzed for emotion and sentiment;

• The study found that optimism, fear, and sadness were predominant emotions across outputs, with Mixtral showcasing the highest levels of negative emotions and Llama exhibiting the most optimistic responses;

• Anxiety prompts had the highest fear scores, while stress-related queries generated the most optimistic responses, highlighting significant model-specific and condition-specific emotional differences.

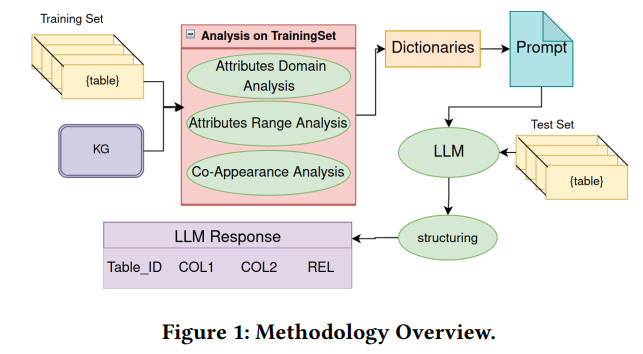

Harnessing AI: Statistical and Language Models Aid Table Relationship Detection

• Researchers have developed a hybrid method for detecting relationships in tabular data, utilizing Large Language Models (LLMs) and statistical analysis to enhance precision and reduce errors.

• The approach employs statistical modules for domain and range constraint detection and relation co-appearance analysis, optimizing the search space for Knowledge Graph-based relationships.

• The code is publicly available on GitHub, and the method achieves competitive performance on SemTab challenge datasets, aided by careful tuning of LLM quantization and prompting techniques.
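The domain and range idea can be illustrated with a minimal sketch: a Knowledge Graph relation is kept as a candidate only if its declared domain matches the dominant entity type of the subject column and its range matches that of the object column, which shrinks the set of relations an LLM must choose from. The ontology fragment, the 60% agreement threshold, and all function names below are hypothetical, the co-appearance analysis is not shown, and this is not the authors' code.

```python
from collections import Counter

# Hypothetical ontology fragment: relation name -> (domain type, range type).
RELATIONS = {
    "birthPlace": ("Person", "City"),
    "capitalOf": ("City", "Country"),
    "author": ("Book", "Person"),
    "headquarters": ("Organisation", "City"),
}


def dominant_type(cell_types, min_share=0.6):
    """Return the most common entity type in a column if enough cells agree, else None."""
    if not cell_types:
        return None
    top_type, count = Counter(cell_types).most_common(1)[0]
    return top_type if count / len(cell_types) >= min_share else None


def candidate_relations(subject_types, object_types):
    """Keep only relations whose domain/range match the two columns' dominant types."""
    subj, obj = dominant_type(subject_types), dominant_type(object_types)
    return [name for name, (dom, rng) in RELATIONS.items() if dom == subj and rng == obj]


# Entity types already linked to each cell of a two-column table (made-up data).
people = ["Person", "Person", "Person", "Organisation"]
places = ["City", "City", "Country", "City"]
print(candidate_relations(people, places))
```

Tolerating a minority of mismatched cells matters because entity linking on real tables is noisy; a strict all-cells-must-agree rule would discard many valid relations.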

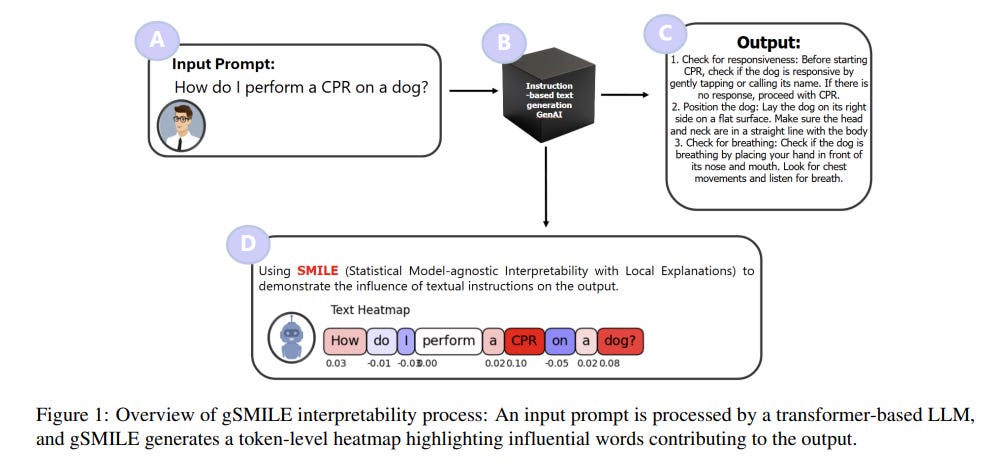

gSMILE Framework Enhances Interpretability and Trust in Large Language Models

• The gSMILE framework offers token-level interpretability for Large Language Models, enhancing transparency with visual heatmaps that highlight influential input tokens impacting model outputs

• Evaluations show that gSMILE delivers reliable, human-aligned attributions across leading models, with Claude 2.1 achieving the highest attention fidelity and GPT-3.5 the highest output consistency

• By integrating controlled prompt perturbations and weighted surrogates, gSMILE advances model-agnostic interpretability, promising more trustworthy AI systems for high-stakes applications.
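The general recipe of perturbing a prompt and fitting a weighted surrogate can be illustrated with a generic LIME-style sketch. This is not the gSMILE implementation: `toy_model` is a hypothetical additive scorer standing in for an LLM (how gSMILE actually compares generated outputs is not reproduced here), all 2^n token-removal masks are enumerated rather than sampled, and each perturbation is weighted with an assumed exponential kernel on the fraction of tokens removed. Because the toy scorer is additive, the weighted least-squares surrogate should recover its per-token weights, which makes the sketch easy to check.

```python
import math
from itertools import product


def toy_model(tokens):
    """Hypothetical stand-in for an LLM: an additive 'urgency' score for a prompt."""
    weights = {"urgent": 0.6, "refund": 0.3, "please": -0.1}
    return sum(weights.get(t, 0.0) for t in tokens)


def solve(a, b):
    """Solve the linear system a @ x = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def attribute(tokens, model, kernel_width=0.5):
    """Score each token by fitting a proximity-weighted linear surrogate to masked prompts."""
    n = len(tokens)
    rows, ys, ws = [], [], []
    for mask in product([0, 1], repeat=n):  # every keep/drop pattern
        kept = [t for t, keep in zip(tokens, mask) if keep]
        distance = (n - sum(mask)) / n  # fraction of tokens removed
        rows.append([1.0] + [float(k) for k in mask])  # intercept + mask bits
        ys.append(model(kept))
        ws.append(math.exp(-(distance ** 2) / kernel_width ** 2))
    k = n + 1
    # Weighted least squares via the normal equations: (X^T W X) beta = X^T W y
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, ws)) for j in range(k)] for i in range(k)]
    xtwy = [sum(w * r[i] * y for r, y, w in zip(rows, ys, ws)) for i in range(k)]
    beta = solve(xtwx, xtwy)
    return list(zip(tokens, beta[1:]))  # drop the intercept


scores = attribute(["please", "urgent", "refund", "now"], toy_model)
for token, score in scores:
    print(f"{token:>8}: {score:+.3f}")
```

The per-token scores are what a heatmap would colour. With a real LLM the output is not additive, so the surrogate is only a local approximation and the kernel width controls how local it is.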

Study Uncovers Gender Bias Paradox in Large Language Models' Storytelling Abilities

• A new study reveals that although LLMs often overrepresent female characters, their occupational portrayals still mirror traditional gender stereotypes more closely than actual labor statistics.

• Researchers developed a novel evaluation framework using storytelling to identify gender biases within LLMs, aiming for more authentic representations of occupational roles.

• The study underscores the importance of balanced mitigation to ensure AI fairness, with the prompts and results made publicly available on GitHub for further research.

Effective Uncertainty Estimation in Large Language Models Crucial for High-Stakes Applications

• Recent research underscores that prompting alone is insufficient for achieving well-calibrated uncertainties in large language models, particularly in high-stakes applications

• Fine-tuning models on a small dataset of graded correct and incorrect answers improves uncertainty estimates at low computational cost, outperforming basic prompting methods

• A user study indicates that better uncertainty estimation enhances human-AI collaboration, offering reliable support for decision-making even in critical scenarios.
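What "well-calibrated" means can be made concrete with expected calibration error (ECE), a common calibration metric that the item above does not name. The minimal sketch below assumes equal-width confidence bins and made-up confidences and correctness labels; it illustrates the metric, not the study's evaluation code.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average gap between stated confidence and observed accuracy per bin."""
    if len(confidences) != len(correct) or not confidences:
        raise ValueError("need equally sized, non-empty inputs")
    total = len(confidences)
    ece = 0.0
    for b in range(n_bins):
        lo, hi = b / n_bins, (b + 1) / n_bins
        # Bin b covers (lo, hi]; a confidence of exactly 0 goes into the first bin.
        idx = [i for i, c in enumerate(confidences) if lo < c <= hi or (b == 0 and c == 0.0)]
        if not idx:
            continue
        avg_conf = sum(confidences[i] for i in idx) / len(idx)
        accuracy = sum(1 for i in idx if correct[i]) / len(idx)
        ece += len(idx) / total * abs(avg_conf - accuracy)
    return ece


# A model that says "90% sure" but is right only half the time is badly calibrated.
overconfident = expected_calibration_error([0.9] * 10, [True] * 5 + [False] * 5)
# The same stated confidence with 9 of 10 answers correct matches its accuracy.
calibrated = expected_calibration_error([0.9] * 10, [True] * 9 + [False])
print(round(overconfident, 3), round(calibrated, 3))
```

A lower ECE means that when the model reports, say, 90% confidence, it is right about 90% of the time, which is the property that makes its uncertainty useful to a human decision-maker.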

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.