Are We Watching the Death of Responsible AI in Real Time?

The European Commission is thinking about postponing parts of the EU’s Artificial Intelligence Act after strong pressure from businesses and Donald Trump’s administration..

Today’s highlights:

The European Commission is preparing a “Digital Omnibus” package that could delay and dilute key parts of the EU AI Act- particularly those related to high-risk AI systems and transparency requirements. Companies using such systems may get up to a year’s grace period, with penalties for failing to label AI-generated content postponed to August 2027. Narrow-purpose tools and internal AI applications may be exempt from registration altogether. The Commission frames this as easing the burden for SMEs and enabling smoother enforcement, but critics argue it risks gutting vital safeguards.

This move follows intense lobbying from Big Tech (Meta, Apple, Google), pressure from the U.S. government (including reported threats of trade retaliation from the Trump administration), and a July 2025 open letter from CEOs of major European firms like Airbus, Mercedes, and BNP Paribas demanding delays. EU industry leaders argue the Act’s complexity could scare off investment and hamper innovation. However, civil society groups and MEPs warn that postponing enforcement could jeopardize public safety, erode trust, and fracture the EU’s global leadership in tech regulation.

You are reading the 144th edition of the The Responsible AI Digest by SoRAI (School of Responsible AI) . Subscribe today for regular updates!

At the School of Responsible AI (SoRAI), we empower individuals and organizations to become AI-literate through comprehensive, practical, and engaging programs. For individuals, we offer specialized training, including AI Governance certifications (AIGP, RAI, AAIA) and an immersive AI Literacy Specialization. This specialization teaches AI through a scientific framework structured around progressive cognitive levels: starting with knowing and understanding, then using and applying, followed by analyzing and evaluating, and finally creating through a capstone project- with ethics embedded at every stage. Want to learn more? Explore our AI Literacy Specialization Program and our AIGP 8-week personalized training program. For customized enterprise training, write to us at [Link].

🚀 AI Ethics

Wikipedia Sets Guidelines for Responsible AI Use of Its Content

The Wikimedia Foundation, which oversees Wikipedia, has outlined a strategy to sustain its platform amid declining traffic due to AI developments, urging AI developers to responsibly attribute content when using the encyclopedia’s resources. In a recent blog post, the organization emphasized that its paid Wikimedia Enterprise platform allows large-scale access to content without overburdening Wikipedia’s servers, supporting its nonprofit mission. Despite not threatening legal action for unauthorized use, Wikipedia has flagged AI bots for inflated traffic, overshadowing a reported 8% decline in human page views year-over-year. The foundation’s AI strategy also includes deploying AI to assist editors with repetitive tasks while maintaining human oversight.

India to Establish AI Governance Bodies to Enhance Policy Coordination

The Indian government plans to establish an Artificial Intelligence Governance Group (AIGG) and a Technology and Policy Expert Committee (TPEC) by next month, as part of the India AI governance guidelines recently released. These bodies aim to streamline AI policy-making and will commence their initiatives before the India AI Summit in February next year. The AIGG, led by the principal scientific advisor, will coordinate AI governance across ministries with representatives from various official and regulatory bodies, while TPEC will focus on strategic and implementation roles for AI policies. The AI Safety Institute will oversee the safe development of AI, with a focus on creating regulatory sandboxes and practical tools to support the guidelines.

Entrepreneur Media Sues Meta for Alleged Unauthorized Use of Copyrighted Content

Entrepreneur Media has filed a lawsuit against Meta Platforms in a California federal court, alleging that Meta used its copyrighted materials to train its Llama large language models without authorization. The business magazine’s owner accuses Meta of copying its business strategy books and professional development guides to develop AI-generated content that competes with Entrepreneur’s publications. This suit is one of several brought by copyright holders against tech companies like Meta for using protected content in AI training, with Entrepreneur seeking monetary damages and a legal order to stop the supposed infringement. Meta has previously argued that their use of such content constitutes “fair use” under U.S. copyright law.

Big Tech Criticizes India’s Draft AI Rules as Costly and Impractical

Big tech companies, policy think tanks, and industry body Nasscom have criticized the Indian government’s proposed AI content regulations as costly and impractical, urging instead a focus on the potential harm of AI content. The draft rules, which require watermarking and tagging of algorithmically modified content, have been deemed too broad and challenging to implement by major platforms like Meta and Google. These companies argue that the regulations could increase operational costs significantly and make social media platforms in India difficult to operate. The feedback period for these proposed rules has been extended by the Ministry of Electronics and IT to allow more in-depth industry consultation.

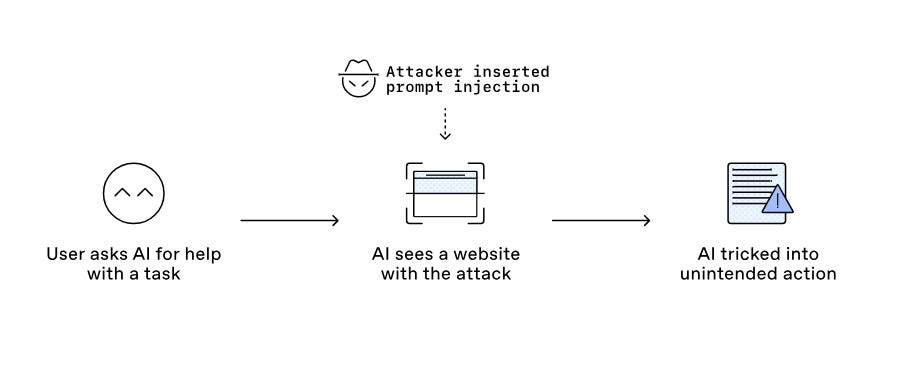

OpenAI Highlights Prompt Injection Threats, Advances Security Measures for AI Systems

OpenAI highlights the growing security challenges associated with prompt injections, a form of social engineering that manipulates AI systems into unintended actions by embedding malicious instructions within seemingly benign content. These attacks exploit the AI’s ability to access various online sources, posing risks such as fraud and data breaches. As AI tools become more integrated into tasks like browsing, research, and purchasing, OpenAI emphasizes its proactive measures, including safety training, monitoring, and security protections, to safeguard against these threats and enhance user control over AI interactions.

BSA Urges Indian Government to Implement AI-Friendly Text and Data Mining Policy

The Business Software Alliance (BSA), representing major tech firms like Microsoft and AWS, has called on the Indian government to introduce a text and data mining exception in its copyright laws to support AI development. In its report, BSA argues that such a legal update is essential for enabling innovation while maintaining respect for copyright, as current restrictions limit AI training with publicly available data. This recommendation comes amid India’s preparations for the Digital India Act and upcoming implementation of the Digital Personal Data Protection Act. BSA emphasizes the need for a unified national approach to AI regulation and suggests aligning with international standards while also advocating for expanded cloud infrastructure and access to non-sensitive government datasets.

⚖️ AI Breakthroughs

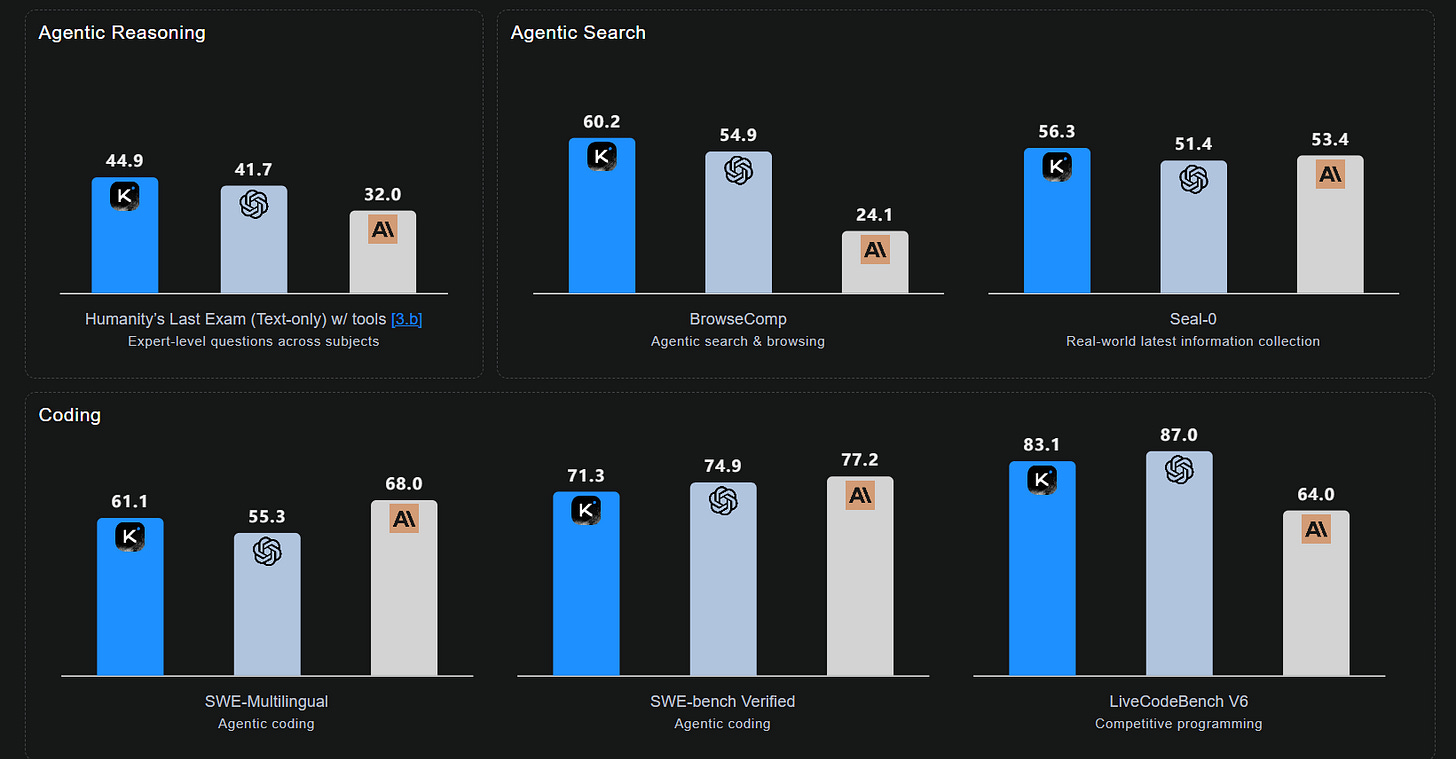

Moonshot AI’s Kimi K2 Thinking Outperforms GPT-5 and More in Key Benchmarks

Moonshot AI, a Chinese startup backed by Alibaba, unveiled its latest AI model, Kimi K2 Thinking, on November 6, claiming superiority over leading systems like OpenAI’s GPT-5 in key reasoning and coding benchmarks. The model activates 32 billion parameters per inference from a total of one trillion parameters and supports context windows of up to 256,000 tokens. Designed for explicit reasoning with a focus on transparency, Kimi K2 Thinking achieved notable scores on competitive benchmarks and can execute extensive tool calls autonomously. Despite its scale, the model maintains a competitive pricing structure, significantly undercutting GPT-5. Released under a Modified MIT License, it reflects a push by Chinese open-source AI firms to rival U.S. proprietary systems and aims to democratize access to advanced AI technology.

Reliance Jio Expands Free Google AI Offer to All 5G Users in India

Reliance Jio has expanded its partnership with Google by offering all Jio 5G users 18 months of free access to Google AI Pro, a service previously limited to users aged 18–25. This initiative coincides with Jio and Google’s recent collaboration to provide Gemini’s premium AI tools to Indian users at no extra cost, aiming to mainstream advanced AI utilities. The Google AI Pro subscription, normally priced at ₹1,950 per month, delivers benefits including the Gemini 2.5 Pro model and expanded access to various AI models and Google services, valued at approximately ₹35,100. Users can activate the offer through the MyJio app.

Microsoft Unveils New AI Team to Pursue Safe ‘Humanist’ Superintelligence Advances

Microsoft is forming a new research team, the MAI Superintelligence Team, to explore superintelligence and other advanced AI forms, as part of a broader push to develop practical, human-centered AI technology. The initiative, led by a former DeepMind co-founder, reflects the tech giant’s ambition to diversify its AI capabilities beyond OpenAI, its current partner in developing models for Bing and Copilot. The move follows similar efforts by competitors like Meta, who are also heavily investing in AI research. The team at Microsoft plans to focus on creating AI that serves specific human needs, particularly in education, healthcare, and renewable energy, steering away from the pursuit of generalist AI systems.

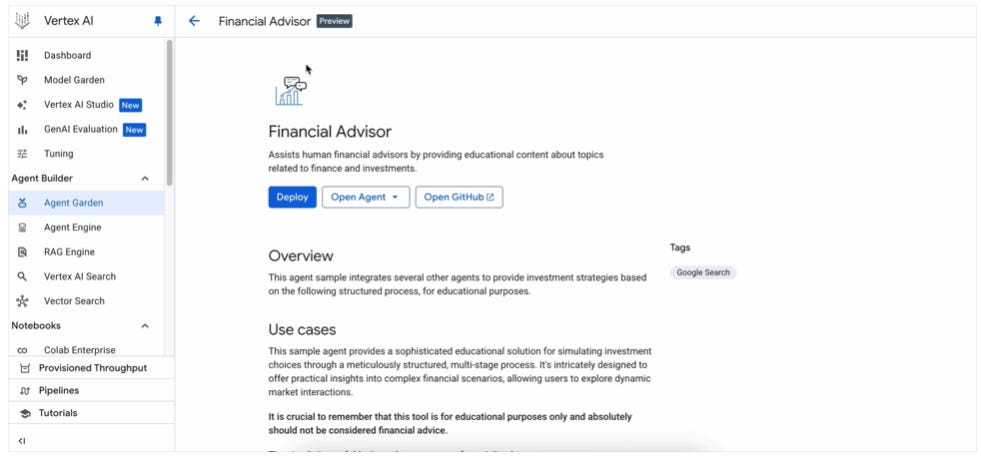

Agent Garden Expands Access, Simplifies AI Agent Development for All Users

Agent Garden has now been made accessible to all users, expanding beyond just Google Cloud users, to facilitate the creation of AI agents, particularly complex multi-agent systems. This tool aims to alleviate the common challenges developers face, such as integrating diverse tools and frameworks, by offering a comprehensive repository of agent samples and solutions through its Agent Development Kit (ADK). With capabilities like seamless deployment and integration with systems such as BigQuery and Vertex AI Search, Agent Garden provides developers with resources to efficiently design AI agents that tackle intricate business challenges and workflows.

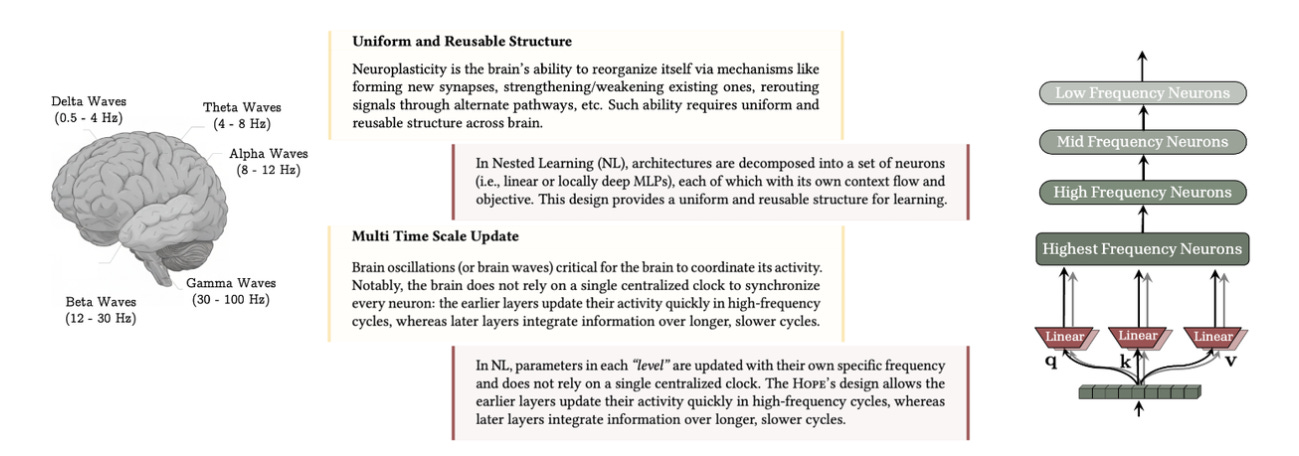

Nested Learning Bridges Gap Between Architecture and Optimization in AI Models

In a recent publication, Google Research has detailed the concept of Nested Learning, aimed at addressing fundamental challenges in machine learning, such as catastrophic forgetting. Traditional approaches have struggled to integrate a model’s architecture and its optimization methods, often treating them as separate entities. Nested Learning, however, unifies these elements, optimizing them as interconnected, multi-level systems. This innovative approach, tested with a self-modifying architecture called “Hope,” has shown superior performance in language modeling, offering improved long-term memory management compared to existing models.

Google Maps Integrates AI-Powered Tools to Simplify Interactive Project Development

Google Maps is enhancing its platform with new AI features, utilizing Gemini models to power developments like a builder agent and an MCP server to integrate AI assistants seamlessly with Google Maps’ technical documentation. Among the updates, the builder agent allows developers and users to create map-based prototypes by simply describing their projects in text, while a styling agent enables customized mapping to match specific themes. Additionally, the company introduces Grounding Lite, allowing developers to connect AI models to external data sources, facilitating questions related to location queries. These tools are set to improve interaction with Maps data and boost project development efficiency.

OpenAI Offers Free ChatGPT Plus to Support Transitioning U.S. Servicemembers and Veterans

OpenAI is offering U.S. servicemembers and veterans a year of free access to ChatGPT Plus to assist with their transition from military to civilian life. This initiative aims to help with various challenges such as job hunting, resume building, and navigating benefits by providing tools like interview practice, form explanations, and planning resources. The program, supported by verification from SheerID, allows eligible individuals to utilize the platform’s latest AI models and features, with the aim of easing the adjustment process for those who have served.

🎓AI Academia

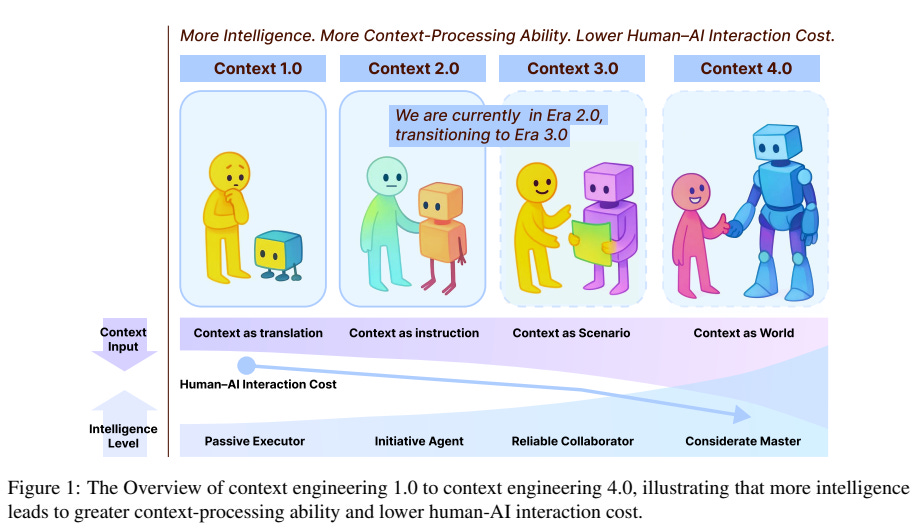

Researchers Trace Evolution and Future of Context Engineering in AI Systems

Researchers from SII-GAIR have delved into the concept of context engineering, a field exploring how machines can better interpret human interactions by developing a deeper understanding of contextual cues. This paper maps the evolution of context engineering from early human-computer interaction frameworks to current paradigms in human-agent interaction, emphasizing the historical trajectory and future possibilities of integrating more intelligent systems. As AI advances, there is a transition from Context Engineering 2.0 to 3.0, promising improved context-processing abilities and reduced human-AI interaction costs, thus setting the stage for a broader systematic approach in AI development.

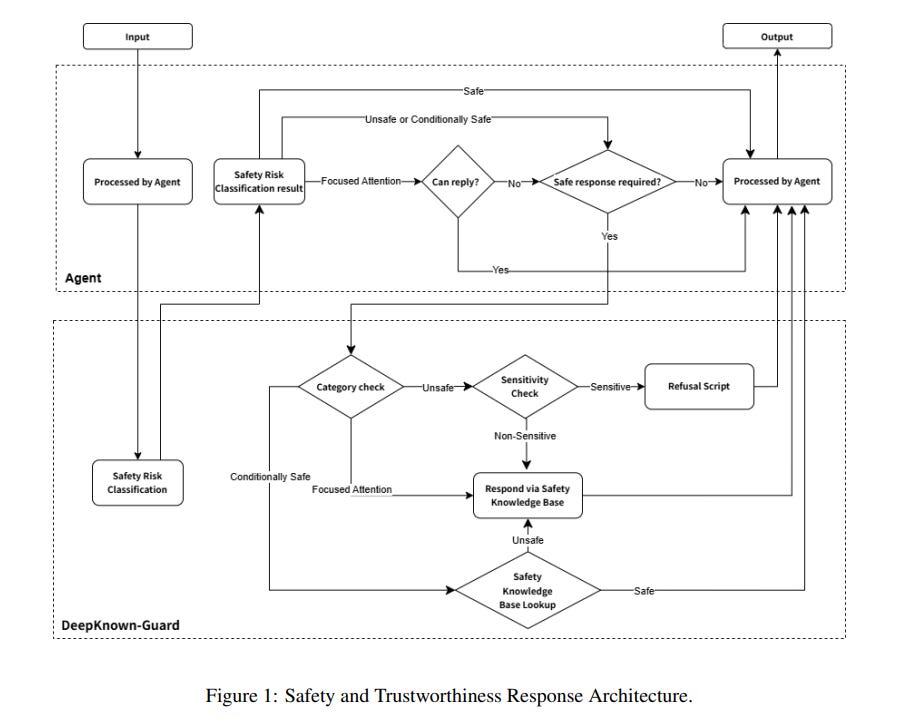

DeepKnown-Guard Framework Enhances Safety of Large Language Models in Critical Domains

Beijing Caizhi Tech has developed DeepKnown-Guard, a proprietary safety response framework aimed at addressing security challenges associated with Large Language Models (LLMs). The framework enhances input and output safety through a sophisticated classification model and Retrieval-Augmented Generation (RAG), achieving a 99.3% risk recall rate and ensuring that outputs are grounded in real-time, reliable data. Unlike other binary risk approaches, DeepKnown-Guard uses a four-tier taxonomy to identify nuanced risks, demonstrating superior performance on both public safety benchmarks and high-risk test scenarios, thus offering a robust solution for deploying high-trust LLM applications in sensitive domains.

Open Agent Specification Standardizes AI Agents for Compatibility Across Multiple Frameworks

Agent Spec, a new declarative language developed by researchers at Oracle Corporation, aims to unify the fragmented landscape of AI agent frameworks by providing a standardized way to define, execute, and evaluate AI agents and workflows across various platforms. This framework-agnostic specification ensures reusability, portability, and interoperability by introducing consistent control, data flow semantics, and evaluation protocols akin to those used in standardizing large language model assessments. With tools like a Python SDK and adapters for popular agent frameworks, Agent Spec bridges the gap between model-centric and agent-centric standards, enabling reliable and portable agentic systems.

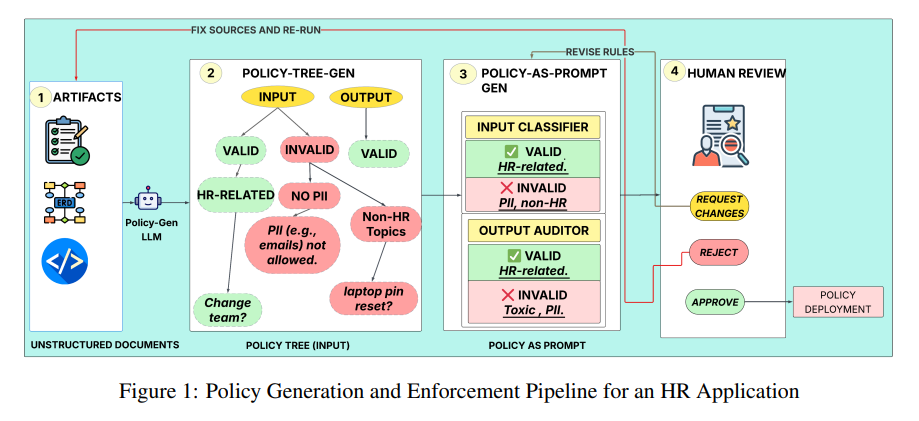

Policy-as-Prompt Framework Converts AI Governance into Enforceable Guardrails for Safe Deployment

A new framework, “Policy as Prompt,” has been developed to enhance AI governance in regulated and safety-critical environments by transforming policy documents into enforceable AI guardrails. This system converts unstructured design artifacts into a source-linked policy tree, which is compiled into prompt-based classifiers for real-time monitoring, ensuring AI agents operate within secure and compliant boundaries. The approach aims to close the “policy-to-practice” gap, providing dynamic, context-aware security and auditable rationales aligned with AI governance frameworks, such as the EU AI Act. It emphasizes least privilege principles and human-in-the-loop review processes to enhance AI safety and security assurance.

A Comprehensive Cost-Benefit Analysis: On-Premise Vs. Commercial LLM Deployments

A study from Carnegie Mellon University and independent researchers provides a comprehensive cost-benefit analysis of deploying large language models (LLMs) on-premise versus using commercial cloud services like OpenAI and Google. The analysis highlights that while cloud services offer easy scalability and accessibility, they come with concerns related to data privacy and long-term costs. On-premise deployment of open-source models, although requiring significant initial investment, can become economically viable for organizations with high-volume processing needs or strict data compliance demands, achieving break-even at various timeframes based on model size and usage. The study assists organizations in evaluating the total cost of ownership to determine the feasibility of local LLM deployment.

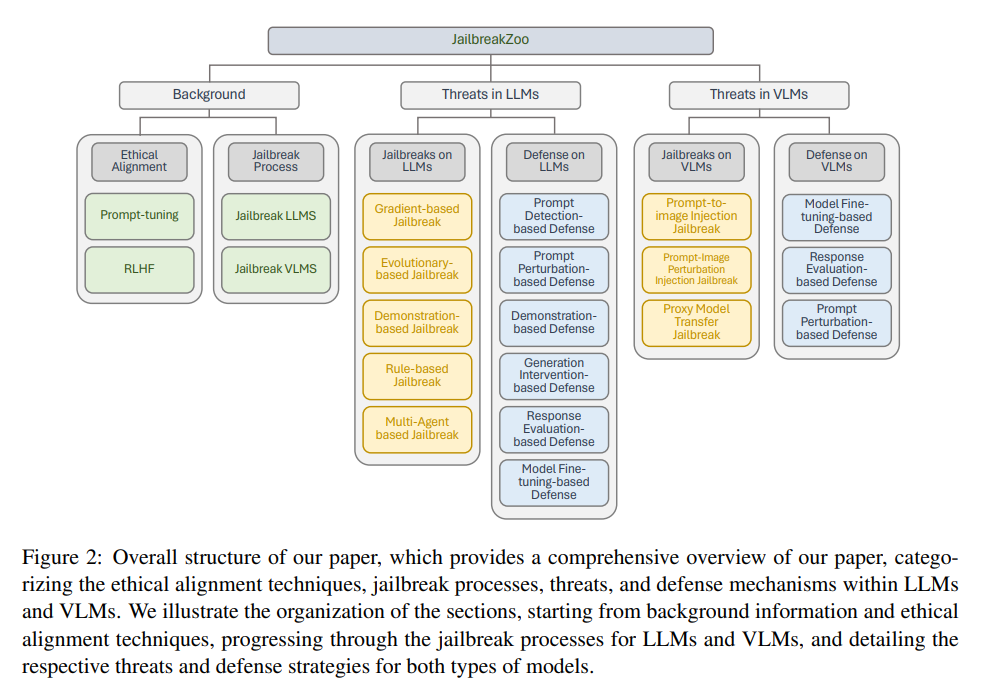

JailbreakZoo Examines Security Threats in AI Language and Vision-Language Models

A recent survey titled “JailbreakZoo: Survey, Landscapes, and Horizons in Jailbreaking Large Language and Vision-Language Models” examines the rising concerns around the security and ethical use of Large Language Models (LLMs) and Vision-Language Models (VLMs). The study provides an extensive overview of the practice of jailbreaking, which involves bypassing the ethical and operational constraints of these models, and categorizes such jailbreaks into seven distinct types. It highlights the development of defense mechanisms to address these vulnerabilities and identifies research gaps, suggesting future directions for enhancing security frameworks. This survey underscores the need for integrated perspectives combining jailbreak strategies and defenses to ensure a secure AI environment. More detailed findings are available on the authors’ online platform.

AI Incident Reporting Systems: Critical for Managing Safety Risks and Legal Incidents

Researchers from RAND and the Centre for the Governance of AI have proposed a framework for designing incident reporting systems to address harms caused by general-purpose AI systems. As AI systems increasingly become a critical part of various sectors, they have been linked to various harmful incidents, underscoring the need for systematic reporting mechanisms. By examining nine safety-critical industries like nuclear power and healthcare, the study highlights essential considerations for AI incident reporting systems, including policy goals, enforcement, and the role of both mandatory and voluntary reporting. These insights aim to inform policymakers and researchers in creating effective AI governance frameworks that enhance safety and accountability.

About SoRAI: SoRAI is committed to advancing AI literacy through practical, accessible, and high-quality education. Our programs emphasize responsible AI use, equipping learners with the skills to anticipate and mitigate risks effectively. Our flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.